Virtualization and containers are now standard, and most of the software infrastructure behind them runs on Linux. Allocating CPU time efficiently, and knowing where the available controls fall short, is therefore a practical concern for administrators.

Consider several applications running at once on a single Linux machine, each competing for processing power. Without control mechanisms in place, nothing guarantees that CPU time is divided the way the administrator intends.

These difficulties stem from how the Linux kernel schedules work onto processors. This article examines the limitations of controlling CPU allocation on Linux and looks at solutions and alternative strategies that reduce performance bottlenecks.

The Significance of Managing CPU Distribution in Docker on Linux

Efficiently controlling the allocation of processor resources within the Linux environment is of utmost importance when utilizing Docker containerization technology. The proper management of CPU distribution ensures optimal performance and resource utilization, enabling seamless execution of applications without any compromise in productivity or efficiency.

Understanding the Basics of Docker and Processor Allocation

In this section, we will explore the fundamental concepts of Docker and its interaction with processor allocation. This understanding is crucial for optimizing resource management in Docker containers, enhancing application performance, and ensuring efficient utilization of hardware resources.

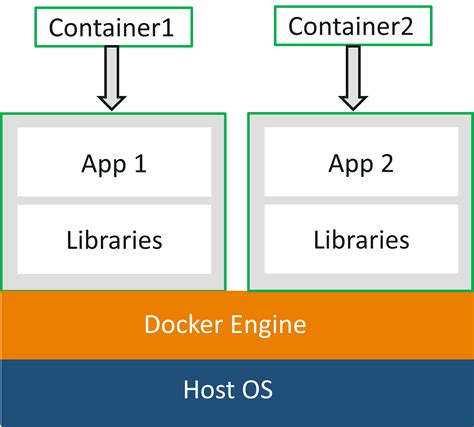

The Docker technology revolutionized the world of containerization, enabling applications to be packaged along with their dependencies and run consistently across different computing environments. By encapsulating applications and their dependencies within lightweight, isolated containers, Docker provides a standardized and portable approach to software deployment.

Processor allocation is the division of computing resources, particularly CPU time, among the tasks or processes running on a system. It ensures that each task receives a fair share of computational power and prevents any single task from monopolizing the CPU and degrading the performance of the others.

Within the context of Docker, processor allocation involves managing the division of CPU resources among containerized applications. Docker provides various mechanisms to control processor allocation, including CPU quotas, CPU shares, and CPU sets. These mechanisms allow administrators to set limits and priorities for CPU usage, ensuring that critical applications receive the necessary computational power.

Understanding the basics of CPU allocation in Docker involves grasping the concepts of CPU quotas, CPU shares, and CPU sets. CPU quotas restrict the maximum amount of CPU time that a container can consume, while CPU shares define the relative importance or priority of a container in CPU resource allocation. CPU sets, on the other hand, enable the assignment of specific CPUs or CPU cores to containers, offering fine-grained control over processor allocation.
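As a rough illustration, the three mechanisms correspond to the `docker run` flags `--cpus` (quota), `--cpu-shares` (relative weight), and `--cpuset-cpus` (CPU set). The Python sketch below only assembles an argument list and does not start a container. The helper name `cpu_flags`, the chosen values, and the image name `nginx` are placeholders for this example.

```python
from typing import List, Optional


def cpu_flags(cpus: Optional[float] = None,
              shares: Optional[int] = None,
              cpuset: Optional[str] = None) -> List[str]:
    """Translate the three CPU controls into `docker run` flags.

    cpus   -> --cpus         (quota: upper bound on CPU time)
    shares -> --cpu-shares   (relative weight under contention)
    cpuset -> --cpuset-cpus  (which CPUs the container may run on)
    """
    flags: List[str] = []
    if cpus is not None:
        if cpus <= 0:
            raise ValueError("cpus must be positive")
        flags.append(f"--cpus={cpus}")
    if shares is not None:
        if shares <= 0:
            raise ValueError("shares must be positive")
        flags.append(f"--cpu-shares={shares}")
    if cpuset is not None:
        flags.append(f"--cpuset-cpus={cpuset}")
    return flags


# Hypothetical container: at most 1.5 CPUs, half the default weight, CPUs 0 and 1.
command = ["docker", "run", "--rm", *cpu_flags(1.5, 512, "0-1"), "nginx"]
print(" ".join(command))
```

The three controls are independent, so a container can carry any combination of them. Omitting all three leaves the container with the default weight and no cap.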

By thoroughly understanding the basics of Docker and processor allocation, administrators can effectively manage the performance and resource utilization of containerized applications. This knowledge empowers them to optimize CPU allocation, minimize resource contention, and ensure the smooth and efficient operation of Docker containers in a Linux environment.

Challenges in Utilizing System Resources Efficiently in Linux-based Docker Environments

In Linux-based Docker environments, several factors hinder the effective use of system resources, which in turn affects processor allocation. These limitations stem from how Docker is built on Linux and from the underlying operating system itself. This section outlines the challenges in optimizing system resource usage to achieve efficient processor allocation.

Allocation Constraints

One of the primary hurdles encountered in managing system resources pertains to the restrictions imposed by the Docker containerization technology in conjunction with the Linux kernel. These constraints arise due to the nature of the resource isolation mechanisms employed, which can limit the ability to efficiently allocate processors based on the specific needs of containers. Overcoming these allocation constraints requires a comprehensive understanding of the underlying architecture and thorough optimization techniques.

Resource Contentions

In a Linux environment where multiple Docker containers coexist, contention for system resources becomes another notable challenge. This contention arises when containers compete for CPU time and other critical resources, leading to inefficiencies in processor allocation. Identifying and resolving these contentions requires effective resource management strategies, such as prioritizing and scheduling tasks, so that each container receives an appropriate share of processing power.
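To make "an appropriate share of processing power" concrete, the sketch below models how relative CPU weights (Docker's `--cpu-shares`) divide CPU time under contention. It is a simplified model: it assumes every container is CPU-bound and competes for the same CPUs. The container names and values are invented for the example.

```python
from typing import Dict


def contended_cpu_fractions(shares: Dict[str, int]) -> Dict[str, float]:
    """Fraction of CPU time each container would get if ALL are CPU-bound.

    Simplified model: every container competes on the same CPUs and none
    of them ever goes idle. Idle containers give their time to the others.
    """
    total = sum(shares.values())
    if total <= 0:
        raise ValueError("at least one container needs a positive share value")
    return {name: value / total for name, value in shares.items()}


# Hypothetical containers; 1024 is Docker's default --cpu-shares value.
fractions = contended_cpu_fractions({"api": 2048, "worker": 1024, "batch": 1024})
for name, fraction in fractions.items():
    print(f"{name}: {fraction:.0%}")
```

Shares only matter while containers actually compete. On an idle host, a container with a low weight can still use all available CPU time unless a quota also applies.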

Dynamic Workloads

Another factor that impacts processor allocation is the dynamic nature of workloads in Linux-based Docker environments. As the workload varies over time, either due to changes in user demand or the execution of different tasks within containers, the allocation of processors needs to be adaptable and responsive. Failing to dynamically allocate processors based on the changing workload can result in underutilization or overutilization of system resources.
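One way to picture adaptive allocation is a small control loop that raises or lowers a container's CPU limit based on recent utilization and applies it with `docker update --cpus`. The sketch below is illustrative only: the thresholds, step size, bounds, and the container name `web` are assumptions, and the code builds the command without running it.

```python
from typing import List


def next_cpu_limit(current: float, utilization: float,
                   low: float = 0.3, high: float = 0.8,
                   step: float = 0.5,
                   floor: float = 0.5, ceiling: float = 4.0) -> float:
    """Pick a new CPU limit from recent utilization.

    `utilization` is the fraction (0.0 to 1.0) of the current limit that
    the container actually used. All thresholds are hypothetical defaults.
    """
    if utilization > high:
        return min(current + step, ceiling)   # busy: grant more CPU
    if utilization < low:
        return max(current - step, floor)     # mostly idle: reclaim CPU
    return current                            # within band: leave unchanged


def docker_update_args(container: str, cpus: float) -> List[str]:
    """Build the command that would apply the new limit to a running container."""
    return ["docker", "update", f"--cpus={cpus}", container]


# Hypothetical container "web" currently limited to 1.0 CPU and 90% busy.
new_limit = next_cpu_limit(current=1.0, utilization=0.9)
print(" ".join(docker_update_args("web", new_limit)))
```

A real controller would also need smoothing to avoid oscillating between limits. This sketch reacts to a single sample.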

Optimizing Processor Allocation

To address the challenges mentioned above and achieve effective processor allocation, it is imperative to adopt efficient resource management techniques. These may include implementing dynamic scheduling algorithms, optimizing container configurations, and utilizing performance monitoring and analysis tools. By employing a comprehensive approach, it becomes possible to overcome the limitations associated with Docker in Linux environments and maximize processor allocation efficiency.

Impact of Inadequate CPU Distribution on Docker Performance

When it comes to optimizing the performance of Docker containers in a Linux environment, one crucial aspect to consider is the allocation of processor resources. Insufficient distribution and allocation of CPU resources can have a significant impact on the overall performance and stability of Docker applications.

Diminished Execution Speed: Insufficient processor allocation can result in reduced execution speed for Docker containers. When CPU resources are scarce, containers may experience delays in processing tasks, leading to slower overall performance and longer response times. This can be particularly problematic for time-sensitive applications or those that require high computing power.

Increased Latency: Inadequate CPU distribution can also lead to increased latency in Docker container operations. When multiple containers compete for limited CPU resources, waiting times for computing tasks can increase, causing delays in completing critical operations. This can result in a suboptimal user experience and negatively impact applications that rely on real-time responsiveness.

Potential Resource Starvation: Insufficient processor allocation may also starve containers of CPU time. A container that cannot get the CPU time it needs may miss timeouts or fail health checks, which can in turn lead to restarts or service disruptions. This can severely affect the stability and reliability of Docker applications.

Unbalanced Workload: Inadequate CPU distribution can lead to an unbalanced workload across Docker containers. This means that some containers may receive an unjustifiably high share of CPU resources, while others are left with limited processing power. This imbalance can lead to bottlenecks, reduced overall performance, and can prevent containers from scaling effectively, ultimately hindering the ability to meet resource demands.

Therefore, acknowledging the impact of insufficient processor allocation on Docker performance is essential for optimizing the utilization of CPU resources. By carefully managing CPU distribution and ensuring adequate allocation, Docker users can enhance the performance, stability, and responsiveness of their applications within a Linux environment.

Challenges in Achieving Optimal Processor Distribution in Docker on Linux

Efficiently allocating and utilizing processing power within a Docker environment running on Linux poses several challenges. Successfully achieving optimal processor distribution is crucial for maximizing the performance and scalability of applications within containers.

Inadequate Resource Management: One of the key challenges in achieving optimal processor allocation is configuring resource management correctly. The Linux kernel exposes CPU controls through control groups (quotas, relative weights, and CPU sets), but these are static settings that must be chosen per container. Poorly chosen or missing values can result in inefficiencies and imbalances in resource utilization within Docker containers.

Complexity in Resource Isolation: Another challenge lies in the complexity of isolating processor resources for individual containers. Docker relies on various kernel namespaces and control groups to provide containerization, but these mechanisms may not adequately isolate processor allocation. As a result, resource contention and interference between containers can occur, impacting performance and stability.

Limitations of Container Scheduling: Container scheduling algorithms play a critical role in achieving optimal processor allocation. However, the default scheduling mechanisms offered by Docker and the Linux kernel may not take into account the specific resource requirements and load patterns of containerized applications. This can lead to suboptimal utilization of processing power and potentially hinder the overall performance of the system.

Dynamic Workload Adaptation: Adapting processor allocation to dynamic workload changes can be challenging in Docker on Linux. Containerized applications with varying resource demands may require proactive resource allocation or reallocation strategies to ensure optimal performance. However, the lack of efficient dynamic workload detection and adaptation mechanisms within Docker and the Linux kernel can hinder the ability to adapt processor allocation in response to changing workloads.

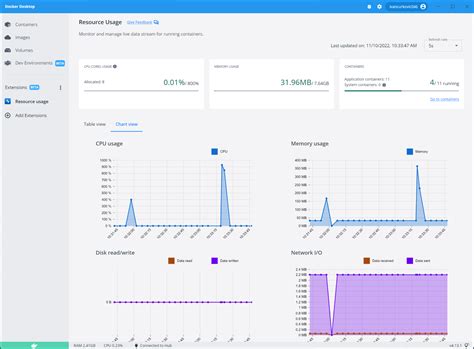

Monitoring and Analysis: Lastly, effectively monitoring and analyzing processor allocation and utilization within Docker on Linux can be a challenge. Tools such as docker stats report per-container CPU usage, but without visibility into metrics such as throttling it remains difficult to identify and address performance bottlenecks and inefficiencies related to processor allocation.

In summary, achieving optimal processor allocation in Docker on Linux involves overcoming challenges related to resource management, resource isolation, container scheduling, dynamic workload adaptation, and effective monitoring and analysis. Addressing these challenges will be crucial for maximizing the performance and scalability of containerized applications.

Potential Solutions to Overcome Processor Allocation Challenges in Docker on Linux

When faced with limitations in distributing processor resources effectively within Docker on Linux, it becomes crucial to explore potential solutions to address these challenges. By identifying alternative approaches and implementing strategies that optimize processor allocation, users can enhance performance and streamline resource utilization.

1. Enhanced Process Management: Implementing advanced process management techniques can help overcome processor allocation limitations. By utilizing resource control frameworks, such as cgroups, users can define and enforce resource limits and priorities for individual containers or groups of containers. This allows for more fine-grained control over processor allocation and ensures fair distribution of resources.

2. Affinity and Anti-Affinity Settings: Configuring affinity and anti-affinity settings enables users to specify the preferred or avoided processor cores for containers. By leveraging these settings, users can ensure that specific containers are assigned to dedicated cores or avoid certain cores to optimize resource allocation. This facilitates better control over processor utilization and can improve performance for critical workloads.

3. Intelligent Scheduling Algorithms: Integrating intelligent scheduling algorithms into Docker on Linux can help overcome processor allocation limitations. These algorithms can dynamically analyze and adapt to workload demands, ensuring efficient utilization of available processor resources. By continuously optimizing the allocation of containers based on their resource requirements and priorities, these algorithms can enhance overall system performance.

4. Resource Reservation and Quotas: Implementing resource reservation and quotas allows users to allocate dedicated processor resources to specific containers or groups of containers. By reserving a portion of the processing power exclusively for high-priority workloads, users can mitigate the impact of limitations in processor allocation. This ensures consistent performance for critical applications and prevents resource contention.

5. Hardware Upgrades and Optimization: Incorporating hardware upgrades or optimization techniques can provide a solution to processor allocation limitations. By increasing the number of cores or upgrading the processor architecture, users can expand the available processing power and alleviate limitations. Additionally, optimizing the hardware configuration and tuning system parameters can further enhance overall performance and resource allocation.
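The quota approach in item 4 comes down to simple arithmetic: a container may run for at most `quota` microseconds in every `period` microseconds. The sketch below converts a fractional CPU count, as passed to Docker's `--cpus`, into the matching `--cpu-quota` value. It assumes the commonly used default period of 100000 microseconds, and the function name is made up for this example.

```python
def cpus_to_quota(cpus: float, period_us: int = 100_000) -> int:
    """Convert a CPU count into a quota in microseconds per period.

    Example: 1.5 CPUs with a 100 ms period means the container may use
    up to 150 ms of CPU time (summed over all cores) every 100 ms.
    """
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    if period_us <= 0:
        raise ValueError("period_us must be positive")
    return round(cpus * period_us)


quota = cpus_to_quota(1.5)
# Under the stated assumption, these two forms describe the same limit:
print("--cpus=1.5")
print(f"--cpu-period=100000 --cpu-quota={quota}")
```

Because the quota is enforced per period, a bursty container can be throttled within a period even when its average usage stays below the limit.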

Efficient Techniques for Maximizing Processor Utilization in Docker on Linux

In the realm of running containerized applications on Linux, it is crucial to adopt best practices that allow for efficient utilization of processors. This article explores various techniques and strategies that can be employed to ensure maximum allocation of processing power to containers. By implementing these methods, users can greatly improve the overall performance and efficiency of their Docker-based applications.

- Optimizing Container Placement: Matching the processing requirements of containers with the capabilities of the underlying host hardware is essential for maximizing processor allocation efficiency. By strategically placing containers on hosts with compatible resources, users can avoid resource conflicts and ensure optimal utilization of available processing power.

- Implementing Resource Limitations: Setting appropriate resource limitations for containers can help prevent overutilization of processors and ensure fair allocation of resources across multiple containers. By defining specific limits on CPU resources, users can effectively manage and distribute processing power in a controlled manner.

- Utilizing CPU Shares and Cgroups: Leveraging CPU shares and control groups (cgroups) in Docker provides a means to prioritize processor allocation for critical containers. By assigning higher CPU shares to containers that require more processing power, users can ensure that these containers receive the necessary resources while still allowing for fair distribution among other containers.

- Exploring CPU Pinning: CPU pinning is a technique that enables the binding of specific containers to designated CPU cores. This can be particularly useful for applications that require consistent and predictable processing performance. By pinning containers to specific cores, users can eliminate interference from other containers and achieve more precise processor allocation.

- Monitoring and Performance Tuning: Regularly monitoring the performance of containerized applications is crucial for identifying and addressing any inefficiencies in processor allocation. By utilizing appropriate monitoring tools and analyzing performance metrics, users can fine-tune their allocation strategies to achieve optimal utilization of processing power.
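For the monitoring step, one useful signal is how often a container hits its CPU quota. On hosts using cgroup v2, the kernel reports this in a per-cgroup `cpu.stat` file. The sketch below parses text in that format and computes the share of throttled periods. The sample values are invented, and locating the file for a given container is left out because the path depends on the host's cgroup setup.

```python
from typing import Dict

# Invented sample in the cgroup v2 cpu.stat format (one "key value" per line).
SAMPLE_CPU_STAT = """\
usage_usec 8250000
user_usec 6100000
system_usec 2150000
nr_periods 1200
nr_throttled 300
throttled_usec 4500000
"""


def parse_cpu_stat(text: str) -> Dict[str, int]:
    """Parse 'key value' lines into a dict, skipping malformed lines."""
    stats: Dict[str, int] = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            stats[parts[0]] = int(parts[1])
    return stats


def throttle_ratio(stats: Dict[str, int]) -> float:
    """Fraction of enforcement periods in which the cgroup was throttled."""
    periods = stats.get("nr_periods", 0)
    if periods == 0:
        return 0.0  # no quota configured, or no periods elapsed yet
    return stats.get("nr_throttled", 0) / periods


stats = parse_cpu_stat(SAMPLE_CPU_STAT)
print(f"throttled in {throttle_ratio(stats):.0%} of periods")
```

A persistently high ratio suggests the quota is too tight for the workload. A ratio near zero alongside low usage suggests the limit could be lowered.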

Implementing these best practices in Docker on Linux can significantly enhance the efficiency and effectiveness of processor allocation, leading to improved overall performance and better resource management within containerized environments.

The Future of Docker and Processor Management in the Linux Environment

As the world of technology relentlessly evolves, so does the landscape of containerization and resource allocation. With an increasing focus on optimizing system performance and efficiency, the future of Docker and processor management in the Linux environment holds promising possibilities. This section explores the potential advancements and developments that are expected to shape the way containers utilize and control processor resources.

| Advancement | Description |
|---|---|
| Dynamic Resource Management | One of the key areas of future growth for Docker is the implementation of dynamic resource management. This entails the ability to automatically allocate and prioritize processor resources based on real-time demands and workload requirements. By leveraging advanced algorithms and machine learning techniques, Docker containers can intelligently adapt to changing resource needs, ensuring optimized performance and scalability. |
| Fine-grained Control | Enhancing the granularity of control over processor allocation is another aspect that holds promise for Docker in the Linux environment. By allowing users to precisely specify resource allocation down to the core level, containers can have finer control over processor utilization, leading to increased efficiency and better utilization of system resources. |
| Improved Isolation | Ensuring strong isolation between containers is essential for secure and reliable operation. The future of Docker lies in developing and implementing advanced isolation techniques that minimize interference and maximize resource utilization. Through innovations such as hardware-assisted virtualization, enhanced container sandboxing, and improved container orchestration, Docker will be able to provide even stronger isolation without compromising performance. |
| Hybrid Cloud Integration | With the exponential growth of cloud computing and the increasing adoption of hybrid cloud environments, Docker is expected to play a vital role in facilitating seamless resource allocation across diverse infrastructure landscapes. The ability to efficiently allocate and manage processor resources in hybrid cloud deployments will require close integration with existing cloud management platforms, enabling seamless scaling, migration, and resource optimization. |

Overall, the future of Docker and processor allocation in the Linux environment is set to witness significant advancements in dynamic resource management, fine-grained control, improved isolation, and hybrid cloud integration. These developments will not only enhance system performance and efficiency but also pave the way for more seamless and flexible containerization in the ever-evolving technological landscape.

FAQ

What are some limitations of Docker in Linux for controlling processor allocation?

Docker on Linux can cap CPU usage, weight containers against each other, and restrict them to specific CPU cores. The main limitations are that these settings are static rather than adaptive, that real-time scheduling needs additional kernel and daemon configuration, and that restricting a container to specific cores does not give it exclusive use of them.

Is it possible to set hard limits on CPU usage with Docker in Linux?

Yes. The --cpus option (or the equivalent --cpu-period and --cpu-quota pair) sets a hard ceiling on CPU time that the kernel enforces by throttling the container. The --cpu-shares option, by contrast, is only a relative weight: it matters when containers compete for CPU and does not cap usage on an otherwise idle host.

Does Docker in Linux support real-time scheduling for processor allocation?

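Docker can support real-time scheduling, but not out of the box. According to Docker's documentation, it requires a kernel built with CONFIG_RT_GROUP_SCHED, a daemon started with the --cpu-rt-runtime flag, and containers run with options such as --cpu-rt-runtime, --ulimit rtprio, and --cap-add=sys_nice. Even then, real-time tasks require precise timing guarantees, and Docker itself adds none beyond what the kernel scheduler provides.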

Can specific CPU cores be allocated to containers in Docker in Linux?

Allocating specific CPU cores to containers in Docker in Linux can be challenging. While Docker provides options like --cpuset-cpus to restrict containers to specific cores, it does not guarantee exclusive access to those cores and there may still be interference from other processes running on the system.
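The value passed to `--cpuset-cpus` is a comma-separated list of CPU indices and ranges, such as `0-2,5`. The helper below is a minimal sketch with a made-up name that expands such a string into explicit CPU numbers. It can be handy for checking whether two containers' sets overlap before assuming they will not interfere.

```python
from typing import List


def parse_cpuset(spec: str) -> List[int]:
    """Expand a cpuset string like '0-2,5' into a sorted list of CPU indices."""
    cpus = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue  # tolerate stray commas
        if "-" in part:
            start_text, end_text = part.split("-", 1)
            start, end = int(start_text), int(end_text)
            if start > end:
                raise ValueError(f"invalid range: {part!r}")
            cpus.update(range(start, end + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


# Two hypothetical containers: do their CPU sets overlap?
database_cpus = parse_cpuset("0-2,5")
cache_cpus = parse_cpuset("2-3")
shared = sorted(set(database_cpus) & set(cache_cpus))
print(database_cpus, cache_cpus, "shared:", shared)
```

Non-overlapping sets keep containers off each other's cores, but host processes and other unpinned containers may still be scheduled there.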

What are some alternatives to Docker in Linux for better processor allocation control?

Alternatives for finer control over processor allocation include orchestrators such as Kubernetes or Mesos, which typically run on top of a container runtime rather than replacing it. They offer more advanced features for managing CPU resources across multiple containers and nodes, such as per-container CPU requests and limits in Kubernetes.

What are the limitations of Docker in Linux for controlling processor allocation?

One limitation of Docker on Linux for controlling processor allocation is that its CPU controls are static. Docker uses the Linux kernel's cgroups feature to manage resource allocation, and cgroups enforce whatever quota, weight, or CPU set was configured without regard to what the workload currently needs. Additionally, Docker relies on the kernel's scheduling mechanisms, which know nothing about application-level priorities, so CPU allocation can be suboptimal unless the settings are tuned per container.

How does Docker in Linux handle processor allocation?

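Docker on Linux uses the kernel's control groups (cgroups) feature to handle processor allocation. Cgroups let Docker set resource limits and account for the processes in each container, including their CPU time. Containers are scheduled by the kernel's default scheduler, historically the Completely Fair Scheduler (CFS). By default every container has the same CPU weight (--cpu-shares=1024) and no quota, so CPU-bound containers split CPU time roughly equally under contention, and a single container can use all otherwise idle CPU. This default can result in suboptimal allocation when containers differ in importance. Docker also supports static allocation, where containers are restricted to specific CPU cores with --cpuset-cpus, but this requires manual configuration and does not adapt to resource usage.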