Mobile photography has become part of daily life, and as the smartphone market evolves, Android and iPhone devices continue to compete for the top spot. The camera is now one of the main factors that influence which phone people buy. Yet within the Android ecosystem a concern persists: a gap in camera capability compared with the iPhone.

To understand this gap, it helps to examine the specific factors that give the iPhone camera its edge over many of its Android counterparts.

Both Android phones and iPhones offer capable cameras, but a closer look reveals notable differences. The Android ecosystem spans a vast range of devices from many manufacturers, each using different hardware components and software tuning. This diversity reflects Android's reach, but it makes consistent camera performance across the platform hard to achieve. Apple, by contrast, controls both the hardware and the software of the iPhone, which lets it optimize the two together for a consistently strong camera.

Design philosophy also contributes to the difference. Turning technological advances into polished devices demands close attention to detail, and Apple's focus on design shows in its carefully calibrated camera modules. The Android market, meanwhile, thrives on diversity and caters to a wide range of consumer preferences, a commendable approach that may come at the cost of uniform camera quality.

Differences in Image Processing Algorithms

The quality of a smartphone's image processing algorithms plays a pivotal role in the final photo. On both Android phones and iPhones, these algorithms shape color reproduction, noise reduction, HDR performance, and overall sharpness.

| Aspect | Android | iPhone |
|---|---|---|
| Color Reproduction | How Android devices capture and process color | The color reproduction approach used by iPhones |
| Noise Reduction | Android's techniques for minimizing image noise | iPhone's methods for reducing noise while preserving detail |
| HDR Capabilities | How Android devices handle high dynamic range scenes | iPhone HDR capabilities and their impact on image quality |
| Image Sharpness | Android's approach to enhancing sharpness and detail | How iPhones tune sharpness for visually appealing results |

Comparing how Android and iPhone cameras handle each of these aspects highlights the strengths and weaknesses of each platform. It also helps explain why the two produce distinctive photographic results, which in turn shape user preferences and experiences.

Hardware Limitations in Android Devices

When it comes to the capabilities of smartphone cameras, it is essential to consider the hardware limitations that Android devices face. These limitations pose challenges and contribute to the differences in camera performance compared to their iPhone counterparts.

One of the main areas where Android devices face hardware limitations is the image sensor. The image sensor captures light and converts it into digital signals, so it sets the foundation for image quality. Android sensors have advanced significantly, but many Android devices, particularly outside the flagship tier, still struggle to match the sensor quality found in iPhones.

Additionally, another hardware limitation in Android devices is the lens quality. The lens plays a crucial role in focusing light onto the image sensor, and a high-quality lens can significantly enhance image sharpness and clarity. However, due to varying factors, such as manufacturing limitations and cost considerations, Android devices may not always incorporate top-of-the-line lenses, resulting in differences in image quality.

Furthermore, the processing power and algorithms used in Android devices can also impact camera performance. The processing power affects the speed at which images are captured and processed, while the algorithms determine how the device interprets and enhances the captured images. While Android devices have made advancements in this area, there may still be differences in processing capabilities compared to iPhones.

It is important to note that these hardware limitations do not imply that Android cameras are inherently inferior to iPhones. Android devices offer a wide range of camera options and functionalities, catering to diverse user preferences. However, understanding these limitations can help explain some of the disparities in camera performance when compared to iPhones.

In conclusion, the hardware limitations in Android devices, particularly in image sensors, lenses, and processing power, contribute to the differences in camera performance compared to iPhones. Recognizing these limitations provides insights into the varying capabilities and functionalities offered by different smartphone platforms.

The Impact of Software Fragmentation on Camera Performance

A smartphone camera must operate smoothly and produce high-quality output. On Android, however, software fragmentation raises real concerns about camera performance. Fragmentation refers to the many Android versions and manufacturer-customized builds running across different devices, which leads to inconsistencies in camera capabilities and overall performance.

This fragmentation can result in varying levels of camera functionality and quality among different Android smartphones, hindering the user experience and making it difficult to achieve consistent and exceptional photography. While Android offers a wide selection of devices with varying specifications, the lack of standardization in software can lead to a disparity in camera features and performance across different manufacturers and models.

One of the key consequences of software fragmentation is the disparity in camera features and capabilities. As each Android manufacturer often customizes the operating system and camera software for their devices, it creates a fragmented ecosystem where certain camera features may be exclusive to specific smartphone models. This means that users may miss out on advanced camera capabilities, such as manual controls, RAW capture, or advanced image processing, depending on the device they own.


Moreover, software fragmentation also plays a role in the timely delivery of software updates and bug fixes. With numerous Android devices operating on different software versions, it becomes a challenge for manufacturers to provide consistent updates and fixes specifically tailored to each device. This can result in slower updates or even the complete lack of updates for certain devices, leaving users without access to crucial camera performance enhancements or critical bug fixes.

Furthermore, the lack of standardization in software and camera processing algorithms makes it difficult for users to achieve optimal camera settings. The absence of a unified user interface and camera app experience across Android devices can lead to confusion and frustration when trying to navigate and utilize the camera's settings and features effectively. This hinders users from fully harnessing the capabilities of their smartphone cameras.

Lastly, software fragmentation poses challenges for third-party app developers who strive to create innovative camera applications for Android. The fragmented market necessitates additional efforts in adapting their apps to various software versions and device configurations, limiting their ability to fully optimize the camera experience and take advantage of the device's capabilities consistently.

In conclusion, software fragmentation in the Android ecosystem significantly impacts camera performance. The lack of standardization, varying camera features, limited updates, and difficulties in achieving optimal settings all contribute to a fragmented and inconsistent camera experience. While Android offers a wide range of device options, addressing software fragmentation is crucial to ensure a more cohesive and enhanced photography experience for Android users.

Apple's Image Sensors and In-House Image Processing

Apple has made significant strides in smartphone camera technology through the image sensors in its camera systems. Apple sources these sensors from Sony, but it pairs them with the image signal processor (ISP) it designs in-house as part of its A-series chips. This combination has contributed to the image quality that iPhones are known for.

- Enhanced pixel technology: The sensors are designed for high light sensitivity. By capturing more light, they help iPhones produce vivid, detailed images, especially in challenging lighting.

- Advanced image processing: Apple pairs its sensors with image processing algorithms that reduce noise, improve color accuracy, and extend dynamic range.

- Smart HDR: This processing feature captures and combines multiple exposures to produce photos with more detail, better-preserved highlights, and shadows with greater depth and texture.

- Depth sensing: Depth information from the camera system enables convincing bokeh effects, blurring the background in portrait-style photos to emphasize the subject.

- Low-light performance: Efficient light gathering and improved noise reduction let iPhones produce strong low-light photos that, in some conditions, can rival dedicated cameras.

- Video recording quality: The same sensors support iPhone video. With features like optical image stabilization and advanced noise reduction, iPhone videos are smooth, detailed, and true to life.

Through continuous research and innovation, Apple has established itself as a smartphone camera leader by tightly pairing its image sensors with in-house image processing. This combination of carefully chosen hardware and software optimization contributes to the image quality that sets iPhones apart from many other smartphones.

The Significance of Optimization in Camera Software

In smartphone photography, camera performance plays a crucial role in capturing high-quality images. Many factors contribute, but the optimization of camera software is a key one, shaping both the user experience and the final images. This section highlights the importance of optimizing camera software on Android devices by examining its impact on image quality, features, and overall performance.

Enhancing Image Quality:

Optimizing camera software on Android devices means fine-tuning the algorithms and settings that govern image capture and processing. Careful calibration lets manufacturers get the most out of the camera hardware. Through software optimization, Android cameras can improve noise reduction, color accuracy, dynamic range, and sharpening, and these improvements work together to produce well-balanced, vibrant, and crisp images.

Enabling Advanced Features:

Camera software optimization also enables features that let users go beyond standard photography. By tightening the integration between software and hardware, Android manufacturers can deliver functions such as portrait mode, night mode, HDR, and augmented reality. These features enrich the user experience and expand the creative potential of smartphone photography, narrowing the gap with dedicated cameras.

Improving Overall Performance:

Besides image quality and advanced features, optimizing camera software significantly contributes to the overall performance of Android devices. Efficient software algorithms help reduce shutter lag, minimize processing time, and enhance autofocus speed, resulting in faster and more responsive camera functionality. By streamlining the software code, manufacturers can ensure a seamless and enjoyable user experience, empowering users to capture spontaneous moments without delays or frustrations.

Conclusion:

The optimization of camera software holds immense importance in the realm of smartphone photography. Through meticulous calibration and refinement, Android devices can achieve impressive image quality, unlock innovative features, and deliver swift performance. By prioritizing software optimization, Android camera technology can continue to bridge the gap and compete with other leading smartphone camera systems in the market.

The Role of Artificial Intelligence in iPhone Cameras

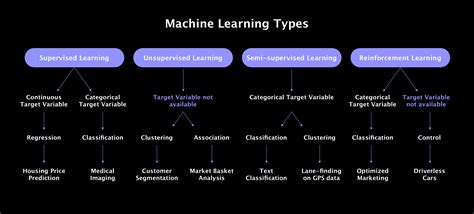

A smartphone camera has become an indispensable tool for capturing life's moments. With each technological advance, artificial intelligence (AI) has played a growing role in how iPhone cameras perceive and capture the world around us.

AI serves as the powerhouse behind the exceptional capabilities of iPhone cameras, enhancing the overall photographic experience. By harnessing the power of advanced algorithms and machine learning, Apple has successfully imbued its devices with a remarkable ability to understand and interpret scenes, optimize settings, and deliver unparalleled image quality.

One crucial aspect where AI excels in iPhone cameras is its efficient recognition of various subjects and objects. Through sophisticated computer vision algorithms, the camera intelligently identifies the scene, allowing for enhanced focusing, exposure adjustments, and advanced photo-taking modes. This seamless integration of AI enables users to effortlessly capture stunning images without the need for manual adjustments.

Furthermore, AI in iPhone cameras takes center stage in revolutionizing the portrait mode feature. By utilizing depth information from dual or triple camera systems, combined with AI-driven algorithms, the camera can accurately separate the subject from the background, creating professional-looking portraits with stunning bokeh effects. The AI algorithms analyze the scene, identify the subject, and successfully blur the background to replicate the aesthetic quality achieved by high-end DSLR cameras.
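To make the subject/background separation concrete, here is a deliberately simplified Python sketch, not Apple's implementation. It works on a single row of grey values, assumes a per-pixel depth estimate is already available, and uses a plain box blur. The function name `portrait_blur` and the `subject_max_depth` threshold are hypothetical.

```python
def portrait_blur(row, depth, subject_max_depth, radius=2):
    """Keep pixels at or nearer than subject_max_depth sharp; box-blur the rest.

    A 1-D toy model (hypothetical, for illustration): `row` is one line of grey
    values and `depth` gives each pixel's estimated distance, assumed to come
    from a stereo or ML depth map computed earlier.
    """
    if len(row) != len(depth):
        raise ValueError("row and depth must have the same length")
    out = []
    for i, (value, d) in enumerate(zip(row, depth)):
        if d <= subject_max_depth:
            out.append(value)  # subject pixel: left untouched
        else:
            lo, hi = max(0, i - radius), min(len(row), i + radius + 1)
            window = row[lo:hi]  # neighbours, clipped at the image edges
            out.append(sum(window) / len(window))
    return out


# A busy striped background (depth 5 m) behind a flat subject (depth 1 m).
row = [0, 100, 0, 100, 50, 50, 50, 0, 100, 0]
depth = [5, 5, 5, 5, 1, 1, 1, 5, 5, 5]
result = portrait_blur(row, depth, subject_max_depth=2)
print([round(v, 1) for v in result])
```

Because the blur window next to the subject also averages in subject pixels, a little of the subject's tone bleeds into the background. Real portrait modes typically refine that edge with a segmentation mask and scale the blur with distance.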

Another area where AI contributes significantly to iPhone cameras is low-light photography. Using computational photography techniques, the camera captures multiple images in rapid succession, then analyzes and combines them to reduce noise and enhance detail. The algorithms adjust exposure and balance highlight and shadow detail, producing sharp, vibrant images even in challenging lighting.
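The burst-and-merge idea can be illustrated with a small simulation. This Python sketch assumes the frames are already perfectly aligned, the scene is static, and each pixel carries independent Gaussian noise; under those assumptions, averaging N frames cuts the noise standard deviation by roughly a factor of √N. It is a toy model of multi-frame noise reduction, not Apple's Night mode pipeline, and all names in it are hypothetical.

```python
import random
import statistics


def capture_frame(true_value, noise_sigma, n_pixels, rng):
    """Simulate one noisy exposure of a flat grey patch.

    Hypothetical noise model: each pixel = true value + Gaussian noise.
    """
    return [true_value + rng.gauss(0.0, noise_sigma) for _ in range(n_pixels)]


def stack_frames(frames):
    """Average already-aligned frames pixel by pixel."""
    n = len(frames)
    return [sum(pixels) / n for pixels in zip(*frames)]


def residual_noise(image, true_value):
    """Standard deviation of each pixel's error against the known true value."""
    return statistics.pstdev([p - true_value for p in image])


rng = random.Random(0)  # fixed seed so the run is repeatable
TRUE, SIGMA, PIXELS = 100.0, 10.0, 20_000
single = capture_frame(TRUE, SIGMA, PIXELS, rng)
burst = [capture_frame(TRUE, SIGMA, PIXELS, rng) for _ in range(8)]
stacked = stack_frames(burst)

print(f"single frame noise: {residual_noise(single, TRUE):.2f}")   # close to 10
print(f"8-frame stack noise: {residual_noise(stacked, TRUE):.2f}")  # close to 10 / sqrt(8), about 3.5
```

Real pipelines typically also have to align frames and reject regions that moved between shots, which is where much of the engineering effort goes.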

The implementation of AI also allows for real-time image processing and enhancement, providing users with instant feedback on the quality of their shots. Features like Smart HDR and Deep Fusion use AI-driven algorithms to analyze multiple exposures and deliver an optimal blend of details, shadows, and highlights, resulting in images with exceptional dynamic range and fidelity.
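Blending multiple exposures can be sketched with a simplified form of exposure fusion (the technique published by Mertens et al.), used here as a stand-in because Smart HDR's internals are not public. Each output pixel is a weighted average of the bracketed exposures, with weights favoring values near mid-grey. The sketch assumes aligned, single-channel frames normalized to 0.0-1.0; the function names and sample values are illustrative.

```python
import math


def well_exposedness(value, sigma=0.2):
    """Weight a normalized pixel value (0.0-1.0) by its closeness to mid-grey.

    Gaussian weighting in the style of the "well-exposedness" term of
    exposure fusion; the weight is always strictly positive.
    """
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))


def fuse_exposures(exposures):
    """Blend aligned exposures pixel by pixel using normalized weights."""
    fused = []
    for pixel_values in zip(*exposures):
        weights = [well_exposedness(v) for v in pixel_values]
        total = sum(weights)  # never zero, since every weight is > 0
        fused.append(sum(w * v for w, v in zip(weights, pixel_values)) / total)
    return fused


# Four pixels (deep shadow, shadow, bright area, highlight) in three brackets.
under = [0.02, 0.20, 0.45, 0.70]
normal = [0.10, 0.50, 0.90, 1.00]
over = [0.40, 0.90, 1.00, 1.00]
fused = fuse_exposures([under, normal, over])
print([round(v, 2) for v in fused])
```

The shadow pixel borrows mostly from the brighter frame and the clipped highlight mostly from the darker one. The full technique also weights by local contrast and saturation and blends at multiple resolutions to avoid visible seams.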

Ultimately, the integration of AI in iPhone cameras presents an exciting future for smartphone photography. By continuously refining and expanding the capabilities of their camera systems, Apple demonstrates their commitment to leveraging artificial intelligence to empower users with the ability to capture and immortalize cherished moments with unparalleled ease and quality.

This section focuses solely on the role of artificial intelligence in iPhone cameras and does not compare it with Android cameras.

User Experience and Ease of Use

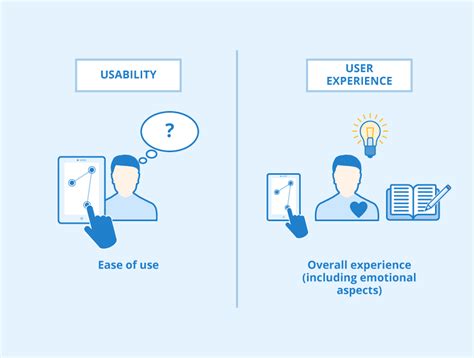

When it comes to using smartphone cameras, the overall user experience and ease of use are crucial factors that can greatly impact one's satisfaction with the device. While both Android and iPhone cameras offer a range of features and capabilities, there are certain aspects where Android cameras may not meet the same level of user experience and ease of use as their iPhone counterparts.

One aspect that sets iPhone cameras apart in terms of user experience is the seamless integration with Apple's ecosystem. With a deep integration between hardware and software, iPhone cameras are designed to provide a streamlined experience that is intuitive and user-friendly. The camera app interface is often praised for its simplicity and ease of navigation, allowing users to quickly access various settings and modes without any confusion.

On the other hand, Android cameras may struggle to provide the same level of seamless integration due to the fragmented nature of the Android ecosystem. With numerous manufacturers and variations of Android devices, camera apps can vary significantly in terms of user interface and functionality. This can result in a less consistent and cohesive user experience across different Android devices, making it more challenging for users to navigate and utilize the camera features effectively.

Another aspect where iPhone cameras excel in terms of ease of use is the optimization of software algorithms. iPhones are known for their impressive image processing capabilities, which often result in high-quality photos with vibrant colors, accurate exposure, and minimal noise. The software works seamlessly in the background to automatically enhance images, allowing users to capture stunning photos effortlessly.

While Android cameras have made significant improvements in recent years, there may still be variations in image quality and processing capabilities due to the different hardware specifications and software optimizations implemented by various manufacturers. This can lead to inconsistencies in terms of image quality and may require users to manually adjust settings to achieve desired results.

- Seamless integration and intuitive interface contribute to the superior user experience of iPhone cameras.

- The fragmented nature of the Android ecosystem can result in a less consistent and cohesive camera experience.

- iPhone cameras utilize optimized software algorithms to produce high-quality photos effortlessly.

- Variations in hardware and software optimizations among Android devices can lead to inconsistencies in image quality.

Overall, while Android cameras have certainly improved over the years, the user experience and ease of use on iPhone cameras still stand out, thanks to their seamless integration, intuitive interface, and optimized software algorithms. However, it's important to note that individual preferences and requirements may vary, and some users may find Android cameras more suitable for their specific needs and preferences.

Apple's Emphasis on Camera Design and Integration

In the ever-evolving world of smartphone technology, one key area where Apple has consistently set itself apart is in the design and integration of its camera systems. With a steadfast commitment to innovation and quality, Apple has redefined what it means to capture stunning photos and videos on a mobile device. By focusing on meticulous camera design and seamless integration with its software ecosystem, Apple continues to raise the bar for smartphone photography and videography.

Apple's dedication to camera design goes beyond mere aesthetics. Every detail is carefully considered to ensure optimal performance and user experience. From the lens to the sensor to the image signal processor, Apple engineers work tirelessly to create a camera system that captures exquisite details, vibrant colors, and sharpness in every shot. This meticulous approach sets Apple apart from other smartphone manufacturers, as they prioritize both form and function in their camera design.

In addition to superior hardware, Apple's integration of its camera with its software ecosystem is a key factor in its success. By tightly integrating the camera system with the iOS operating system, Apple is able to optimize the overall user experience. Features such as Smart HDR, Night mode, and Deep Fusion utilize the power of Apple's A-series chips and advanced algorithms to deliver impressive results in various lighting conditions. The seamless integration of hardware and software allows users to effortlessly capture stunning photos and videos, without the need for extensive post-processing.

Apple's commitment to privacy and security also extends to its camera technology. Face ID, which relies on the front-facing TrueDepth camera, keeps the facial data it uses on the device, protected by the Secure Enclave. This focus on privacy and security gives users the confidence to use camera-based features without hesitation.

In conclusion, Apple's unwavering focus on camera design and integration has propelled it to be a leader in smartphone photography and videography. With thoughtful attention to both aesthetics and functionality, Apple continues to push the boundaries of what is possible in mobile photography. The seamless integration of hardware and software, coupled with a commitment to privacy and security, ensures that Apple's camera system remains a key differentiator in the competitive smartphone market.

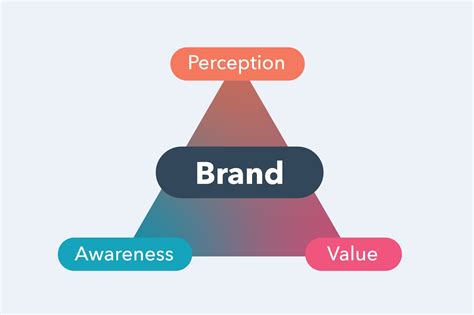

The Impact of Branding and Marketing on Perception

In today's highly competitive smartphone market, a product's success is not determined solely by its technical capabilities or features. How a brand positions itself and the marketing strategies it employs play a crucial role in shaping consumer perception and preference. This section explores how branding and marketing influence the perception of smartphone cameras and examines the factors behind the perceived superiority of certain brands over others.

Branding encompasses the overall image and identity of a company, including its logo, design language, and values. Effective branding builds trust and familiarity among consumers, creating a level of loyalty that can greatly impact purchasing decisions. By creating a strong brand, smartphone manufacturers can instill a sense of reliability and quality in their products, leading consumers to favor their offerings over competitors.

Marketing strategies further amplify the influence of branding on consumer perception. Through targeted advertising campaigns, companies are able to highlight the unique features and capabilities of their smartphone cameras, shaping the narrative around their products. Strategic partnerships, endorsements, and social media presence all contribute to creating a perception of exclusivity and desirability, further enhancing the brand's influence.

Another aspect to consider is the role of user experiences and opinions in shaping perception. Word-of-mouth referrals, online reviews, and social media discussions all contribute to the overall perception of smartphone cameras. Positive experiences and reviews can reinforce the branding and marketing efforts, solidifying the perceived superiority of a particular brand.

In conclusion, the influence of branding and marketing on the perception of smartphone cameras cannot be overstated. By positioning their products strategically and employing effective marketing, smartphone manufacturers can shape consumer preference and drive the success of their offerings in a highly competitive market.

Why Androids Have Bad Cameras

Video: "Why Androids Have Bad Cameras" by Apple Explained (2 minutes, 16 seconds).

FAQ

Why do Android cameras often produce lower quality photos compared to iPhones?

Several factors contribute to this difference. One is the camera hardware: Apple uses high-quality sensors across its iPhone lineup, which supports good image capture. Apple's image processing algorithms are also well tuned to enhance color, sharpness, and dynamic range. Android devices use a far more diverse range of sensors, so performance varies considerably between models, and many Android manufacturers struggle to match Apple's level of software optimization, which can leave their photos looking less impressive.

Are there any Android smartphones with cameras comparable to iPhones?

Yes, several Android smartphones can rival the camera performance of iPhones. Companies like Google and Samsung have made significant improvements in recent years and offer phones with exceptional camera capabilities. The Google Pixel series, for example, is well known for its camera performance, thanks to its advanced computational photography techniques. Samsung's flagship Galaxy S series, including the Ultra models that absorbed the former Note line, also delivers impressive results with high-end camera sensors and effective image processing software.

What can Android users do to improve the camera quality on their devices?

While Android users may not have the same level of camera quality out of the box as iPhone users, there are still steps they can take to enhance their photography experience. Firstly, using third-party camera apps that offer manual controls can provide more control over the image capture process, allowing users to fine-tune settings like exposure, ISO, and white balance. Additionally, investing in external accessories, such as lens attachments or tripods, can significantly improve the overall image quality. Lastly, exploring and experimenting with different editing apps can help enhance the final result of the photos taken on Android devices.
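Manual controls are easier to use once the trade-off between shutter speed and ISO is clear. The sketch below uses the standard exposure value formula referenced to ISO 100, EV100 = log2(N²/t) - log2(ISO/100), where N is the f-number and t is the shutter time in seconds. The f/1.8 aperture and the specific settings are illustrative assumptions, not values from any particular phone.

```python
import math


def ev100(f_number, shutter_s, iso):
    """Exposure value referenced to ISO 100.

    EV100 = log2(N^2 / t) - log2(ISO / 100); settings with the same EV100
    give the same image brightness for a given scene.
    """
    return math.log2(f_number ** 2 / shutter_s) - math.log2(iso / 100)


# Phone main cameras usually have a fixed aperture, so f/1.8 (an assumed
# value) is held constant and only shutter speed and ISO are traded.
fast = ev100(1.8, 1 / 60, 800)  # freezes motion better, but noisier
slow = ev100(1.8, 1 / 30, 400)  # cleaner, but more risk of motion blur
print(round(fast, 2), round(slow, 2))  # both about 4.6
```

Both settings produce the same brightness: doubling the shutter time is offset by halving the ISO. Which one to choose depends on whether motion blur or noise is the bigger problem for the shot.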

Do all Android devices have inferior cameras compared to iPhones?

No, not all Android devices have inferior cameras compared to iPhones. As mentioned earlier, there are several Android smartphones on the market that can compete with or even surpass the camera quality of iPhones. However, due to the fragmented nature of the Android ecosystem, with numerous manufacturers offering a wide range of devices, the camera performance can vary greatly between different models. It's important for users to research and choose Android smartphones that specifically prioritize camera quality if that is a significant factor for them.

Is it fair to say that iPhones are the undisputed kings of smartphone photography?

While iPhones have undoubtedly made a significant impact on smartphone photography and are often regarded as having some of the best cameras available, it wouldn't be entirely fair to call them the undisputed kings. Android manufacturers have pushed the boundaries of camera technology, and several features, such as very high megapixel counts, periscope zoom lenses, and dedicated night modes, appeared on Android phones before they reached iPhones. Ultimately, which phones excel at photography depends on individual preferences and priorities.

Why do Android cameras fall short compared to iPhone?

Android cameras often fall short of the iPhone for a few reasons. First, iPhone cameras benefit from advanced image processing algorithms and software optimization, which improve overall image quality. Second, Apple's tight hardware and software integration allows for a seamless user experience and stronger camera performance. Third, Android devices use a wide variety of camera sensors and lenses, leading to inconsistent image quality across models. Finally, the camera app experience on Android can be inconsistent and may lack the intuitive interface found on iPhones.