Among today's operating systems, Linux stands out as a platform for serious work with digital text. Its ecosystem of command-line utilities, scripting languages, and libraries makes it well suited to language processing, from quick searches to in-depth linguistic analysis. This guide walks you through what Linux offers and equips you with the tools and knowledge to put it to use.

Along the way, you will see how plain text, whether ASCII or Unicode, can be searched, split, and analyzed. Linux provides a wide range of utilities for dissecting and interpreting textual data, from small single-purpose command-line programs to versatile scripting languages, and learning to combine them changes how you extract and transform information from written language.

The goal is to become fluent in text processing: with Linux you can break documents into sentences and words, inspect their structure, and transform them at scale. Because the toolchain is flexible and fast, the same techniques work on a single file or on a large collection of documents, letting you reshape text to suit whatever analysis you need.

Choosing the Right Tools for Efficient Text Manipulation on the Linux Platform

When it comes to processing textual data on the versatile and robust Linux operating system, having the right tools at your disposal can make all the difference. In this section, we will explore the various options available for manipulating and extracting meaningful information from text in a concise and efficient manner.

Command Line Text Editors: One of the most fundamental tools for text processing on Linux is a command line text editor. Options like vi, Emacs, and nano provide powerful features for editing and manipulating text files directly from the terminal. These editors offer customizable options, efficient navigation, and a wide range of functionalities that cater to different user preferences.

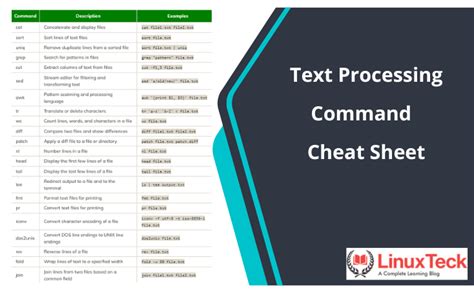

Text Processing Utilities: Linux offers a vast array of text processing utilities, each designed for specific tasks. Tools like grep, awk, and sed enable searching for patterns, extracting specific fields, and performing transformations on text files. These utilities are highly versatile and can be combined with other tools to create complex text processing pipelines.
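To show how these utilities chain together, the sketch below reimplements a typical pipeline, `grep ERROR app.log | awk '{print $3}' | sed 's/-/_/' | sort | uniq -c`, as Python generator functions, one per stage. The helper names, the log format, and the file name `app.log` are made up for illustration, and each helper covers only the behavior described in its docstring, not the full option set of the real tool.

```python
import re
from collections import Counter
from typing import Iterable, Iterator


def grep(pattern: str, lines: Iterable[str]) -> Iterator[str]:
    """Yield lines containing a match for pattern (like `grep -E`)."""
    regex = re.compile(pattern)
    return (line for line in lines if regex.search(line))


def awk_field(n: int, lines: Iterable[str]) -> Iterator[str]:
    """Yield the n-th whitespace-separated field, 1-based (like awk '{print $n}').

    Unlike awk, which prints an empty line, lines with fewer than n fields are skipped.
    """
    for line in lines:
        fields = line.split()
        if len(fields) >= n:
            yield fields[n - 1]


def sed_sub(pattern: str, repl: str, lines: Iterable[str]) -> Iterator[str]:
    """Replace the first match on each line (like sed 's/pattern/repl/')."""
    regex = re.compile(pattern)
    return (regex.sub(repl, line, count=1) for line in lines)


def error_summary(lines: Iterable[str]) -> list[tuple[str, int]]:
    """grep ERROR | awk '{print $3}' | sed 's/-/_/' | sort | uniq -c"""
    stage = sed_sub("-", "_", awk_field(3, grep("ERROR", lines)))
    return sorted(Counter(stage).items())


# Made-up log lines: date, level, error code, location.
log = [
    "2024-01-05 ERROR disk-full /var",
    "2024-01-05 INFO started",
    "2024-01-06 ERROR disk-full /home",
    "2024-01-06 ERROR net-down eth0",
]
print(error_summary(log))  # [('disk_full', 2), ('net_down', 1)]
```

Because every stage is a generator, lines flow through one at a time, much as they do through a shell pipe.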

Regular Expressions: Regular expressions are a powerful tool for pattern matching and text manipulation on the Linux platform. By using a combination of characters, metacharacters, and modifiers, regular expressions allow for precise and flexible searching and substitution operations. Understanding and mastering regular expressions can significantly enhance your text processing capabilities.
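As a small illustration, the Python sketch below uses the standard `re` module to substitute with backreferences, extract captured groups, and validate a whole string. Python's regex syntax is close to, but not identical with, the POSIX basic and extended syntaxes used by grep and sed: the date rewrite here corresponds roughly to `sed -E 's|([0-9]{4})-([0-9]{2})-([0-9]{2})|\2/\3/\1|g'`, because `\d` is not POSIX (and in Python it also matches non-ASCII digits unless `re.ASCII` is set). The ticket ID format is hypothetical.

```python
import re

# ISO-style date: capture year, month and day as groups 1, 2 and 3.
DATE = re.compile(r"(\d{4})-(\d{2})-(\d{2})")
# Hypothetical ticket ID format for this example: three capitals, a dash, four digits.
TICKET_ID = re.compile(r"[A-Z]{3}-\d{4}")


def to_us_dates(text: str) -> str:
    """Rewrite every YYYY-MM-DD as MM/DD/YYYY using backreferences."""
    return DATE.sub(r"\2/\3/\1", text)


def years(text: str) -> list[str]:
    """Extract the year from each date found in text."""
    return [m.group(1) for m in DATE.finditer(text)]


def is_ticket_id(s: str) -> bool:
    """Validate input: the whole string must match, not just a substring."""
    return TICKET_ID.fullmatch(s) is not None


text = "Opened 2024-01-05, closed 2024-02-11."
print(to_us_dates(text))  # Opened 01/05/2024, closed 02/11/2024.
print(years(text))        # ['2024', '2024']
print(is_ticket_id("ABC-1234"), is_ticket_id("ABC-12345"))  # True False
```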

Scripting Languages and Libraries: For more advanced text processing tasks, a general-purpose scripting language can save time and effort. Perl, Python, and Ruby are readily available from the package repositories of every major Linux distribution, and their standard libraries include regular expression engines along with string, file, and encoding utilities. These built-in functions and modules simplify complex tasks and make it practical to handle large volumes of text data.
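For instance, Python's standard library alone is enough for a word frequency count, roughly what a `tr ... | sort | uniq -c | sort -rn` pipeline produces in the shell. This is a minimal sketch: the tokenizing pattern (lowercase ASCII letters and apostrophes) is a deliberate simplification that splits accented words and drops digits.

```python
import re
from collections import Counter

# Lowercase ASCII letters plus apostrophes, so "don't" stays one word (a simplification).
WORD = re.compile(r"[a-z']+")


def top_words(text: str, n: int = 3) -> list[tuple[str, int]]:
    """Return the n most frequent words, most common first."""
    words = WORD.findall(text.lower())
    return Counter(words).most_common(n)


print(top_words("The cat and the hat and the bat", 2))  # [('the', 3), ('and', 2)]
```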

Choosing the Right Tools: To ensure efficient text processing on Linux, it is essential to select the appropriate tools based on the requirements of your specific task. Consider factors such as the complexity of the task, performance requirements, and familiarity with different tools. Experimenting with different options and evaluating their effectiveness will help you identify the most suitable tools for your text processing needs.

Whether you are manipulating large text files, extracting data from log files, or processing textual data for analysis, having a solid understanding of the various text processing tools available on Linux can greatly enhance your productivity and efficiency. By making informed choices and leveraging the power of these tools, you can streamline your text processing workflows and unlock the full potential of the Linux ecosystem.

Text Editors on Linux: An Extensive Overview

In this section, we will delve into an extensive overview of the various text editors available on the Linux platform. Whether you are a seasoned Linux user or just starting to explore this versatile operating system, having a comprehensive understanding of different text editors can greatly enhance your text processing tasks.

Text editors play a crucial role in manipulating and formatting textual content on Linux systems. They serve as powerful tools for developers, writers, system administrators, and anyone who deals with text-based tasks regularly. With an array of options available, each text editor comes with its unique set of features, interface, and customization capabilities.

To begin with, we will provide an overview of some of the most popular and widely used text editors, such as Vim, Emacs, Nano, and Sublime Text. We will explore their key characteristics, strengths, and weaknesses, giving you insights into which editor might best suit your specific needs.

- Vim: A modal editor derived from vi, Vim is known for its extensive customization options and rich command set, which appeal to advanced users and programmers.

- Emacs: Renowned for its extensibility, Emacs is programmable in Emacs Lisp and offers a rich ecosystem of packages and modes that let users tailor the editor to their preferences.

- Nano: As a beginner-friendly text editor, Nano offers a simple and intuitive interface, making it an ideal choice for users who are new to Linux or prefer a straightforward editing experience.

- Sublime Text: Combining elegance and functionality, Sublime Text provides a sleek user interface along with an extensive selection of plugins and themes, making it popular among both professional and casual users.

In addition to these prominent choices, we will also explore other text editors that offer unique features or cater to specific use cases. By providing a comprehensive overview of different text editors, this section aims to equip you with the knowledge necessary to make an informed decision when selecting a text editor for your Linux text processing endeavors.

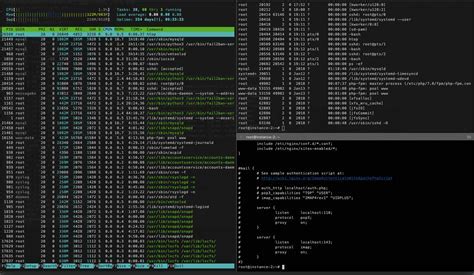

Mastering the Command Line for Efficient Text Manipulation in a Linux Environment

In this section, we focus on using the command line to process text efficiently on Linux. As you become comfortable running commands in the terminal, you will learn how to manipulate and analyze text effectively, streamlining your workflow and boosting your productivity.

Automating Text Processing Tasks with Shell Scripts on Linux

In this section, we will explore the power of shell scripting in automating various text processing tasks on a Linux system. Shell scripts provide a convenient way to automate repetitive tasks, improve productivity, and save time. By leveraging the capabilities of the Linux command-line interface, you can achieve efficient and flexible text processing workflows.

One of the main advantages of using shell scripts for text processing is the ability to combine different commands and utilities seamlessly. This allows you to perform complex operations on text files, such as searching, replacing, sorting, filtering, and formatting, with just a few lines of code. By utilizing the wide range of Linux command-line tools and their respective options, you can create powerful and customized text processing scripts tailored to your specific needs.

Shell scripts also let you process large volumes of text files in batches. Using loops and conditional statements, you can iterate over multiple files, apply the same processing steps to each one, and produce consistent results. This not only spares you repetitive manual work but also ensures consistency and accuracy across your text processing tasks. Typical tasks worth automating include:

- Performing search and replace operations on text files using regular expressions

- Sorting and filtering text files based on specific criteria or patterns

- Extracting specific information from text files and generating reports

- Converting text files between different formats and encodings

- Combining multiple text files into a single consolidated file

- Processing text input from standard input or other command outputs

- Creating reusable and modular scripts for text processing tasks
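Several of these tasks often appear together. The sketch below uses Python; in Bash the same job would typically be a `for f in *.txt` loop around `sed` and `iconv`. It loops over every `*.txt` file in a directory, applies one regular-expression substitution, records only the files that actually changed, and writes the results as UTF-8, which also converts their encoding. The function name `batch_replace`, the directory layout, and the Latin-1 source encoding in the usage note are assumptions for illustration.

```python
import re
from pathlib import Path


def batch_replace(src_dir, dst_dir, pattern: str, repl: str,
                  src_encoding: str = "utf-8") -> list[str]:
    """Apply one regex substitution to every *.txt file in src_dir.

    Each file is read with src_encoding and written to dst_dir as UTF-8,
    so the same pass also converts the encoding. Returns the names of the
    files that contained at least one match.
    """
    regex = re.compile(pattern)
    dst = Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    changed = []
    for path in sorted(Path(src_dir).glob("*.txt")):
        text = path.read_text(encoding=src_encoding)
        new_text, n_subs = regex.subn(repl, text)
        (dst / path.name).write_text(new_text, encoding="utf-8")
        if n_subs > 0:  # conditional: record only files that actually changed
            changed.append(path.name)
    return changed
```

A call such as `batch_replace("reports", "reports_utf8", r"colou?r", "color", src_encoding="latin-1")` (hypothetical directory names) leaves the originals untouched and writes converted copies, which keeps the operation easy to undo.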

In the upcoming sections, we will delve into the details of these automation techniques and provide practical examples to illustrate their usage. By the end of this guide, you will have a solid understanding of how to harness the power of shell scripting to automate your text processing workflows on a Linux system.

Advanced Techniques for Processing Text on Linux

In this section, we will explore advanced techniques for manipulating and analyzing textual data on the Linux operating system. By employing sophisticated methods and tools, you can optimize your text processing tasks and streamline your workflow.

1. Regular Expressions: Mastering regular expressions is a fundamental skill for text processing on Linux. We will delve into the syntax and usage of regular expressions to search, match, and replace patterns within text files.

2. Command Line Tools: Linux offers a plethora of command line tools specifically designed for text processing. We will explore the power of tools like sed, awk, grep, and cut, and learn how to leverage their capabilities to perform complex operations on large text datasets.

3. Text Mining: Discover how to extract valuable insights from text data using advanced techniques such as tokenization, stemming, and named entity recognition. We will also explore topic modeling and sentiment analysis, enabling you to uncover patterns and sentiments hidden within textual information.

4. Text Classification: Learn how to classify text documents using machine learning algorithms on Linux. We will cover techniques such as feature extraction, model training, and evaluation, allowing you to build efficient classifiers for tasks like spam detection, sentiment analysis, and document categorization.

5. Natural Language Processing: Dive into the exciting field of natural language processing (NLP) on Linux. Discover how to utilize libraries like NLTK and spaCy to perform advanced linguistic processing tasks, including part-of-speech tagging, named entity recognition, and syntactic parsing.
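Libraries such as NLTK and spaCy provide production-quality tokenizers, stemmers, and taggers. To show what tokenization and text classification involve underneath, here is a dependency-free Python sketch of a regular-expression tokenizer and a multinomial Naive Bayes classifier with add-one smoothing, a classic baseline for spam detection. It is not the API of either library, the class and function names are hypothetical, and the four training documents are toy data; a real classifier needs far more data and proper evaluation.

```python
import math
import re
from collections import Counter, defaultdict

TOKEN = re.compile(r"[a-z0-9]+")


def tokenize(text: str) -> list[str]:
    """Lowercase, then split on anything that is not an ASCII letter or digit."""
    return TOKEN.findall(text.lower())


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs: list[str], labels: list[str]) -> "NaiveBayes":
        self.class_counts = Counter(labels)
        self.word_counts: dict[str, Counter] = defaultdict(Counter)
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, doc: str) -> str:
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            # Sum log-probabilities to avoid underflow from multiplying many small numbers.
            score = math.log(n_docs / total_docs)
            for word in tokenize(doc):
                if word in self.vocab:  # ignore words never seen in training
                    score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


docs = ["win money now", "cheap money offer", "meeting at noon", "project meeting notes"]
labels = ["spam", "spam", "ham", "ham"]
model = NaiveBayes().fit(docs, labels)
print(model.predict("money offer now"))  # spam
print(model.predict("meeting notes"))    # ham
```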

By mastering these advanced text processing techniques on Linux, you will be able to efficiently manipulate, analyze, and extract valuable information from textual data, enabling you to tackle a wide range of real-world text processing challenges.

Data Manipulation and Analysis with Linux Command Line Tools

In this section, we will explore how Linux command line tools can be utilized for manipulating and analyzing data. Linux, being a versatile and powerful operating system, offers a wide range of command line tools that can efficiently handle data processing tasks.

With the help of these tools, users can transform, modify, and analyze data in various formats, such as text files, CSV files, and JSON files. The command line tools provide a flexible and efficient way to perform tasks like filtering, sorting, merging, and aggregating data.

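One of the key benefits of Linux command line tools for data manipulation and analysis is how well they handle large datasets. Most of them read their input as a stream, one line at a time, so memory use stays low even for files larger than the available RAM; GNU sort, which must see all of its input before producing output, falls back to temporary files instead. This makes them well suited to processing large files on a single machine.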

Some of the commonly used Linux command line tools for data manipulation and analysis include sed, awk, grep, sort, cut, and join. Each of these tools offers a unique set of functionalities that can be used to perform specific data processing tasks.
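As an example of merging and aggregating, the sketch below does in Python what `join -t,` followed by a per-key sum in awk would do: it joins orders to customers on a shared ID and totals the amount per region. Like `join`'s default behavior it is an inner join, keeping only rows that match on both sides; unlike `join`, it does not require sorted input, because it looks keys up in a dictionary. The column names and sample data are hypothetical.

```python
import csv
import io
from collections import defaultdict


def revenue_by_region(customers_csv: str, orders_csv: str) -> dict[str, float]:
    """Inner-join orders to customers on customer_id, then sum amount per region."""
    # Build a lookup table: customer_id -> region.
    region_of = {
        row["customer_id"]: row["region"]
        for row in csv.DictReader(io.StringIO(customers_csv))
    }
    totals: dict[str, float] = defaultdict(float)
    for row in csv.DictReader(io.StringIO(orders_csv)):
        region = region_of.get(row["customer_id"])
        if region is None:  # no matching customer: dropped, as in an inner join
            continue
        totals[region] += float(row["amount"])
    return dict(sorted(totals.items()))


customers = "customer_id,region\n1,north\n2,south\n3,north\n"
orders = "customer_id,amount\n1,10.5\n2,4\n3,1.5\n9,100\n"
print(revenue_by_region(customers, orders))  # {'north': 12.0, 'south': 4.0}
```

For real files, pass `open(path, newline="")` to `csv.DictReader` instead of wrapping strings in `io.StringIO`.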

Furthermore, the combination of these tools with shell scripting allows for the automation of complex data processing workflows. By scripting the commands and utilizing various command line tools, users can build powerful data pipelines to perform repetitive tasks and analyze data on a regular basis.

Overall, understanding and utilizing Linux command line tools for data manipulation and analysis can greatly enhance productivity and efficiency in various fields, such as data science, research, and business analytics. These tools provide a flexible and powerful environment for working with data, enabling users to efficiently process and analyze large datasets.

Collaborative Text Processing with Version Control on Linux

In this section, we explore the power of collaboration and version control in the context of text processing on the Linux operating system. By leveraging version control tools, such as Git, we can enhance productivity, ensure project integrity, and streamline the collaborative workflow when working with textual content.

Streamlining Collaboration: Discover how version control systems enable efficient collaboration among multiple users on text processing projects. We discuss the benefits of decentralized version control, branching strategies, and resolving conflicts to foster seamless teamwork and enhance project outcomes.

Ensuring Project Integrity: Delve into the importance of version control in maintaining project integrity. Learn how version control systems allow us to track and monitor changes made to text files, facilitating backups, rollbacks, and ensuring data integrity throughout the project lifecycle.
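Tracking changes ultimately comes down to comparing versions of a file line by line. Git records snapshots and computes diffs when you ask for them (with the Myers algorithm by default); the Python sketch below uses the standard `difflib` module to produce the same unified diff format you see from `git diff`. `difflib` uses a different matching algorithm, so its hunks can occasionally differ from Git's, and the file name `notes.txt` is only an example.

```python
import difflib


def unified_diff(old: str, new: str, name: str = "notes.txt") -> str:
    """Return a unified diff between two versions of a text file."""
    return "".join(
        difflib.unified_diff(
            old.splitlines(keepends=True),
            new.splitlines(keepends=True),
            fromfile=f"a/{name}",
            tofile=f"b/{name}",
        )
    )


print(unified_diff("alpha\nbeta\ngamma\n", "alpha\nBETA\ngamma\n"), end="")
# --- a/notes.txt
# +++ b/notes.txt
# @@ -1,3 +1,3 @@
#  alpha
# -beta
# +BETA
#  gamma
```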

Enhancing Productivity: Explore how version control systems can boost productivity in text processing tasks on Linux. Discover techniques to optimize your workflow, such as writing effective commit messages, using issue tracking systems, and relying on code reviews to streamline collaborative text processing.

Utilizing Collaboration Tools: Explore a range of collaboration tools within the Linux environment that integrate seamlessly with version control systems. From text editors with built-in version control support to online platforms for code collaboration, we delve into the diverse options available and their potential benefits for efficient collaborative text processing.

Best Practices for Collaborative Text Processing: Gain insights into best practices for collaborating on text processing projects using version control on Linux. Explore strategies for effective communication, documentation, and project management that can help maximize productivity and facilitate successful collaboration in text processing tasks.

Case Studies: We present real-world examples and case studies showcasing the successful application of version control systems in collaborative text processing on Linux. By examining these cases, we highlight the diverse ways in which teams have utilized version control tools to enhance their efficiency, quality, and collaboration in text processing projects.

Embark on a journey to unlock the potential of collaborative text processing using version control on Linux. By embracing these strategies and tools, you can optimize your workflow, ensure project integrity, and elevate the productivity of your text processing endeavors.

Boosting Efficiency in Manipulating Text Data on the Linux Platform

Enhancing the performance of text processing on Linux can greatly impact the speed and efficiency of various tasks that involve handling textual data. This section explores techniques and strategies to optimize text processing performance, ensuring quicker execution and improved overall productivity.

1. Understanding Text Processing Algorithms: Gaining insights into the inner workings of text processing algorithms helps identify areas for improvement. Analyzing algorithms such as string searching, sorting, and pattern matching provides a foundation for optimizing and fine-tuning their performance.

2. Streamlining File I/O Operations: Effective utilization of Linux file I/O mechanisms can significantly enhance text processing performance. Exploring techniques like memory mapping, buffered I/O, and asynchronous I/O helps minimize disk operations and maximize read/write speeds.

3. Harnessing Multi-core Processing: Capitalizing on the power of multi-core processors allows for parallel text processing, where multiple parts of a file or multiple files can be processed simultaneously. Employing techniques such as parallel execution and thread pooling can substantially reduce processing times for computationally intensive tasks.

4. Utilizing Regular Expressions: Well-written regular expressions make pattern-matching tasks both concise and fast. Choosing an efficient regular expression engine and compiling each pattern once before reusing it, rather than rebuilding it for every input, can significantly boost performance.

5. Memory Management Techniques: Proper memory management plays a crucial role in optimizing text processing performance. Implementing strategies such as efficient memory allocation, caching, and avoiding memory leaks ensures efficient memory usage, resulting in faster text processing execution.

6. Leveraging External Tools and Libraries: Utilizing specialized external tools and libraries tailored for text processing can expedite various tasks. Exploring popular tools like AWK, sed, and GNU parallel, as well as leveraging high-performance libraries like Boost.Regex, can significantly enhance text processing efficiency.
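The sketch below illustrates points 2 and 4 in Python: a regular expression compiled once and reused, applied either to a memory-mapped file or to buffered line-by-line reads. It uses Python's standard `re` and `mmap` modules rather than Boost.Regex or GNU parallel, and the `ERROR` log format is an assumption. Which variant is faster depends on file size, page cache state, and access pattern, so measure on your own data before choosing.

```python
import mmap
import os
import re

# Compile once, reuse for every file. mmap exposes raw bytes, so the pattern is bytes too.
ERROR_RE = re.compile(rb"^ERROR\b", re.MULTILINE)


def count_errors_mmap(path: str) -> int:
    """Count lines starting with the word ERROR by scanning a memory-mapped file."""
    with open(path, "rb") as f:
        if os.fstat(f.fileno()).st_size == 0:  # mmap cannot map an empty file
            return 0
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            return sum(1 for _ in ERROR_RE.finditer(mm))


def count_errors_streaming(path: str) -> int:
    """Same count using buffered, line-at-a-time reads (constant memory)."""
    with open(path, "rb") as f:
        return sum(1 for line in f if ERROR_RE.match(line))
```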

By employing these techniques and strategies, Linux users can optimize text processing performance, enabling faster execution times, streamlined workflows, and improved productivity in handling textual data.

FAQ

What is Linux and why is it used for text processing?

Linux is an open-source operating system that is widely used for its stability, security, and flexibility. It is preferred for text processing because of its powerful command-line tools and scripting capabilities that allow users to easily manipulate and process text data.

What are some of the most commonly used text processing tools in Linux?

Some commonly used text processing tools in Linux are sed, awk, grep, and tr. These tools provide various functionalities such as pattern matching, text substitution, data extraction, and processing, making them essential for text manipulation tasks.
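Of these, tr is the simplest: it translates, deletes, or squeezes single characters. The Python sketch below mirrors three common invocations with `str.translate` and `re.sub`; the function names are hypothetical, and only ASCII character ranges are handled.

```python
import re
import string

TO_UPPER = str.maketrans(string.ascii_lowercase, string.ascii_uppercase)
DROP_DIGITS = str.maketrans("", "", string.digits)


def tr_upper(text: str) -> str:
    """Like `tr 'a-z' 'A-Z'`: map each ASCII lowercase letter to uppercase."""
    return text.translate(TO_UPPER)


def tr_delete_digits(text: str) -> str:
    """Like `tr -d '0-9'`: delete every ASCII digit."""
    return text.translate(DROP_DIGITS)


def tr_squeeze_spaces(text: str) -> str:
    """Like `tr -s ' '`: collapse runs of spaces into a single space."""
    return re.sub(r" {2,}", " ", text)


print(tr_upper("grep-3.11"))          # GREP-3.11
print(tr_delete_digits("a1b22c333"))  # abc
print(tr_squeeze_spaces("a   b  c"))  # a b c
```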

Can I use regular expressions for text processing in Linux?

Yes, Linux provides support for regular expressions in many of its text processing tools. Regular expressions are a powerful way to search, match, and manipulate text based on specific patterns. They can be used for tasks like finding and replacing text, extracting specific data, or validating input.

Is it possible to automate text processing tasks in Linux?

Absolutely! One of the great advantages of Linux for text processing is its ability to automate tasks using scripts. With the help of scripting languages like Bash, Python, or Perl, you can create scripts that can perform complex text processing operations automatically. This saves time and effort, especially when dealing with large volumes of text data.