Scientists around the world are constantly seeking innovative ways to push the boundaries of our understanding of the natural world. In this relentless quest for knowledge, the choice of an operating system can play a significant role in the success of scientific research. While the market offers a plethora of options, Linux emerges as a powerful and versatile tool that empowers scientists across various disciplines.

Embracing the Untapped Potential:

Unlike its counterparts, Linux offers an unparalleled level of flexibility and customization, providing scientists with a limitless playground for their research endeavors. With its open-source nature, Linux enables researchers to delve into every aspect of the operating system, adapting it to their specific needs and preferences. This unrivaled access to the inner workings of the system allows for the seamless integration of cutting-edge scientific tools and algorithms, fostering groundbreaking discoveries.

The Pathway to Stability and Security:

When conducting scientific research, maintaining the integrity and security of data is of utmost importance. Linux has a strong reputation for stability and security, which helps protect research data against common threats. Its large community of developers continually reviews the code and usually patches reported vulnerabilities quickly, improving the overall reliability of the operating system. Linux also supports a wide range of file systems and data management tools for storing, analyzing, and sharing large volumes of research data.

Unleashing the Power of Distributed Computing:

Scientific research often requires significant computational resources to handle complex simulations, data analysis, and modelling tasks. Linux, with its strong support for distributed computing, enables scientists to harness the power of multiple interconnected machines and shorten the time their computations take. Mature tooling for building clusters and managing distributed computing environments lets researchers tackle increasingly intricate problems and brings scientific breakthroughs within reach sooner.

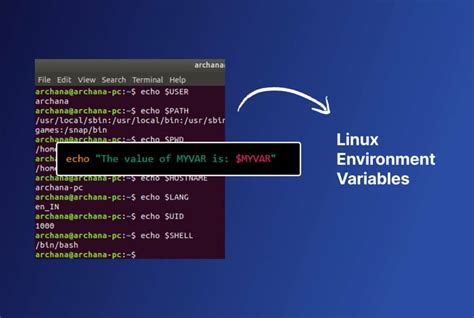

Creating a Linux Environment for Scientific Inquiry

In this section, we will delve into the process of establishing a Linux-based environment tailored specifically for conducting scientific research. By customizing your Linux setup to meet the unique requirements of your experiments and analyses, you can ensure an efficient and productive workflow, while benefiting from the robustness and flexibility of the Linux operating system.

One of the foundational steps in setting up a Linux environment for scientific research is selecting a suitable Linux distribution. We will explore the various distributions available and discuss their features and suitability for different scientific disciplines. Additionally, we will explain the process of installing the chosen distribution and addressing any specific requirements that may arise during the installation.

Furthermore, we will cover the essential tools and packages that are indispensable for scientific research. This includes software for data manipulation, statistical analysis, plotting and visualization, as well as programming environments and compilers for scientific computing. We will provide recommendations and step-by-step instructions for installing and configuring these tools, ensuring a seamless integration within your Linux environment.

| Key Topics Covered | Summary |
|---|---|
| Choosing a Linux Distribution | Exploring different distributions and selecting one that aligns with your research requirements. |
| Installation Process | Step-by-step instructions for installing your chosen Linux distribution, including tips for addressing potential hurdles. |
| Essential Tools and Packages | An overview of the must-have software for scientific research and detailed installation guidance. |

By the end of this section, you will have a solid foundation for establishing a Linux environment tailored to your scientific inquiry needs, providing a reliable and efficient platform for your research endeavors.

Mastering Key Commands for Scientific Exploration on Linux

Delving into the realm of scientific research requires a solid grasp of essential commands on the Linux operating system. Effectively navigating the command line interface empowers scientists and researchers with the necessary tools to extract, analyze, and manipulate data in their respective fields. This section aims to provide a comprehensive overview of fundamental Linux commands that are indispensable for conducting scientific investigations, enabling researchers to harness the full potential of their Linux environment.

1. File and Directory Manipulation: Gain expertise in manipulating files and directories through commands such as ls (to list files and directories), cd (to change directories), mkdir (to create directories), and rm (to remove files and directories). These commands form the foundation of managing and organizing scientific data efficiently.

2. Data Extraction and Analysis: Familiarize yourself with powerful commands like grep (to search for specific patterns within files), awk (to extract and process data), and sed (to perform text transformations on data). Acquiring proficiency in these commands equips researchers with the ability to extract valuable insights from complex datasets.

3. Text File Manipulation: Develop proficiency in manipulating text-based files using commands such as cat (to concatenate files), head (to display the beginning of a file), and tail (to display the end of a file). These commands enable researchers to extract and manipulate specific portions of text-based data, aiding in efficient data extraction and analysis.

4. Process Monitoring and Management: Explore commands like ps (to display active processes), top (to monitor system activity), and kill (to terminate running processes) that provide researchers with the ability to monitor and manage system resources. These commands are essential for ensuring the stability and efficiency of scientific simulations and computations.

5. Version Control: Discover the power of version control with commands such as git (a distributed system for tracking changes in source code) and svn (the Subversion client, for working with a centralized repository). Version control is crucial in collaborative scientific research, enabling researchers to track, manage, and collaborate on code and data effectively.

In conclusion, having a firm grasp of essential Linux commands empowers scientists and researchers to navigate their Linux environment seamlessly. By mastering key commands for file manipulation, data extraction and analysis, text file manipulation, process monitoring and management, as well as version control, researchers can streamline their scientific workflow and unleash the full potential of Linux for scientific exploration.
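The text-processing commands listed above can also be mirrored in a short script, which helps when a one-off shell pipeline grows into a repeatable analysis step. The following is a minimal sketch, not a replacement for the commands themselves: the helper names and the sample log are invented for illustration.

```python
import re
from typing import Iterable, List


def grep(pattern: str, lines: Iterable[str]) -> List[str]:
    """Return the lines that contain a match for the regular expression (like grep)."""
    regex = re.compile(pattern)
    return [line for line in lines if regex.search(line)]


def column(lines: Iterable[str], index: int) -> List[str]:
    """Return one whitespace-separated field per line (like awk '{print $N}', but zero-based)."""
    return [line.split()[index] for line in lines]


def head(lines: List[str], n: int = 10) -> List[str]:
    """Return the first n lines (like head -n)."""
    return lines[:n]


def tail(lines: List[str], n: int = 10) -> List[str]:
    """Return the last n lines (like tail -n)."""
    return lines[-n:] if n > 0 else []


# Hypothetical instrument log, invented for this example.
LOG = """\
run01 sensorA 20.5 OK
run01 sensorB 19.0 OK
run02 sensorA 21.5 WARN
run02 sensorB 18.0 OK
""".splitlines()

sensor_a = grep(r"\bsensorA\b", LOG)                 # keep only sensorA rows
readings = [float(v) for v in column(sensor_a, 2)]   # third field holds the reading
print(readings)
print(tail(LOG, 1))
```

For quick exploration the shell commands remain the shorter route; a script like this mainly pays off when the same extraction has to be rerun and reviewed later.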

Exploring the Installation of Scientific Software on the Linux Operating System

Scientific research often requires the use of specialized software to analyze data, simulate experiments, and perform complex calculations. This section aims to provide a comprehensive overview of the process of installing scientific software on the Linux operating system.

Understanding the Dependencies

Before proceeding with the installation of scientific software on Linux, it is crucial to understand the dependencies associated with each tool. These dependencies may include specific libraries, compilers, or other software packages that must be installed prior to using the desired scientific software. Failure to meet these requirements can result in installation errors or runtime issues.
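One way to catch missing dependencies early is a small pre-flight check run before installation. The sketch below assumes the dependencies can be expressed as command names on PATH and importable Python modules; the specific names in the lists are hypothetical requirements for an imaginary tool.

```python
import importlib.util
import shutil
from typing import List


def missing_commands(names: List[str]) -> List[str]:
    """Return the commands from `names` that are not found on PATH."""
    return [name for name in names if shutil.which(name) is None]


def missing_modules(names: List[str]) -> List[str]:
    """Return the top-level Python modules from `names` that cannot be located."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Hypothetical requirements, chosen only to illustrate the check.
required_commands = ["gcc", "make", "gfortran"]
required_modules = ["json", "sqlite3"]

print("missing commands:", missing_commands(required_commands))
print("missing modules:", missing_modules(required_modules))
```

A check like this only confirms that something with the right name is present. It does not verify versions, so a tool's documented minimum versions still need to be compared by hand or with a further script.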

Exploring Package Management Systems

Linux distributions typically employ package management systems, such as apt-get, yum, or dnf, to simplify the installation and management of software. This section will delve into the usage of these package managers and demonstrate how to search, install, and update scientific software packages efficiently.
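As a rough illustration of how the same install request maps onto different package managers, the sketch below detects which of the managers named above is present and builds the matching command without running it. The function names are invented for this example, and package names often differ between distributions, so `gnuplot` here is only a placeholder.

```python
import shutil
from typing import List, Optional

# Install invocations for the package managers named in the text.
INSTALL_COMMANDS = {
    "apt-get": ["sudo", "apt-get", "install", "-y"],
    "dnf": ["sudo", "dnf", "install", "-y"],
    "yum": ["sudo", "yum", "install", "-y"],
}


def detect_manager() -> Optional[str]:
    """Return the first supported package manager found on PATH, or None."""
    # First match wins; the order is an arbitrary choice for this sketch.
    for name in ("apt-get", "dnf", "yum"):
        if shutil.which(name):
            return name
    return None


def install_command(manager: str, packages: List[str]) -> List[str]:
    """Build (but do not run) the command that would install `packages`."""
    if manager not in INSTALL_COMMANDS:
        raise ValueError(f"unsupported package manager: {manager}")
    return INSTALL_COMMANDS[manager] + list(packages)


manager = detect_manager()
if manager is None:
    print("no supported package manager found")
else:
    print(" ".join(install_command(manager, ["gnuplot"])))
```

Printing the command instead of executing it keeps the sketch safe to run anywhere; passing the resulting list to `subprocess.run` would perform the actual installation.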

Compiling from Source

While package managers provide a convenient way of installing software, some scientific tools may not be available in pre-compiled packages. In such cases, it becomes necessary to compile the software from source code. This section will guide you through the process of obtaining the source code, configuring dependencies, compiling, and installing the desired scientific software.
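The compile-from-source workflow described above often follows a configure, build, install sequence. The sketch below assumes a project that ships a `configure` script and a Makefile, which is common but by no means universal (CMake and Meson projects use different commands), so the project's own build instructions take precedence. The function names and the install prefix are hypothetical.

```python
import os
import subprocess
from typing import List, Optional


def build_steps(prefix: str, jobs: Optional[int] = None) -> List[List[str]]:
    """Return the usual configure / make / make install commands for `prefix`."""
    jobs = jobs or os.cpu_count() or 1
    return [
        ["./configure", f"--prefix={prefix}"],  # check dependencies, set install location
        ["make", f"-j{jobs}"],                  # compile using `jobs` parallel jobs
        ["make", "install"],                    # copy the results into `prefix`
    ]


def build(source_dir: str, prefix: str, dry_run: bool = True) -> None:
    """Print each step and, unless dry_run is set, run it inside `source_dir`."""
    for step in build_steps(prefix):
        print("$", " ".join(step))
        if not dry_run:
            subprocess.run(step, cwd=source_dir, check=True)


# Dry run only: nothing is compiled or installed here.
build("/path/to/source", prefix="/opt/example-tool")
```

Choosing a prefix inside your home directory avoids the need for administrator rights, which is often the practical option on shared research machines.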

Exploring Virtual Environments

Scientific researchers often work with multiple projects and software versions simultaneously. Virtual environments, such as Conda or Virtualenv, offer a solution by providing isolated environments with specific software configurations. This section will discuss the benefits of using virtual environments and the process of setting them up for scientific research purposes.
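To make the idea of an isolated environment concrete, here is a minimal sketch using Python's standard-library `venv` module. This is a stand-in, not Conda or Virtualenv themselves: it isolates Python packages only, whereas Conda can also manage non-Python software. The directory name is hypothetical.

```python
import os
import tempfile
import venv


def create_environment(env_dir: str) -> str:
    """Create an isolated Python environment and return the path to its config file."""
    # with_pip=False keeps this sketch fast and offline; real projects usually want pip.
    venv.EnvBuilder(with_pip=False).create(env_dir)
    return os.path.join(env_dir, "pyvenv.cfg")


# Create a throwaway environment in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    config_path = create_environment(os.path.join(tmp, "project-env"))
    print(os.path.isfile(config_path))
```

In day-to-day use the environment would live next to the project and be activated from the shell before installing that project's packages, so that each project keeps its own versions.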

Verifying the Installation

After successfully installing scientific software, it is essential to verify its functionality and integration within the Linux environment. This section will cover techniques for testing the installed software and ensuring its compatibility with the existing tools and libraries.
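A simple form of verification is a smoke test: run the installed program with a harmless argument and confirm that it exits cleanly. The sketch below is one possible shape for such a check; the helper name is invented, and the Python interpreter is used as the program under test only because it is guaranteed to be present when the script runs.

```python
import subprocess
import sys
from typing import List, Tuple


def smoke_test(command: List[str], timeout: float = 30.0) -> Tuple[bool, str]:
    """Run `command`; return (succeeded, combined output or error text)."""
    try:
        result = subprocess.run(
            command, capture_output=True, text=True, timeout=timeout
        )
    except (OSError, subprocess.TimeoutExpired) as exc:
        # Covers a missing executable as well as a hung program.
        return False, str(exc)
    return result.returncode == 0, (result.stdout + result.stderr).strip()


ok, output = smoke_test([sys.executable, "--version"])
print(ok, output)
```

A passing smoke test shows that the program starts, not that it computes correct results. Running the test suite or example inputs shipped with the software is the stronger check where they exist.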

By following the steps and recommendations outlined in this guide, researchers will gain a solid foundation in the installation of scientific software on the Linux operating system. With the ability to successfully install and manage such software, scientists can streamline their research processes and efficiently leverage the power of Linux for scientific discovery and innovation.

Enhancing Collaboration and Managing Versions in Scientific Research with Linux

In scientific research, effective collaboration and precise version control are paramount for ensuring the accuracy, reproducibility, and continued progress of studies. This section delves into the significance of version control and collaboration in scientific research conducted using Linux-based systems, emphasizing the immense benefits and specialized tools available for researchers.

Promoting Openness and Reproducibility: Version control systems play a pivotal role in enabling researchers to document changes made to their research projects. By using Linux-based version control tools, scientists can meticulously track alterations to their code, datasets, and analytical pipelines over time, ensuring transparency and promoting reproducibility. Moreover, these version control systems facilitate collaboration among researchers, allowing them to seamlessly share their work, collaborate on projects, and merge different contributions into a unified research outcome.

Managing Complexity and Maintaining Consistency: Scientific research often involves complex algorithms, intricate codebases, and vast datasets. As such, managing this complexity and maintaining consistency throughout different stages of a project can be challenging. Linux provides researchers with robust version control systems that allow them to organize their work, manage dependencies, and keep track of updates and modifications. These systems enable researchers to easily revert to previous versions, recover lost work, and synchronize their projects across multiple platforms and team members.

Utilizing Specialized Tools: A number of mature tools for version control and collaboration run well on Linux. One such tool is Git, a widely used distributed version control system that allows researchers to track changes, collaborate with peers, and manage different branches of their project. Additionally, services like GitLab, Bitbucket, and GitHub provide web-based platforms for hosting and sharing research projects, with support for collaboration, issue tracking, and documentation. Linux also supports various integrated development environments (IDEs) and text editors, many of which integrate directly with version control.
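The track-and-revert workflow described in this section can be sketched by driving the `git` command line from a script. The example assumes `git` is installed and skips itself otherwise; the helper names, file name, and commit identity are placeholders invented for illustration.

```python
import shutil
import subprocess
import tempfile
from pathlib import Path


def git(repo: Path, *args: str) -> str:
    """Run a git command inside `repo` and return its trimmed standard output."""
    result = subprocess.run(
        ["git", "-C", str(repo), *args],
        check=True, capture_output=True, text=True,
    )
    return result.stdout.strip()


def snapshot(repo: Path, filename: str, content: str, message: str) -> str:
    """Write `content` to `filename`, commit it, and return the new commit id."""
    (repo / filename).write_text(content)
    git(repo, "add", filename)
    # The identity is passed per command so the sketch never touches global config.
    git(repo, "-c", "user.name=Example Researcher",
        "-c", "user.email=researcher@example.org",
        "commit", "-m", message)
    return git(repo, "rev-parse", "HEAD")


if shutil.which("git"):
    with tempfile.TemporaryDirectory() as tmp:
        repo = Path(tmp)
        git(repo, "init")
        first = snapshot(repo, "analysis.py", "print('v1')\n", "Add analysis script")
        snapshot(repo, "analysis.py", "print('v2')\n", "Refine analysis")
        # Restore the file as it was in the first commit.
        git(repo, "checkout", first, "--", "analysis.py")
        print((repo / "analysis.py").read_text(), end="")
```

In practice most researchers type these commands directly in a terminal. Scripting them mainly helps when snapshots should be taken automatically, for example at the end of each pipeline run.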

Embracing Automation and Continuous Integration: Linux-based systems empower researchers to automate various aspects of their research workflow, including version control and continuous integration. By leveraging tools such as Jenkins, Travis CI, and GitLab CI/CD, researchers can automatically build, test, and deploy their research projects, ensuring that changes are thoroughly vetted and integrated. This level of automation minimizes the risk of errors and enhances efficiency, enabling researchers to focus their energy on the scientific aspects of their work.

Conclusion: Version control and collaboration are integral components of scientific research utilizing Linux. By harnessing the power of version control systems and specialized tools, researchers can streamline their workflows, promote transparency, and facilitate collaborative scientific breakthroughs. Embracing Linux-based solutions for version control and collaboration can ultimately lead to more robust and impactful scientific research outcomes.

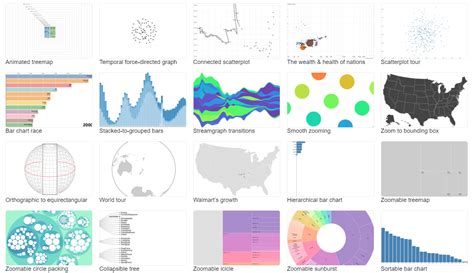

Data Analysis and Visualization Tools in the World of Linux

In the realm of Linux, users are presented with a wide range of robust data analysis and visualization tools to aid in their scientific research endeavors. These tools serve as invaluable assets, empowering researchers to efficiently process and interpret their data, ultimately unlocking valuable insights and actionable conclusions.

One such tool is the R programming language, which provides a comprehensive suite of statistical and graphical techniques for data analysis. With its extensive library of packages, R enables users to manipulate, model, and visualize data within a single environment. Additionally, R's flexibility and open-source nature allow it to be integrated with other tools and languages, widening the options for data exploration and analysis.

Another popular choice for data analysis in the Linux ecosystem is Python. Esteemed for its simplicity and versatility, Python boasts an array of powerful libraries, such as NumPy and Pandas, that facilitate data manipulation and analysis. Furthermore, Python's user-friendly syntax and extensive community support make it an ideal choice for researchers looking to streamline their data analysis workflows.
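As a small taste of this kind of analysis, the sketch below computes a per-group mean from CSV data. It deliberately uses only the standard library so that it runs without extra packages; with Pandas, a roughly equivalent result would come from grouping a DataFrame by the key column and taking the mean. The measurements and column names are invented for this example.

```python
import csv
import io
import statistics
from collections import defaultdict
from typing import Dict, List

# Hypothetical measurements, invented for this example.
CSV_TEXT = """\
group,value
control,20.0
control,22.0
treated,30.0
treated,31.0
treated,32.0
"""


def group_means(csv_text: str, key: str, value: str) -> Dict[str, float]:
    """Return the mean of column `value` for each distinct entry in column `key`."""
    groups: Dict[str, List[float]] = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        groups[row[key]].append(float(row[value]))
    return {name: statistics.mean(values) for name, values in groups.items()}


means = group_means(CSV_TEXT, "group", "value")
print(means)
```

For datasets that no longer fit comfortably in plain lists, NumPy and Pandas become the more practical choice, since they store columns compactly and vectorize the arithmetic.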

When it comes to visualizing data, Linux users can rely on tools such as gnuplot and Matplotlib. gnuplot, a command-line tool, offers a wide range of plotting options and customization features for producing publication-ready graphs. Matplotlib, a Python library, provides a flexible interface for creating both static and interactive plots, making it a favored choice for researchers seeking to communicate their findings effectively.

Furthermore, for those dealing with complex and voluminous datasets, tools like Tableau and Gephi stand as powerful allies. Tableau, a commercial data visualization platform, offers an intuitive drag-and-drop interface for building interactive visualizations. Note that Tableau Desktop targets Windows and macOS, so Linux users typically reach it through Tableau Server or a web browser. Gephi, an open-source application, specializes in network analysis and visualization, enabling scientists to uncover intricate patterns and relationships within their data.

In conclusion, the Linux world offers a rich ecosystem of data analysis and visualization tools that cater to the diverse needs of scientific researchers. Whether through languages like R and Python or specialized tools like gnuplot, Matplotlib, Tableau, and Gephi, Linux users are equipped with the means to analyze and present their data effectively.

Maximizing Computational Power: Harnessing High-Performance Computing for Scientific Endeavors

In the domain of scientific research, the pursuit of knowledge often necessitates the utilization of vast computational resources. As researchers endeavor to uncover novel insights and embark on complex simulations, the demand for high-performance computing (HPC) becomes increasingly crucial. This section delves into the realm of HPC on Linux, shedding light on its pivotal role in scientific endeavors and exploring various strategies to utilize its immense power to maximize computational performance.

Embracing Cutting-Edge Technology:

High-performance computing brings forth a multitude of innovative concepts and techniques that enable scientists to push the boundaries of computational capabilities. Harnessing the power of parallel computing, researchers can enhance their simulations and data analysis, accelerating scientific progress in fields ranging from physics and chemistry to biology and climate research.

Parallel Computing:

At the core of HPC lies parallel computing, a technique that allows multiple computations to be executed simultaneously. By partitioning complex problems into smaller, manageable tasks that can be solved concurrently, researchers can tap into the immense processing power of multiple cores or even distributed computing systems. This approach not only expedites data processing but also enables the exploration of more intricate models and simulations.
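The partition-and-combine idea described above can be sketched on a single machine with Python's `concurrent.futures`. The example splits a sum of squares into contiguous chunks and adds up the partial results; the function names are invented, and the workload is deliberately trivial. Real HPC codes typically use MPI or similar frameworks across many nodes, which this sketch does not attempt to model.

```python
from concurrent.futures import Executor, ProcessPoolExecutor, ThreadPoolExecutor
from typing import Callable, List, Tuple


def chunk_ranges(n: int, parts: int) -> List[Tuple[int, int]]:
    """Split range(n) into at most `parts` contiguous (start, stop) pieces."""
    parts = max(1, min(parts, n)) if n > 0 else 1
    step, extra = divmod(n, parts)
    ranges, start = [], 0
    for i in range(parts):
        stop = start + step + (1 if i < extra else 0)  # spread the remainder
        ranges.append((start, stop))
        start = stop
    return ranges


def partial_sum(bounds: Tuple[int, int]) -> int:
    """Sum of squares over one chunk; this is the unit of work sent to a worker."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def sum_of_squares(
    n: int,
    workers: int = 4,
    executor_factory: Callable[..., Executor] = ProcessPoolExecutor,
) -> int:
    """Compute sum(i*i for i in range(n)) by combining per-chunk results."""
    with executor_factory(max_workers=workers) as pool:
        return sum(pool.map(partial_sum, chunk_ranges(n, workers)))


# Quick check with threads, which need no process start-up.
# For CPU-bound work, keep the default process pool instead.
print(sum_of_squares(10, workers=3, executor_factory=ThreadPoolExecutor))
```

When using the default process pool in a script, call `sum_of_squares` from inside an `if __name__ == "__main__":` block so that worker processes can import the module safely.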

Optimizing Algorithms for High-Performance:

In the quest for efficient computation, optimizing algorithms for HPC plays a crucial role. Researchers need to carefully analyze their workflows and algorithms to identify potential bottlenecks and restructure them to exploit the full potential of HPC systems. By utilizing parallel algorithms, task-based approaches, and adopting efficient data structures, scientists can significantly improve the scalability, speed, and performance of their computations.

Parallel Algorithms:

Parallel algorithms are specifically designed to exploit the capabilities of parallel computing architectures. By cleverly dividing tasks and data across multiple processors or nodes, these algorithms enable researchers to distribute work efficiently and reduce computational time. Parallel libraries and frameworks further facilitate the development and implementation of these algorithms, empowering scientists to leverage HPC resources with relative ease.

Optimal Utilization of HPC Resources:

Effectively harnessing the power of HPC requires careful resource management and optimization. Researchers should consider various factors, such as workload distribution, memory usage, and I/O operations, to ensure optimal performance. Techniques like load balancing, data caching, and minimizing data movement can enhance the overall efficiency and utilization of HPC systems, enabling researchers to tackle larger and more complex computational challenges.

Load Balancing:

Load balancing, a technique that distributes work evenly across multiple computing resources, is essential in HPC environments. By allocating computational tasks efficiently and preventing resource underutilization or overload, load balancing enhances the overall performance and minimizes computational bottlenecks. Sophisticated load-balancing algorithms and tools have been developed to assist researchers in achieving optimal resource allocation and workload distribution.
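One simple static approach to load balancing is the greedy "longest task first" heuristic: sort tasks by estimated cost and always hand the next one to the currently least-loaded worker. The sketch below illustrates that heuristic only. It assumes task costs are known in advance, which production schedulers often cannot assume, and the function name is invented.

```python
import heapq
from typing import List


def balance(task_costs: List[float], workers: int) -> List[List[float]]:
    """Assign tasks to workers greedily, largest cost first, least-loaded worker next."""
    if workers < 1:
        raise ValueError("workers must be at least 1")
    assignments: List[List[float]] = [[] for _ in range(workers)]
    heap = [(0.0, w) for w in range(workers)]  # (current load, worker index)
    heapq.heapify(heap)
    for cost in sorted(task_costs, reverse=True):
        load, w = heapq.heappop(heap)          # least-loaded worker so far
        assignments[w].append(cost)
        heapq.heappush(heap, (load + cost, w))
    return assignments


plan = balance([7, 5, 4, 3, 1], workers=2)
print(plan)
print([sum(tasks) for tasks in plan])
```

In this small case both workers end up with the same total load. The heuristic does not guarantee an optimal split in general, but it is cheap to compute and usually close.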

The Future of High-Performance Computing:

The landscape of high-performance computing continues to evolve at a rapid pace, paving the way for groundbreaking scientific advancements. As researchers uncover new avenues for innovation and simulation complexity grows exponentially, staying abreast of the latest trends and developments in HPC becomes indispensable. This section concludes with an exploration of emerging technologies and trends shaping the future of HPC, empowering scientists with the tools and capabilities necessary to push the boundaries of scientific research.

Emerging Technologies:

From the advent of quantum computing and advanced AI algorithms to the proliferation of cloud-based HPC solutions, emerging technologies are revolutionizing the way scientific research is conducted. Adapting to these advancements and embracing new paradigms enables researchers to extract valuable insights from vast data sets, facilitate collaboration, and expedite scientific discoveries in an increasingly interconnected and data-driven world.

Tips and Tricks for Maximizing Efficiency in Scientific Exploration on Linux

In this section, we will explore several valuable strategies and techniques to enhance productivity and optimize the scientific research process on the Linux operating system. By leveraging the vast array of powerful tools and customizable features that Linux offers, scientists can streamline their workflows, analyze data more effectively, and achieve higher levels of efficiency in their research endeavors.

1. Automation and Scripting: Harness the power of Linux's command-line interface and scripting capabilities to automate repetitive tasks, reduce manual errors, and save time. By creating scripts, researchers can perform complex data analysis, schedule tasks, and automate data retrieval and processing, allowing for focus on the critical aspects of scientific inquiry.

2. Version Control: Employ a version control system like Git to efficiently manage and track changes in research code and files. By using branching and merging, researchers can collaborate through a shared repository and safely experiment with different analysis approaches without the risk of losing valuable work.

3. Remote Computing: Take advantage of Linux's robust networking capabilities to perform computationally intensive tasks on high-performance remote servers or clusters. By utilizing tools such as SSH (Secure Shell) and parallel computing frameworks, researchers can distribute workload, access powerful computational resources, and expedite complex simulations or calculations.

4. Data Visualization: Enhance the understanding and presentation of research findings by utilizing the extensive range of data visualization tools available on Linux. From tools like Matplotlib and gnuplot to advanced software such as ParaView and VisIt, researchers can effectively communicate their scientific results through clear and insightful graphics.

5. Package Management: Leverage Linux package managers like apt-get and yum to seamlessly install, update, and manage scientific software packages and libraries. These package management systems simplify the process of acquiring and maintaining cutting-edge tools, ensuring researchers have access to the latest advancements in their respective fields.

6. Collaborative Documentation: Use wiki systems for shared research notes and LaTeX for writing and compiling scientific papers. Wikis support version tracking and shared editing, while LaTeX sources are plain text and therefore work well with version control, fostering effective knowledge exchange among researchers.

7. Customize and Optimize Your Environment: Linux's open-source nature allows scientists to tailor their research environment to suit their specific needs. By combining customizable window managers, powerful text editors like Vim or Emacs, and interactive environments such as Jupyter Notebook or RStudio, researchers can create an efficient and personalized workspace that enhances their scientific productivity.
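As an illustration of the automation tip in the list above, the sketch below summarizes every data file in a directory in one pass, which avoids opening files one by one. It assumes CSV files whose first line is a header; the function name, file names, and contents are invented for this example.

```python
import tempfile
from pathlib import Path
from typing import Dict


def count_records(data_dir: Path, pattern: str = "*.csv") -> Dict[str, int]:
    """Return the number of non-empty data lines (header excluded) per matching file."""
    summary = {}
    for path in sorted(data_dir.glob(pattern)):
        lines = [line for line in path.read_text().splitlines() if line.strip()]
        summary[path.name] = max(len(lines) - 1, 0)  # first line is assumed to be a header
    return summary


# Build a throwaway directory with hypothetical result files.
with tempfile.TemporaryDirectory() as tmp:
    data_dir = Path(tmp)
    (data_dir / "run1.csv").write_text("t,x\n0,1.0\n1,1.5\n")
    (data_dir / "run2.csv").write_text("t,x\n0,2.0\n")
    (data_dir / "notes.txt").write_text("not data\n")
    print(count_records(data_dir))
```

A script like this can then be run on a schedule, for example from cron, so that summaries stay current without manual effort.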

By adopting these tips and tricks, scientists can unlock the full potential of Linux for scientific research, empowering them to tackle complex challenges and make significant contributions to their respective fields.

FAQ

What are the advantages of using Linux for scientific research?

Linux offers numerous advantages for scientific research. Firstly, it provides a highly customizable and flexible environment, allowing researchers to tailor their operating system to their specific needs. Secondly, Linux is known for its stability, security, and performance, which are essential for scientific computing tasks. Additionally, Linux distributions offer a wide range of scientific software and tools, as well as excellent support from the open-source community. Finally, Linux's command-line interface enables efficient workflows and automation, crucial for scientific research.

Can Linux be used for various scientific domains?

Absolutely! Linux is widely used in various scientific domains, including but not limited to physics, biology, chemistry, bioinformatics, data science, and engineering. Linux's flexibility allows researchers in these fields to customize their software environment, integrate specialized tools, and efficiently process and analyze data. Many scientific software packages are specifically developed for Linux, making it a popular choice among researchers worldwide.

Is it difficult to transition from another operating system to Linux for scientific research?

The difficulty of transitioning to Linux for scientific research largely depends on the researcher's prior experience and technical skills. For users already familiar with Linux or other Unix-like systems, the transition is likely to be smoother. However, even for those coming from Windows or macOS, extensive online resources, tutorials, and user-friendly Linux distributions reduce the learning curve. With determination and a willingness to explore, researchers can adapt to Linux and its scientific tools relatively quickly.