Containerization makes software development and deployment more repeatable. Docker, a leading containerization platform, packages an application together with its dependencies so that it runs the same way on every machine. For Windows users, DockerOperator in Apache Airflow brings those containers into scheduled workflows.

DockerOperator lets an Airflow task start a Docker container, run a command inside it, and report the result back to the workflow. Because each container carries its own application and dependencies, tasks run in independent environments, which speeds up both development and deployment.

This step-by-step guide covers how to use DockerOperator in Airflow on Windows operating systems. It is written for seasoned developers as well as readers who are new to containerization.

Setting Up Apache Airflow with Docker on Windows: A Comprehensive Overview

This section gives an overview of configuring Apache Airflow with Docker on the Windows operating system, including the components required and the order in which they are set up.

Apache Airflow is a powerful open-source platform that enables users to programmatically author, schedule, and monitor complex workflows. Docker, on the other hand, provides an efficient and scalable way to package and deploy applications in isolated environments. Combining these two technologies can result in a robust and reliable workflow management system.

To set up Apache Airflow with Docker on Windows, we will cover the installation of Docker Desktop, configuring Docker images and containers, and configuring Apache Airflow within the Docker environment. We will also discuss the benefits of using Docker for Apache Airflow development and deployment on Windows.

This section is aimed at data engineers, data scientists, and developers who want a practical way to manage workflows. By the end of this guide, you will have Apache Airflow running in a Dockerized environment on your Windows machine.

Note: Prior experience with Docker and the Windows environment helps in following the instructions in this section. If you are new to either, the guide still gives you enough of a foundation to get started with Apache Airflow using Docker on Windows.

Step 1: Configuring Docker on a Windows Environment

This section outlines the steps to set up Docker on a Windows operating system. Docker runs applications in isolated containers; once it is installed and configured on your Windows machine, you can use it to manage and deploy your Airflow workflows.

1. Install Docker Desktop:

Begin by downloading and installing Docker Desktop, which is the recommended version of Docker for Windows users. Docker Desktop includes everything you need to develop, build, and deploy Docker-based applications on your Windows system.

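2. Enable WSL 2 or Hyper-V:

Docker Desktop runs Linux containers inside a lightweight virtual machine, so it needs either the WSL 2 backend or Hyper-V. Confirm that hardware virtualization is enabled in your system's firmware, then turn on the matching Windows feature (Windows Subsystem for Linux together with Virtual Machine Platform, or Hyper-V) in the Windows Features settings.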

3. Configure Docker settings:

Once Docker Desktop is installed, access its settings and configure the necessary options. This includes allocating system resources, defining network settings, and adjusting other parameters according to your specific requirements.

4. Verify Docker installation:

To validate that Docker is installed correctly, open a Command Prompt or PowerShell window and run the command docker version. The output lists the client and server (engine) versions. If the client version appears but the server section reports an error, the command-line tool is installed but Docker Desktop is not running.
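The same check can be scripted, which is handy before starting Airflow. The sketch below is one possible approach that uses only the Python standard library; the function name docker_server_version and the 15-second timeout are illustrative choices, not part of Docker or Airflow.

```python
import shutil
import subprocess
from typing import Optional


def docker_server_version(timeout_s: float = 15.0) -> Optional[str]:
    """Return the Docker Engine version, or None if Docker is not usable."""
    # The CLI must be on PATH before anything else can work.
    if shutil.which("docker") is None:
        return None
    try:
        # --format prints only the server (engine) version.
        result = subprocess.run(
            ["docker", "version", "--format", "{{.Server.Version}}"],
            capture_output=True,
            text=True,
            timeout=timeout_s,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    # A non-zero exit code usually means the daemon is not reachable.
    if result.returncode != 0:
        return None
    return result.stdout.strip() or None


if __name__ == "__main__":
    version = docker_server_version()
    print(f"Docker Engine {version}" if version else "Docker is not reachable")
```

A return value of None means either that the docker command is missing or that the engine is not running, so the next thing to check is whether Docker Desktop has been started.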

By completing the steps above, you will have successfully set up and configured Docker on your Windows environment. With Docker in place, you can now proceed to the next steps to leverage the DockerOperator functionality within Airflow for efficient workflow management.

Step 2: Installation and Configuration of Apache Airflow

In this section, we set up and configure Apache Airflow for your environment. Airflow is developed for POSIX systems and is not supported natively on Windows, so on a Windows machine it is installed inside a Linux container managed by Docker Desktop or inside a WSL 2 distribution.

First, we walk through the installation, making sure the necessary dependencies and packages are in place. For this guide that includes Airflow itself and the apache-airflow-providers-docker package, which supplies DockerOperator, along with any additional prerequisites.

Once the installation is complete, we will move on to the configuration phase. Here, you will learn how to customize and fine-tune Apache Airflow to best suit your needs. This will involve adjusting various settings and parameters, such as specifying the database backend, enabling authentication, and configuring the scheduler.
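When Airflow runs in a container, settings such as the executor and the database backend are commonly supplied as environment variables named AIRFLOW__{SECTION}__{KEY}. The sketch below only builds those names; the values are placeholders rather than recommendations, and the [database] section name assumes Airflow 2.3 or later.

```python
def airflow_env_var(section: str, key: str) -> str:
    """Build the environment variable name Airflow reads for [section] key."""
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


# Placeholder values for illustration; replace them with your own settings.
settings = {
    ("core", "executor"): "LocalExecutor",
    ("core", "load_examples"): "False",
    # Assumes Airflow 2.3+, where the connection string lives under [database].
    ("database", "sql_alchemy_conn"): "postgresql+psycopg2://user:password@postgres/airflow",
}

# Map each (section, key) pair to its environment variable name.
env = {airflow_env_var(section, key): value for (section, key), value in settings.items()}

if __name__ == "__main__":
    for name, value in env.items():
        print(f"{name}={value}")
```

The resulting name and value pairs can be placed in the environment of the Airflow containers, for example through an env file.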

Furthermore, you will discover how to integrate Apache Airflow with external systems and services, such as databases, cloud storage, and notification services. This will allow you to leverage the full potential of Apache Airflow and enhance its capabilities within your environment.

Lastly, we will provide tips and best practices for managing, monitoring, and troubleshooting your Apache Airflow installation. This will ensure smooth operation and enable you to quickly identify and resolve any issues that may arise.

By the end of this section, you will have a fully functional and well-configured Apache Airflow setup, ready to tackle your data processing and workflow management needs efficiently.

Step 3: Unveiling DockerOperator Functionality in Airflow

In this section, we will delve into the inner workings of the DockerOperator feature within Airflow, shedding light on its capabilities and providing an understanding of how it can enhance your workflow processes.

We will examine what DockerOperator does when a task runs: it connects to a Docker daemon, pulls the image if it is not already present, starts a container, runs the given command, forwards the container's output to the task log, and marks the task as failed if the command exits with a non-zero status. Understanding this sequence makes it easier to use DockerOperator for Dockerized tasks within your Airflow environment.

Furthermore, we will discuss the advantages and benefits that DockerOperator brings to your workflow orchestration, such as containerization, scalability, and reproducibility. By grasping the underlying concepts and benefits, you will be able to unleash the true potential of DockerOperator for your specific use cases.

Throughout this section, we will provide practical examples and code snippets illustrating the usage of DockerOperator, allowing you to follow along and apply the knowledge gained in your own Airflow projects. By the end of this section, you will be equipped with the necessary knowledge to incorporate DockerOperator seamlessly into your workflow pipelines, boosting productivity and efficiency.
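As a first illustration, here is a minimal sketch of a single DockerOperator task. It assumes the apache-airflow-providers-docker package is installed. The task name, image, and command are hypothetical examples, and the socket URL assumes that the Docker socket is available to the Airflow worker.

```python
# Plain keyword arguments, kept separate so they can be inspected without Airflow.
HELLO_TASK_KWARGS = {
    "task_id": "hello_from_container",   # hypothetical task name
    "image": "python:3.11-slim",         # example image; any image with Python works
    "command": ["python", "-c", "print('hello from Docker')"],
    "docker_url": "unix://var/run/docker.sock",  # daemon endpoint (assumed reachable)
    "network_mode": "bridge",
    "environment": {"GREETING": "hello"},  # passed to the container as env vars
    "mount_tmp_dir": False,  # skip mounting a host temp dir into the container
}


def make_hello_task(dag):
    """Create the DockerOperator task and attach it to the given DAG."""
    # Imported lazily so this module loads even where the provider is not installed.
    from airflow.providers.docker.operators.docker import DockerOperator

    return DockerOperator(dag=dag, **HELLO_TASK_KWARGS)
```

Keeping the arguments in a plain dictionary is only a convenience for this sketch; passing them directly to DockerOperator works just as well.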

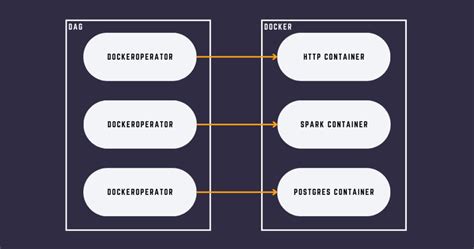

Step 4: Creating a DAG using DockerOperator

Once you have set up the necessary environment and installed the required dependencies, it's time to create a DAG using DockerOperator. In this section, we will explore the step-by-step process of creating a DAG that leverages DockerOperator to execute tasks within Docker containers.

The main idea behind using DockerOperator is to provide a reliable and isolated environment to run tasks in Airflow. With Docker containers, you can encapsulate all the dependencies, libraries, and configurations required for each task, ensuring consistency and reproducibility.

To create a DAG using DockerOperator, you need to follow several key steps:

| Step | Description |
| --- | --- |
| Step 1 | Define the required Docker image(s) for your tasks. These images contain the software and libraries needed to execute the tasks. |
| Step 2 | Create a DockerOperator instance for each task in your DAG. Configure the operator with the appropriate Docker image, command, environment variables, volumes, and other necessary parameters. |
| Step 3 | Define the dependencies between tasks using the set_upstream() and set_downstream() methods, or the equivalent >> and << operators. This ensures that tasks are executed in the correct order. |
| Step 4 | Specify the schedule_interval for the DAG (named schedule from Airflow 2.4 onward), which determines how often the DAG is triggered based on time or other conditions. |
| Step 5 | Save the DAG file in Airflow's DAGs folder and start the Airflow scheduler. This enables the DAG to be executed based on the defined schedule. |

By following these steps, you can easily create a DockerOperator DAG that utilizes the power of Docker containers to execute tasks reliably and efficiently.
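The steps above can be sketched as a small DAG factory. This is an illustrative outline, not a definitive implementation: it assumes Airflow 2.4 or later (for the schedule argument) with the Docker provider installed, and the DAG name, image, task names, and commands are all hypothetical.

```python
from datetime import datetime

DAG_ID = "docker_operator_example"   # hypothetical DAG name
IMAGE = "python:3.11-slim"           # Step 1: image shared by both tasks

# Task name -> command, listed in execution order.
TASK_COMMANDS = {
    "extract": ["python", "-c", "print('extracting')"],
    "report": ["python", "-c", "print('reporting')"],
}


def build_dag():
    """Build a DAG whose tasks each run in their own Docker container."""
    # Imported lazily so the module loads even where Airflow is not installed.
    from airflow import DAG
    from airflow.providers.docker.operators.docker import DockerOperator

    with DAG(
        dag_id=DAG_ID,
        start_date=datetime(2024, 1, 1),
        schedule="@daily",   # Step 4; releases before 2.4 use schedule_interval
        catchup=False,
    ) as dag:
        # Step 2: one DockerOperator per task.
        tasks = [
            DockerOperator(
                task_id=task_id,
                image=IMAGE,
                command=command,
                mount_tmp_dir=False,
            )
            for task_id, command in TASK_COMMANDS.items()
        ]
        # Step 3: chain the tasks so each runs after the previous one.
        for upstream, downstream in zip(tasks, tasks[1:]):
            upstream >> downstream
    return dag
```

In an actual DAG file you would add a module-level line such as dag = build_dag() and save the file in the DAGs folder (Step 5) so that the scheduler can discover it.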

Step 5: Running Docker-based Tasks on the Windows Platform

In this section, we will explore the process of executing tasks utilizing the DockerOperator within a Windows environment. By leveraging the power of Docker containers, we can seamlessly run different tasks on Windows machines, enhancing the overall efficiency and reliability of our workflow.

Before executing DockerOperator tasks on Windows, it is essential to ensure that Docker is properly installed and configured on your machine. Once the setup is complete, we can proceed with defining and executing our tasks using the DockerOperator.

The DockerOperator allows us to specify the Docker image, Docker command, and different parameters required for executing the desired task. By encapsulating our tasks in Docker containers, we can achieve better isolation and reproducibility, making it easier to manage dependencies and streamline deployment.

When running DockerOperator tasks on the Windows platform, keep platform-specific behavior in mind. Docker Desktop runs Linux containers by default, and an image built for Linux cannot run while Docker Desktop is switched to Windows containers mode (the reverse holds for Windows-based images), so check which platform an image targets before using it in a task.
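Another platform-specific detail is how the task reaches the Docker daemon. The sketch below shows one way to choose a value for DockerOperator's docker_url parameter, under several assumptions: Airflow runs in a Linux container or WSL 2, the Docker socket may or may not be mounted at /var/run/docker.sock, and the TCP fallback only works if Docker Desktop's optional unencrypted TCP endpoint has been enabled, which is suitable for local development only. The function and constant names are hypothetical.

```python
import os
from typing import Mapping, Optional

UNIX_SOCKET_URL = "unix://var/run/docker.sock"        # DockerOperator's default
TCP_FALLBACK_URL = "tcp://host.docker.internal:2375"  # assumes the TCP endpoint is enabled


def resolve_docker_url(
    env: Optional[Mapping[str, str]] = None,
    socket_path: str = "/var/run/docker.sock",
) -> str:
    """Pick a Docker daemon URL: explicit setting, then socket, then TCP."""
    env = os.environ if env is None else env
    # 1. An explicit DOCKER_HOST setting always wins.
    if env.get("DOCKER_HOST"):
        return env["DOCKER_HOST"]
    # 2. Use the Unix socket when it is present (e.g. mounted into the container).
    if os.path.exists(socket_path):
        return UNIX_SOCKET_URL
    # 3. Otherwise fall back to the host's TCP endpoint (assumption, see above).
    return TCP_FALLBACK_URL


if __name__ == "__main__":
    print(resolve_docker_url())
```

The returned string can be passed as docker_url when creating a DockerOperator task.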

The following table showcases a step-by-step approach for implementing DockerOperator tasks on Windows:

| Step | Description |
| --- | --- |
| 1 | Install Docker on Windows |
| 2 | Pull the required Docker image |
| 3 | Create a DockerOperator task |
| 4 | Specify the Docker image and command |
| 5 | Set any required parameters for the task |
| 6 | Execute the task |
| 7 | Ensure the task runs successfully |

By following these steps, you will be able to seamlessly execute DockerOperator tasks on the Windows platform, harnessing the power and flexibility of Docker containers to enhance your Airflow workflows.

FAQ

Can I use DockerOperator with Airflow on Windows?

Yes. Airflow itself does not run natively on Windows, but you can run it in Linux containers under Docker Desktop or in WSL 2 and use DockerOperator from there. This step-by-step guide explains how to set that up.

What is DockerOperator in Airflow?

DockerOperator is a type of operator in Apache Airflow that allows you to execute tasks within Docker containers. It provides a way to manage and automate containers as part of your data pipeline.

Is there a specific setup required before using DockerOperator on Windows?

Yes. Before using DockerOperator on Windows, install Docker Desktop, verify that the docker command works, install Airflow together with the Docker provider package, and make sure Airflow can reach the Docker daemon. The guide explains each of these setup steps in order.