Containerization has changed how applications are developed, deployed, and managed. Docker, a leading containerization platform, lets developers and system administrators package applications and their dependencies into portable containers that deploy consistently across environments. Although Docker containers are most often associated with Linux, Docker also supports running Windows containers on Windows hosts.

As demand grows for Windows-based applications and for consistency across environments, Windows support in the Docker ecosystem becomes increasingly valuable. This guide explains how to run Windows containers with Docker and what the combination offers.

Throughout this guide, we will cover configuring and deploying Windows containers and the advantages they bring: isolation between applications, efficient scaling, and simpler management of complex applications. We will also cover key considerations and best practices for using Windows containers effectively.

Understanding the Functionality and Operation of Windows Containers

In this section, we will explore the fundamental concepts and mechanics behind the operation of Windows containers. By gaining insight into the underlying principles, you can better leverage the capabilities offered by this technology.

Windows containers offer a lightweight and portable solution for deploying applications, enabling the efficient utilization of resources while maintaining isolation between applications. These containers rely on the host operating system's kernel to provide the necessary functionality, allowing multiple containers to run simultaneously without conflicts.

When a Windows container image is built, it includes the dependencies and libraries the application needs. This encapsulation makes the container self-contained and easy to move between environments such as development, testing, and production, provided the Windows version of the image is compatible with the Windows version of the host.

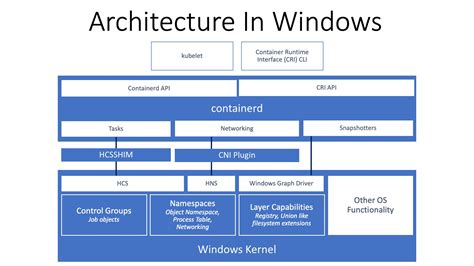

The core operating system services provided by the host are shared across all containers, which helps in minimizing the resource overhead. Each container has its isolated user space where the application processes run, providing a secure boundary that prevents interference between containers and the host system.

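Windows containers use namespace isolation to separate the processes, networking, and file system of each container. Each container gets its own view of the system, with its own hostname, IP address, and file system layout, while still sharing resources efficiently. This describes process isolation, in which containers share the host kernel. Windows also offers Hyper-V isolation, in which each container runs inside a lightweight virtual machine with its own kernel.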
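As an illustration of how the isolation mode is chosen, here is a minimal Python sketch that builds a `docker run` argument list with the `--isolation` flag. The helper name `run_with_isolation` and the image tag are assumptions made for this example, and the tag should match your host's Windows build. The script only prints the command.

```python
import shlex

# Values accepted by `docker run --isolation` on a Windows host.
ISOLATION_MODES = ("default", "process", "hyperv")

def run_with_isolation(image, mode, command):
    """Build a `docker run` argument list that pins the isolation mode."""
    if mode not in ISOLATION_MODES:
        raise ValueError(f"unknown isolation mode: {mode}")
    return ["docker", "run", "--rm", f"--isolation={mode}", image, *command]

# Assumed image tag; pick one compatible with your host's Windows version.
IMAGE = "mcr.microsoft.com/windows/nanoserver:ltsc2022"

cmd = run_with_isolation(IMAGE, "hyperv", ["cmd", "/c", "ver"])
print(shlex.join(cmd))  # shown for display; nothing is executed here
```

To actually start the container, the list could be passed to `subprocess.run(cmd, check=True)` on a Windows host whose Docker daemon is in Windows container mode.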

Another key aspect of Windows containers is their ability to be rapidly deployed and scaled. By leveraging container orchestration platforms like Kubernetes, organizations can easily manage and scale applications across clusters of container hosts, ensuring high availability and resiliency.

In summary, Windows containers are a powerful technology that allows applications to run in isolated environments, while sharing the underlying kernel services of the host operating system. This approach enables portability, efficiency, and scalability, making Windows containers a valuable tool for modern application deployment.

Advantages of leveraging Windows Containers in Docker

Windows Containers offer a multitude of benefits that can greatly enhance the development and deployment processes. By harnessing the power of Windows Containers in Docker, developers and IT professionals can leverage a flexible and efficient environment for deploying applications. This section will explore the advantages of utilizing Windows Containers in Docker, focusing on the enhancements they bring to the software development lifecycle.

1. Isolation: Windows Containers provide a secure and isolated environment for running applications, ensuring that each application operates independently from others. This isolation prevents any conflicts between applications and provides a reliable and consistent runtime environment.

2. Resource Efficiency: Windows Containers enable efficient utilization of system resources, allowing for higher density of applications on a single host. With containers, developers can run multiple instances of an application without the need for separate virtual machines, reducing the hardware and operational costs.

3. Speed and Portability: Windows Containers facilitate faster application deployment and scalability. The lightweight nature of containers enables quick provisioning and easy replication, ensuring that applications can be efficiently managed and scaled in various environments.

4. Development Flexibility: Windows Containers in Docker provide a consistent development environment across different platforms. Developers can package their applications and dependencies into containers, enabling seamless development and deployment across multiple environments.

5. DevOps Integration: Windows Containers seamlessly integrate with DevOps practices, allowing for automated deployment, continuous integration, and rapid scaling. Containers provide a foundation for building a robust and scalable DevOps ecosystem, enabling quicker time-to-market and increased agility.

6. Versioning and Rollbacks: Windows Containers in Docker enable versioning and easy rollbacks, making it effortless to manage and deploy different versions of applications. This capability ensures that any changes or updates can be rolled back to a previous working state, minimizing any potential downtime or disruption.

7. Ecosystem Support: Windows Containers benefit from a growing ecosystem of tooling, libraries, and community support. This ecosystem enhances the Windows Container experience and provides a wide range of resources for developers and IT professionals to leverage in their containerized environments.

In summary, leveraging Windows Containers in Docker offers significant advantages, including enhanced isolation, resource efficiency, speed, portability, development flexibility, integration with DevOps practices, versioning capabilities, and a strong ecosystem of support. By embracing Windows Containers, organizations can optimize their development and deployment processes, ultimately leading to improved efficiency, scalability, and agility in application delivery.

Getting started: Setting up Windows Containers on Docker

In this section, we will explore the initial steps required to set up Windows containers within the Docker environment. By following these instructions, you will be able to create and run Windows-based containers effortlessly.

| Step | Description |
|---|---|
| Step 1 | Install Docker Engine |
| Step 2 | Check Windows container support |
| Step 3 | Enable Windows container feature |
| Step 4 | Get a Docker Windows base image |
| Step 5 | Create a new Windows container |
| Step 6 | Run and manage your Windows container |

By following the sequential steps outlined above, you will gain a solid foundation in setting up and working with Windows containers on the Docker platform. This guide will equip you with the necessary knowledge to efficiently leverage the benefits of Windows containers for your development and deployment processes.
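The Docker CLI side of these steps can be sketched as follows, assuming Docker is already installed and the Windows container feature is enabled (steps 1 and 3 are platform setup and are not shown). The function name `setup_commands`, the container name `demo`, and the image tag are assumptions for illustration. The script prints the commands instead of running them.

```python
import shlex

# Assumed base image; the tag should be compatible with the host's Windows build.
BASE_IMAGE = "mcr.microsoft.com/windows/servercore:ltsc2022"

def setup_commands(image=BASE_IMAGE, name="demo"):
    """Return the docker CLI calls for steps 2, 4, 5, and 6, in order."""
    return [
        # Step 2: reports "windows" when the daemon runs Windows containers.
        ["docker", "info", "--format", "{{.OSType}}"],
        # Step 4: download the base image.
        ["docker", "pull", image],
        # Step 5: create (but do not start) a container.
        ["docker", "create", "--name", name, image, "cmd", "/c", "echo", "hello"],
        # Step 6: start it and attach to its output.
        ["docker", "start", "--attach", name],
    ]

for cmd in setup_commands():
    print(shlex.join(cmd))
```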

Creating Windows Images for Docker

Creating a Windows image for Docker involves a short series of steps. Together, they let developers package an application and its dependencies into a portable, isolated environment that can be deployed across different systems.

When creating a Windows image, it is important to consider various aspects such as the base operating system, software prerequisites, and application-specific configurations. This ensures that the resulting image contains all the necessary components and can be consistently reproduced.

One of the key elements in creating Windows images is the selection of a suitable base image. This serves as the foundation upon which all the additional layers will be built. The choice of base image depends on factors such as the desired Windows version, architecture, and compatibility requirements with specific applications.

Once the base image is selected, it is essential to install any necessary software prerequisites. This includes packages, libraries, and tools required by the applications that will run within the container. Additionally, any specific configurations or customizations needed for the application can be applied at this stage.

After installing the prerequisites and configuring the environment, the next step is to package the application and its dependencies into the image. This is typically done with a Dockerfile, a text file that lists, in order, the instructions Docker follows to build the image.

Finally, testing and validation are crucial to ensure the integrity and functionality of the created image. This involves running automated tests, validating the application's behavior within the container, and ensuring that all dependencies are correctly packaged.

By following these steps, developers can create efficient and reliable Windows images for Docker, enabling the seamless deployment and execution of applications across different environments.
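To make the sequence concrete, here is a minimal Python sketch that renders a Dockerfile following the steps above: base image, prerequisites, application files, and start command. The function name `render_dockerfile`, the `publish` directory, the `app.exe` path, and the placeholder prerequisite step are all hypothetical. The base image tag is an assumption and should match your host.

```python
import json

def render_dockerfile(base_image, prerequisites, app_dir, entrypoint):
    """Render Dockerfile text: base image, prerequisite steps, app files, start command."""
    lines = [
        f"FROM {base_image}",
        # Run subsequent RUN instructions through PowerShell (present in Server Core).
        'SHELL ["powershell", "-Command"]',
    ]
    lines += [f"RUN {step}" for step in prerequisites]
    lines += [
        "WORKDIR C:/app",
        f"COPY {app_dir}/ .",
        # json.dumps produces the exec-form array Docker expects, e.g. ["C:/app/app.exe"].
        f"CMD {json.dumps(entrypoint)}",
    ]
    return "\n".join(lines) + "\n"

dockerfile_text = render_dockerfile(
    base_image="mcr.microsoft.com/windows/servercore:ltsc2022",  # assumed tag
    prerequisites=["Write-Host 'install prerequisites here'"],   # placeholder step
    app_dir="publish",                                           # hypothetical folder
    entrypoint=["C:/app/app.exe"],                               # hypothetical binary
)
print(dockerfile_text)
```

Saving the text to a file named `Dockerfile` and running `docker build -t myapp .` in the same directory would then build the image. The tag `myapp` is likewise only an example.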

Understanding the Architecture of Windows Docker Images

In this section, we will delve into the intricacies of the underlying structure of Windows Docker images. By gaining a clear understanding of their architecture, you will be able to better navigate and utilize these powerful containers.

At its core, a Windows Docker image is a compact and self-contained unit that encapsulates all the necessary components required to run a specific application or service. These images are built using layers, which serve as building blocks that contribute to the final composition of the container.

Each layer in a Windows Docker image records a set of file system changes made on top of the layer below it. Stacked together, the layers form the complete file system of the image. Layers can hold elements such as the OS, libraries, dependencies, and application code.

One key advantage of this layering approach is its ability to promote reusability and efficiency. By separating different components into individual layers, it becomes easier to update, modify, and share specific parts of the container without affecting the entire image. This not only saves disk space but also allows for more flexible and rapid deployments.

| Layer | Description |
|---|---|
| Base Layer | The foundation layer of the image that includes the underlying operating system and its core components. |
| Dependency Layer | Contains any additional dependencies or frameworks required by the application. |
| Application Layer | Includes the actual application code and any associated configuration files. |

By structuring a Windows Docker image in this layered manner, it becomes easier to manage dependencies, update individual components, and ensure a consistent and reproducible environment across different systems. Moreover, it allows for efficient sharing of images between developers and across platforms, enabling seamless collaboration and reducing potential conflicts.
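Layer sharing can be observed in the JSON that `docker image inspect` prints, where each image lists its layer digests under `RootFS.Layers`. The sketch below parses that structure and finds the leading layers two images have in common. The digests are made-up stand-ins rather than real output, and the helper names are assumptions for this example.

```python
import json

def layer_digests(inspect_json):
    """Return the ordered layer digests from `docker image inspect` output."""
    return json.loads(inspect_json)[0]["RootFS"]["Layers"]

def shared_base(layers_a, layers_b):
    """Return the leading layers that two images have in common."""
    shared = []
    for a, b in zip(layers_a, layers_b):
        if a != b:
            break
        shared.append(a)
    return shared

# Made-up digests standing in for real `docker image inspect <image>` output.
API_IMAGE = json.dumps([{"RootFS": {"Type": "layers",
                                    "Layers": ["sha256:os", "sha256:deps", "sha256:api"]}}])
WEB_IMAGE = json.dumps([{"RootFS": {"Type": "layers",
                                    "Layers": ["sha256:os", "sha256:deps", "sha256:web"]}}])

common = shared_base(layer_digests(API_IMAGE), layer_digests(WEB_IMAGE))
print(f"{len(common)} layers can be stored once and reused: {common}")
```

In this sample, the base and dependency layers are identical across both images, so only the application layer differs between them.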

Understanding the structure of Windows Docker images is crucial for efficiently managing and leveraging the power of containers. With this knowledge, you will be well-equipped to build, modify, and deploy Windows containers effectively.

Best practices for constructing Windows container images

When it comes to building Windows container images, there are several best practices that can help ensure the efficiency, security, and stability of your containers. By following these guidelines, you can optimize the performance of your containers, reduce their size, and enhance their overall functionality.

- Choose a lightweight base image: Start by selecting a base image that is minimal in size and contains only the components your application needs, for example Nano Server rather than Server Core when the application allows it. This will help reduce the size of your final container image and minimize the attack surface.

- Use multi-stage builds: Leveraging multi-stage builds can significantly decrease the size of your container images. By separating the build environment from the runtime environment, you can discard unnecessary build dependencies, resulting in smaller and more efficient images.

- Utilize layer caching: Docker utilizes layer caching to speed up the build process. To take advantage of this, structure your Dockerfile in a way that minimizes the number of invalidated layers during image rebuilds. This can be achieved by placing frequently changing instructions later in the Dockerfile, while the static instructions are placed earlier.

- Secure your container images: Implement security measures by using secure base images from trusted sources, regularly updating your images to include the latest security patches, and scanning your container images for vulnerabilities. Additionally, ensure that your containers are running with the least privilege necessary to reduce the risk of potential attacks.

- Optimize container startup time: Improve the startup time of your containers by minimizing the number of unnecessary services and processes running inside them. Additionally, consider using pre-warmed containers or implementing strategies such as health checks to ensure that containers are ready to receive traffic as quickly as possible.

- Design for scalability and resilience: When designing your containerized applications, consider scalability and resilience in order to handle varying workload demands. This can be achieved by utilizing orchestration platforms such as Kubernetes and implementing strategies like scaling and load balancing.

By following these best practices, you can create efficient, secure, and reliable Windows container images that meet the requirements of your applications and provide a seamless experience for your users.
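The multi-stage build and layer caching practices can be combined in one Dockerfile. The Python sketch below renders such a file for a hypothetical .NET project named `MyApp`. The project name, the `render_multistage` helper, and the choice of the .NET `sdk:8.0` and `aspnet:8.0` images are assumptions for illustration, and other stacks would use different images and build commands.

```python
def render_multistage(project):
    """Render a two-stage Dockerfile: build with the SDK, ship on the runtime image."""
    return f"""\
# Stage 1: build with the full SDK image.
FROM mcr.microsoft.com/dotnet/sdk:8.0 AS build
WORKDIR /src
# Copy the project file first so the restore layer stays cached
# until the dependencies change.
COPY {project}.csproj .
RUN dotnet restore
# Source code changes often, so it is copied after the restore step.
COPY . .
RUN dotnet publish -c Release -o /out

# Stage 2: ship only the published output on the smaller runtime image.
FROM mcr.microsoft.com/dotnet/aspnet:8.0
WORKDIR /app
COPY --from=build /out .
ENTRYPOINT ["dotnet", "{project}.dll"]
"""

dockerfile_text = render_multistage("MyApp")  # hypothetical project name
print(dockerfile_text)
```

The build tools stay in the first stage and are discarded. Only the files copied with `COPY --from=build` reach the final image.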

Setting up and Deploying Containers: A step-by-step walkthrough

In this section, we will take you through the process of setting up and deploying containers in a Windows environment. Whether you're new to containerization or an experienced user, this step-by-step guide will provide you with the necessary knowledge and instructions to effectively run and manage containers on your Windows system.

Step 1: Preparing your Windows Environment

Before you can start running containers on your Windows machine, it is essential to ensure that your environment is properly set up. This involves installing and configuring the required software, including the container runtime and management tools. We will walk you through this initial step, ensuring that your Windows environment is ready for container deployment.

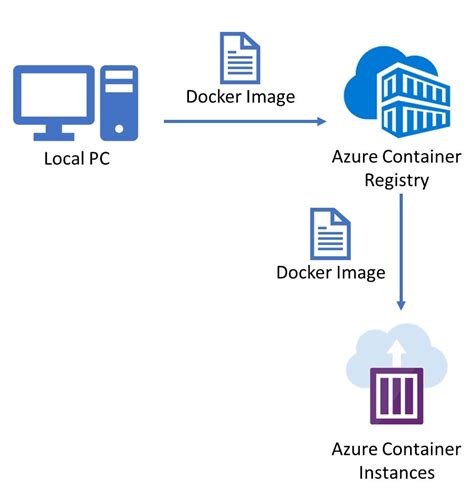

Step 2: Obtaining and Configuring Container Base Images

Once your system is prepared, the next step is to obtain and configure the base images for your containers. These base images serve as the foundation for your containers and contain the necessary operating system and dependencies. We will explain how to select the right base images, retrieve them from official repositories, and customize them according to your application requirements.

Step 3: Building and Managing Windows Containers

In this step, we will dive into the process of building and managing Windows containers. We will guide you through the creation of container images from your customized base images and demonstrate how to effectively manage and version them. You will learn the essential commands and techniques for container management, including starting, stopping, and monitoring container instances.

Step 4: Deploying and Scaling Containerized Applications

Once your containers are built and managed, the final step is to deploy and scale your containerized applications. We will discuss various deployment strategies, including running containers locally, on-premises, or in the cloud. Additionally, we will explore techniques for scaling your containerized applications to meet your application demands, ensuring optimal performance and resource utilization.

Step 5: Monitoring and Troubleshooting Containers

As containers become an integral part of your Windows environment, it is crucial to have effective monitoring and troubleshooting mechanisms in place. In this step, we will cover monitoring tools and techniques specific to Windows containers. We will also address common troubleshooting scenarios and provide solutions to ensure the smooth operation of your containerized applications.

Conclusion

By following this comprehensive step-by-step guide, you will gain the knowledge and skills necessary to successfully run and manage Windows containers. Whether you're a beginner or an experienced user, these instructions will enable you to harness the power of containerization and leverage the benefits it offers in terms of flexibility, scalability, and resource efficiency.

Running Windows Containers using Docker commands

In this section, we will walk through running Windows containers step by step, using Docker commands to deploy and manage your containerized applications.

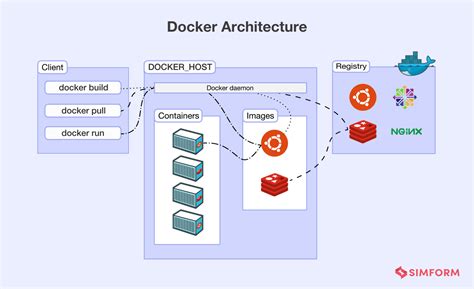

Containerizing with Docker run

One essential command in the Docker toolkit when working with Windows containers is the docker run command. This command enables the creation and execution of containers based on the designated Windows container image. By specifying the necessary parameters, such as the image name and any additional options, we can effortlessly spin up a Windows container and deploy applications within a contained environment.
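As a rough illustration of the parameters involved, here is a Python sketch that assembles a `docker run` argument list from an image name and a few common options. The helper `docker_run`, the container name, the port mapping, the environment variable, and the IIS image tag are assumptions chosen for this example. The script prints the command rather than executing it.

```python
import shlex

def docker_run(image, name, ports=None, env=None, detach=True):
    """Build a `docker run` argument list from common options."""
    cmd = ["docker", "run"]
    if detach:
        cmd.append("--detach")
    cmd += ["--name", name]
    for host_port, container_port in (ports or {}).items():
        cmd += ["--publish", f"{host_port}:{container_port}"]
    for key, value in (env or {}).items():
        cmd += ["--env", f"{key}={value}"]
    return cmd + [image]

# Assumed image tag; choose one compatible with your host's Windows version.
IIS_IMAGE = "mcr.microsoft.com/windows/servercore/iis:windowsservercore-ltsc2022"

cmd = docker_run(IIS_IMAGE, name="web", ports={8080: 80}, env={"APP_ENV": "dev"})
print(shlex.join(cmd))
```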

Managing the container lifecycle

In addition to running containers, Docker provides a range of commands for managing the lifecycle of Windows containers. The docker start command starts a stopped container, and the docker stop command halts a running one. The docker restart command stops and then starts a container in one step without altering its configuration. The application is briefly unavailable while the container restarts.
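A small Python sketch of these lifecycle commands follows. The helper name `lifecycle` and the container name `web` are assumptions for the example. The optional `-t` value is the number of seconds Docker waits before forcibly stopping the container, and it applies to stop and restart only.

```python
import shlex

ACTIONS = ("start", "stop", "restart")

def lifecycle(action, container, grace_seconds=None):
    """Build a docker start/stop/restart argument list for one container."""
    if action not in ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    cmd = ["docker", action]
    # `-t` sets how long to wait before killing; `docker start` has no such option.
    if grace_seconds is not None and action != "start":
        cmd += ["-t", str(grace_seconds)]
    return cmd + [container]

for action in ACTIONS:
    print(shlex.join(lifecycle(action, "web", grace_seconds=30)))
```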

Networking and inter-container communication

Efficient networking plays a vital role in container orchestration. Docker offers a comprehensive suite of networking commands that facilitate seamless communication between containers. By utilizing the docker network create command, we can create separate networks for our containers, enabling secure and isolated communication channels. Additionally, the docker network connect command allows us to connect existing containers to newly created networks, fostering inter-container communication.
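The two networking commands can be sketched in Python as follows. The network name `backend` and the container names are hypothetical. The `nat` driver is used here on the assumption that the host runs Windows containers, where it is the usual default, and other drivers may suit your environment better.

```python
import shlex

def network_commands(network, containers, driver="nat"):
    """Build the calls that create a network and attach existing containers to it."""
    cmds = [["docker", "network", "create", "--driver", driver, network]]
    cmds += [["docker", "network", "connect", network, c] for c in containers]
    return cmds

for cmd in network_commands("backend", ["web", "worker"]):
    print(shlex.join(cmd))
```

Containers attached to the same user-defined network can generally reach each other by container name.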

Inspecting containers and obtaining container information

Understanding the inner workings of a container is crucial for troubleshooting and optimization. The docker inspect command returns detailed information about a container as JSON, including its state, IP addresses, and network configuration. Adding the --size flag also reports the container's storage usage. This gives administrators and developers the detail they need to manage their Windows containers efficiently.
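Because `docker inspect` prints JSON, its output is straightforward to process in a script. The Python sketch below extracts the IP address per network from that structure. The sample payload is a trimmed, made-up stand-in for real output, and the helper name is an assumption.

```python
import json

def container_addresses(inspect_json):
    """Map network name to IP address from `docker inspect <container>` output."""
    info = json.loads(inspect_json)[0]
    networks = info["NetworkSettings"]["Networks"]
    return {name: net["IPAddress"] for name, net in networks.items()}

# Trimmed, made-up sample of what `docker inspect web` returns.
SAMPLE = json.dumps([{
    "Name": "/web",
    "State": {"Status": "running"},
    "NetworkSettings": {"Networks": {"nat": {"IPAddress": "172.20.0.5"}}},
}])

addresses = container_addresses(SAMPLE)
print(addresses)
```

With a real container, the JSON could come from `subprocess.run(["docker", "inspect", "web"], capture_output=True, text=True).stdout`.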

In conclusion, mastering Docker commands for running Windows containers unlocks a world of possibilities for efficient application deployment and management. By leveraging the power of Docker's commands, we can effortlessly create, manage, and inspect containers, fostering seamless containerization experiences.

Running Windows Containers with Docker Compose

In this section, we will explore the process of deploying and managing Windows containers using Docker Compose, a powerful tool for defining and running multi-container applications.

We will delve into the intricacies of running Windows containers and how Docker Compose can streamline the process. Throughout this section, we will demonstrate various techniques and best practices for effectively orchestrating multiple containers within a Windows environment.

By leveraging Docker Compose, you can simplify the management of your Windows containers, ensuring seamless deployment and scaling. We will discuss how to define and configure your containers, specify dependencies, handle networking, and deploy your application stack using the intuitive syntax provided by Docker Compose.

Moreover, we will explore the flexibility of Docker Compose and how it can be used to streamline the development workflow for Windows container-based applications. We will investigate techniques for efficiently managing development and production environments, including utilizing configuration files, managing environment variables, and deploying updates to running containers.

Throughout this section, we will provide step-by-step instructions, sample code snippets, and practical examples to guide you through the process of running Windows containers using Docker Compose. Whether you are new to Docker or an experienced user, this section will equip you with the knowledge and skills needed to efficiently manage your Windows container-based applications.
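As a starting point, here is a minimal sketch of a two-service Compose file, held in a Python string so it can be written to disk by a script. The service names, image tags, port mapping, environment variable, and keep-alive command are all assumptions for illustration rather than a recommended layout.

```python
import shlex

# Assumed images; tags should be compatible with the host's Windows version.
COMPOSE_TEXT = """\
services:
  web:
    image: mcr.microsoft.com/windows/servercore/iis:windowsservercore-ltsc2022
    ports:
      - "8080:80"
    environment:
      APP_ENV: development
    depends_on:
      - worker
  worker:
    image: mcr.microsoft.com/windows/nanoserver:ltsc2022
    # Placeholder command that keeps the container running.
    command: ["cmd", "/c", "ping", "-t", "localhost"]
"""

# Command that would start the stack once the text is saved as compose.yaml.
UP_COMMAND = ["docker", "compose", "up", "--detach"]

print(COMPOSE_TEXT)
print(shlex.join(UP_COMMAND))
```

Here `depends_on` controls start order only: the worker is started before the web service, but Compose does not wait for it to be ready.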

FAQ

What is a Windows image in Docker?

A Windows image in Docker is a lightweight, standalone, and executable package that contains everything needed to run a piece of software, including the code, runtime, system tools, libraries, and settings.

How can I run a Windows container in Docker?

To run a Windows container in Docker, you need to have a base Windows image that is compatible with your host OS version. You can then use the Docker command-line interface (CLI) to pull the image from a registry, create a container from the image, and start the container.

Can I use Windows images in Docker on a Linux host?

No, Windows images in Docker can only be used on a Windows host. Docker containers are based on the host's kernel, so if you are running Docker on Linux, you can only use Linux containers.

Are there any major differences between running Windows containers and Linux containers in Docker?

Yes, there are some differences between running Windows containers and Linux containers in Docker. Windows containers require a Windows host, while Linux containers run natively on Linux and, through a Linux virtual machine, on Windows or macOS. Additionally, Windows containers support two isolation modes, process isolation and Hyper-V isolation, while Linux containers rely on kernel namespaces and cgroups.

What are the benefits of using Windows containers in Docker?

Using Windows containers in Docker provides several benefits, such as increased portability, faster deployment, improved resource utilization, and simplified software distribution. Containers also offer better isolation between applications, making it easier to manage multiple applications running on the same host.