Working efficiently with large amounts of text is a routine part of modern computing. Whether you are a data scientist, a writer, or simply someone who deals with sizable text files on a regular basis, knowing how to tune your Windows system and tools for this workload makes handling substantial textual data much smoother.

Managing and processing large volumes of text calls for a systematic approach that improves both the speed and the accuracy of information retrieval. Navigating extensive text data quickly saves time and improves productivity in professional and personal work alike.

In this article, we explore practical strategies for managing large text datasets on Windows-based systems. From adjusting system settings and using dedicated software to applying efficient search and filter methods, we look at proven ways to get the best performance from your machine when handling large amounts of text.

Effective Approaches for Enhancing Windows Performance With Large Textual Data

Good performance with large text data on Windows comes from a combination of techniques, some applied at the operating-system level and some inside the applications that process the data. The most important ones are outlined below.

Enhancing Memory Management: Applications that handle large text datasets should use memory deliberately: reuse buffers instead of repeatedly allocating new ones, avoid loading an entire file when only part of it is needed, and let the Windows virtual memory manager page data in on demand (for example, through memory-mapped files). Keeping the working set small reduces paging to disk, which lowers latency and improves overall performance.

Implementing Multithreading: Another approach is to use multiple threads so that work on large text datasets runs on several CPU cores at once. An application can split the data into independent chunks, or run independent tasks such as indexing, searching, and sorting in parallel, which can substantially reduce total processing time. The speed-up depends on how well the work divides: tasks that all wait on the same disk, or that need frequent coordination between threads, scale less well.
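A minimal sketch of the idea in Python, assuming the text is already available as a list of lines: the hypothetical `parallel_word_count` splits the lines into chunks, counts words in each chunk on a worker thread from the standard `concurrent.futures` module, and merges the partial counts. The chunk size, worker count, and whitespace-based word splitting are illustrative assumptions.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def count_chunk(lines):
    """Count whitespace-separated words in one chunk of lines."""
    counts = Counter()
    for line in lines:
        counts.update(line.split())
    return counts


def parallel_word_count(lines, workers=4, chunk_size=10_000):
    """Split a list of lines into chunks, count each chunk on a worker, then merge.

    workers and chunk_size are illustrative defaults, not tuned values.
    """
    chunks = [lines[i:i + chunk_size] for i in range(0, len(lines), chunk_size)]
    total = Counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map runs count_chunk on the chunks concurrently and
        # returns the partial results in chunk order.
        for partial in pool.map(count_chunk, chunks):
            total.update(partial)
    return total


if __name__ == "__main__":
    sample = ["the quick brown fox", "the lazy dog"] * 3
    print(parallel_word_count(sample, chunk_size=2).most_common(3))
```

One design caveat: in CPython, pure-Python CPU-bound work in threads is serialized by the global interpreter lock, so for a job like this, swapping `ThreadPoolExecutor` for `ProcessPoolExecutor` (with the call placed under an `if __name__ == "__main__":` guard on Windows) is what actually spreads the work across cores.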

Utilizing Compression Techniques: Because text is highly redundant, it usually compresses well. Lossless algorithms such as LZ77 and Huffman coding (combined in the widely used DEFLATE format) reduce storage requirements while preserving the data exactly, and NTFS offers built-in compression for individual files and folders. Smaller files mean fewer bytes to read from disk, which can speed up I/O-bound workloads, at the cost of extra CPU time for compression and decompression.
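To make the Huffman part concrete, here is a small, illustrative Python sketch that builds a Huffman code table for a string, encodes it, and decodes it again. The function names are hypothetical, and the bits are kept as a string of "0"/"1" characters for readability rather than packed into bytes; real tools would use an existing DEFLATE implementation such as Python's `zlib` module instead.

```python
import heapq
from collections import Counter


def huffman_codes(text):
    """Build a prefix-free Huffman code table {character: bit string} for text."""
    freq = Counter(text)
    if not freq:
        return {}
    if len(freq) == 1:
        # Degenerate case: a single distinct symbol still needs one bit.
        return {next(iter(freq)): "0"}
    # Heap entries: (frequency, unique tie-breaker, {symbol: code so far}).
    heap = [(f, i, {sym: ""}) for i, (sym, f) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        # Merge the two least frequent subtrees, prefixing their codes with 0 and 1.
        f1, _, left = heapq.heappop(heap)
        f2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (f1 + f2, next_id, merged))
        next_id += 1
    return heap[0][2]


def encode(text, codes):
    """Concatenate the code of each character."""
    return "".join(codes[ch] for ch in text)


def decode(bits, codes):
    """Walk the bit string; because codes are prefix-free, the first match is correct."""
    reverse = {c: s for s, c in codes.items()}
    out, buf = [], ""
    for b in bits:
        buf += b
        if buf in reverse:
            out.append(reverse[buf])
            buf = ""
    return "".join(out)


if __name__ == "__main__":
    sample = "aaaaaaaabbbc"
    table = huffman_codes(sample)
    bits = encode(sample, table)
    print(table, len(bits), "bits vs", 8 * len(sample), "bits at one byte per character")
```

For the sample, the frequent `a` gets a 1-bit code while the rarer `b` and `c` get 2-bit codes, so the 12 characters take 16 bits instead of 96.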

Optimizing File I/O Operations: Effective management of file input/output (I/O) is essential for large text datasets. Reading and writing in large buffered blocks rather than many small pieces, and favoring sequential over random access, can significantly reduce the time needed to read and write large text files, which shortens processing time and improves overall system performance.
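A minimal Python sketch of block-wise reading: the hypothetical `count_lines` reads a file in 1 MiB binary chunks (an illustrative size, worth tuning for your storage) and counts newline bytes, so memory use stays constant regardless of file size and the number of read calls stays small.

```python
import os
import tempfile


def count_lines(path, chunk_size=1 << 20):
    """Count newline bytes by reading the file in large binary chunks.

    Only one chunk is in memory at a time. A final line without a
    trailing newline is not counted.
    """
    total = 0
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:  # empty bytes means end of file
                break
            total += chunk.count(b"\n")
    return total


if __name__ == "__main__":
    with tempfile.NamedTemporaryFile("wb", delete=False) as tmp:
        tmp.write(b"line\n" * 1000)
    try:
        print(count_lines(tmp.name, chunk_size=64))  # prints 1000
    finally:
        os.remove(tmp.name)
```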

Applying Indexing and Searching Techniques: An index built ahead of time lets an application locate data without scanning an entire file. Hash tables give fast exact-match lookups, while B-trees also support ordered traversal and range queries; either can map a key to the position of a record, so lookup time no longer grows linearly with the size of the data. This makes applications noticeably more responsive when working with extensive text datasets.
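As a sketch of the hash-table approach, the Python example below builds a dictionary (Python's built-in hash table) that maps each record's key to the byte offset of its line, then seeks straight to that offset on lookup. The tab-separated `key<TAB>value` record format and the function names are assumptions for illustration.

```python
import os
import tempfile


def build_offset_index(path, key_sep=b"\t"):
    """Map each record's key (the text before key_sep) to the byte offset of its line.

    Assumes one record per line in the hypothetical format key<TAB>value.
    """
    index = {}
    offset = 0
    with open(path, "rb") as f:
        for line in f:
            key = line.rstrip(b"\r\n").split(key_sep, 1)[0]
            index[key] = offset
            offset += len(line)  # exact byte length, newline included
    return index


def lookup(path, index, key):
    """Seek directly to the indexed line instead of scanning the file."""
    offset = index.get(key)
    if offset is None:
        return None
    with open(path, "rb") as f:
        f.seek(offset)
        return f.readline().rstrip(b"\r\n")


if __name__ == "__main__":
    with tempfile.NamedTemporaryFile("wb", delete=False) as tmp:
        tmp.write(b"alpha\tfirst\nbeta\tsecond\ngamma\tthird\n")
    try:
        idx = build_offset_index(tmp.name)
        print(lookup(tmp.name, idx, b"beta"))  # b'beta\tsecond'
    finally:
        os.remove(tmp.name)
```

The file is opened in binary mode so that offsets are exact byte positions; in text mode, newline translation on Windows would make offsets computed from line lengths unreliable.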

Regular System Maintenance: Routine maintenance of Windows also matters. This includes installing driver and system updates, removing unnecessary files to free disk space, and letting the built-in Optimize Drives tool run on its schedule. On hard disk drives this tool defragments the volume; on SSDs it sends TRIM commands instead, and manual defragmentation of an SSD is unnecessary.

In conclusion, combining these practices reduces processing time and keeps Windows responsive when working with large volumes of text.

Understanding the Impact of Massive Textual Data on Windows Performance

Before optimizing, it helps to understand how large text data affects a Windows system as a whole. Looking at what happens when large amounts of text are processed and managed reveals where the main bottlenecks arise.

Comprehending the Ramifications:

How Windows stores, caches, and reads text data directly determines how efficient and responsive the system remains under load. Understanding these mechanisms is the basis for choosing effective optimizations.

The Challenge of Data Processing:

Processing large volumes of text can place considerable strain on a Windows system, reducing performance and creating bottlenecks. The main pressure points are file I/O, memory allocation, and search algorithms whose cost grows with the size of the data.

Memory Management and Optimization:

How system memory is managed plays a vital role in Windows performance with large text data. Windows keeps recently read file data in its file cache and, since Windows 10, compresses infrequently used memory pages instead of immediately writing them to the page file; applications can add their own caching on top. Each of these reduces trips to disk, minimizing latency.

Optimizing Search and Indexing Operations:

Searching and indexing large text datasets presents its own challenges of speed and accuracy. Building an index once, rather than scanning the full text for every query, is usually the largest single improvement; fast storage and multi-core processors then shorten both index construction and lookups.

Minimizing File I/O Overhead:

Efficiently managing file I/O is crucial with large text datasets. Avoiding redundant reads and writes, optimizing disk access patterns, and using asynchronous I/O, which lets computation continue while data is still being read or written, all help minimize the overhead of moving large amounts of text to and from disk.

Conclusion:

Gaining a deep understanding of how large textual data impacts Windows performance empowers us to adopt effective strategies for optimizing system efficiency. By addressing the challenges of data processing, memory management, search algorithms, and file I/O overhead, we can unlock the full potential of Windows in handling immense amounts of textual data.

Choosing the Optimal Hardware Configuration for Effective Management of Vast Amounts of Textual Information

To handle and process large text datasets efficiently, the hardware configuration must be chosen carefully, because it sets the limits on how quickly text can be analyzed and retrieved.

Processing Power: A capable processor matters when working with extensive text data. Multicore CPUs help most when the software splits its work across threads or processes; single-threaded tools benefit more from high per-core performance.

Memory Capacity: The more of a dataset, and of any indexes built over it, that fits in RAM, the less the system needs to read from disk. A system with a large RAM capacity allows effective caching of text, which speeds up access and processing.

Storage Solution: The storage system plays a critical role in handling large text datasets. Solid-state drives (SSDs) offer much faster data retrieval and transfer rates than hard disk drives (HDDs), while large-capacity HDDs provide inexpensive space for archives and data that is accessed less often.

Network Connectivity: When large text data must be accessed and processed across multiple devices or systems, robust network connectivity is essential. A fast wired Ethernet connection generally provides higher and more consistent throughput than Wi-Fi for transferring large files and supporting real-time collaboration.

Graphics Processing: Text processing does not usually depend on graphics hardware, but some applications and analysis techniques benefit from a dedicated graphics processing unit (GPU). GPUs provide massively parallel processing that can accelerate tasks such as training or running machine-learning models on text, as well as data visualization.

Peripheral Devices: Appropriate peripherals such as keyboards, mice, and monitors also contribute to efficient work with data. Ergonomic, reliable input devices and high-resolution monitors improve productivity and ease of use and reduce fatigue during long analysis sessions.

Software Compatibility: Finally, the hardware must work well with the software in use. Compatible components, current drivers, and appropriate performance tuning are all needed to get the best results when managing large text data.

With careful consideration of the hardware configuration, organizations and individuals can optimize their systems for successfully handling and analyzing vast amounts of textual data, enabling effective decision-making and actionable insights.

Enhancing the Efficiency of Windows File System for Storing and Accessing Enormous Textual Data

Managing and manipulating extensive amounts of textual data is becoming increasingly crucial in various domains such as data analysis, natural language processing, and scientific research. As such, optimizing the Windows file system to effectively handle these large volumes of textual data becomes a priority.

When it comes to storing and retrieving vast amounts of textual data within Windows, several strategies can be implemented to enhance overall performance. These strategies include leveraging efficient file system configurations, utilizing appropriate disk storage technologies, and adopting optimized data retrieval techniques.

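- Implementing File System Configurations: Choosing a suitable file system for each volume, such as NTFS (New Technology File System) or ReFS (Resilient File System), can improve storage efficiency, data integrity, and file access speed. NTFS is the standard Windows file system and supports built-in file compression, while ReFS is designed for data integrity and resilience on large volumes. Each volume uses a single file system, so combining the two means choosing per volume.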

- Utilizing Advanced Disk Storage Technologies: Solid-state drives (SSDs), particularly those using the NVMe (Non-Volatile Memory Express) interface, can significantly improve read and write speeds, accelerating the storage and retrieval of large text datasets.

- Optimizing Data Retrieval Techniques: Employing indexing mechanisms, such as full-text indexing or in-memory databases, can expedite the retrieval of specific textual data elements, reducing the time required for searches or data mining operations.

- Utilizing Compression and Deduplication: Compressing data and eliminating duplicates both reduce the storage footprint, especially for repetitive or highly similar text. Because less data has to be read from disk, retrieval can also be faster, although decompression and deduplication lookups add some CPU overhead.

- Employing Parallel Processing: Exploiting the power of multi-core processors and parallel computing techniques can enable simultaneous processing of large textual data sets, accelerating data handling tasks and improving overall performance.
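A simplified Python illustration of hash-based deduplication: the hypothetical `dedupe_lines` keeps only the first occurrence of each line, remembering a fixed-size SHA-256 digest instead of the line itself. Storage-level deduplication (such as the Data Deduplication feature in Windows Server) works on file chunks rather than lines, so treat this only as a sketch of the principle.

```python
import hashlib


def dedupe_lines(lines):
    """Yield each distinct line once, keeping first occurrences in order.

    Stores a 32-byte SHA-256 digest per distinct line rather than the line
    itself, so memory per entry stays fixed even when lines are long.
    """
    seen = set()
    for line in lines:
        digest = hashlib.sha256(line.encode("utf-8")).digest()
        if digest not in seen:
            seen.add(digest)
            yield line


if __name__ == "__main__":
    log = ["INFO start", "WARN disk low", "INFO start", "WARN disk low", "INFO stop"]
    print(list(dedupe_lines(log)))  # ['INFO start', 'WARN disk low', 'INFO stop']
```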

By implementing these optimization strategies, it is possible to greatly enhance the efficiency of the Windows file system for storing and retrieving vast amounts of textual data. As a result, users can experience improved data management capabilities, faster data access times, and more efficient analysis and processing of large textual data sets.

Utilizing Compression Techniques to Reduce the Size of Textual Data Files

Improving the efficiency of Windows systems in managing and processing large amounts of textual data is crucial for various industries and applications. One effective approach involves the utilization of compression techniques to reduce the size of textual data files. By compressing the data, the overall storage requirements and transmission time can be significantly reduced, resulting in improved performance and resource optimization.

Compression techniques exploit redundancy and recurring patterns in text to represent it in fewer bytes. They fall into two broad types: lossless and lossy compression. Lossless algorithms preserve the original data completely, so the uncompressed data can be recovered exactly. Lossy methods discard some information to achieve higher compression ratios; they are standard for images, audio, and video but are rarely appropriate for text, where changing even a single character can change the meaning.

Commonly used lossless methods for text include Huffman coding, the Lempel-Ziv-Welch (LZW) algorithm, the Deflate algorithm (which combines LZ77 with Huffman coding), and the Burrows-Wheeler Transform (BWT), a reversible transform that groups similar characters together so that a subsequent coding stage, as in bzip2, compresses better. These approaches identify repeated patterns, build dictionaries, and encode the data more compactly. The right choice depends on the type of text, the desired compression ratio, and how much compression and decompression speed matter for the application.
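For a rough comparison on your own data, Python's standard library exposes three of these families: `zlib` (Deflate), `bz2` (a BWT-based format), and `lzma`. The sketch below, built around a hypothetical `compare_codecs` helper, compresses the same bytes with each codec, checks that decompression restores the input exactly, and reports the compressed sizes. LZW has no standard-library module, so it is not included, and default compression levels are used throughout.

```python
import bz2
import lzma
import zlib


def compare_codecs(data: bytes):
    """Compress data with three standard-library codecs; return {name: compressed size}.

    Raises ValueError if any codec fails to round-trip the data exactly.
    """
    codecs = {
        "zlib (Deflate)": (zlib.compress, zlib.decompress),
        "bz2 (BWT)": (bz2.compress, bz2.decompress),
        "lzma (LZMA)": (lzma.compress, lzma.decompress),
    }
    sizes = {}
    for name, (compress, decompress) in codecs.items():
        packed = compress(data)
        # Lossless: decompression must reproduce the input byte for byte.
        if decompress(packed) != data:
            raise ValueError(f"{name} did not round-trip the data")
        sizes[name] = len(packed)
    return sizes


if __name__ == "__main__":
    sample = ("The quick brown fox jumps over the lazy dog. " * 2000).encode("utf-8")
    print(f"original: {len(sample)} bytes")
    for name, size in compare_codecs(sample).items():
        print(f"{name}: {size} bytes")
```

Ratios on highly repetitive sample text like this are far better than on real documents, so it is worth running the comparison on a representative file before choosing a format.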

Compression can be added to Windows workflows with built-in features or with libraries. NTFS compression can be enabled per file or folder from the Properties dialog or with the compact command-line tool, and many programming languages and third-party libraries provide functions for compressing and decompressing files with different algorithms. Integrating such libraries into existing applications or workflows reduces the size of text files, optimizes storage usage, and can improve overall performance.
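As one library-based example, Python's standard `gzip` and `shutil` modules can compress a large text file in a streaming fashion and read it back line by line without ever decompressing the whole file to disk or into memory. The helper names and the 1 MiB chunk size are illustrative assumptions.

```python
import gzip
import os
import shutil
import tempfile


def gzip_file(src_path, dst_path, chunk_size=1 << 20):
    """Compress src_path into dst_path, streaming chunk_size bytes at a time.

    Only about one chunk is held in memory, so files larger than RAM are fine.
    """
    with open(src_path, "rb") as src, gzip.open(dst_path, "wb") as dst:
        shutil.copyfileobj(src, dst, chunk_size)


def iter_gzip_lines(path, encoding="utf-8"):
    """Yield lines of a gzip-compressed text file, decompressing incrementally."""
    with gzip.open(path, "rt", encoding=encoding) as f:
        for line in f:
            yield line.rstrip("\n")


if __name__ == "__main__":
    workdir = tempfile.mkdtemp()
    try:
        src = os.path.join(workdir, "notes.txt")
        dst = src + ".gz"
        with open(src, "w", encoding="utf-8") as f:
            f.write("first line\nsecond line\n" * 1000)
        gzip_file(src, dst)
        print(os.path.getsize(src), "->", os.path.getsize(dst), "bytes")
        print(sum(1 for _ in iter_gzip_lines(dst)), "lines read back")
    finally:
        shutil.rmtree(workdir)
```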

In conclusion, utilizing compression techniques is a valuable strategy for optimizing Windows systems in handling large textual data. By reducing the size of textual data files through compression algorithms, users can achieve improved efficiency, faster transmission, and resource optimization. It is essential to carefully select the appropriate compression algorithm based on the specific requirements of the application and the desired trade-off between compression ratio and data fidelity.

Implementing Efficient Search and Indexing Mechanisms for Handling Vast Amounts of Textual Information

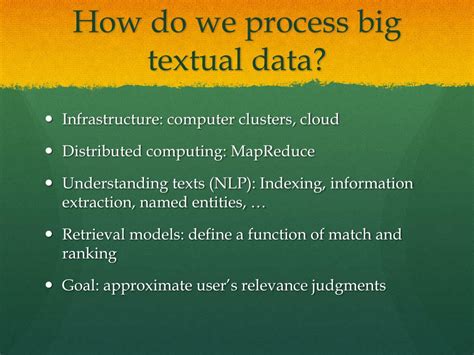

In this section, we explore advanced techniques for optimizing the search and indexing processes to effectively handle large volumes of textual data. Efficiently searching and indexing massive amounts of text is crucial for improving performance and user experience in applications that deal with extensive textual information. Therefore, it is essential to implement mechanisms that can quickly retrieve and present relevant information to users.

One of the key aspects of implementing efficient search and indexing mechanisms is the use of appropriate data structures and algorithms. These components play a vital role in organizing the textual data in a way that facilitates swift searching and indexing operations. Various methods, such as the creation of inverted indexes, can be employed to enhance the search process.

Inverted indexes are specialized data structures that enable quick retrieval of information by mapping terms or words to the documents or locations where they appear. By using inverted indexes, search engines and applications can significantly reduce the search time by directly accessing the relevant documents instead of scanning the entire dataset. Implementing efficient indexing mechanisms, such as compression techniques and in-memory indexing, can further optimize the storage and retrieval of textual data.
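A minimal in-memory inverted index in Python: each lowercase word maps to the set of document ids containing it, and an AND query intersects those sets, starting with the smallest. The regex tokenizer and the function names are simplifying assumptions; production search engines add stemming, ranking, and compressed posting lists on top of this basic structure.

```python
import re
from collections import defaultdict

TOKEN = re.compile(r"\w+")


def build_inverted_index(docs):
    """Map each lowercase term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in TOKEN.findall(text.lower()):
            index[term].add(doc_id)
    return index


def search_all(index, query):
    """Return ids of documents containing every query term (AND search)."""
    terms = TOKEN.findall(query.lower())
    if not terms:
        return set()
    # Intersect starting from the rarest term to keep intermediate sets small.
    postings = sorted((index.get(t, set()) for t in terms), key=len)
    result = set(postings[0])  # copy so the index itself is not modified
    for p in postings[1:]:
        result &= p
    return result


if __name__ == "__main__":
    docs = {
        1: "Windows file system tuning",
        2: "Large text files on Windows",
        3: "Text indexing basics",
    }
    index = build_inverted_index(docs)
    print(search_all(index, "windows"))       # {1, 2}
    print(search_all(index, "text windows"))  # {2}
```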

Additionally, integrating natural language processing (NLP) techniques can improve the accuracy and relevance of search results. NLP algorithms can analyze the textual data on a deeper semantic level, understanding concepts, relationships, and context to provide more accurate search outcomes. By leveraging NLP, applications can enhance their capabilities to handle large textual datasets and provide users with more relevant and meaningful information.

In conclusion, implementing efficient search and indexing mechanisms for handling vast amounts of textual information is essential to optimize performance and enhance user experience. By utilizing appropriate data structures, algorithms, and integrating NLP techniques, applications can efficiently search and retrieve relevant information from extensive textual datasets, improving the effectiveness and usability of text-based applications.

Improving Memory Management for Efficiently Handling Large Text-Based Data in the Windows Environment

In this section, we will explore techniques and strategies to enhance the management of system memory with the goal of optimizing the handling and processing of extensive textual data in a Windows operating system environment. By employing effective memory management practices, we can ensure that our system efficiently utilizes available resources and provides improved performance when dealing with large sets of text-based information.

Enhancing Memory Allocation and Deallocation: Efficient allocation and release of memory is a central part of memory management. Allocating buffers once and reusing them, rather than repeatedly allocating and freeing many small objects, and releasing large structures as soon as they are no longer needed, helps minimize memory fragmentation and keeps memory available for large text datasets.

Utilizing Memory-Mapped Files: Memory-mapped files map a file into a process's virtual address space so that it can be read and written as if it were an array in memory. Windows loads only the pages that are actually accessed and can evict them under memory pressure, so an application can work with files much larger than available RAM without loading the entire dataset at once.
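A short Python sketch using the standard `mmap` module, which wraps the operating system's file-mapping facilities: the hypothetical `find_in_file` maps a file read-only and searches it in place. A 64-bit Python is assumed for files of several gigabytes, since the mapping must fit in the process's address space.

```python
import mmap
import os
import tempfile


def find_in_file(path, needle: bytes):
    """Return the byte offset of the first occurrence of needle, or -1.

    The file is mapped read-only; the OS pages in only the parts the
    search actually touches instead of reading the whole file up front.
    """
    if os.path.getsize(path) == 0:
        return -1  # an empty file cannot be memory-mapped
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        return mm.find(needle)


if __name__ == "__main__":
    with tempfile.NamedTemporaryFile("wb", delete=False) as tmp:
        tmp.write(b"filler line\n" * 100_000 + b"ERROR: disk full\n")
    try:
        print(find_in_file(tmp.name, b"ERROR"))  # prints 1200000
    finally:
        os.remove(tmp.name)
```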

Implementing Effective Caching Mechanisms: Caching frequently accessed data can drastically enhance the handling of large textual datasets. By storing frequently used information in a cache, we can reduce the need to retrieve data from slower storage mediums, such as disks, and improve overall processing speed and efficiency.
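One way to sketch application-level caching in Python is to wrap a function that reads fixed-size blocks of a file with `functools.lru_cache`. The hypothetical `make_block_reader` keeps the most recently used blocks in memory; the block and cache sizes are illustrative, and the cache assumes the file does not change while it is in use.

```python
import os
import tempfile
from functools import lru_cache


def make_block_reader(path, block_size=4096, max_blocks=256):
    """Return a function that reads block number i of path, caching recent blocks.

    Repeated reads of the same block are served from the in-process LRU
    cache instead of going back to the file.
    """
    @lru_cache(maxsize=max_blocks)
    def read_block(index):
        # Cache miss: open the file and read exactly one block.
        with open(path, "rb") as f:
            f.seek(index * block_size)
            return f.read(block_size)

    return read_block


if __name__ == "__main__":
    with tempfile.NamedTemporaryFile("wb", delete=False) as tmp:
        tmp.write(b"some text\n" * 10_000)
    try:
        read_block = make_block_reader(tmp.name)
        for i in [0, 1, 0, 0, 2, 1]:
            read_block(i)
        print(read_block.cache_info())
        # CacheInfo(hits=3, misses=3, maxsize=256, currsize=3)
    finally:
        os.remove(tmp.name)
```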

Optimizing String Manipulation: Efficient string handling is crucial with extensive text data. Using optimized algorithms and techniques for operations such as searching, parsing, and concatenation (for example, collecting pieces and joining them once rather than repeatedly appending to a growing string) can significantly improve performance on large text datasets.
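A small Python sketch of the concatenation point, with hypothetical helper names: collect the pieces in a list and call `str.join` once, or write them into an `io.StringIO` buffer when output is produced incrementally. Repeated `result += piece` in a loop can copy the growing string on each step, which becomes quadratic for large inputs; CPython sometimes optimizes this case, but the join pattern does not depend on that.

```python
import io


def normalize_lines(lines):
    """Strip and lowercase non-empty lines, then join them once at the end."""
    parts = [line.strip().lower() for line in lines if line.strip()]
    return "\n".join(parts)


def write_report(lines):
    """Build numbered output incrementally in a StringIO buffer."""
    buf = io.StringIO()
    for i, line in enumerate(lines, start=1):
        buf.write(f"{i}: {line}\n")
    return buf.getvalue()


if __name__ == "__main__":
    raw = ["  First Entry ", "", "SECOND entry", "   "]
    print(normalize_lines(raw))
    print(write_report(["alpha", "beta"]), end="")
```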

Monitoring and Analyzing Memory Usage: Regularly monitoring memory usage, with tools such as Task Manager, Resource Monitor, or Performance Monitor, is critical when optimizing the handling of large text data. Identifying memory leaks, excessive memory consumption, or inefficient allocation patterns early allows these issues to be fixed before they degrade performance and stability.
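Within a Python program, the standard `tracemalloc` module offers a simple way to measure the peak memory of a specific processing step. The hypothetical `peak_memory_of` helper below runs a function while tracing is active and reports the peak. It only counts allocations made through Python's allocator and should not be nested inside another tracemalloc session; system-wide monitoring still belongs to the operating system's own tools.

```python
import tracemalloc


def peak_memory_of(func, *args, **kwargs):
    """Run func(*args, **kwargs); return (result, peak traced bytes during the call).

    Only allocations made through Python's memory allocator are counted.
    """
    tracemalloc.start()
    try:
        result = func(*args, **kwargs)
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak


if __name__ == "__main__":
    text = "word " * 1_000_000
    # split() materializes a list of a million strings; count() allocates almost nothing.
    words, split_peak = peak_memory_of(text.split)
    spaces, count_peak = peak_memory_of(text.count, " ")
    print(f"split(): {len(words)} words, peak {split_peak:,} bytes")
    print(f"count(): {spaces} spaces, peak {count_peak:,} bytes")
```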

Considering Multithreading and Parallel Processing: Leveraging multithreading and parallel processing capabilities can greatly improve the handling of large textual data in Windows. By distributing tasks across multiple threads or utilizing parallel processing techniques, we can effectively utilize available system resources and expedite data processing and analysis.

By implementing these memory management practices and strategies, Windows users can significantly enhance their system's ability to handle and process vast amounts of textual information efficiently. The combination of optimized memory allocation, leveraging memory-mapped files, effective caching mechanisms, efficient string manipulation, monitoring memory usage, and utilizing multithreading and parallel processing can result in substantial performance improvements when working with large text-based datasets in the Windows environment.

Ensuring Data Security and Backup Strategies for Large Textual Data in the Windows Environment

When dealing with vast amounts of textual data in a Windows environment, it is crucial to have robust data security and backup strategies in place to protect valuable information from potential risks and ensure its integrity and availability. This section focuses on exploring various measures and best practices for establishing a secure and reliable data storage and backup system specifically tailored for handling large textual data.

- Implementing Secure Access Control: Establishing appropriate access controls is fundamental in safeguarding sensitive textual data. This involves employing strong passwords, utilizing multi-factor authentication, and defining user roles and permissions to limit unauthorized access to the data. Regularly reviewing and updating access controls is essential to ensure data confidentiality and prevent data breaches.

- Encrypting Data at Rest and in Transit: Encrypting large text data both at rest and in transit is critical to protecting its confidentiality. Robust algorithms and protocols such as AES (Advanced Encryption Standard) and TLS (Transport Layer Security, the successor to the now-deprecated SSL) keep data secure while it is stored on physical drives or transmitted across networks. On Windows, BitLocker provides AES-based full-volume encryption for data at rest.

- Implementing Data Backup Solutions: Having reliable data backup mechanisms in place is essential for safeguarding against data loss due to hardware failures, software glitches, or malicious activities. Regularly backing up large textual data to remote servers, external storage devices, or cloud-based platforms ensures that duplicate copies are readily available in case of emergencies.

- Utilizing Version Control Systems: Version control systems provide an efficient way to manage changes made to large textual data over time. By maintaining a record of different versions and allowing easy rollback to previous states, version control systems enable data recovery in case of accidental modifications, data corruption, or the need to analyze historical data.

- Monitoring and Auditing Data Access: Implementing monitoring and auditing mechanisms enables organizations to track and trace activities related to large textual data. This includes logging access events, monitoring user activities, and conducting regular audits to identify any anomalies or potential security breaches. Such monitoring provides insights into data usage patterns and helps detect any unauthorized or suspicious activities.

- Testing Data Recovery Procedures: Regularly testing data recovery procedures ensures that backup systems and processes are functioning correctly and are capable of restoring large textual data effectively. By validating the integrity and accessibility of backup data, organizations can mitigate the risks associated with data loss and confidently rely on their backup strategies.
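One small, automatable piece of a recovery test is confirming that a backup copy is byte-for-byte identical to its source. The Python sketch below, with hypothetical helper names, copies a file with `shutil.copy2` and compares SHA-256 digests computed in chunks, so even very large text files never need to fit in memory. A full recovery test would also restore from the actual backup location and open the restored data.

```python
import hashlib
import os
import shutil
import tempfile


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large files never need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_and_verify(src, dst):
    """Copy src to dst (with metadata), then confirm both have the same SHA-256."""
    shutil.copy2(src, dst)
    return sha256_of(src) == sha256_of(dst)


if __name__ == "__main__":
    workdir = tempfile.mkdtemp()
    try:
        original = os.path.join(workdir, "corpus.txt")
        with open(original, "w", encoding="utf-8") as f:
            f.write("important text\n" * 10_000)
        print("backup verified:", backup_and_verify(original, original + ".bak"))
    finally:
        shutil.rmtree(workdir)
```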

By implementing comprehensive data security measures and robust backup strategies, organizations can minimize the risks associated with handling large textual data in a Windows environment. These practices provide the foundation for maintaining the confidentiality, integrity, and availability of valuable information, safeguarding against data loss or unauthorized access.

FAQ

What are some techniques for optimizing Windows to handle large textual data?

There are several techniques that can help optimize Windows for handling large textual data. One important technique is to increase the amount of RAM in your system. This can help improve the speed at which Windows can process and manipulate large amounts of text. Another technique is to use a solid-state drive (SSD) instead of a traditional hard drive. SSDs are faster and can help improve the overall performance of your system when dealing with large textual data. Additionally, optimizing your system's virtual memory settings and using compression techniques for large text files can also be beneficial.

Is it necessary to add more RAM when dealing with large textual data in Windows?

Adding more RAM can significantly improve performance with large textual data in Windows. With more RAM, the system can keep more of the data in memory, reducing the need to read repeatedly from the drive, which results in faster processing and smoother data manipulation. It is not always necessary, however: tools that stream through files rather than loading them entirely may run well with modest memory, and the right amount depends on your workload and on how much memory your hardware supports.

Can using a solid-state drive (SSD) help optimize Windows for handling large textual data?

Absolutely, using an SSD can greatly enhance the performance of handling large textual data in Windows. SSDs are known for their faster read and write speeds compared to traditional hard drives. This means that accessing and manipulating large text files can be significantly quicker, allowing for smoother data processing. Upgrading to an SSD can be a worthwhile investment if you frequently work with large textual data on your Windows system.

Are there any specific virtual memory settings that can be adjusted to optimize Windows for handling large textual data?

Yes. If Windows runs short of memory while working with large files, increasing the virtual memory (paging file) size can help. Open System Properties, select the Advanced tab, and click the Settings button under Performance. In the Performance Options window, go to the Advanced tab and click Change under Virtual memory. Clear the "Automatically manage paging file size for all drives" check box, set a custom size for the drive you want, and click Set. A larger paging file raises the amount of memory Windows can commit, but because the paging file lives on disk it is much slower than RAM, so it mainly prevents out-of-memory errors rather than speeding up processing.

Are there any compression techniques that can be used to optimize Windows for handling large textual data?

Yes, compression can be beneficial when dealing with large textual data in Windows. Various lossless compression algorithms and tools can reduce the size of large text files without altering their contents. Compression saves storage space and, when reading from disk is the bottleneck, can also speed up reading and processing because fewer bytes need to be transferred. The trade-off is that compressed data must be decompressed before it can be used, which costs CPU time.