Containerization gives development teams a more efficient way to build and deploy applications. Docker is one of the most widely used tools for it: it lets you create and manage lightweight, portable containers.

Containers package an application together with its dependencies so that it runs consistently across environments, which removes most compatibility problems and complex installation steps. With Docker, you can encapsulate your command line application, complete with all its necessary libraries and components, into a portable container that can be deployed on any Windows system where Docker is installed.

Why limit yourself to traditional application development methods when you can leverage the power of Docker to streamline your workflow? By containerizing your command line application, you can achieve better scalability, enhanced security, and simplified deployment. Say goodbye to the tedious process of setting up development environments and debugging compatibility issues. With Docker, you can focus on what really matters - developing your application and delivering value to your users.

Understanding Docker and its Advantages

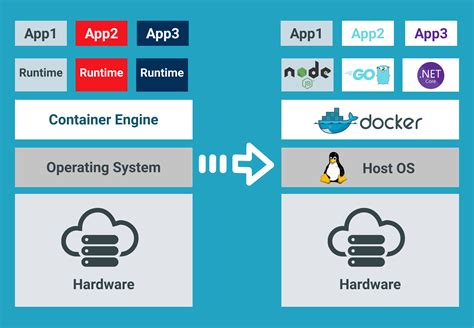

Docker is a powerful open-source platform that enables developers to automate the deployment and management of applications within containers. These containers provide a lightweight and efficient way to package software, its dependencies, and configuration, ensuring consistent behavior across different environments.

The benefits of using Docker in software development are numerous. Firstly, Docker allows developers to create isolated containers that encapsulate an application and all its dependencies. This isolation eliminates conflicts between different software components and makes it easier to manage dependencies, resulting in more reliable and reproducible builds.

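Another advantage of Docker is its portability. A container image runs the same way on any host that provides a compatible Docker engine. One caveat applies: containers share the host kernel, so Linux containers need a Linux kernel and Windows containers need a Windows host. On Windows, Docker Desktop runs Linux containers inside a lightweight virtual machine (through WSL 2 or Hyper-V), which is why most Linux images work there without modification.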

Docker also promotes scalability and resource efficiency. With Docker, developers can quickly create and deploy multiple instances of an application, known as containers, to efficiently utilize their resources. This flexibility allows for rapid scaling up or down, depending on application demand, reducing costs and improving performance.

Moreover, Docker simplifies the deployment process by providing a consistent environment across different stages of the software development lifecycle. By using the same container in development, testing, and production environments, developers can ensure that the application behaves consistently, minimizing the chances of unexpected issues arising when moving from one environment to another.

| Benefits of Docker |
|---|
| Isolation of applications and dependencies |
| Portability across different platforms |
| Scalability and resource efficiency |
| Consistent environment throughout the development lifecycle |

In conclusion, understanding Docker and its benefits can greatly enhance the development and deployment of command line applications. By leveraging Docker's isolation, portability, scalability, and consistency, developers can streamline their workflows, reduce conflicts, and improve the overall quality and reliability of their applications.

Installing Docker on a Windows Machine

In this section, we will guide you through the process of installing Docker on your Windows operating system. By installing Docker, you will gain the ability to efficiently run and manage containerized applications, thereby enhancing the scalability and flexibility of your software projects.

- Firstly, navigate to the official Docker website and download the Docker Desktop installer for Windows.

- Once the installer is downloaded, double-click it to start the installation process.

- Follow the on-screen instructions to proceed with the installation. Make sure to review and agree to the license terms.

- During the installation, Docker may prompt you to enable certain features, such as the Windows Subsystem for Linux 2 (WSL 2) backend or Hyper-V virtualization. Enable these features as instructed; a restart may be required.

- After the installation is complete, launch Docker Desktop from the Start menu. While it is running, the Docker icon appears in the Windows system tray.

- Upon launching Docker Desktop, it may take a few moments to initialize and start. Be patient while the necessary services are being set up.

- Once Docker is up and running, verify the installation by opening a Command Prompt or PowerShell window and running `docker version`. The output should list both a client and a server (engine) section; if the server section is missing or shows a connection error, the engine is not running yet.
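The verification step can also be scripted. The following is a minimal sketch, not an official check: the function names are made up for illustration, and it assumes that a non-zero exit code from `docker version` means the engine could not be reached.

```python
import shutil
import subprocess


def docker_version_command() -> list:
    """Return the argument list for `docker version`."""
    return ["docker", "version"]


def check_docker() -> str:
    """Report whether the docker CLI is on PATH and the engine responds."""
    if shutil.which("docker") is None:
        return "docker CLI not found on PATH"
    try:
        result = subprocess.run(
            docker_version_command(),
            capture_output=True,
            text=True,
            timeout=30,
        )
    except subprocess.TimeoutExpired:
        return "docker CLI found, but the command timed out"
    # Assumption: a non-zero exit code means the client is installed but
    # cannot reach the engine (for example, Docker Desktop is not running).
    if result.returncode != 0:
        return "docker CLI found, but the engine did not respond"
    return "docker client and engine are both responding"


if __name__ == "__main__":
    print(check_docker())
```

Running the script from PowerShell prints one of the three status messages, which is usually enough to tell a missing installation apart from an engine that has not started yet.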

Now that you have successfully installed Docker on your Windows system, you are ready to proceed with setting up your Dockerized command line application. The next section will cover the necessary steps for configuring and running your application within a Docker container.

Configuring Docker on a Windows Environment

When it comes to utilizing Docker for your applications in a Windows environment, proper configuration is crucial for achieving optimal performance and seamless integration. This section explores the necessary steps to set up and configure Docker on your Windows system, enabling you to leverage the power of containerization for your command line application.

- Step 1: Install Docker Desktop

- Step 2: Verify System Requirements

- Step 3: Configure Docker Settings

- Step 4: Adjust Networking Configuration

- Step 5: Manage Docker Images

- Step 6: Set Up Docker Volumes

- Step 7: Customize Container Resources

- Step 8: Utilize Docker Compose for Seamless Deployment

First and foremost, you need to install Docker Desktop on your Windows machine. Once installed, you should verify that your system meets the necessary requirements for running Docker, ensuring compatibility and smooth operation of your command line application. After installation and verification, you can proceed with configuring Docker settings to optimize resource usage and tailor Docker to your specific needs.

Additionally, adjusting networking configuration is essential for allowing seamless communication between your command line application running in Docker containers and other network resources. Managing Docker images is another crucial aspect, as it enables you to pull and push container images from various repositories, facilitating easy deployment and scalability.

Furthermore, setting up Docker volumes allows you to persist data generated by your command line application, ensuring that important information is retained even after containers are removed or recreated. Customizing container resources, such as CPU and memory allocations, can provide better performance and resource utilization for your application within Docker.

Lastly, utilizing Docker Compose, a tool for defining and managing multiple containers, brings convenience and streamlines your deployment process. By utilizing a YAML file to describe the containers in your application, you can easily manage dependencies and configurations, simplifying the deployment process and minimizing potential errors.

Creating a Dockerfile for the Command Line Program

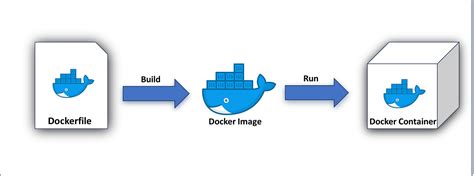

In this section, we will explore the process of generating a custom Dockerfile configuration for our command line program. The Dockerfile serves as a blueprint for building a Docker image, encapsulating all the necessary dependencies, configurations, and settings required to run our application efficiently within a Docker container.

To begin, we will outline the essential steps involved in creating a Dockerfile. This process includes defining the base image, copying the application code, setting up environment variables, installing dependencies and packages, executing desired commands, and exposing necessary ports. Through this systematic approach, we can ensure an optimized and reproducible setup for our command line program.

We will also discuss the various directives and instructions provided by Docker that can be utilized in the Dockerfile to fine-tune our application's deployment. These directives, such as RUN, COPY, ENV, EXPOSE, and ENTRYPOINT, allow us to automate the installation and configuration process, making it more efficient and scalable.

In addition, we will explore best practices for structuring and organizing the Dockerfile to maintain clarity and readability. This includes techniques like leveraging multi-stage builds, utilizing build arguments, and properly handling caching mechanisms to achieve faster build times and reduce the size of the resulting Docker image.

By following the guidelines and examples provided in this section, you will be equipped with the knowledge and skills required to create a Dockerfile tailored specifically for your command line application. This will enable you to package and deploy your application effortlessly, ensuring consistent and reliable execution across different environments.
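To make the instructions named above concrete, here is a minimal sketch that writes a small Dockerfile to disk. Everything specific in it is an assumption for illustration: the application is taken to be a Python script called `cli.py` with a `requirements.txt` next to it, and the base image tag and the `APP_ENV` variable are placeholders rather than recommendations.

```python
import tempfile
from pathlib import Path

# Hypothetical project layout: cli.py and requirements.txt in the build context.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
# Copy the dependency list first so this layer stays cached until it changes.
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
# Copy the application code last, because it changes most often.
COPY . .
ENV APP_ENV=production
ENTRYPOINT ["python", "cli.py"]
"""


def write_dockerfile(directory: Path) -> Path:
    """Write the Dockerfile into the given directory and return its path."""
    target = directory / "Dockerfile"
    target.write_text(DOCKERFILE, encoding="utf-8")
    return target


if __name__ == "__main__":
    # Demo only: write into a throwaway directory and show the result.
    demo_dir = Path(tempfile.mkdtemp())
    print(write_dockerfile(demo_dir).read_text(encoding="utf-8"))
```

A command line tool normally has no need for `EXPOSE`, so the sketch leaves it out. Using `ENTRYPOINT` in exec form means any arguments given after the image name on `docker run` are passed straight to the program.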

Creating the Docker Image

In this section, we will explore the process of constructing the Docker image for our application. We will delve into the steps required to build a self-contained environment that encapsulates all the necessary dependencies and configurations of our command line application. By containerizing our application with Docker, we ensure its portability, enabling it to run consistently across various operating systems.

We will start by defining and configuring the base image, which serves as the foundation for our Docker image. Next, we will explore strategies to optimize the Docker build process, such as leveraging caching mechanisms to avoid unnecessary reinstallation of dependencies. Additionally, we will learn how to include the required files and directories in our image, ensuring that our application has access to all the necessary resources.

Throughout the process, we will showcase best practices in Dockerfile composition and discuss strategies to minimize the overall image size. This includes utilizing multi-stage builds, where intermediate images are used to discard unnecessary artifacts, resulting in a smaller final image. Furthermore, we will cover techniques for efficient Docker image layering, ensuring that subsequent deployment and updates are as streamlined as possible.

By the end of this section, you will have a clear understanding of how to create a Docker image that encapsulates your command line application, ready to be deployed to any Docker environment. You will possess the knowledge to optimize the building process and ensure a lightweight, efficient, and portable Docker image.
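As a rough illustration of the build step, the sketch below assembles a `docker build` command line and runs it. The tag `mycli:1.0` and the helper names are hypothetical; the `--tag` and `--no-cache` flags are standard `docker build` options.

```python
import subprocess


def build_command(tag: str, context: str = ".", no_cache: bool = False) -> list:
    """Assemble the argument list for `docker build`."""
    cmd = ["docker", "build", "--tag", tag]
    if no_cache:
        # Ignore cached layers and rebuild every step from scratch.
        cmd.append("--no-cache")
    # The build context (the directory holding the Dockerfile) comes last.
    cmd.append(context)
    return cmd


def build_image(tag: str, context: str = ".") -> None:
    """Run the build; raises CalledProcessError if docker reports a failure."""
    subprocess.run(build_command(tag, context), check=True)


if __name__ == "__main__":
    # Hypothetical tag; prints the command instead of running it.
    print(" ".join(build_command("mycli:1.0")))
```

Keeping command construction separate from execution makes it easy to log or review the exact command before anything is built.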

Running the Docker Container

In this section, we will explore the steps involved in launching and executing the Docker container for our application. By following these instructions, you will be able to effortlessly initiate and run the containerized version of the application on your system.

Firstly, you will need to ensure that you have Docker installed and properly configured on your host machine. Next, make sure the image of the application is available locally, either by building it as described in the previous section or by pulling it from a registry with `docker pull`. With the image in place, start a container from it using the `docker run` command.

As the container starts, it creates an isolated environment that contains the dependencies and resources the application needs. The application's standard output and error streams appear in your terminal or command prompt; to check a container's status or resource usage, use `docker ps` and `docker stats` from a second window.

When the Docker container is up and running, you can interact with the application via command line prompts or by accessing any relevant endpoints through your web browser. The container effectively provides a portable and self-contained environment for executing the application, enabling consistent behavior across different systems and eliminating any potential compatibility issues.

Furthermore, you have the flexibility to customize the container's runtime behavior by utilizing various Docker flags and commands. These options allow you to fine-tune aspects such as resource allocation, networking configurations, and persistent data storage, among others, to suit your specific requirements.

Finally, when you have completed using the Docker container, you can gracefully stop and remove it from your system. This step ensures proper resource management and prevents any unnecessary overhead.

| Key Points |
|---|
| Launch the Docker container using the `docker run` command |
| Interact with the application through command line or web access |
| Customize the container's runtime behavior using Docker flags and commands |
| Stop and remove the container when no longer needed |
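The key points above can be sketched as a small helper that assembles a `docker run` command line with optional resource limits. The image name `mycli:1.0` and the function names are hypothetical; `--rm`, `--cpus`, and `--memory` are standard `docker run` flags.

```python
import subprocess


def run_command(image, app_args=(), cpus=None, memory=None, remove=True) -> list:
    """Assemble the argument list for `docker run`."""
    cmd = ["docker", "run"]
    if remove:
        # Delete the container automatically once the program exits.
        cmd.append("--rm")
    if cpus is not None:
        cmd += ["--cpus", str(cpus)]
    if memory is not None:
        cmd += ["--memory", memory]
    cmd.append(image)
    # Everything after the image name is passed to the containerized program.
    cmd += list(app_args)
    return cmd


def run_container(image, app_args=()) -> int:
    """Run the container and return the exit code of the program inside it."""
    return subprocess.run(run_command(image, app_args)).returncode


if __name__ == "__main__":
    # Hypothetical image and arguments; prints the command instead of running it.
    print(" ".join(run_command("mycli:1.0", ["--help"], cpus=1.5, memory="512m")))
```

With `--rm`, stopping and removing the container is a single step: once the command line program finishes, Docker cleans the container up on its own.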

Ensuring Persistent Data Storage with Mounted Volumes

In this section, we will explore the importance of mounting volumes for data persistence in our Dockerized command line application. Storing and preserving data securely is crucial for any application, and by utilizing mounted volumes, we can ensure that our data remains intact even when the application is stopped or updated.

Preserving Data Integrity

When working with a Dockerized application, it's essential to consider how to handle data storage to prevent any loss or corruption. Instead of relying on the container's writable layer, which survives a stop and start but is discarded when the container is removed or recreated from a new image, we can mount external volumes to store our data. These volumes act as a persistent storage solution, allowing us to retain data between container sessions and even across different containers.

Implementing Volume Mounting

To set up volume mounting in our Dockerized command line application, we can specify the paths we want to map using the appropriate Docker commands or configurations. By defining the source and destination directories, we establish a connection between the container and the host machine, enabling the application to read from and write to the mounted volume. This ensures that our data remains accessible even if the container is destroyed or rebuilt.
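A minimal sketch of the source-to-destination mapping, using the `--mount` syntax of `docker run`. The image name, the `/data` target, and the helper names are assumptions for illustration. The sketch also assumes the host path contains no commas, since `--mount` takes a comma-separated list of fields.

```python
from pathlib import Path


def bind_mount_spec(host_dir: Path, container_dir: str, read_only: bool = False) -> str:
    """Build the value for `--mount` that maps a host directory into the container."""
    # resolve() turns a relative path into the absolute path that Docker expects.
    spec = f"type=bind,source={host_dir.resolve()},target={container_dir}"
    if read_only:
        spec += ",readonly"
    return spec


def run_with_mount(image: str, host_dir: Path, container_dir: str = "/data") -> list:
    """Assemble `docker run` with one bind mount."""
    # Note: with --mount, the host directory must already exist.
    return [
        "docker", "run", "--rm",
        "--mount", bind_mount_spec(host_dir, container_dir),
        image,
    ]


if __name__ == "__main__":
    # Hypothetical image name; prints the command instead of running it.
    print(run_with_mount("mycli:1.0", Path("data")))
```

Files the application writes under `/data` then land in the host directory and remain there after the container is removed.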

Advantages of Data Persistence

By utilizing mounted volumes for data storage, we enjoy several benefits. Firstly, we achieve the flexibility to mount volumes from different locations, enabling easy access to external data sources or databases. Additionally, data persistence allows for easier debugging and testing, as we can examine the contents of the mounted volume outside of the application container.

Moreover, with data remaining intact even when the container is rebuilt or upgraded, we reduce the risk of data loss and maintain business continuity. This is especially crucial when dealing with important user files or application configurations.

Conclusion

Implementing mounted volumes in our Dockerized command line application ensures data persistence and integrity, providing a robust solution for storing and accessing important information. By understanding the advantages of using mounted volumes and how to implement them, we can create a reliable and efficient environment for our application that guarantees our data remains secure and accessible.

Managing Multiple Docker Containers

In this section, we will explore strategies for efficiently managing multiple Docker containers within a Windows environment. The ability to effectively handle a multitude of containers is essential for scalability and resource optimization purposes, ensuring that your applications run smoothly and efficiently.

One approach to managing multiple Docker containers is through the use of container orchestration tools such as Docker Compose or Kubernetes. These tools provide a higher level of abstraction that allows you to define multi-container applications, including their dependencies and the desired configuration, in a declarative manner. Using these tools, you can easily scale your application by defining and managing groups of containers, known as services, and orchestrating their deployment and scaling.

Another important aspect when managing multiple containers is ensuring effective communication and coordination among them. This can be achieved using Docker's container networking features. On a user-defined bridge network, Docker provides built-in DNS, so containers attached to the same network can reach each other using container names as hostnames. On the default bridge network, containers can only reach each other by IP address. Containers on the same network can talk to each other on any port the application listens on; port mapping is only needed to publish a container port to the host or to clients outside Docker.
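As an illustration of the networking setup, the sketch below assembles the commands that create a user-defined network and attach containers to it. The network name, container names, and image names are all hypothetical.

```python
def network_commands(network: str, containers: dict) -> list:
    """Assemble commands that create a network and start containers on it.

    `containers` maps a container name to the image it should run.
    """
    commands = [["docker", "network", "create", network]]
    for name, image in containers.items():
        commands.append([
            "docker", "run", "--detach",
            "--name", name,        # the name other containers use as a hostname
            "--network", network,  # attach to the user-defined network
            image,
        ])
    return commands


if __name__ == "__main__":
    # Hypothetical names; prints the commands instead of running them.
    for cmd in network_commands("cli-net", {"api": "example/api:1.0", "worker": "example/worker:1.0"}):
        print(" ".join(cmd))
```

With this setup, the `worker` container could reach the other one at the hostname `api` without any published ports.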

Monitoring and managing the health and performance of multiple Docker containers is also crucial. Docker itself ships basic tooling such as `docker stats` and container health checks, and third-party tools such as cAdvisor and Prometheus can collect and analyze metrics related to CPU, memory, and network usage over time. These tools can help identify potential bottlenecks or issues within your containerized applications, allowing you to take necessary actions to optimize performance and troubleshoot problems.
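For a quick look at resource usage without extra tooling, the output of `docker stats` can be parsed directly. This is a rough sketch: the tab-separated format string is one possible choice, the helper names are made up, and the sample values in the demo are invented for illustration rather than real measurements.

```python
import subprocess

# One snapshot (no live stream), three tab-separated columns per container.
STATS_COMMAND = [
    "docker", "stats", "--no-stream",
    "--format", "{{.Name}}\t{{.CPUPerc}}\t{{.MemUsage}}",
]


def parse_stats(output: str) -> list:
    """Turn the tab-separated output into a list of dictionaries."""
    rows = []
    for line in output.splitlines():
        parts = line.split("\t")
        if len(parts) != 3:
            continue  # skip blank or unexpected lines
        name, cpu, memory = parts
        try:
            cpu_percent = float(cpu.rstrip("%"))
        except ValueError:
            cpu_percent = None  # value was not a plain percentage
        rows.append({"name": name, "cpu_percent": cpu_percent, "memory": memory})
    return rows


def collect_stats() -> list:
    """Run `docker stats` once and parse what it prints."""
    result = subprocess.run(STATS_COMMAND, capture_output=True, text=True, check=True)
    return parse_stats(result.stdout)


if __name__ == "__main__":
    # Invented sample input, so the demo works without a running engine.
    print(parse_stats("api\t0.07%\t12.5MiB / 7.6GiB\n"))
```

For anything beyond an occasional spot check, a dedicated collector such as cAdvisor is likely a better fit than parsing CLI output.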

Lastly, it is essential to implement effective container lifecycle management strategies when dealing with multiple containers. This includes defining restart policies to ensure containers are automatically restarted in case of failure, keeping track of container logs for debugging purposes, and regularly updating and patching container images to incorporate the latest security fixes and enhancements.
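Restart policies and log access map onto two standard CLI features: the `--restart` flag of `docker run` and the `docker logs` command. The sketch below assembles both; the image name, container name, and retry count are placeholder values.

```python
def run_with_restart(image: str, name: str, max_retries: int = 3) -> list:
    """Assemble `docker run` with an on-failure restart policy."""
    # --restart cannot be combined with --rm, so --rm is deliberately left out.
    return [
        "docker", "run", "--detach",
        "--name", name,
        "--restart", f"on-failure:{max_retries}",
        image,
    ]


def tail_logs(name: str, lines: int = 50) -> list:
    """Assemble `docker logs` limited to the most recent lines."""
    return ["docker", "logs", "--tail", str(lines), name]


if __name__ == "__main__":
    # Hypothetical names; prints the commands instead of running them.
    print(" ".join(run_with_restart("mycli:1.0", "mycli-worker")))
    print(" ".join(tail_logs("mycli-worker")))
```

The `on-failure` policy restarts the container only when it exits with a non-zero code, which suits batch-style command line jobs better than `always`.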

- Utilize container orchestration tools like Docker Compose or Kubernetes

- Create custom networks and use port mapping for effective communication among containers

- Monitor and manage container health and performance using tools like Prometheus and cAdvisor

- Implement container lifecycle management strategies, including restart policies and regular updates

By employing these strategies and tools, you can effectively manage multiple Docker containers in a Windows environment, ensuring the scalability, reliability, and performance of your containerized applications.

Troubleshooting Common Issues

When encountering technical problems while working with a Dockerized CLI application on the Windows operating system, it is essential to be prepared to troubleshoot common issues that may arise. This section provides guidance on identifying and resolving these challenges.

1. Error Messages and Logs: One of the first steps in troubleshooting is to carefully analyze any error messages or logs that are generated. These messages often contain valuable information about the nature of the problem and help in understanding the underlying issue.

2. Environmental Factors: It is important to consider the various environmental factors that might impact the functioning of the application. This includes examining the system dependencies, configurations, network settings, and any other relevant aspects that could be influencing the behavior of the application.

3. Version Compatibility: Another common issue is compatibility conflicts between different software versions. It is crucial to ensure that all the components required for the application, such as the operating system, Docker engine, and any specific dependencies, are compatible and updated to the appropriate versions.

4. Resource Limitations: Insufficient system resources, such as memory or CPU capacity, can lead to problems when running the application. Monitoring and managing resource usage can help identify and resolve such issues, potentially by adjusting resource allocation or optimizing the application's performance.

5. Network Connectivity: Network-related issues can also cause problems while working with a Dockerized CLI application. Verifying network connectivity, checking firewall settings, and ensuring proper configuration of network-related components can aid in resolving these difficulties.

6. Configuration Errors: Misconfiguration of application settings, environment variables, or Docker container configurations can result in unexpected behavior. Reviewing and validating the configuration parameters can help pinpoint and rectify such issues.

7. Community Resources: Lastly, when encountering persistent difficulties, seeking assistance from online communities, forums, or official documentation related to the specific technology or framework used in the Dockerized CLI application can often provide valuable insights and potential solutions.
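When reading error output, the exit code of `docker run` is a useful first clue. The sketch below maps the codes that Docker reserves (125, 126, and 127) and the common signal-based codes to short hints. The wording of the hints and the function name are this example's own, not Docker's.

```python
EXIT_HINTS = {
    0: "the program finished successfully",
    125: "the docker command itself failed (for example, an unknown flag)",
    126: "the container command was found but could not be invoked",
    127: "the container command was not found",
    137: "killed by SIGKILL (128 + 9), often an out-of-memory kill or a forced stop",
}


def explain_exit_code(code: int) -> str:
    """Return a short hint for an exit code reported by `docker run`."""
    if code in EXIT_HINTS:
        return EXIT_HINTS[code]
    if code > 128:
        # By shell convention, 128 + N means the process died from signal N.
        return f"terminated by signal {code - 128}"
    return "application-specific error; check the container logs"


if __name__ == "__main__":
    for code in (0, 1, 127, 137, 143):
        print(code, "->", explain_exit_code(code))
```

A code of 137 on a container started with a memory limit is a good reason to revisit item 4 above, while 126 and 127 usually point to a wrong `ENTRYPOINT` or a missing executable in the image.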

In conclusion, troubleshooting common issues when working with a Dockerized CLI application on Windows requires a systematic approach and attention to detail. By carefully analyzing error messages, considering the environment and compatibility factors, addressing potential resource limitations and network connectivity problems, reviewing and validating configurations, and leveraging community resources, many challenges can be resolved effectively.

FAQ

What is Docker?

Docker is an open-source platform that allows developers to automate the deployment and running of applications in lightweight containers.

Why should I use Docker for my command line application?

Using Docker for your command line application provides a number of benefits such as easy deployment, portability, isolation, and scalability. It allows you to bundle all the dependencies and configurations of your application into a container, making it consistent across different environments.