Operating and scaling virtualized infrastructure can be a daunting task, especially when it hosts many instances of different applications. Containerization makes those applications easier to deploy and manage on a Linux-based web hosting environment.

Containerization lets administrators package each application in its own container, isolated from the others, while still allocating resources efficiently and keeping maintenance simple. Containers share the host's kernel instead of each booting a full guest operating system. This makes them a lightweight alternative to traditional virtual machines: they use less memory and disk, start much faster, and make it quick and repeatable to deploy many applications side by side.

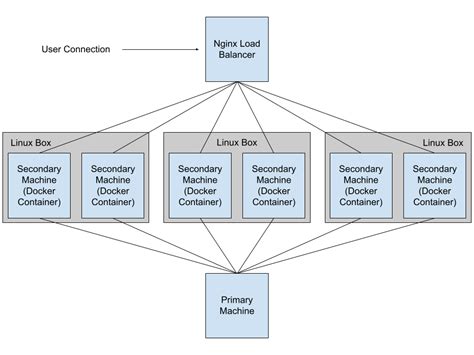

Container orchestrators such as Kubernetes or Docker Swarm let administrators coordinate and manage these application instances effectively. They provide horizontal scaling, load balancing, and automatic replacement of failed containers, which helps keep services available to end users. They improve availability rather than guarantee it, especially when every instance runs on the same physical server.

Containerization also fits naturally into continuous integration and continuous deployment (CI/CD) pipelines. A pipeline can build an image once, push it to a container registry, and deploy that same image to testing and production. Every stage then runs identical software, and development and operations teams work from a shared artifact.

In summary, containerization changes how multiple application instances are managed and scaled on Linux-based web hosting infrastructure. Combined with a container orchestrator, it helps administrators allocate resources efficiently, deploy applications reliably, and streamline collaboration between development and operations teams.

Understanding Docker and Containerization

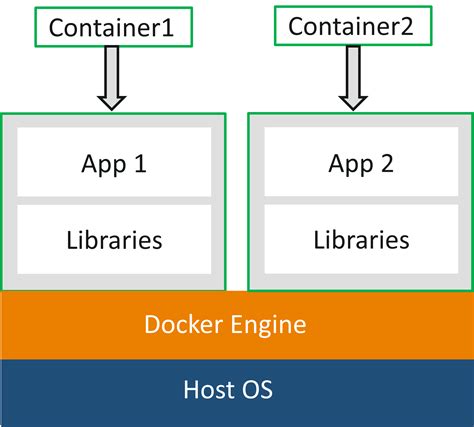

This section introduces the core concepts behind Docker and containerization and the benefits they bring to modern web development. Understanding the underlying mechanisms and key terminology makes it clearer how Docker deploys and manages applications in isolated environments without relying on traditional virtualization.

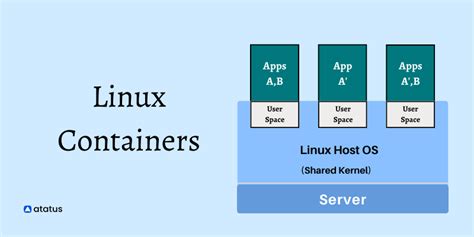

Containerization changes how software is packaged and distributed. Developers bundle an application and its dependencies into a lightweight, portable unit called a container. Each container provides an isolated runtime environment, so the application behaves consistently across machines, provided the host has a compatible kernel and CPU architecture. For example, a Linux image built for x86-64 needs a Linux x86-64 host or emulation.

With containerization technologies like Docker, organizations can deploy complex web applications with greater scalability, efficiency, and flexibility. Each application, and each of its microservices, carries its own dependencies inside its container. As a result, several applications can run on a single server without conflicting over library or runtime versions.

Containerization also improves resource utilization. Containers share the host system's kernel, so they avoid the overhead of running a separate guest operating system per application, as traditional virtual machines do. This allows more application instances to run on a given server and makes fuller use of its hardware.

In summary, understanding Docker and containerization empowers developers and system administrators to optimize the deployment, management, and scalability of web applications. By adopting containerization practices, organizations can harness the benefits of increased efficiency, portability, and flexibility, leading to faster development cycles, seamless deployment pipelines, and improved overall operations.

Advantages of Utilizing Multiple Containers on a Single Web Host

It is increasingly common for developers and system administrators to run multiple containers on a single server. This approach offers several benefits for deploying and managing web applications:

- Resource Isolation: Multiple containers let you compartmentalize the components of a web application so they do not interfere with one another. Each container has its own filesystem and dependencies, which prevents conflicts between software versions. CPU and memory limits also keep one component from starving the others of resources.

- Improved Scalability: Each component can be scaled independently by running more or fewer replicas of its container, based on that component's own load. This allows better resource allocation and cost optimization, although on a single host all replicas still share that host's CPU and memory.

- Enhanced Fault Tolerance: Splitting critical components across multiple containers means a crash in one container does not take down the others. The remaining containers can keep serving requests, which limits the impact on end users. The server itself, however, remains a single point of failure: if the host goes down, every container on it goes down too.

- Simplified Development and Testing: Running multiple containers on a single server can simplify the development and testing process. Each container can represent a specific component of the application or a different environment, enabling developers to isolate and test individual functionalities more effectively.

- Easy Deployment and Rollbacks: Containers facilitate easy deployment and rollback procedures. By packaging different components and dependencies within containers, deployment becomes consistent and reproducible. Furthermore, rolling back to a previous version can be achieved by spinning up containers with the previous configuration, reducing downtime and simplifying the rollback process.
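The rollback point can be illustrated with a small Python sketch. It keeps a history of deployed image tags and builds the `docker run` command for the previous version. The `DeploymentHistory` class, the image `example/web`, and the container name `web` are hypothetical, and the sketch only builds argument lists without calling Docker.

```python
from dataclasses import dataclass, field


@dataclass
class DeploymentHistory:
    """Hypothetical record of the image tags deployed for one service, oldest first."""

    image: str
    container_name: str = "web"
    tags: list[str] = field(default_factory=list)

    def deploy(self, tag: str) -> list[str]:
        # Record the new tag and return the command that would start it.
        self.tags.append(tag)
        return self._run_command(tag)

    def rollback(self) -> list[str]:
        # Forget the current tag and return the command for the one before it.
        if len(self.tags) < 2:
            raise RuntimeError("no previous version to roll back to")
        self.tags.pop()
        return self._run_command(self.tags[-1])

    def _run_command(self, tag: str) -> list[str]:
        # Only the image tag changes between versions; everything else stays fixed.
        return ["docker", "run", "-d", "--name", self.container_name, f"{self.image}:{tag}"]


history = DeploymentHistory("example/web")
history.deploy("1.4.0")
history.deploy("1.5.0")
print(history.rollback())
# ['docker', 'run', '-d', '--name', 'web', 'example/web:1.4.0']
```

Docker container names must be unique. The old container would therefore have to be stopped and removed (for example with `docker rm -f web`) before the rollback command can reuse the name.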

Running multiple containers on a single web host can significantly improve the deployment, management, and overall efficiency of web applications. Containerization lets developers and system administrators optimize resource utilization, scale components independently, contain failures within individual containers, simplify development and testing, and streamline deployment and rollback procedures.

Configuring and Managing Containers in a Linux Environment

When it comes to efficiently managing and configuring your containers in a Linux environment, there are several essential aspects to consider. This section aims to provide you with an overview of the best practices and techniques for setting up and controlling your containers, ensuring seamless operations and optimal performance.

Container Configuration:

Configuration is one of the most important parts of managing containers. It means defining each container's resource limits, networking, storage, and security settings so that containers run predictably alongside one another. Container orchestration tools can automate and streamline this process, improving scalability and flexibility.
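To illustrate treating configuration as data, the Python sketch below describes each container's settings in one place and turns them into a `docker run` command. The command includes `--cpus` and `--memory` limits and a restart policy. The `ContainerSpec` class, `check_cpu_budget`, and the example values are assumptions for illustration, not part of Docker.

```python
from dataclasses import dataclass, field


@dataclass
class ContainerSpec:
    """Hypothetical description of one container's settings."""

    name: str
    image: str
    cpus: float  # ceiling passed to --cpus, in host CPUs
    memory: str  # hard limit passed to --memory, e.g. "512m"
    env: dict[str, str] = field(default_factory=dict)
    restart: str = "unless-stopped"

    def to_run_args(self) -> list[str]:
        args = [
            "docker", "run", "-d", "--name", self.name,
            "--cpus", str(self.cpus),
            "--memory", self.memory,
            "--restart", self.restart,
        ]
        # One -e flag per environment variable, sorted for a stable order.
        for key in sorted(self.env):
            args += ["-e", f"{key}={self.env[key]}"]
        args.append(self.image)
        return args


def check_cpu_budget(specs: list[ContainerSpec], host_cpus: int) -> None:
    """Policy check for this sketch: refuse CPU ceilings that add up past the host."""
    total = sum(spec.cpus for spec in specs)
    if total > host_cpus:
        raise ValueError(f"CPU limits add up to {total}, but the host has {host_cpus}")


web = ContainerSpec("web", "nginx:1.25", cpus=1.5, memory="512m", env={"TZ": "UTC"})
check_cpu_budget([web], host_cpus=4)
print(" ".join(web.to_run_args()))
# docker run -d --name web --cpus 1.5 --memory 512m --restart unless-stopped -e TZ=UTC nginx:1.25
```

Docker itself accepts CPU ceilings that add up to more than the host provides, because `--cpus` caps a container rather than reserving capacity. The budget check is a conservative policy chosen for this sketch.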

Network Configuration:

Networking enables communication between containers and with external resources. Key techniques include placing related containers on a shared bridge network and publishing container ports to the host with port mappings. When containers span several hosts, overlay networks (for example, in Docker Swarm) connect them.
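The following Python sketch plans a user-defined bridge network and the containers attached to it, and refuses to publish the same host port twice. On a user-defined bridge network, containers can reach each other by container name, so only containers that must be reachable from outside need port mappings. The `plan_network` helper and the service names are hypothetical, and the sketch builds commands without running them.

```python
Ports = list[tuple[int, int]]  # (host_port, container_port) pairs


def plan_network(network: str, services: dict[str, tuple[str, Ports]]) -> list[list[str]]:
    """Build commands for a user-defined bridge network and the containers on it."""
    commands = [["docker", "network", "create", "--driver", "bridge", network]]
    published: dict[int, str] = {}  # host port -> container that owns it
    for name, (image, ports) in services.items():
        args = ["docker", "run", "-d", "--name", name, "--network", network]
        for host_port, container_port in ports:
            # Only one container can publish a given host port.
            if host_port in published:
                raise ValueError(
                    f"host port {host_port} is already published by {published[host_port]}"
                )
            published[host_port] = name
            args += ["-p", f"{host_port}:{container_port}"]
        args.append(image)
        commands.append(args)
    return commands


for cmd in plan_network("app-net", {
    "web": ("nginx:1.25", [(8080, 80)]),
    "cache": ("redis:7", []),  # reachable from web as "cache"; no host port
}):
    print(" ".join(cmd))
```

The port check only sees ports declared in this plan. It does not detect ports already taken by other processes on the host, and it does not distinguish bind addresses such as `127.0.0.1:8080`.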

Storage Management:

Proper storage management is critical when running multiple containers concurrently. Data written to a container's own writable layer is deleted when the container is removed, so anything that must persist belongs in a volume or bind mount. Choosing suitable storage drivers and mount types helps ensure data persistence and efficient resource utilization, and makes container scaling and migration easier.
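To show the two mount types side by side, this Python sketch builds `--mount` flags for a named volume and a read-only bind mount. The volume name, host path, container paths, and the image `example/app:1.0` are hypothetical.

```python
def mount_arg(kind: str, source: str, target: str, read_only: bool = False) -> list[str]:
    """Return a --mount flag for a named volume or a bind mount."""
    if kind not in ("volume", "bind"):
        raise ValueError(f"unsupported mount type: {kind}")
    if kind == "bind" and not source.startswith("/"):
        raise ValueError("bind mount sources must be absolute host paths")
    if not target.startswith("/"):
        raise ValueError("mount targets must be absolute paths inside the container")
    spec = f"type={kind},source={source},target={target}"
    if read_only:
        spec += ",readonly"
    return ["--mount", spec]


args = [
    "docker", "run", "-d", "--name", "app",
    # Named volume: managed by Docker and kept when the container is removed.
    *mount_arg("volume", "app-uploads", "/var/www/uploads"),
    # Bind mount: a host directory, mounted read-only so the app cannot change it.
    *mount_arg("bind", "/srv/app/config", "/etc/app", read_only=True),
    "example/app:1.0",
]
print(" ".join(args))
```

A named volume survives `docker rm` and can be attached to a replacement container. A bind mount ties the container to a specific host directory. Unlike `-v`, `--mount type=bind` reports an error instead of creating a missing host directory.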

Monitoring and Logging:

Monitoring and logging play a vital role in container management. Implementing appropriate logging mechanisms and leveraging monitoring tools enable you to track container performance, identify potential bottlenecks, and ensure effective troubleshooting. This proactive approach allows you to optimize resource allocation and enhance overall system stability.
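One lightweight way to track container performance on a single host is to parse the output of `docker stats --no-stream` with a custom `--format` template. The Python sketch below does this and flags containers above a CPU or memory threshold. The 80% thresholds and the sample data are assumptions. `read_stats` needs Docker installed and is not called in the example.

```python
import subprocess

# Go-template placeholders understood by `docker stats --format`.
STATS_FORMAT = "{{.Name}}\t{{.CPUPerc}}\t{{.MemPerc}}"


def parse_stats(text: str) -> dict[str, tuple[float, float]]:
    """Turn tab-separated `docker stats` lines into {name: (cpu %, memory %)}."""
    usage = {}
    for line in text.strip().splitlines():
        name, cpu, mem = line.split("\t")
        usage[name] = (float(cpu.rstrip("%")), float(mem.rstrip("%")))
    return usage


def over_threshold(usage: dict[str, tuple[float, float]],
                   cpu_limit: float = 80.0, mem_limit: float = 80.0) -> list[str]:
    """Names of containers above either threshold, sorted for stable output."""
    return sorted(
        name for name, (cpu, mem) in usage.items() if cpu > cpu_limit or mem > mem_limit
    )


def read_stats() -> dict[str, tuple[float, float]]:
    """Take one snapshot from the local Docker daemon (needs Docker installed)."""
    result = subprocess.run(
        ["docker", "stats", "--no-stream", "--format", STATS_FORMAT],
        capture_output=True, text=True, check=True,
    )
    return parse_stats(result.stdout)


# Hypothetical sample output, so the example runs without Docker.
sample = "web\t12.50%\t40.00%\ndb\t91.20%\t35.10%\ncache\t3.00%\t85.00%\n"
print(over_threshold(parse_stats(sample)))  # ['cache', 'db']
```

On multi-core hosts, the CPU percentage reported by `docker stats` can exceed 100%, so a CPU threshold should take the core count into account. For ongoing monitoring rather than spot checks, a metrics system such as Prometheus is a better fit.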

Security Considerations:

Security is an indispensable aspect of managing containers. Implementing proper access controls, utilizing container isolation mechanisms, and regularly updating and patching container images are fundamental practices to mitigate security vulnerabilities. Additionally, ensuring proper network segmentation, deploying SSL certificates, and implementing security policies contribute to safeguarding your containers and underlying infrastructure.
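The following Python sketch collects several common `docker run` hardening flags in one helper:

- a non-root user
- a read-only root filesystem, with a `tmpfs` for scratch space
- all Linux capabilities dropped
- `no-new-privileges` set

The UID/GID `1000:1000`, the helper name, and the image name are assumptions. Many images need adjustments, such as extra writable paths, before they run under these restrictions.

```python
def hardened_run_args(name: str, image: str, user: str = "1000:1000",
                      add_caps: tuple[str, ...] = ()) -> list[str]:
    """Build a `docker run` command with common hardening flags applied."""
    args = [
        "docker", "run", "-d", "--name", name,
        "--user", user,          # run as a non-root UID:GID (assumed to suit the image)
        "--read-only",           # mount the container's root filesystem read-only
        "--tmpfs", "/tmp",       # give the app an in-memory scratch directory
        "--cap-drop", "ALL",     # start from no Linux capabilities
        "--security-opt", "no-new-privileges:true",  # block privilege gain via setuid
    ]
    for cap in add_caps:
        args += ["--cap-add", cap]  # re-grant only capabilities the app needs
    args.append(image)
    return args


print(" ".join(hardened_run_args("api", "example/api:1.0")))
```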

| Configuration | Networking | Storage Management | Monitoring and Logging | Security Considerations |
|---|---|---|---|---|
| Define parameters | Establish connectivity | Ensure data persistence | Track performance | Implement access controls |
| Utilize orchestration tools | Configure network bridges | Efficient resource utilization | Identify bottlenecks | Implement container isolation |
| | Set up port mappings | Facilitate scaling and migration | Enable effective troubleshooting | Regularly update container images |
| | Utilize overlay networks | | Optimize resource allocation | Implement network segmentation |
| | | | Ensure overall system stability | Deploy SSL certificates |

Best Practices for Orchestrating Multiple Instances in a Linux Environment

In this section, we will explore some essential guidelines and strategies for orchestrating and managing multiple instances within a Linux-based environment. By adhering to these best practices, you can optimize the performance, scalability, and reliability of your containerized applications.

1. Effective Resource Allocation: Properly allocating system resources, such as CPU, memory, and network bandwidth, is crucial for achieving optimal performance. Analyze individual container requirements and assign appropriate limits and shares to avoid resource contention and ensure fair distribution among instances.

2. Container Isolation: Maintain strict isolation between containers to prevent interference and improve security. Linux namespaces give each container its own view of processes, networking, and the filesystem. Control groups (cgroups) limit how much CPU and memory each container can consume. This isolation reduces the risk that a problem or vulnerability in one container affects the others, but it does not eliminate that risk, because all containers share the host kernel.

3. Fine-tune Networking: Design a well-structured network architecture that allows efficient communication between containers and external services. Implement container networking technologies like bridge networks, overlay networks, or third-party network plugins to achieve seamless connectivity and enable cross-container communication.

4. Reliable Data Storage: Choose appropriate storage options to achieve data persistence and reliability across multiple instances. Consider technologies such as Docker volumes or bind mounts to ensure persistent data storage while minimizing data loss risks. Implement backup and recovery mechanisms to safeguard critical data.

5. Monitoring and Logging: Implement robust monitoring and logging to gain insight into container performance, resource utilization, and application behavior. Use the metrics exposed by your orchestrator (such as Kubernetes), or general-purpose tools such as Prometheus to collect metrics and Grafana to visualize them.

6. Container Health and Scalability: Implement health checks to continuously monitor the status of each instance and ensure rapid mitigation of failures. Utilize container orchestrators' scaling capabilities to dynamically adjust resource allocation based on demand, ensuring optimal scalability and mitigating performance bottlenecks.

7. Version Control and Updates: Keep Dockerfiles, configuration files, and application code in a version control system such as Git, and publish built images to a registry with explicit version tags. Implement rolling updates or blue/green deployment strategies to ensure smooth updates and minimize downtime during application upgrades.

8. Security and Access Control: Implement strict security measures and access control mechanisms to protect containerized applications and sensitive data. Utilize container security tools, implement user access controls, and regularly apply security patches and updates to minimize vulnerabilities and unauthorized access.
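Practices 6 and 7 can be combined in a health-gated rolling update. The Python sketch below replaces replicas one slot at a time. It stops an old replica only after its replacement passes a health check. If a replacement fails, the sketch discards it and leaves the remaining old replicas serving. The `start`, `is_healthy`, and `stop` callbacks are hypothetical hooks. In a real setup they might wrap `docker run`, an HTTP probe, and `docker stop`.

```python
from typing import Callable


def rolling_update(
    service: str,
    versions: list[str],
    new_version: str,
    start: Callable[[str, str], None],
    is_healthy: Callable[[str], bool],
    stop: Callable[[str], None],
) -> tuple[list[str], bool]:
    """Update replicas one slot at a time; return (versions now running, finished?)."""
    running = list(versions)  # running[i] is the version serving in slot i
    for slot, old_version in enumerate(versions):
        if old_version == new_version:
            continue  # this slot is already up to date
        new_name = f"{service}-{slot}-{new_version}"
        start(new_name, new_version)  # new replica runs next to the old one
        if not is_healthy(new_name):
            stop(new_name)  # discard the failed replacement
            return running, False  # untouched slots keep their old version
        stop(f"{service}-{slot}-{old_version}")  # retire the old replica
        running[slot] = new_version
    return running, True


# Fake callbacks that only record what would happen.
log: list[str] = []
healthy = {"web-0-v2": True, "web-1-v2": False}
result = rolling_update(
    "web", ["v1", "v1", "v1"], "v2",
    start=lambda name, version: log.append(f"start {name}"),
    is_healthy=lambda name: healthy.get(name, False),
    stop=lambda name: log.append(f"stop {name}"),
)
print(result)  # (['v2', 'v1', 'v1'], False)
print(log)     # ['start web-0-v2', 'stop web-0-v1', 'start web-1-v2', 'stop web-1-v2']
```

Running each replacement alongside the replica it replaces requires spare capacity on the host. Docker Swarm (`docker service update`) and Kubernetes Deployments provide rolling updates out of the box. A hand-written loop like this is mainly useful for understanding the mechanics.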

By following these best practices, you can maximize the efficiency, scalability, and resiliency of your containerized applications running in a Linux-based environment. Remember that continuous improvement and adapting to evolving technologies and best practices are key to achieving optimal container management.

Scaling and Load Balancing Containers on a Server: Ensuring Efficiency and Performance

When managing and optimizing resources on a server, the importance of scaling and load balancing containers cannot be overstated. Distributing the workload across multiple containers lets the server handle increased traffic and workload demands without compromising performance.

Scaling containers involves horizontally expanding the number of instances running on a server to achieve higher capacity and availability. This approach allows for greater flexibility and better resource utilization, as each container can handle a portion of the overall workload. Load balancing, on the other hand, involves intelligently distributing incoming requests across multiple containers to evenly distribute the load, preventing any single container from becoming overloaded and potentially causing performance issues.
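The load-balancing idea can be sketched in Python as a round-robin balancer that skips backends marked unhealthy. The backend addresses and the `RoundRobinBalancer` class are hypothetical. In practice a reverse proxy such as NGINX or HAProxy would do this work; round-robin is NGINX's default method for `upstream` groups.

```python
import itertools


class RoundRobinBalancer:
    """Hand out backends in rotation, skipping any marked unhealthy."""

    def __init__(self, backends: list[str]) -> None:
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._rotation = itertools.cycle(self.backends)

    def mark_down(self, backend: str) -> None:
        self.healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        if backend in self.backends:
            self.healthy.add(backend)

    def next_backend(self) -> str:
        # Try each backend at most once per call before giving up.
        for _ in range(len(self.backends)):
            candidate = next(self._rotation)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends")


lb = RoundRobinBalancer(["web-1:8080", "web-2:8080", "web-3:8080"])
print([lb.next_backend() for _ in range(4)])
# ['web-1:8080', 'web-2:8080', 'web-3:8080', 'web-1:8080']
lb.mark_down("web-2:8080")  # e.g. after a failed health check
print([lb.next_backend() for _ in range(3)])
# ['web-3:8080', 'web-1:8080', 'web-3:8080']
```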

- Efficient resource utilization: By scaling containers on a single server, businesses can fully utilize available resources and prevent bottlenecks. Instead of relying on a single container to handle all incoming requests, workload distribution across multiple containers ensures that the overall system can perform at its optimal capacity.

- Improved performance: Load balancing distributes the incoming requests across multiple containers, preventing any single container from being overwhelmed. By evenly distributing the load, load balancing optimizes response times, reduces latency, and improves the overall performance of the applications running within the containers.

- High availability: Scaling and load balancing improve availability even on a single server. If one container fails or is taken down for maintenance, a load balancer that health-checks its backends can redirect traffic to the remaining containers. Applications then stay accessible and users experience minimal disruption. A failure of the server itself still affects every container on it.

- Flexibility and scalability: With a containerized environment, scaling and load balancing can be easily adjusted to meet changing demands. As traffic increases or decreases, containers can be scaled up or down accordingly, allowing businesses to adapt quickly to fluctuating demand without the need for extensive infrastructure changes.
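One well-documented rule for deciding when to scale up or down is the one used by the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The rule is skipped when the ratio is within a tolerance (0.1 by default). The Python sketch below applies that formula with minimum and maximum bounds. The bounds and metric values are illustrative assumptions.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Scale proportionally to metric / target, as the Kubernetes HPA formula does."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current * ratio)
    # Clamp to the configured bounds (on one host, max is limited by its capacity).
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(3, current_metric=90, target_metric=60))  # 5
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
print(desired_replicas(3, current_metric=63, target_metric=60))  # 3
```

On a single server, the maximum should reflect what the host can actually hold. The result could be applied with `docker compose up -d --scale web=N`, where the service name `web` is hypothetical. Replicas scaled this way cannot all publish the same fixed host port, so they are usually placed behind a load balancer instead.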

In conclusion, scaling and load balancing containers on a single server optimize resource utilization, improve performance, increase availability, and make it easy to adapt capacity to demand. This approach lets businesses handle growing workloads while maintaining performance, ultimately enhancing the overall user experience.


FAQ

Can I run multiple Docker containers on a single Linux web server?

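Yes. Each container runs as a set of isolated processes on the host, using Linux namespaces for isolation and control groups for resource limits, so a single Linux web server can run many containers side by side. Containers that serve traffic need distinct host ports, or a reverse proxy in front of them that routes requests to each one.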

What are the benefits of running multiple Docker containers on a single Linux web server?

There are several benefits to running multiple Docker containers on a single Linux web server. Firstly, it allows you to isolate different services or applications, ensuring they do not interfere with each other. This improves security and makes it easier to manage and update individual components. Additionally, running multiple containers enables better resource utilization, as each container can be allocated the necessary amount of CPU, memory, and disk space. This optimizes the server's performance and allows for efficient scaling of the services.

How can I manage multiple Docker containers running on a single Linux web server?

There are various tools and approaches for managing multiple Docker containers on a single Linux web server. A common approach is Docker Compose, which lets you define several services (each backed by one or more containers) and manage them together as a single application. Compose uses a YAML file to specify each service's configuration, including its image, dependencies, volumes, and networks, which simplifies complex multi-container setups. Another option is a container orchestration platform such as Kubernetes, which provides advanced features for managing and scaling containers across multiple servers.
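To show what a Compose file for a small web-plus-cache setup looks like, the Python sketch below renders a nested dictionary as block-style YAML. The `to_yaml` helper is a deliberately minimal, hypothetical emitter that handles only dicts, lists, and scalars written as strings. The service names, images, and ports are illustrative. Every scalar is quoted, which avoids a YAML pitfall that Docker's documentation warns about: unquoted port mappings such as `2222:22` can be read as base-60 numbers.

```python
import json


def to_yaml(value: dict, indent: int = 0) -> str:
    """Render nested dicts and lists of scalars as block-style YAML (minimal sketch)."""
    pad = "  " * indent
    lines = []
    for key, item in value.items():
        if isinstance(item, dict) and item:
            lines.append(f"{pad}{key}:")
            lines.append(to_yaml(item, indent + 1))
        elif isinstance(item, list) and item:
            lines.append(f"{pad}{key}:")
            # json.dumps yields a double-quoted string, which is also valid YAML.
            lines.extend(f"{pad}  - {json.dumps(str(entry))}" for entry in item)
        elif isinstance(item, (dict, list)):
            lines.append(f"{pad}{key}: {json.dumps(item)}")  # renders {} or []
        else:
            lines.append(f"{pad}{key}: {json.dumps(str(item))}")
    return "\n".join(lines)


compose = {
    "services": {
        "web": {
            "image": "nginx:1.25",
            "ports": ["8080:80"],
            "depends_on": ["cache"],
            "networks": ["app-net"],
        },
        "cache": {
            "image": "redis:7",
            "volumes": ["cache-data:/data"],
            "networks": ["app-net"],
        },
    },
    "networks": {"app-net": {}},
    "volumes": {"cache-data": {}},
}
print(to_yaml(compose))
```

Saved as `compose.yaml`, the output could be started with `docker compose up -d`. In practice, most teams write this file by hand.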