Docker is a popular choice for containerizing Linux workloads, but it is not the only way to run them. There are alternative methods for running Linux tasks that don't depend on Docker at all. This article walks through how to sidestep Docker and run tasks directly on the base system, or with other isolation tools that Linux offers.

Knowing how to run tasks without Docker gives you more options. It also deepens your understanding of the Linux mechanisms that container platforms are built on, and it can simplify task execution when a full container platform is more than you need.

We will explore techniques for running Linux tasks without depending on Docker, working directly with the base system's environment. Let's start by looking at what containers are and where Docker falls short.

Understanding Containers: Docker and Its Limitations

Exploring the Concept of Containers

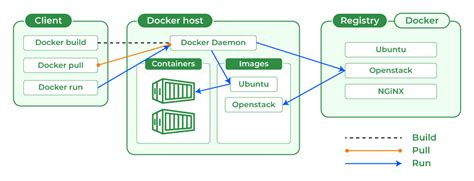

In software development, containers have become a popular way to package and deploy applications. A container bundles an application with its dependencies in an isolated, lightweight environment, so it runs consistently across different hosts and Linux distributions. Because containers share the host's kernel, Linux containers need a Linux kernel underneath; on macOS and Windows, Docker Desktop provides one through a virtual machine. At the forefront of this shift is Docker, a widely used containerization platform.

Diving into Docker's Functionality

Docker uses operating-system-level virtualization to create and manage containers. It relies on two Linux kernel features: namespaces, which give each container its own view of process IDs, network interfaces, mount points, and hostnames, and control groups (cgroups), which limit how much CPU, memory, and I/O a container can use. By packaging an application and its dependencies into a container, Docker helps developers achieve consistent, reproducible deployments regardless of the underlying infrastructure.
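To see namespaces in practice, you can inspect the ones the kernel assigns to a process by reading the symbolic links under `/proc/<pid>/ns`. The Python sketch below assumes a Linux host; the function name `list_namespaces` is our own. Two processes in the same namespace show the same identifier, which is how you can tell whether they share, for example, a PID or network namespace.

```python
import os


def list_namespaces(pid: str = "self") -> dict[str, str]:
    """Map each namespace type of a process to its identifier.

    Every entry in /proc/<pid>/ns is a symbolic link whose target looks like
    'pid:[4026531836]'; processes in the same namespace share that target.
    """
    ns_dir = f"/proc/{pid}/ns"
    return {name: os.readlink(os.path.join(ns_dir, name))
            for name in sorted(os.listdir(ns_dir))}


if __name__ == "__main__":
    for ns_type, ident in list_namespaces().items():
        print(f"{ns_type:<20} {ident}")
```

Running it on the host and again inside a container shows different identifiers for the namespaces the container runtime created.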

Understanding the Limitations of Docker

While Docker brings several benefits to the table, it also has its limitations. One of the key limitations is the reliance on the host operating system's kernel. Since Docker containers share a common kernel with the host, any vulnerabilities or misconfigurations in the kernel can impact the security and stability of all containers running on that host.

Running workloads in virtual machines instead of containers avoids the shared-kernel constraint, because each virtual machine boots its own kernel. However, virtual machines consume more resources and add deployment complexity.

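Another limitation is that workloads needing direct access to physical hardware or specialized drivers are awkward to run in Docker. Devices must be passed into the container explicitly (for example with `docker run --device`), or the container must run with `--privileged`, which weakens its isolation. Applications that rely on hardware-specific functionality or interact with USB devices may therefore be poor candidates for containerization with Docker.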

Additionally, Docker is primarily designed to run Linux-based applications, limiting its support for other operating systems. Although efforts have been made to support Windows containers, the overall maturity and ecosystem around Windows containerization are still relatively limited compared to the Linux counterpart.

Conclusion

While Docker has revolutionized the way we package and deploy applications, it is important to be aware of its limitations. Understanding the trade-offs and constraints of Docker allows developers and operators to make informed decisions on when and where to utilize containerization, and when alternatives such as virtual machines may be more appropriate.

Alternative Methods: Implementing Linux Tasks Directly within the Host Environment

Linux tasks can also be run without Docker. This section compares several alternatives, ranging from simple file system isolation with chroot to full virtual machines with KVM. Some of them (systemd-nspawn and LXC) are container tools in their own right; what they have in common is that none of them depends on Docker.

| Method | Description |
|---|---|
| chroot | Runs a task with a different root directory, so it sees its own file system tree with separate libraries and configuration files. chroot changes only the file system view: processes, the network, and users are not isolated, and a process running as root can escape it, so it provides a controlled environment but should not be relied on as a security boundary. |
| systemd-nspawn | Runs a command or a complete OS tree in a lightweight container. Unlike chroot, it also isolates the process tree, IPC, and hostname, and it offers resource management through systemd, all without requiring Docker. |
| LXC (Linux Containers) | Runs full Linux system containers directly on the host. It uses Linux namespaces and control groups for process isolation and resource control, with low overhead and minimal performance impact, making it a suitable alternative to Docker. |
| KVM (Kernel-based Virtual Machine) | Runs full virtual machines, each with its own kernel, on the host system. Tasks inside a KVM guest get stronger isolation than in a container and good performance through hardware-assisted virtualization, at the cost of more memory and startup overhead than a container. |

By exploring these alternative methods, users can find a solution that best fits their specific requirements for running Linux tasks directly within the base system. These approaches offer flexibility, control, and resource efficiency, providing an alternative to Docker for task management within a Linux environment.
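As a rough illustration of the first two methods, the Python sketch below builds the command line for running a program inside a prepared root file system with either `chroot` or `systemd-nspawn --directory=`. The helper names and the `/srv/rootfs` path are hypothetical, and the sketch assumes the root file system already exists (for example, one created with `debootstrap`). Both tools normally require root privileges, so the default is a dry run that only prints the command.

```python
import shlex
import subprocess


def build_isolated_command(method: str, rootfs: str, command: list[str]) -> list[str]:
    """Return the argv that runs `command` inside `rootfs` using `method`."""
    if method == "chroot":
        # chroot NEWROOT COMMAND...: changes only the file system root.
        return ["chroot", rootfs, *command]
    if method == "systemd-nspawn":
        # systemd-nspawn --directory=NEWROOT COMMAND...: also isolates processes.
        return ["systemd-nspawn", f"--directory={rootfs}", *command]
    raise ValueError(f"unsupported method: {method!r}")


def run_isolated(method: str, rootfs: str, command: list[str], dry_run: bool = True):
    """Print the command (dry run) or execute it, raising on a non-zero exit."""
    argv = build_isolated_command(method, rootfs, command)
    if dry_run:
        print(shlex.join(argv))
        return None
    # Both tools normally need root privileges.
    return subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Hypothetical root file system path; adjust to your own.
    run_isolated("systemd-nspawn", "/srv/rootfs", ["/bin/ls", "/"])
```

Keeping command construction separate from execution makes it easy to review or log exactly what will run as root before running it.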

Ensuring Compatibility: Identifying Dependency Requirements

Running Linux tasks directly on the base system, without Docker, requires careful attention to dependencies. Without a container image to bundle them, every library and package a task needs must already be present on the host in a compatible version.

Once the dependencies are identified, you can check them against the components already installed on the system and decide whether anything needs to be adjusted.

Examining the requirements thoroughly lets you plan the integration and minimize conflicts, for example two tasks that need different versions of the same library.

Identifying the dependency requirements involves:

- Analyzing the Linux task's functionality and resource demands

- Identifying the necessary libraries, packages, or frameworks

- Checking the versions and compatibility of the required dependencies

- Assessing potential conflicts or overlapping dependencies
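For compiled programs, one concrete way to identify shared-library dependencies is the `ldd` command. The Python sketch below (function names are our own) runs `ldd` and separates resolved libraries from missing ones. Note that the `ldd` manual warns against running it on untrusted executables, because some versions may execute the program to gather the information.

```python
import subprocess


def parse_ldd(output: str) -> tuple[dict[str, str], list[str]]:
    """Split `ldd` output into resolved libraries and missing ones."""
    resolved: dict[str, str] = {}
    missing: list[str] = []
    for line in output.splitlines():
        line = line.strip()
        if "=>" not in line:
            # The vDSO and the dynamic loader are listed without "=>".
            continue
        name, _, target = line.partition("=>")
        name, target = name.strip(), target.strip()
        if target.startswith("not found"):
            missing.append(name)
        elif not target.startswith("("):
            # Drop the trailing load address, e.g. "(0x00007f...)".
            resolved[name] = target.split(" (")[0]
    return resolved, missing


def shared_library_deps(binary: str) -> tuple[dict[str, str], list[str]]:
    """Run ldd on `binary` and parse its output."""
    # ldd exits non-zero for statically linked binaries, raising CalledProcessError.
    out = subprocess.run(["ldd", binary], capture_output=True, text=True, check=True).stdout
    return parse_ldd(out)
```

For example, `shared_library_deps("/bin/ls")` returns the libraries `ls` links against; a non-empty missing list means the task cannot start on this system until those libraries are installed.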

By understanding the specific dependency needs of each Linux task, we can leverage this knowledge to make informed decisions and ensure the compatibility of our system.

Moreover, regularly reviewing and updating the identified dependencies is crucial to ensure the system remains compatible as new versions or patches are released.

Overall, accurately identifying and managing dependency requirements is pivotal in efficiently integrating Linux tasks into the base system, ensuring compatibility, and optimizing system performance.

Configuration and Isolation: Setting Up the Foundation for Task Execution

When it comes to executing tasks in a Linux environment, it is essential to establish a robust configuration and isolation framework. By carefully configuring and isolating the system, you can ensure the smooth execution of tasks while safeguarding the integrity of the underlying infrastructure.

Configuring the base system involves fine-tuning various settings and parameters to optimize performance and enhance security. It entails carefully selecting and configuring the necessary software components, libraries, and dependencies to support the execution of tasks without relying on Docker or containerization.

In addition to configuration, establishing isolation mechanisms is crucial for maintaining the stability and security of the base system. Isolation involves creating boundaries and separation between tasks to prevent conflicts and mitigate potential risks. This can be achieved through techniques such as user and group permissions, resource allocation, and network isolation.

With appropriate configuration and isolation, each task runs within its own boundaries, limiting interference between tasks and reducing their exposure to one another's vulnerabilities. This separation improves system stability and limits the impact of any issues that arise during task execution.

Process Monitoring: Managing and Tracking Executions in Linux Environments

On Linux systems, tracking and managing running processes is essential for keeping the system stable and efficient. Process monitoring means observing and controlling the tasks running on the operating system so that they operate smoothly and any problems can be diagnosed.

With the ability to monitor processes, administrators can gain valuable insights into the performance of their Linux systems, identify resource-intensive tasks, and optimize system utilization. By keeping a watchful eye on the activities occurring within the environment, administrators can promptly detect any anomalies or irregularities, allowing for timely intervention and mitigation of potential problems.
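Tools such as `ps` and `top` read their data from the `/proc` file system, and you can do the same in a script. The Python sketch below (Linux only; names are our own) lists every visible process with its resident memory, as reported in the `VmRSS` field of `/proc/<pid>/status`, and picks out the largest consumers.

```python
import os
from dataclasses import dataclass


@dataclass
class ProcInfo:
    pid: int
    name: str
    rss_kib: int


def list_processes() -> list[ProcInfo]:
    """Read the name and resident memory (VmRSS) of every visible process."""
    procs = []
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue
        name, rss = "?", 0  # kernel threads have no VmRSS line
        try:
            with open(f"/proc/{entry}/status") as f:
                for line in f:
                    if line.startswith("Name:"):
                        name = line.split(None, 1)[1].strip()
                    elif line.startswith("VmRSS:"):
                        rss = int(line.split()[1])  # reported in kB
        except (FileNotFoundError, ProcessLookupError, PermissionError):
            continue  # the process exited or is not readable; skip it
        procs.append(ProcInfo(int(entry), name, rss))
    return procs


def top_memory(n: int = 5) -> list[ProcInfo]:
    """Return the `n` processes using the most resident memory."""
    return sorted(list_processes(), key=lambda p: p.rss_kib, reverse=True)[:n]


if __name__ == "__main__":
    for p in top_memory():
        print(f"{p.pid:>7} {p.rss_kib:>10} kB  {p.name}")
```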

Proper process tracking lets administrators manage task execution effectively, making sure critical operations are prioritized and resources are allocated where they are needed. By monitoring and logging the activity of running processes, administrators gain an understanding of overall system behavior and can spot patterns and trends that guide future optimization.

Moreover, process monitoring provides valuable information for system audit and compliance purposes. By accurately tracking the execution of processes, administrators can maintain records of activities, which can be used for security analysis, troubleshooting, and ensuring adherence to regulatory guidelines.

Implementing robust process monitoring tools and techniques is essential for organizations operating within Linux environments. By effectively managing and tracking Linux tasks, administrators can maintain system stability, optimize resource utilization, and ensure the overall reliability of their systems.

Resource Management: Enhancing Performance for Linux Workloads

In this section, we explore techniques for optimizing the performance of Linux tasks. Managing resources efficiently lets the system make full use of its capacity: by allocating CPU, memory, and I/O deliberately, you can improve throughput, reduce latency, and make your Linux workloads more efficient overall.

1. Utilize Resource Allocation Techniques

- Use control groups (cgroups) to cap the CPU, memory, and I/O a task can consume, and namespaces to isolate tasks from one another.

- Explore CPU affinity settings to ensure that critical tasks are assigned to specific CPU cores, optimizing performance and reducing contention.

- Utilize memory management techniques, such as setting limits and allocating appropriate swap space, to prevent memory-related bottlenecks.
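The cgroup and CPU affinity techniques above can be sketched in Python as follows. This assumes the cgroup v2 unified hierarchy is mounted at `/sys/fs/cgroup`, that the `memory` and `cpu` controllers are enabled in the parent's `cgroup.subtree_control`, and that the script runs as root; the function names are our own. The `root` parameter exists so the file-writing logic can be tried against an ordinary directory.

```python
import os
from pathlib import Path


def create_cgroup(name: str, memory_max_bytes: int, cpu_quota_us: int,
                  cpu_period_us: int = 100_000, root: str = "/sys/fs/cgroup") -> Path:
    """Create a cgroup v2 group with memory and CPU caps.

    memory.max takes a byte count; cpu.max takes "<quota> <period>" in
    microseconds, so a quota of 50_000 per 100_000 allows half of one CPU.
    """
    group = Path(root) / name
    group.mkdir(exist_ok=True)
    (group / "memory.max").write_text(f"{memory_max_bytes}\n")
    (group / "cpu.max").write_text(f"{cpu_quota_us} {cpu_period_us}\n")
    return group


def add_process(group: Path, pid: int) -> None:
    """Move a process into the group by writing its PID to cgroup.procs."""
    (group / "cgroup.procs").write_text(f"{pid}\n")


def pin_to_cpus(pid: int, cpus: set[int]) -> set[int]:
    """Restrict a process (0 = the caller) to the given CPU cores."""
    os.sched_setaffinity(pid, cpus)
    return os.sched_getaffinity(pid)
```

For example, `add_process(create_cgroup("batch-jobs", 512 * 1024**2, 50_000), pid)` (a hypothetical group name) would limit that process to 512 MiB of memory and half a CPU.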

2. Optimize I/O Performance

- Improve I/O performance by making good use of buffering and the page cache, and by choosing an appropriate I/O scheduler for each block device.

- Consider using optimized file systems like ext4 or XFS to enhance the speed of read and write operations.

- Fine-tune disk I/O settings, including block size and disk scheduler, to match the workload requirements.
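On Linux, each block device exposes its I/O scheduler in `/sys/block/<device>/queue/scheduler`, listing the available schedulers with the active one in square brackets. The Python sketch below (names are our own) parses that file; writing one of the listed names to it as root switches the scheduler until the next reboot.

```python
from pathlib import Path


def parse_scheduler(text: str) -> tuple[str, list[str]]:
    """Return (active, available) from a queue/scheduler line.

    The kernel brackets the active scheduler, e.g. "[mq-deadline] kyber bfq none".
    """
    available: list[str] = []
    active = ""
    for token in text.split():
        if token.startswith("[") and token.endswith("]"):
            token = token[1:-1]
            active = token
        available.append(token)
    return active, available


def block_device_schedulers(sys_block: str = "/sys/block") -> dict[str, tuple[str, list[str]]]:
    """Report the active and available scheduler for every block device."""
    result = {}
    for dev in sorted(Path(sys_block).iterdir()):
        sched_file = dev / "queue" / "scheduler"
        if sched_file.exists():
            result[dev.name] = parse_scheduler(sched_file.read_text())
    return result
```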

3. Monitor and Analyze Performance

- Implement performance monitoring tools and metrics to gain insight into resource utilization and identify areas for improvement.

- Utilize tools like top, vmstat, and iostat to monitor CPU, memory, and disk usage.

- Analyze performance data to identify potential bottlenecks and optimize resource allocation accordingly.
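Tools like `top` and `vmstat` derive CPU utilization from the counters in `/proc/stat`. The Python sketch below (names are our own) shows the underlying calculation: read the aggregate `cpu` line twice, and treat the share of elapsed time not spent idle or waiting for I/O as busy time.

```python
import time


def read_cpu_times(stat_text: str) -> tuple[int, int]:
    """Return (idle, total) jiffies from the aggregate 'cpu' line of /proc/stat."""
    for line in stat_text.splitlines():
        if line.startswith("cpu "):
            # Fields: user nice system idle iowait irq softirq steal guest guest_nice.
            # Guest time is already included in user/nice, so only the first 8 count.
            values = [int(v) for v in line.split()[1:9]]
            idle = values[3] + values[4]  # idle + iowait
            return idle, sum(values)
    raise ValueError("no aggregate 'cpu' line found")


def cpu_busy_percent(before: tuple[int, int], after: tuple[int, int]) -> float:
    """Percentage of the interval spent doing work rather than idling."""
    idle_delta = after[0] - before[0]
    total_delta = after[1] - before[1]
    if total_delta == 0:
        return 0.0
    return 100.0 * (total_delta - idle_delta) / total_delta


def sample_cpu(interval: float = 1.0) -> float:
    """Measure overall CPU utilization over `interval` seconds."""
    with open("/proc/stat") as f:
        before = read_cpu_times(f.read())
    time.sleep(interval)
    with open("/proc/stat") as f:
        after = read_cpu_times(f.read())
    return cpu_busy_percent(before, after)
```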

4. Consider Performance Tuning Parameters

- Explore kernel tuning parameters to fine-tune your system's performance, such as adjusting TCP/IP settings or optimizing network buffers.

- Optimize process scheduling parameters to provide timely responsiveness and prioritize critical tasks.

- Raise limits such as the maximum number of open file descriptors and processes, both system-wide and per process, to accommodate high-demand workloads.
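Kernel parameters of the kind listed above are exposed as files under `/proc/sys`, which is what the `sysctl` command reads and writes. The Python sketch below (helper names are our own) maps a sysctl key to its file and also raises the current process's soft open-file limit up to its hard limit, which does not require root. Persisting kernel settings across reboots is normally done with a file in `/etc/sysctl.d/`.

```python
import resource
from pathlib import Path


def sysctl_path(key: str) -> Path:
    """Map a key such as 'net.core.somaxconn' to its /proc/sys file.

    Keys whose components themselves contain dots (e.g. VLAN interface
    names) are not handled by this simple mapping.
    """
    return Path("/proc/sys") / key.replace(".", "/")


def read_sysctl(key: str) -> str:
    return sysctl_path(key).read_text().strip()


def write_sysctl(key: str, value: str) -> None:
    # Requires root; the change lasts only until the next reboot.
    sysctl_path(key).write_text(f"{value}\n")


def raise_open_file_limit() -> tuple[int, int]:
    """Raise this process's soft RLIMIT_NOFILE to its hard limit (no root needed)."""
    _, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    resource.setrlimit(resource.RLIMIT_NOFILE, (hard, hard))
    return resource.getrlimit(resource.RLIMIT_NOFILE)
```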

By incorporating these resource management techniques and strategies into your Linux system, you can significantly enhance the performance of your tasks, improve efficiency, and ensure smooth execution of workloads.

Protecting the Foundation: Addressing Security Concerns without Utilizing Docker

In this section, we will explore various security considerations to safeguard the underlying infrastructure without relying on Docker technology. By implementing these measures, you can enhance the overall security posture of your base system, ensuring the protection of critical assets and mitigating potential vulnerabilities.

1. Isolation and Resource Limitations: Run tasks in isolated environments to restrict what they can access and prevent unauthorized actions. Apply resource limits so that resources are shared fairly and no single task can exhaust them.

2. User Access Control: Restrict root privileges and limit who can reach critical system components. Grant each user and service only the permissions it needs, and use strong authentication to prevent unauthorized access.

3. Secure Communication Channels: Utilize secure communication protocols, such as TLS or SSH, to protect data exchange and remote access. Encrypt sensitive information during transmission to mitigate the risk of interception or tampering.

4. Regular System Updates: Maintain your base system by regularly applying security patches and updates to address known vulnerabilities. Keep abreast of security advisories and follow best practices to ensure a robust and secure system.

5. Intrusion Detection and Monitoring: Implement intrusion detection systems and monitoring tools to detect and respond to potential security breaches. Regularly analyze system logs and network traffic to identify suspicious activities and promptly take action.

6. Application Whitelisting: Deploy application whitelisting mechanisms to allow only authorized and trusted software to run on the base system. Prevent the execution of malicious or unverified applications that could compromise system integrity.

7. Backup and Disaster Recovery: Establish a comprehensive backup and disaster recovery strategy to protect critical data and enable swift recovery in the event of a security incident. Regularly test backups and ensure their integrity.

8. Vulnerability Scanning and Penetration Testing: Conduct regular vulnerability scans and penetration testing on your base system to identify potential weaknesses and proactively address them. Use industry-standard tools and methodologies for comprehensive security assessment.
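To make the application whitelisting idea concrete, the Python sketch below refuses to run an executable unless its SHA-256 digest is on an allowlist. The function names and the allowlist format are our own assumptions. A script like this is only illustrative: the file could change between the check and the execution, so production systems rely on enforcement mechanisms such as fapolicyd instead.

```python
import hashlib
import subprocess


def sha256_of(path: str) -> str:
    """Hash a file in chunks so large binaries don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def run_if_allowed(path: str, allowlist: set[str], args: tuple[str, ...] = ()):
    """Execute `path` only if its SHA-256 digest appears in `allowlist`."""
    if sha256_of(path) not in allowlist:
        raise PermissionError(f"{path} is not on the allowlist")
    # Note: the file could be replaced between the check above and the exec below.
    return subprocess.run([path, *args], capture_output=True, text=True)
```

In practice the allowlist would be built from binaries you have already vetted, for example `{sha256_of(p) for p in vetted_paths}`.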

By applying these measures, you can strengthen the foundation of your system and reduce its exposure to common threats, even without Docker.

Testing and Troubleshooting: Strategies for Rectifying Task Execution Issues

In this section, we will explore effective strategies for diagnosing and resolving issues that arise during the execution of tasks in a Linux environment. When faced with challenges in task execution, it is crucial to adopt a systematic approach in order to identify and rectify the underlying problems.

1. Analyzing Error Messages and Logs: When encountering issues, error messages and logs serve as valuable sources of information. These resources provide insights into the specific errors or failures that occurred during task execution. By carefully examining these messages and logs, you can gain an understanding of the root causes and pinpoint areas that require attention.

2. Debugging and Isolation: Debugging is an important technique for identifying and resolving issues during task execution. By isolating the problematic components or processes, you can focus your troubleshooting on specific areas. Debuggers such as gdb provide breakpoints, step-by-step execution, and inspection of variables, while strace shows the system calls a process makes, which often reveals missing files or permission errors. Sandbox environments can also provide controlled settings for reproducing and debugging a problem.

3. Testing Methodologies: Adopting appropriate testing methodologies is essential for ensuring the proper functioning of tasks in a Linux environment. Various testing techniques, such as unit testing, integration testing, and system testing, can help uncover issues at different levels of the task execution process. Implementing thorough test suites, including edge cases and boundary conditions, can enhance the reliability and stability of the tasks.

4. Version Control and Rollbacks: Maintaining a version control system allows for the tracking of changes made to tasks and their associated dependencies. When facing issues, having the ability to revert to a previous working version can be invaluable. By utilizing version control effectively, you can mitigate the risks associated with unexpected behavior during task execution.

5. Collaborative Problem Solving: Seeking assistance from peers, online communities, or forums can lead to valuable insights and potential solutions. Collaborative problem-solving not only expands your knowledge but also provides fresh perspectives and diverse approaches to resolving task execution issues.
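Error messages and logs are only useful if they are captured. The Python sketch below (names are our own) runs a task, logs its exit status, and records its standard error output when it fails, so failures leave a trail you can analyze later.

```python
import logging
import subprocess

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("tasks")


def run_and_log(command: list[str], timeout: float = 300) -> subprocess.CompletedProcess:
    """Run a task, log its exit status, and log stderr when it fails.

    Raises subprocess.TimeoutExpired if the task runs longer than `timeout` seconds.
    """
    log.info("starting: %s", command)
    result = subprocess.run(command, capture_output=True, text=True, timeout=timeout)
    if result.returncode == 0:
        log.info("finished: %s", command)
    else:
        log.error("failed with exit code %d: %s\nstderr:\n%s",
                  result.returncode, command, result.stderr.strip())
    return result
```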

In conclusion, thorough testing, effective debugging techniques, utilization of error messages and logs, version control practices, and collaboration with the community are all vital strategies for identifying and resolving issues that may arise during the execution of tasks in a Linux environment.


FAQ

Why would I want to run Linux tasks without Docker?

Docker is a popular containerization tool, but in some scenarios running Linux tasks without it is preferable: for performance reasons, because of resource limitations, or simply to avoid the overhead of running the Docker daemon and managing images.

What are the alternatives to Docker for running Linux tasks?

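There are several alternatives to Docker for running Linux tasks. Popular ones include LXC (Linux Containers), systemd-nspawn, and Podman. These are still container tools, but they provide Docker-like functionality without depending on Docker itself; Podman, for example, runs containers without a central daemon. For simple cases, chroot or a full virtual machine (for example with KVM) may also be enough.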

How can I run Linux tasks without Docker in the base system?

To run Linux tasks without Docker, you can use tools like systemd-nspawn or Podman. They create lightweight containers that share the host kernel, so they avoid the overhead of a virtual machine while still isolating the task from the rest of the system. This lets you run your tasks on the base system without installing a full containerization platform like Docker.