Running Docker containers inside Docker containers on the Windows platform lets a build agent be a container itself while still being able to build and run other containers. This approach is of particular interest to DevOps practitioners who want to simplify their build agent workflows and make them easier to reproduce.

Nested containerization gives developers and system administrators additional flexibility: several isolated build environments can share a single Windows instance, which helps with integration and collaboration in complex software projects.

As the demand for agile application development continues to soar, the need for dynamic and adaptable infrastructure is paramount. With the ability to spin up Docker containers within existing containers on Windows, organizations are empowered to streamline their software development lifecycle, accelerate deployment processes, and ensure consistent delivery of high-quality code.

A brief overview of Docker and its advantages in the context of DevOps

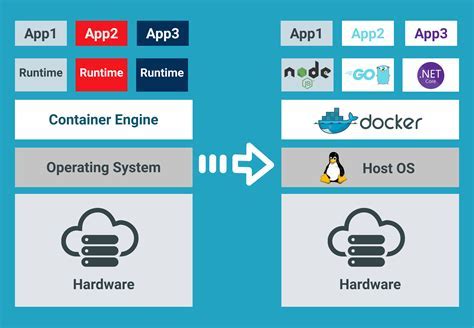

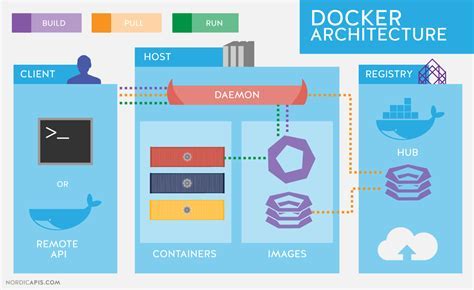

In the realm of DevOps, utilizing containerization technologies has become essential for efficient application development and deployment processes. Among these technologies, Docker stands out as a powerful tool that revolutionizes the way software is developed, tested, and deployed.

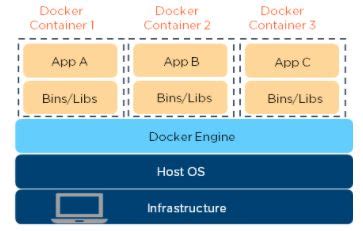

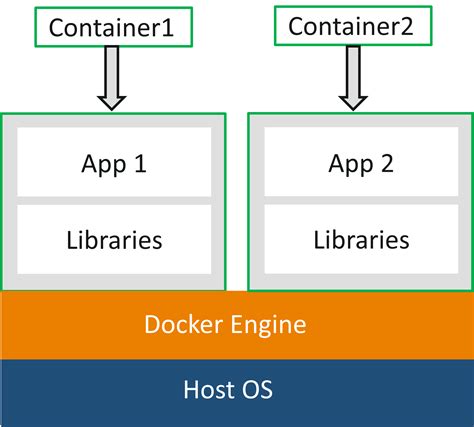

Docker enables the creation and management of lightweight, isolated environments called containers. These containers encapsulate the dependencies and components required by an application, allowing developers to easily package, distribute, and run their software across different environments without worrying about compatibility issues or conflicting dependencies.

One of the key advantages of Docker is its ability to provide consistent and reproducible environments. By defining the application's dependencies in a Dockerfile, developers can ensure that the development, testing, and production environments are identical, eliminating the common "it works on my machine" problem. This consistency enhances collaboration between team members and allows for smoother application deployments.

Another benefit of Docker is its scalability and resource efficiency. With Docker, applications can be easily scaled up or down by launching or stopping containers, avoiding the need to provision and manage separate virtual machines for each individual component. This flexibility not only optimizes resource allocation but also enables organizations to quickly adapt to changing demands.

Docker also provides simplified management and version control of applications. Through its image-based approach, Docker enables versioning and easy rollbacks, ensuring that previous versions of an application can be quickly restored if needed. Additionally, Docker's integration with orchestration tools, such as Kubernetes, simplifies the management of complex, distributed architectures.
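As a rough illustration of tag-based versioning and rollback, the sketch below picks the previously released tag from a release history and builds the matching `docker pull` and `docker run` commands. The image name `example/app`, the tag history, and the helper names are hypothetical; only the `docker pull` and `docker run` commands themselves are real.

```python
from typing import List


def previous_tag(history: List[str], current: str) -> str:
    """Return the tag released immediately before `current` (history is oldest first)."""
    index = history.index(current)  # raises ValueError if the tag is unknown
    if index == 0:
        raise ValueError(f"no earlier release than {current!r}")
    return history[index - 1]


def rollback_cmds(image: str, history: List[str], current: str) -> List[List[str]]:
    """Build the commands that fetch and start the previous image version."""
    target = f"{image}:{previous_tag(history, current)}"
    return [
        ["docker", "pull", target],
        ["docker", "run", "-d", target],
    ]
```

Each inner list can be passed to `subprocess.run` on a machine where Docker is installed. Keeping the history as an explicit list is a simplification; in practice it would come from a registry or a release log.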

In summary, Docker brings numerous benefits to the world of DevOps, including consistent and reproducible environments, scalability, resource efficiency, simplified management, and version control. Its ability to package applications and their dependencies into lightweight, portable containers makes it an invaluable tool for modern software development and deployment practices.

Setting up Docker on Windows

In this section, we will explore the process of configuring Docker on the Windows operating system. The focus will be on providing a step-by-step guide that does not rely on the use of unfamiliar terminology or jargon.

- Understanding the requirements for running Docker on Windows

- Downloading and installing Docker Desktop

- Verifying the successful installation of Docker

- Configuring Docker settings for optimal performance

- Exploring the Docker command-line interface (CLI) and its basic functionalities

Before we delve into the technical aspects, it is essential to grasp the fundamental requirements for running Docker on the Windows platform. These requirements will ensure a smooth installation and operation of Docker. Next, we will guide you through the process of downloading and installing Docker Desktop, a user-friendly tool that provides a graphical interface for managing Docker containers.

After the installation is complete, it is crucial to verify that Docker is running properly on your Windows machine. We will walk you through the verification process, ensuring that all necessary components are functioning as expected. Additionally, we'll cover essential settings that can be adjusted to optimize Docker's performance on Windows.

Finally, we will introduce you to the Docker command-line interface (CLI) and its basic functionalities. Familiarizing yourself with CLI commands will enable you to interact with Docker more efficiently and effectively, empowering you to perform a wide range of operations that are pivotal in a DevOps environment.

Step-by-step Guide on Setting Up Docker Environment on Windows

Installing and configuring Docker on a Windows machine is an essential step for anyone involved in DevOps and containerization. This guide will provide you with a comprehensive step-by-step process to install Docker, ensuring you have a fully functional environment to leverage the power of containerization.

- Check System Requirements: Before proceeding with the installation process, it's important to ensure that your Windows machine meets the necessary system requirements for Docker.

- Download Docker: Visit the official Docker website to download the Docker Desktop installer for Windows.

- Run the Installer: Once the Docker Desktop installer is downloaded, run the executable file and follow the on-screen instructions to begin the installation process.

- Choose Installation Options: During the installation, you will have the option to customize certain settings, such as choosing the installation location and enabling Docker to start automatically at system startup.

- Enable Virtualization Features: Docker Desktop on Windows runs on either the WSL 2 backend or Hyper-V, and running Windows containers additionally requires the Hyper-V and Containers Windows features. If a required feature is not already enabled, the installer will prompt you to enable it.

- Complete Installation: After you select your installation options and enable the required features, the installation continues. Once it completes, you will be notified that Docker is successfully installed.

- Verify Installation: To ensure that Docker is fully functional, open a command prompt and run the command "docker version" to confirm the installation and display the Docker version, as well as the client and server information.

Congratulations! You have successfully installed Docker on your Windows machine. You can now start leveraging the power of containerization for your development and deployment activities.
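The verification step can also be scripted. The following is a minimal sketch, assuming the `docker` CLI is on `PATH` and that `docker version --format "{{json .}}"` prints a JSON object with `Client` and `Server` entries, each carrying `Version` and `Os` fields. The helper names are hypothetical, and the field names should be checked against the output of your own Docker version.

```python
import json
import shutil
import subprocess


def summarize_docker_version(raw_json: str) -> dict:
    """Pull the client and server details out of `docker version` JSON output."""
    data = json.loads(raw_json)
    client = data.get("Client") or {}
    server = data.get("Server") or {}  # assumed to be null when the daemon is unreachable
    return {
        "client_version": client.get("Version"),
        "server_version": server.get("Version"),
        "server_os": server.get("Os"),
        "daemon_reachable": bool(server),
    }


def check_docker() -> dict:
    """Run `docker version` and summarize it; raises if the CLI is missing or silent."""
    if shutil.which("docker") is None:
        raise RuntimeError("docker CLI not found on PATH")
    result = subprocess.run(
        ["docker", "version", "--format", "{{json .}}"],
        capture_output=True, text=True, check=False,
    )
    if not result.stdout.strip():
        raise RuntimeError(result.stderr.strip() or "docker version produced no output")
    return summarize_docker_version(result.stdout)
```

Calling `check_docker()` on the freshly installed machine returns a small dictionary. If `daemon_reachable` is false, the CLI is installed but Docker Desktop is probably not running.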

By following this step-by-step guide, you can easily set up Docker on your Windows machine, enabling you to create and manage containers for your DevOps processes. Containerization offers numerous benefits, including easier dependency management, faster deployment, and improved scalability, making it an essential tool for modern software development and deployment workflows.

Exploring the Inner Workings of Dockerized Environments

This section looks at what happens inside Docker containers: the mechanisms that let lightweight, isolated containers support efficient application deployment and scalability.

By examining these mechanisms, we gain an understanding of how Docker containers operate independently from the host system. On Linux, this relies on a combination of kernel namespaces, cgroups, and layered file systems; Windows containers rely on comparable isolation features of the Windows kernel. These underlying technologies allow applications to execute within isolated environments, supporting portability and reproducibility across different platforms.

Furthermore, this section explores the concept of container images, their role in encapsulating application dependencies, and the utilization of Docker registries to manage and distribute these images. With the ability to create, modify, and share container images, developers can seamlessly reproduce and distribute their applications across various stages of the software development lifecycle.

Moreover, this exploration of Docker's inner workings will also shed light on the networking capabilities of containers, enabling seamless communication between different containerized services. Through the usage of customizable network bridges, port mappings, and orchestration tools, developers can construct complex, distributed systems using Docker containers.
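To make the networking description concrete, the sketch below builds the CLI commands for a user-defined network and a container attached to it with published ports. The names `ci-net`, `web`, and `example/web` are hypothetical. The `bridge` driver shown as the default applies to Linux containers; Windows containers typically use the `nat` driver instead, so the driver is left as a parameter.

```python
from typing import Dict, List


def network_create_cmd(name: str, driver: str = "bridge") -> List[str]:
    """Command that creates a user-defined network with the given driver."""
    return ["docker", "network", "create", "--driver", driver, name]


def run_cmd(image: str, name: str, network: str, ports: Dict[int, int]) -> List[str]:
    """Command that starts a container on `network` with host:container port mappings."""
    cmd = ["docker", "run", "-d", "--name", name, "--network", network]
    for host_port, container_port in ports.items():
        cmd += ["-p", f"{host_port}:{container_port}"]
    cmd.append(image)
    return cmd
```

Containers attached to the same user-defined network can reach each other by container name, while `-p` only controls what is reachable from outside the Docker host.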

Finally, we will delve into the security aspects of Docker containers, exploring the mechanisms in place to isolate running processes and safeguard the host system from potential vulnerabilities. From user namespaces to capabilities management, Docker offers a variety of security features that allow administrators to reduce the attack surface and enforce isolation between containers.
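As a hedged example of the capability management mentioned above, this sketch assembles a `docker run` command that drops all Linux capabilities, adds back only those explicitly requested, blocks privilege escalation, and mounts the root file system read-only. It assumes Linux container mode, since these flags target Linux containers. The image name and the non-root `1000:1000` user are placeholders.

```python
from typing import List, Sequence


def hardened_run_cmd(image: str, user: str = "1000:1000",
                     keep_caps: Sequence[str] = ()) -> List[str]:
    """Build a `docker run` command with a reduced attack surface."""
    cmd = [
        "docker", "run", "-d",
        "--cap-drop", "ALL",                    # start with no capabilities
        "--security-opt", "no-new-privileges",  # block setuid-style escalation
        "--read-only",                          # immutable root file system
        "--user", user,                         # do not run as root
    ]
    for cap in keep_caps:
        cmd += ["--cap-add", cap]               # re-add only what is needed
    cmd.append(image)
    return cmd
```

A build agent that needs to run a nested engine cannot be locked down this tightly, which is one reason the security trade-offs of Docker-in-Docker deserve attention.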

In conclusion, this section covers what goes on inside Docker containers, providing an understanding of the underlying technologies, network capabilities, image management, and security aspects that make Docker a powerful tool for building and deploying applications.

| Key Aspects Covered in This Section |
|---|
| 1. Inner workings of Docker containers |
| 2. Kernel namespaces, cgroups, and layered file systems |
| 3. Container images and Docker registries |
| 4. Networking capabilities within Docker |
| 5. Security aspects of Docker containers |

Understanding the concept of Docker containers

In this section, we will delve into the fundamental concept of Docker containers and explore their importance in modern development practices. By gaining a solid understanding of Docker containers, you will be better equipped to appreciate the significance of launching Docker inside Docker containers on Windows for DevOps build agents.

At their core, Docker containers are lightweight and portable units of software that encapsulate everything needed to run an application, including the code, runtime, system tools, libraries, and configuration files. These containers are isolated from each other, allowing applications to run reliably and consistently regardless of differences in underlying environments.

Docker containers rely on containerization technology, which leverages operating system features to provide process-level isolation and resource control for running applications. This approach eliminates many of the dependencies and compatibility issues associated with traditional software installations, making it easier to deploy and manage applications across different environments.

By using Docker containers, developers and operations teams can package their applications and dependencies into self-contained units, making them highly portable and scalable. Containers enable faster development cycles by ensuring consistent behavior across different environments, reducing the risk of issues related to software dependencies and configuration. Additionally, containers simplify deployment processes and allow for efficient scaling, as they can be easily replicated and distributed across various host machines.

Overall, the concept of Docker containers revolutionizes software development and deployment by providing a standardized and efficient approach to package, distribute, and run applications. Understanding the principles behind Docker containers is crucial for harnessing their full potential in the context of launching Docker inside Docker containers on Windows for DevOps build agents.

Running Docker Within a Dockerized Environment

In this section, we will explore the concept of executing Docker commands within a Docker container, creating a self-contained environment for running containerized applications. By utilizing this approach, developers can efficiently manage their containerized workflows and streamline the deployment process.

To achieve this, we will look at setting up a Docker-in-Docker (DinD) configuration, which enables the creation and management of Docker containers from within an existing Docker container. With this technique the Docker tooling ships inside the build agent image, so only the host itself needs a Docker engine installed, and developers get a consistent environment to work within.

We will discuss the various steps involved in configuring a Docker-in-Docker setup, including the necessary dependencies and configuration details. Additionally, we will explore the advantages and limitations of running Docker within a Docker container, such as isolation and security considerations.

Furthermore, we will showcase real-world use cases where running Docker inside a Docker container proves beneficial, such as when building and testing complex multi-service applications or automating CI/CD pipelines.

Throughout this section, we will provide practical examples and best practices for implementing and managing Docker within a Dockerized environment, empowering developers to effectively leverage this powerful tool within their DevOps workflows.
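Two common ways to give a container access to Docker can be sketched as command builders. The first shares the host engine with the container: through `/var/run/docker.sock` for Linux containers, or through the `\\.\pipe\docker_engine` named pipe for Windows containers. The second runs a separate engine inside a privileged container using the official `docker:dind` image, which only works in Linux container mode. The agent image name `example/build-agent` and the helper names are hypothetical, and the agent image is assumed to contain the Docker CLI.

```python
from typing import List

LINUX_SOCKET = "/var/run/docker.sock"
WINDOWS_PIPE = r"\\.\pipe\docker_engine"


def engine_mount(container_os: str) -> str:
    """Return the `-v` argument that exposes the host engine to a container."""
    if container_os == "linux":
        return f"{LINUX_SOCKET}:{LINUX_SOCKET}"
    if container_os == "windows":
        return f"{WINDOWS_PIPE}:{WINDOWS_PIPE}"
    raise ValueError(f"unsupported container OS: {container_os!r}")


def shared_engine_cmd(image: str, container_os: str, name: str = "build-agent") -> List[str]:
    """Agent container that talks to the host's engine (no nested daemon)."""
    return ["docker", "run", "-d", "--name", name, "-v", engine_mount(container_os), image]


def nested_engine_cmd(name: str = "dind") -> List[str]:
    """Privileged container running its own Docker daemon (Linux containers only)."""
    return ["docker", "run", "-d", "--privileged", "--name", name, "docker:dind"]
```

With the shared-engine variant, containers started by the agent are siblings of the agent on the host rather than true children, so they outlive the agent unless cleaned up. The nested variant gives real isolation of images and containers at the cost of requiring privileged mode.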

Exploring the concept of nested Docker containers on Windows

In this section, we will delve into the intriguing concept of nested Docker containers on Windows. We will discuss the idea of running one Docker container within another, exploring the potential benefits and challenges it presents in the context of DevOps build agents.

The concept of nesting Docker containers involves running a Docker container inside another, creating a hierarchical structure. This approach allows for fine-grained isolation and encapsulation of different components and services within a single environment. By nesting containers, we can achieve greater flexibility, scalability, and resource utilization, enabling more efficient management of complex systems.

One of the key advantages of nesting Docker containers is the ability to separate individual components of an application or system into distinct containers, each with its own isolated resources. This can enhance modularity, making it easier to develop, test, and deploy components independently, without affecting the overall system.

However, nesting containers also brings forth certain challenges. It introduces additional complexity and may require careful coordination and orchestration to ensure proper communication between the nested containers. Furthermore, resource utilization and performance considerations need to be taken into account to avoid overloading the host system.

In summary, exploring the concept of nested Docker containers on Windows provides insights into a powerful technique for managing complex systems and applications efficiently. While offering benefits such as enhanced modularity and isolation, it also introduces challenges that need to be carefully addressed. By understanding and leveraging the capabilities of nested containers, DevOps build agents can optimize their workflows and achieve greater productivity.

Benefits of Utilizing Docker within a Dockerized Environment

Implementing a Docker environment within another Docker container offers numerous advantages for DevOps professionals. This unique setup allows for streamlined development processes and enhanced container management. By harnessing the power of Docker within a Dockerized environment, teams can effectively isolate and manage different workloads, improve resource utilization, and achieve greater scalability.

One prominent benefit of launching Docker within a Docker container is the improved isolation it provides. By encapsulating applications and their dependencies within separate containers, it becomes easier to manage and control various components of the application stack. This isolation reduces the risk of conflicts between different software versions and limits how far a runtime error in one build can affect another.

Furthermore, utilizing Docker within a Dockerized environment facilitates efficient resource utilization. By containerizing different components of the development process, teams can allocate resources more effectively, ensuring that each container receives the necessary computing power and memory. This allows for optimal performance and reduces the risk of resource bottlenecks, allowing for smoother and more reliable build processes.

Scalability is another key advantage of launching Docker within a Docker container. With this approach, DevOps teams can easily scale their infrastructure to match the demands of their workload. By dynamically spinning up or tearing down Docker containers, resources can be efficiently allocated based on current needs. This flexibility enables seamless horizontal scaling, providing the ability to handle varying workloads and ensuring high availability for critical applications.
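The spin-up and tear-down logic described here can be sketched as a small reconciliation step: compare the agents that are running with the number wanted, then emit the commands that close the gap. The `agent-N` naming scheme, the image name, and both helper names are assumptions made for illustration.

```python
from typing import List, Tuple


def plan_scaling(running: List[str], desired: int,
                 prefix: str = "agent") -> Tuple[List[str], List[str]]:
    """Return (names_to_start, names_to_stop) needed to reach `desired` agents."""
    if desired < 0:
        raise ValueError("desired must be non-negative")
    current = sorted(running)
    if len(current) > desired:
        return [], current[desired:]       # stop the surplus agents
    taken = set(current)
    to_start: List[str] = []
    n = 1
    while len(current) + len(to_start) < desired:
        name = f"{prefix}-{n}"
        if name not in taken:              # reuse the lowest free number
            to_start.append(name)
        n += 1
    return to_start, []


def scaling_cmds(image: str, running: List[str], desired: int) -> List[List[str]]:
    """Translate the scaling plan into `docker run` and `docker rm -f` commands."""
    to_start, to_stop = plan_scaling(running, desired)
    cmds = [["docker", "run", "-d", "--name", name, image] for name in to_start]
    cmds += [["docker", "rm", "-f", name] for name in to_stop]
    return cmds
```

In a real setup the list of running agents would come from the engine or an orchestrator, and an orchestrator such as Kubernetes would normally perform this reconciliation itself.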

In conclusion, the benefits of launching Docker within a Docker container are significant for DevOps professionals. The improved isolation, efficient resource utilization, and scalability offered by this approach make it an invaluable tool for building and managing containerized environments. By harnessing the power of Docker within a Dockerized setup, teams can streamline their development processes, enhance security, and achieve greater flexibility and efficiency in their infrastructure.

Advantages of Running Docker within Docker Containers for DevOps Build Agents

Containerization has revolutionized the software development and deployment process, empowering DevOps professionals to efficiently manage and streamline the deployment pipeline. Within the realm of DevOps, running Docker inside Docker containers presents several significant advantages for build agents.

| Benefit | Description |
|---|---|
| Isolation | By utilizing Docker within Docker containers, DevOps build agents can achieve enhanced isolation for different stages of the deployment process. Each container acts as a self-contained environment, reducing the risk of conflicts, ensuring consistent results, and promoting easier testing and debugging. |
| Scalability | The ability to easily scale the deployment infrastructure is a crucial aspect of DevOps. Running Docker within Docker containers allows for seamless scalability, as additional build agents can be spun up as needed without impacting existing resources. This flexibility enables efficient resource management and faster response times for fluctuating workloads. |
| Version Control | Docker's version control capabilities are further leveraged when running Docker within Docker containers. DevOps build agents can easily manage different versions of application dependencies, libraries, and frameworks within isolated containers, ensuring consistent and reproducible deployments across different environments. |
| Speed and Efficiency | Running Docker inside Docker containers optimizes the execution speed and efficiency of build agents. By leveraging containerization, DevOps professionals can avoid the overhead of setting up and tearing down resources for each build, leading to faster and more reliable deployments. Additionally, the ability to cache and share container images further enhances the overall speed and efficiency of the build process. |

By embracing the advantages of running Docker inside Docker containers, DevOps build agents can significantly improve the reliability, scalability, and overall efficiency of their deployment workflows. This approach empowers organizations to adopt a more streamlined and agile approach to software delivery, ensuring consistent results and reducing the potential for conflicts and errors.

Challenges and Considerations

When it comes to implementing a solution that involves the utilization of Docker within a Docker container on the Windows platform for DevOps build agents, there are several challenges and considerations that need to be taken into account. This section will explore some of the key issues that may arise during the implementation process.

- Compatibility: Ensuring compatibility between the different versions of Docker and the Windows operating system is crucial. It is important to carefully evaluate the compatibility matrix and choose the appropriate versions to avoid any potential conflicts or limitations.

- Performance: Running Docker inside a Docker container on Windows can have an impact on performance due to the additional layers of virtualization. It is important to analyze the system's resources and optimize the configuration to mitigate any performance bottlenecks.

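- Security: The use of Docker within a Docker container introduces additional security considerations. Running the inner engine in privileged mode, or giving the container access to the host's Docker engine, effectively grants that container control over the host. It is therefore necessary to restrict who can run builds, implement proper isolation mechanisms, and securely manage the Docker environment to prevent unauthorized access or potential breaches.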

- Networking: Configuring networking in a Docker-in-Docker setup on Windows can be challenging. Various network modes and configurations need to be evaluated to ensure seamless communication between containers and external resources.

- Resource Management: Managing resources such as CPU, memory, and disk space becomes more complex when using Docker within a Docker container. It is crucial to implement effective resource allocation and monitoring strategies to optimize resource usage and prevent resource constraints.

By addressing these challenges and considerations, organizations can successfully leverage Docker within Docker containers on Windows for DevOps build agents, thereby enabling efficient and streamlined development and deployment processes.
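For the resource management point, one simple control is to cap each build agent when it is started. The sketch below builds a `docker run` command with the `--cpus` and `--memory` flags; the image name, the default values, and the validation rules are illustrative assumptions rather than recommended settings.

```python
import re
from typing import List

# Accepts values such as "512m" or "4g" (digits plus an optional b/k/m/g unit).
_MEMORY_PATTERN = re.compile(r"^\d+[bkmg]?$")


def limited_run_cmd(image: str, cpus: float = 2.0, memory: str = "4g") -> List[str]:
    """Build a `docker run` command that caps CPU and memory for one agent."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    if not _MEMORY_PATTERN.match(memory.lower()):
        raise ValueError(f"unrecognized memory limit: {memory!r}")
    return [
        "docker", "run", "-d",
        "--cpus", str(cpus),   # fraction of host CPUs the container may use
        "--memory", memory,    # hard memory limit for the container
        image,
    ]
```

Limits set on the outer agent container also bound everything a nested engine starts inside it, whereas containers launched through a shared host engine need their own limits.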

FAQ

Can I launch Docker inside a Docker container on Windows?

Yes, it is possible to launch Docker inside a Docker container on Windows. This allows you to create isolated environments for building and testing applications.

What are the benefits of launching Docker inside a Docker container?

Launching Docker inside a Docker container provides several benefits. It allows you to create multiple isolated environments, helps in streamlining the build and test process, ensures that the build environment is consistent, and provides greater flexibility in managing dependencies.

How can I set up Docker inside a Docker container on Windows?

To set up Docker inside a Docker container on Windows, you need to install Docker Desktop for Windows, configure Docker to run in Linux container mode, and then create a Docker image with the necessary tools and configurations to run Docker inside the container.

Are there any limitations or considerations when launching Docker inside a Docker container on Windows?

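Yes, there are a few limitations to consider. First, running a privileged Docker-in-Docker engine on Windows requires Docker to be in Linux container mode. Windows containers do not support privileged mode, so in Windows container mode the usual alternative is to share the host engine's named pipe with the container. Additionally, there may be performance implications and potential security risks when running Docker inside a Docker container.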
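A build script can check which container mode the engine is in before choosing an approach. The sketch below assumes `docker info --format "{{.OSType}}"` reports `linux` or `windows`; the approach labels it returns are informal names used only in this example.

```python
import subprocess
from typing import List


def engine_os_type() -> str:
    """Ask the running engine whether it hosts Linux or Windows containers."""
    result = subprocess.run(
        ["docker", "info", "--format", "{{.OSType}}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip().lower()


def available_approaches(os_type: str) -> List[str]:
    """Map the engine's container mode to the nesting approaches it allows."""
    if os_type == "linux":
        return ["privileged docker:dind", "host socket mount"]
    if os_type == "windows":
        return ["host named pipe mount"]
    raise ValueError(f"unexpected OSType: {os_type!r}")
```

On a machine with Docker running, `available_approaches(engine_os_type())` gives the options for the current mode.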

What are some use cases for launching Docker inside a Docker container on Windows?

Launching Docker inside a Docker container on Windows is useful for DevOps teams who need to create isolated environments for building and testing applications. It can also be used for continuous integration and deployment pipelines, where each build is executed in a clean and reproducible environment.

What is the purpose of launching Docker inside a Docker container on Windows for DevOps build agents?

The purpose of launching Docker inside a Docker container on Windows for DevOps build agents is to provide a consistent and isolated environment for running Docker commands and building Docker images. This allows DevOps teams to easily manage and automate their build processes, ensuring that builds are reproducible and free from external dependencies.