Modern software hides a great deal beneath its layers of abstraction, and containers are a prime example. Once Docker is installed on Windows 10, containers run quietly in the background, yet it is far from obvious where their files actually live.

Think of a container as a sealed compartment inside your computer. It packages an application together with the libraries and tools it needs and runs it in an isolated environment, without the overhead of a full virtual machine for every application.

So where does all of this live on disk? Docker keeps containers and images in a handful of specific locations on Windows 10. Which one applies depends on whether you run Windows containers or Linux containers, and the folder names give little hint of what they contain, which is why they are easy to miss.

This article explains how container storage works, where Docker keeps its data by default, how to move it, and how to keep a growing collection of containers organized and monitored.

The Fundamentals of Container Storage in the Windows 10 Environment

In the landscape of modern computing, the way containers are stored and managed within the Windows 10 ecosystem plays a crucial role in their efficient utilization. Understanding the inner workings of container storage is essential for developers and system administrators alike.

Here are some key aspects to consider when exploring the basics of container location in the Windows 10 environment:

- The Role of the Host Operating System: The host operating system is the foundation for container storage. On Windows 10, Windows containers store their data directly on the Windows host. Linux containers run inside a lightweight Linux virtual machine that Docker Desktop manages through WSL 2 or Hyper-V, so their data lives on that VM's virtual disk.

- Container Images: A container image is a read-only template, built from layers, from which containers are created. Images pulled from a registry or built locally are stored under Docker's data root on the host.

- Container Registries: Container registries, such as Docker Hub, act as centralized repositories for container images. They make it easy to distribute and share images across environments and to version them with tags.

- Layered File System: Docker images consist of stacked read-only layers, and each container adds a thin writable layer on top. Base layers are stored once and shared by every image and container that uses them, which saves disk space and speeds up container startup and deployment.

- Container Storage Drivers: A storage driver manages how image layers and each container's writable layer are stored on disk. Windows containers use the windowsfilter driver, while Linux containers under Docker Desktop typically use overlay2 inside the VM. Persistent data that should live on other local, network, or cloud storage is handled by volumes and volume plugins, not by the storage driver.
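To see why shared base layers save space, the sketch below models images as ordered lists of layer digests with sizes. It compares the disk space needed when each image keeps a private copy of its layers with the space needed when each distinct layer is stored only once. The image names, digests, and sizes are made-up examples, not real Docker output.

```python
from typing import Dict, List

# Hypothetical layer sizes in MB, keyed by layer digest (illustrative values only).
LAYER_SIZES: Dict[str, int] = {
    "sha256:base": 250,    # shared base OS layer
    "sha256:runtime": 80,  # shared runtime layer
    "sha256:app-a": 12,
    "sha256:app-b": 9,
}

# Each image is an ordered stack of layer digests, bottom layer first.
IMAGES: Dict[str, List[str]] = {
    "app-a": ["sha256:base", "sha256:runtime", "sha256:app-a"],
    "app-b": ["sha256:base", "sha256:runtime", "sha256:app-b"],
}


def naive_size(images: Dict[str, List[str]], sizes: Dict[str, int]) -> int:
    """Disk usage if every image stored its own full copy of its layers."""
    return sum(sizes[layer] for layers in images.values() for layer in layers)


def shared_size(images: Dict[str, List[str]], sizes: Dict[str, int]) -> int:
    """Disk usage when each distinct layer is stored once and shared."""
    unique_layers = {layer for layers in images.values() for layer in layers}
    return sum(sizes[layer] for layer in unique_layers)


if __name__ == "__main__":
    print("without sharing:", naive_size(IMAGES, LAYER_SIZES), "MB")
    print("with sharing:   ", shared_size(IMAGES, LAYER_SIZES), "MB")
```

On a real machine, `docker system df` reports how much space images, containers, and volumes actually use.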

By delving into the fundamentals of container storage in the Windows 10 environment, individuals can optimize their container workflows, ensure robust data management, and enhance overall system performance.

Understanding the Purpose of Docker Containers

In this section, we will explore the fundamental concept behind the utilization of Docker containers in modern computing environments. By leveraging the power of containerization, developers and system administrators are able to achieve greater flexibility, efficiency, and scalability in their applications and infrastructure.

Containerization refers to the process of bundling an application and its dependencies into a standardized, lightweight unit called a container. These containers encapsulate the software and its required libraries, frameworks, and system tools, enabling it to run consistently and reliably across different computing environments.

One of the primary purposes of Docker containers is to make applications easy to deploy and manage on many different machines. Because a container carries its own dependencies, it behaves the same on any host that provides a compatible kernel. A Linux container needs a Linux kernel, which Docker Desktop supplies through a VM on Windows 10, and a Windows container needs a Windows host. Within those limits, containers give applications a stable, isolated environment regardless of the rest of the host's configuration.

Containers promote modularity and portability, making it easier to package and distribute applications. With containerization, developers can avoid conflicts between different software components and dependencies, simplifying the development process and reducing compatibility issues.

Moreover, containerized applications can be efficiently scaled horizontally, allowing them to handle increased workloads and traffic demands. By leveraging container orchestration platforms and tools, such as Kubernetes, administrators can easily manage and scale their applications across multiple servers or cloud instances.

In summary, the purpose of Docker containers is to provide a lightweight, portable, and scalable environment for running applications. By encapsulating the application and its dependencies, containers ensure consistent behavior and ease the deployment process, ultimately enhancing productivity and efficiency in modern software development and deployment workflows.

Understanding the Inner Workings of Containers in the Windows 10 Environment

In this section, we will delve into the intricate mechanics behind the functioning of containers within the Windows 10 environment. By exploring the underlying processes and concepts that drive containerization, we will gain a deeper understanding of how these self-contained entities operate seamlessly and independently.

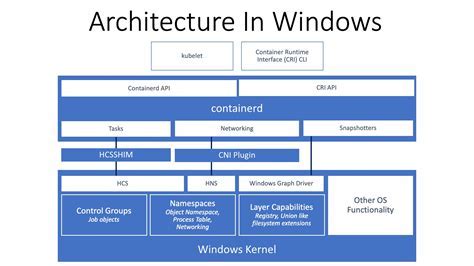

Underlying Mechanisms: On Windows 10, containers avoid the cost of full virtual machines in one of two ways. Windows containers can run with process isolation, sharing the host kernel, or with Hyper-V isolation, where each container gets its own kernel inside a minimal utility VM. Hyper-V isolation is the default on Windows 10. Linux containers run inside a lightweight Linux VM that Docker Desktop manages through WSL 2 or Hyper-V.

Image Layers: A fundamental aspect of containerization is the concept of image layers. Each layer records the file system changes made by one step of the image build, such as installing a runtime or copying application files. Layers are read-only and stacked on top of each other. When a container starts, Docker adds a thin writable layer above them, so containers can be created quickly and the same layers can be shared across images and systems.
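The way stacked layers combine into a single view can be sketched as a lookup that searches from the top (writable) layer downward and returns the first match. This minimal model assumes each layer is a dictionary of paths and uses `None` as a simplified deletion marker. Real storage drivers such as windowsfilter and overlay2 implement this inside the file system and record deletions in their own formats.

```python
from typing import Dict, List, Optional

# A layer maps file paths to contents; None marks a file deleted in that layer
# (a simplified stand-in for the deletion records real drivers use).
Layer = Dict[str, Optional[str]]


def resolve(layers: List[Layer], path: str) -> Optional[str]:
    """Return the visible contents of `path`, searching the top layer first.

    `layers` is ordered bottom to top, so the last element is the container's
    writable layer and wins over everything beneath it.
    """
    for layer in reversed(layers):
        if path in layer:
            return layer[path]  # None if the file was deleted in this layer
    return None  # the path never existed in any layer


def visible_files(layers: List[Layer]) -> Dict[str, str]:
    """Merge all layers into the single view a container sees."""
    merged: Dict[str, Optional[str]] = {}
    for layer in layers:  # higher layers overwrite lower ones
        merged.update(layer)
    return {p: c for p, c in merged.items() if c is not None}


base: Layer = {"/etc/config": "default", "/bin/tool": "v1"}
app: Layer = {"/app/main": "code"}
writable: Layer = {"/etc/config": "edited", "/bin/tool": None}

stack = [base, app, writable]
print(resolve(stack, "/etc/config"))
print(sorted(visible_files(stack)))
```

The edit to `/etc/config` and the deletion of `/bin/tool` both land in the writable layer, and `base` itself is never modified. This is why many containers can share the same read-only image layers.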

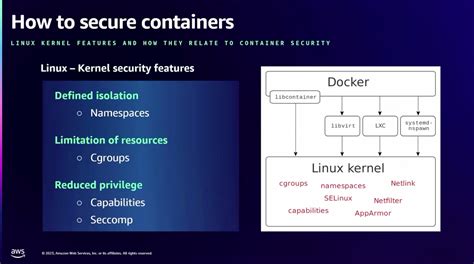

Kernel Isolation: Containers must keep their processes separate from other containers and from the host. On Linux this is done with namespaces and control groups. Windows containers rely on the Windows equivalents, job objects and server silos. With process isolation, these give each container its own view of processes, the file system, the registry, and network interfaces. With Hyper-V isolation, the container also runs on its own kernel, which adds a further boundary.

Resource Management: Limits on CPU, memory, and I/O are set per container when it starts and enforced by the host kernel (on Windows, through job objects). This lets containers coexist on the shared host without one of them starving the others.
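Isolation mode and resource limits are both chosen per container at start time. The helper below builds a `docker run` argument list using the real `--isolation`, `--cpus`, and `--memory` flags. The function name and the image name `example/app:1.0` are hypothetical. The command is only printed, not executed, so the sketch runs without Docker installed.

```python
from typing import List, Optional

# Values accepted by `docker run --isolation` ("process"/"hyperv" apply to Windows containers).
VALID_ISOLATION = {"default", "process", "hyperv"}


def build_run_args(
    image: str,
    isolation: str = "default",
    cpus: Optional[float] = None,
    memory: Optional[str] = None,
) -> List[str]:
    """Build a `docker run` command line with isolation and resource limits.

    isolation: "process" shares the host kernel; "hyperv" runs the container
        in its own lightweight utility VM.
    cpus: number of CPUs the container may use, e.g. 1.5.
    memory: memory cap in Docker's size syntax, e.g. "512m" or "2g".
    """
    if isolation not in VALID_ISOLATION:
        raise ValueError(f"unknown isolation mode: {isolation!r}")
    args = ["docker", "run", "--rm", "--isolation", isolation]
    if cpus is not None:
        args += ["--cpus", str(cpus)]
    if memory is not None:
        args += ["--memory", memory]
    args.append(image)
    return args


if __name__ == "__main__":
    cmd = build_run_args("example/app:1.0", isolation="hyperv", cpus=1.5, memory="512m")
    print(" ".join(cmd))
    # To start the container, pass `cmd` to subprocess.run(cmd, check=True).
```

Returning a list, not a single string, means the arguments can be passed to `subprocess.run` without shell quoting.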

By diving into the inner workings of containers in the Windows 10 environment, we can gain a comprehensive understanding of how these self-contained entities effectively encapsulate and operate applications. This knowledge equips us with the necessary insights to leverage the power and benefits of containerization within our own development and deployment workflows.

Untangling the Falsehoods Surrounding the Location of Docker Containers

Many explanations of where Docker containers live on Windows 10 are only partly right. A common belief is that each container is simply a VHD file sitting in one folder on the C: drive. In reality, the answer depends on whether you run Windows containers or Linux containers, and on which backend Docker Desktop uses.

Exploring the Default Path of Docker Instances in Windows 10

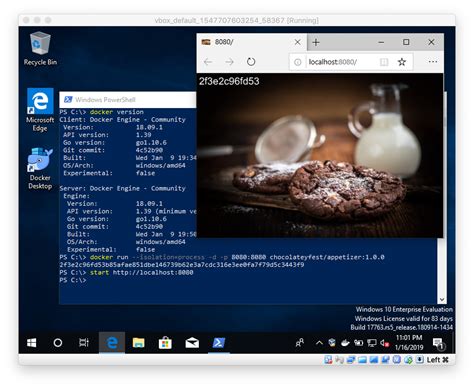

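Knowing where Docker creates and stores its data by default on Windows 10 shows how these instances are organized. The default location depends on the container type:

- Windows containers: Docker's data root is "C:\ProgramData\Docker". The "containers" subfolder holds each container's configuration and logs, and "windowsfilter" holds image layers and each container's writable layer.

- Linux containers (Docker Desktop): from the engine's point of view, containers and images live under /var/lib/docker inside the Linux VM that Docker Desktop manages. That VM's disk is a single VHDX file on the Windows side. With the WSL 2 backend it sits under "%LOCALAPPDATA%\Docker\wsl"; with the Hyper-V backend it is typically under "C:\ProgramData\DockerDesktop\vm-data". The exact file name varies between Docker Desktop versions.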
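Rather than relying on defaults, you can ask the engine directly where its data lives. `docker info --format "{{json .}}"` prints the engine's details as JSON, including the `OSType`, `Driver`, and `DockerRootDir` fields. The sketch below parses that output. The `SAMPLE` string stands in for real output, and its values are illustrative.

```python
import json
import subprocess
from typing import Dict


def storage_summary(info_json: str) -> Dict[str, str]:
    """Extract the storage-related fields from `docker info` JSON output."""
    info = json.loads(info_json)
    return {
        "os_type": info.get("OSType", "unknown"),  # "windows" or "linux"
        "driver": info.get("Driver", "unknown"),   # e.g. windowsfilter, overlay2
        "root_dir": info.get("DockerRootDir", "unknown"),
    }


def query_engine() -> str:
    """Run `docker info` and return its JSON output (requires a running Docker)."""
    result = subprocess.run(
        ["docker", "info", "--format", "{{json .}}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


# Illustrative output from an engine running Windows containers.
SAMPLE = r'{"OSType": "windows", "Driver": "windowsfilter", "DockerRootDir": "C:\\ProgramData\\Docker"}'

if __name__ == "__main__":
    try:
        summary = storage_summary(query_engine())
    except (OSError, subprocess.CalledProcessError, ValueError):
        summary = storage_summary(SAMPLE)  # Docker missing, stopped, or unreadable
    print(summary)
```

For Linux containers under Docker Desktop, expect `root_dir` to be a path inside the VM, such as /var/lib/docker, not a Windows path.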

Exploring Different Ways to Modify the Storage Path of Docker Instances

In this section, we look at several ways to change the file path where Docker stores its data. Moving it can free up space on the system drive or put images and containers on a larger or faster disk.

| Approach | Description |
| --- | --- |
| 1. Altering the Default Storage Driver Configuration | The storage driver (windowsfilter or overlay2) determines how layers are stored, not where, so changing driver options alone does not relocate data. For Linux containers, Docker Desktop keeps everything in its VM disk image, which recent versions let you move under Settings > Resources > Advanced (disk image location). |
| 2. Utilizing Symbolic Links | Stop Docker, move its data folder to another drive, and create a directory junction or symbolic link (for example with mklink /J) at the old path. This works without changing Docker's configuration, but it is less transparent than setting data-root, and some tools may not follow the link correctly. |
| 3. Employing Docker Volumes | Volumes do not move the container itself. Instead, they keep the data an application writes outside the container's writable layer, and a bind mount (docker run -v <host-path>:<container-path>) can place that data on any drive you choose. |
| 4. Customizing Docker Root Directory | Set the "data-root" property in the daemon configuration (by default "C:\ProgramData\Docker\config\daemon.json" for Windows containers) and restart Docker. New images and containers are stored in the new folder; existing data is not migrated automatically. |
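Approach 4 comes down to a single key in a JSON file. The sketch below merges a `data-root` value into an existing daemon.json while preserving any other settings. The default path is the Docker Engine location named in this article's FAQ. The target folder "D:\DockerData" is a hypothetical example. Stop the Docker service before running it and start it again afterwards. The sketch changes only the configuration and does not move existing data.

```python
import json
from pathlib import Path

# Default Docker Engine config path on Windows; the file may not exist yet.
DAEMON_JSON = Path(r"C:\ProgramData\Docker\config\daemon.json")


def merge_data_root(existing: str, new_root: str) -> str:
    """Return daemon.json text with "data-root" set, keeping all other keys."""
    config = json.loads(existing) if existing.strip() else {}
    config["data-root"] = new_root
    return json.dumps(config, indent=2)  # json.dumps escapes backslashes correctly


def set_data_root(config_path: Path, new_root: str) -> None:
    """Rewrite daemon.json in place (run elevated, with Docker stopped)."""
    existing = ""
    if config_path.exists():
        # "utf-8-sig" strips a byte-order mark that some editors add.
        existing = config_path.read_text(encoding="utf-8-sig")
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(merge_data_root(existing, new_root), encoding="utf-8")
```

A typical call from an elevated Python session is `set_data_root(DAEMON_JSON, r"D:\DockerData")`, followed by restarting the Docker service. Separating the pure `merge_data_root` step from the file I/O lets you preview the resulting JSON before you write it.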

By exploring these different approaches, you will gain a comprehensive understanding of how to change the location of Docker containers. This flexibility will enable you to optimize your Docker environment, meet specific storage requirements, and enhance your overall Docker experience.

Navigating Potential Challenges and Considerations with Container Positioning

In this section, we will explore the various obstacles and factors that need to be taken into account when determining the placement of containers in different operating environments.

Container placement covers two related questions: which disk a container's data lives on, and which host the container runs on. Each affects the host system, the wider infrastructure, and the efficiency and performance of the containers themselves.

One key consideration is placement within the host system. The right choice depends on resource allocation, network connectivity, and compatibility. For example, Windows containers that use process isolation need a base image whose Windows version matches the host's, whereas Hyper-V isolation can run older images on a newer host. Choosing placement with these factors in mind helps avoid conflicts and bottlenecks.

Another important aspect to consider is the impact on infrastructure. Placing containers in a strategic manner can help optimize resource utilization and enhance scalability. By carefully considering factors such as load balancing and network bandwidth, businesses can ensure that their containers are deployed in a way that minimizes strain on the overall system.

Efficiency and performance are also critical considerations. Placing Docker's data root on a fast SSD, for instance, speeds up image extraction and container startup. Placing containers according to workload requirements and specific objectives reduces overhead and keeps performance predictable.

In conclusion, navigating challenges and considerations related to container positioning involves a comprehensive understanding of the impact on the host system, the infrastructure, and the overall efficiency and performance. By carefully evaluating these factors and making informed decisions, businesses can effectively optimize container placement and maximize the benefits of containerization technology.

Choosing the Optimal Environment for Enhancing Docker Container Performance

In Docker containerization, the choice of environment has a large effect on performance. This section covers how to decide where Docker containers should run and what infrastructure they need, so that organizations can use resources efficiently and keep operations running smoothly.

Assessing the Appropriate Setting

When selecting a setting for Docker containers, evaluate resource availability, network connectivity, and container dependencies. Tailoring the environment to the containers' actual needs, rather than relying on a generic setup, keeps resource usage efficient and improves performance.

Understanding Infrastructure Requirements

Each Docker container possesses distinct infrastructure needs that must be met for optimal performance. By comprehensively understanding the resource demands and dependencies of containers, organizations can tailor the environment accordingly. This includes ensuring an adequate amount of CPU, memory, disk space, and network bandwidth to accommodate the containerized applications. By fine-tuning the infrastructure settings, businesses can eliminate potential bottlenecks, enhance response times, and achieve a highly efficient and streamlined Docker container environment.
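A quick way to check whether a set of planned containers fits on a host is to add up their memory limits. The sketch parses sizes in the suffix style that Docker's `--memory` flag accepts (b, k, m, g, treated as binary multiples of 1024). It then compares the total with the memory you intend to give Docker. The container names and numbers are hypothetical.

```python
import re
from typing import Dict

# Binary multipliers for the b/k/m/g suffixes used by `docker run --memory`.
_UNITS = {"": 1, "b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}
_SIZE = re.compile(r"^\s*(\d+(?:\.\d+)?)\s*([bkmg]?)\s*$", re.IGNORECASE)


def parse_size(text: str) -> int:
    """Convert a size such as "512m" or "2g" to bytes."""
    match = _SIZE.match(text)
    if not match:
        raise ValueError(f"unrecognised size: {text!r}")
    number, unit = match.groups()
    return int(float(number) * _UNITS[unit.lower()])


def fits(limits: Dict[str, str], available: str) -> bool:
    """True if the summed memory limits fit within the available memory."""
    total = sum(parse_size(v) for v in limits.values())
    return total <= parse_size(available)


planned = {"web": "512m", "worker": "1g", "cache": "256m"}  # hypothetical containers
print(fits(planned, "2g"))  # True: 1.75 GiB of limits vs 2 GiB available
```

The sum of limits is a conservative upper bound. Containers rarely use their full limit at the same time, but planning against the sum avoids surprises under peak load.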

Considering Proximity and Compatibility

The proximity and compatibility of the Docker container environment with its surrounding ecosystem significantly affect performance. Containers located close to the services and data they depend on see lower latency and faster data transfer. Compatibility with the host matters too. With Docker Desktop's WSL 2 backend, for example, Linux containers read and write files stored inside the WSL file system much faster than files bind-mounted from a Windows drive. Optimizing both proximity and compatibility streamlines operations and improves the overall application delivery process.

Monitoring and Continuous Improvement

After selecting an optimal environment for Docker containers, it is crucial to continuously monitor and analyze performance metrics. This allows organizations to identify potential performance bottlenecks, resource limitations, and other areas for improvement. Regularly reviewing and fine-tuning the environment based on real-time insights enables businesses to continually optimize Docker container performance. This iterative approach drives continuous improvement and helps organizations reap the full benefits of containerization.
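A lightweight version of this monitoring loop can be built on `docker stats --no-stream --format "{{json .}}"`, which prints one JSON object per running container with fields such as `Name`, `CPUPerc`, and `MemPerc`. The sketch flags containers above a CPU threshold. The 80% default threshold and the sample lines are illustrative, not recommendations.

```python
import json
from typing import List


def percent(value: str) -> float:
    """Turn a docker stats percentage string such as "12.5%" into 12.5."""
    return float(value.strip().rstrip("%"))


def busy_containers(stats_output: str, cpu_threshold: float = 80.0) -> List[str]:
    """Return names of containers whose CPU usage exceeds the threshold."""
    busy = []
    for line in stats_output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        row = json.loads(line)
        if percent(row["CPUPerc"]) > cpu_threshold:
            busy.append(row["Name"])
    return busy


# Illustrative output; in practice, capture it with subprocess.run(...).
SAMPLE = "\n".join([
    '{"Name": "web", "CPUPerc": "91.30%", "MemPerc": "40.10%"}',
    '{"Name": "worker", "CPUPerc": "12.00%", "MemPerc": "22.50%"}',
])

print(busy_containers(SAMPLE))
```

Running this on a schedule and recording the results over time gives the kind of trend data that continuous tuning depends on.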

Exploring Strategies for Efficiently Organizing Containers on the Windows 10 Operating System

In this section, we will delve into the most effective practices for structuring and managing containers on the Windows 10 platform. By implementing these strategies, you can enhance the efficiency and organization of your containerized applications without compromising performance or stability.

Optimizing Container Grouping: When it comes to organizing containers on Windows 10, grouping them based on specific characteristics can significantly improve maintenance and accessibility. By categorizing containers according to their functionalities, dependencies, or application domains, you can easily identify and manage related containers as coherent units.

Implementing Metadata: Utilizing metadata allows for the seamless organization and retrieval of relevant information about containers. By tagging containers with metadata, such as version numbers, labels, or descriptions, you can quickly filter and search for specific containers based on your requirements. This practice also facilitates effective tracking and auditing of container instances.

Utilizing Naming Conventions: Applying consistent and clear naming conventions to container artifacts simplifies identification and reduces ambiguity. By adhering to a standardized naming pattern, you can easily recognize the purpose, role, and dependencies of each container. This practice is particularly useful when managing a large number of containers or collaborating with multiple development teams.
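A naming convention is easy to enforce in a script. Docker itself accepts container names that start with a letter or digit, followed by letters, digits, underscores, periods, or hyphens. The project-role-environment pattern layered on top below is a hypothetical team convention, not a Docker requirement.

```python
import re

# Character rule Docker applies to container names.
DOCKER_NAME = re.compile(r"^[a-zA-Z0-9][a-zA-Z0-9_.-]+$")

# Hypothetical team convention: <project>-<role>-<env>, e.g. "shop-api-dev".
CONVENTION = re.compile(
    r"^(?P<project>[a-z0-9]+)-(?P<role>[a-z0-9]+)-(?P<env>dev|test|prod)$"
)


def check_name(name: str) -> dict:
    """Validate a container name and split it into its convention parts."""
    if not DOCKER_NAME.match(name):
        raise ValueError(f"{name!r} is not a valid Docker container name")
    match = CONVENTION.match(name)
    if not match:
        raise ValueError(f"{name!r} does not follow <project>-<role>-<env>")
    return match.groupdict()


print(check_name("shop-api-dev"))
```

Checking the Docker rule first separates names Docker would reject outright from names that are merely off-convention.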

Implementing Folder Hierarchies: Docker does not store containers in user-visible folders, but the files that define them, such as Dockerfiles and Compose files, can be organized in a hierarchical project structure that mirrors the relationships between applications. With Docker Compose, each project directory also supplies a default project name that groups its containers. This makes it easier to locate, update, or remove related containers and their associated resources.

Utilizing Container Labels and Annotations: Leveraging container labels and annotations permits additional metadata to be associated with containers, enhancing their discoverability and categorization. These lightweight yet powerful mechanisms facilitate the classification and management of containers based on various criteria, including specific project phases, environments, or ownership.
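Labels are set with `docker run --label key=value`, and `docker ps --filter "label=key=value"` filters on them directly. To post-process the list yourself, `docker ps --format "{{json .}}"` emits one JSON object per container. In recent versions, `Labels` appears there as a single comma-separated string. The sketch parses that string and groups containers by one label. The `env` key and the sample data are hypothetical.

```python
import json
from collections import defaultdict
from typing import Dict, List


def parse_labels(raw: str) -> Dict[str, str]:
    """Turn "env=dev,team=web" into {"env": "dev", "team": "web"}."""
    labels = {}
    for pair in raw.split(","):
        if "=" in pair:
            key, value = pair.split("=", 1)
            labels[key.strip()] = value.strip()
    return labels


def group_by_label(ps_output: str, key: str) -> Dict[str, List[str]]:
    """Group container names by the value of one label (missing -> "<none>")."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for line in ps_output.splitlines():
        if line.strip():
            row = json.loads(line)
            value = parse_labels(row.get("Labels", "")).get(key, "<none>")
            groups[value].append(row["Names"])
    return dict(groups)


SAMPLE = "\n".join([
    '{"Names": "shop-api-dev", "Labels": "env=dev,team=shop"}',
    '{"Names": "shop-db-dev", "Labels": "env=dev"}',
    '{"Names": "legacy-job", "Labels": ""}',
])
print(group_by_label(SAMPLE, "env"))
```

This simple parser assumes label values contain no commas. For those cases, `docker inspect` returns labels as a proper JSON object.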

Considering Resource Limits: Allocating appropriate resource limits to containers is crucial for predictable performance. Constraints on CPU and memory, and on disk size where the storage driver supports it, reduce resource contention and prevent one container from starving the rest of the system.

Documenting Container Configurations: Maintaining thorough documentation of container configurations, including dependencies, environment variables, and networking details, is essential for efficient container management. By providing detailed and up-to-date documentation, you can streamline troubleshooting, replication, and container scaling processes.

Regular Maintenance and Cleanup: Regularly removing unused or outdated containers and images, for example with docker container prune and docker image prune, keeps your environment organized and frees disk space under Docker's data root. It also prevents leftover artifacts from accumulating and consuming storage.
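`docker container prune` removes all stopped containers in one step. To review candidates before deleting them, the sketch below picks exited containers out of `docker ps -a --format "{{json .}}"` output and builds a `docker rm` command for them without running it. It relies on the `State` field that recent Docker versions include in this output. The sample data is illustrative.

```python
import json
from typing import List


def exited_ids(ps_output: str) -> List[str]:
    """Return IDs of containers whose State is "exited"."""
    ids = []
    for line in ps_output.splitlines():
        if line.strip():
            row = json.loads(line)
            if row.get("State") == "exited":
                ids.append(row["ID"])
    return ids


def removal_command(ids: List[str]) -> List[str]:
    """Build a `docker rm` command for the given IDs (empty list if none)."""
    return ["docker", "rm", *ids] if ids else []


SAMPLE = "\n".join([
    '{"ID": "a1b2c3", "Names": "old-job", "State": "exited"}',
    '{"ID": "d4e5f6", "Names": "web", "State": "running"}',
])
print(removal_command(exited_ids(SAMPLE)))
```

Because the command is built first and only run afterwards (for example with `subprocess.run`), you can log or review it before anything is deleted.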

Implementing Security Best Practices: Embedding security measures within your container management practices is crucial to safeguarding your applications and data. By adhering to recommended security practices, such as container isolation, vulnerability scanning, and secure image sourcing, you can protect your containers from potential threats and vulnerabilities.

Monitoring and Analysis: Implementing monitoring and analysis tools allows for continuous assessment and optimization of container usage. By gathering metrics, analyzing performance trends, and identifying potential bottlenecks, you can refine your container organization strategies and optimize resource allocation.

Utilizing Tools and Techniques to Monitor the Location of Docker Containers

In this section, we will explore various tools and techniques that can be employed to efficiently monitor the whereabouts of Docker containers. By adopting these methods, administrators can gain valuable insights into the positioning of containers, enabling better management and troubleshooting.

1. Utilizing Resource Control:

- Resource statistics always come from a specific engine: docker stats and docker ps list only the containers running on the host whose Docker engine you are connected to.

- Querying each host's engine in turn (for example through Docker contexts) shows where a particular container is executing, and its CPU and memory usage confirms which host is carrying the workload.

2. Employing Network Tracing:

- Monitoring network traffic can offer valuable information about container location.

- Network tracing tools can help administrators track the network connections established by containers, providing clues about their physical or virtual location.

3. Leveraging Container Orchestration Platforms:

- Container orchestration platforms like Kubernetes offer built-in features for container management and visibility.

- By utilizing these platforms, administrators can easily track the location of containers in a cluster and gain insights into their deployments.

4. Monitoring Container Events:

- Monitoring container events such as create, start, stop, die, and destroy provides real-time information about each container's lifecycle on a host.

- Administrators can capture and analyze these events with the docker events command or through the container runtime's interfaces.

5. Utilizing Container Labels and Metadata:

- Adding labels and metadata to containers can serve as a useful mechanism for tracking their location.

- Administrators can assign custom labels indicating the physical or virtual environment where a particular container is deployed.
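For the event-based approach in item 4, `docker events --format "{{json .}}"` streams one JSON object per event. Container events carry a `Type` of "container", an `Action` such as "start" or "die", and an `Actor` whose `Attributes` include the container name. The sketch summarizes the latest action per container from captured lines. The sample events are illustrative and trimmed to the fields used.

```python
import json
from typing import Dict


def latest_actions(events_output: str) -> Dict[str, str]:
    """Map each container name to the most recent action seen for it."""
    latest: Dict[str, str] = {}
    for line in events_output.splitlines():
        if not line.strip():
            continue
        event = json.loads(line)
        if event.get("Type") != "container":
            continue  # ignore image, network, and volume events
        actor = event.get("Actor", {})
        name = actor.get("Attributes", {}).get("name", actor.get("ID", "?"))
        latest[name] = event["Action"]  # later lines overwrite earlier ones
    return latest


SAMPLE = "\n".join([
    '{"Type": "container", "Action": "create", "Actor": {"ID": "a1", "Attributes": {"name": "web"}}}',
    '{"Type": "container", "Action": "start", "Actor": {"ID": "a1", "Attributes": {"name": "web"}}}',
    '{"Type": "network", "Action": "connect", "Actor": {"ID": "n1", "Attributes": {"name": "bridge"}}}',
    '{"Type": "container", "Action": "die", "Actor": {"ID": "b2", "Attributes": {"name": "job"}}}',
])
print(latest_actions(SAMPLE))
```

Since `docker events` streams indefinitely, add its `--since` and `--until` options to capture a finite window for analysis like this.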

By employing these tools and techniques, administrators can effectively monitor the location of Docker containers, enabling better management and troubleshooting in diverse computing environments.

FAQ

What is the real location of Docker container in Windows 10?

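It depends on the container type. Windows containers are stored under Docker's data root, "C:\ProgramData\Docker" by default. The "containers" subfolder holds each container's configuration and logs, while image layers and each container's writable layer live under "windowsfilter". Linux containers run inside the lightweight VM that Docker Desktop manages, so their files sit under /var/lib/docker inside that VM, whose disk is a single VHDX file on the Windows side. For Linux containers there is no per-container VHD; all containers and images share the VM's disk image.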
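To find one container's files, `docker inspect <container>` returns a JSON array whose `GraphDriver` section names the storage driver and lists the host paths it uses, and whose `LogPath` field points to the container's log file. The sketch extracts those fields. The sample is a trimmed, illustrative windowsfilter entry, and its `dir` key is an assumption. The keys under `Data` differ between drivers; overlay2, for instance, reports `UpperDir` and `MergedDir` paths inside the Linux VM.

```python
import json
from typing import Dict


def container_paths(inspect_output: str) -> Dict[str, object]:
    """Summarise where Docker keeps one container's data.

    `inspect_output` is the JSON array printed by `docker inspect <container>`.
    """
    info = json.loads(inspect_output)[0]  # inspect always returns a list
    driver = info.get("GraphDriver", {})
    return {
        "name": info.get("Name", "").lstrip("/"),
        "driver": driver.get("Name"),
        "layer_paths": driver.get("Data") or {},
        "log_path": info.get("LogPath"),
    }


# Trimmed, illustrative output for a Windows container (IDs shortened).
SAMPLE = r'''[{
  "Name": "/web",
  "LogPath": "C:\\ProgramData\\Docker\\containers\\abc123\\abc123-json.log",
  "GraphDriver": {
    "Name": "windowsfilter",
    "Data": {"dir": "C:\\ProgramData\\Docker\\windowsfilter\\abc123"}
  }
}]'''

for key, value in container_paths(SAMPLE).items():
    print(f"{key}: {value}")
```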

Can I change the location of Docker containers in Windows 10?

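Yes. For Windows containers, stop the Docker service and open the daemon configuration file (by default "C:\ProgramData\Docker\config\daemon.json"; create it if it does not exist). Set the "data-root" property to the folder you want, remembering that backslashes must be doubled in JSON, for example {"data-root": "D:\\DockerData"}. Docker Desktop also exposes this JSON under Settings > Docker Engine. Save the file and start Docker again. New images and containers are then stored in the new location. Existing ones are not moved automatically, so you will need to pull or rebuild them, or migrate the old data yourself. For Linux containers under Docker Desktop, move the VM disk image from Docker Desktop's settings instead.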

Are Docker containers stored within the Windows file system?

It depends on the container type. Windows containers are stored as ordinary folders under Docker's data root on the Windows file system, although each container's writable layer (its scratch space) is backed by a virtual disk. Linux containers are stored inside the disk image of Docker Desktop's Linux VM, a VHDX file, so their files do not appear as normal Windows folders. In both cases, each container sees its own isolated file system, separate from the host's.

What happens if I delete the VHD file of a Docker container in Windows 10?

Do not delete Docker's files by hand. Removing a container's files from the data root bypasses Docker's bookkeeping, so the container may still appear in "docker ps -a" but fail to start. Deleting Docker Desktop's VHDX disk image wipes every image, container, and volume stored in it. To remove a container cleanly, use "docker rm" (or "docker container prune" for all stopped containers) so that Docker deletes both its files and its metadata.