GPU acceleration is essential for many high-performance computing applications, ranging from deep learning to data analytics, so it is frustrating when the Nvidia-smi tool fails inside a Windows-based Docker container. This article is a troubleshooting resource for that specific combination. It covers likely causes, solutions, and workarounds so that you can get your GPU-accelerated workflows running again.

This resource looks at how Nvidia-smi behaves within a Docker environment on the Windows operating system. Understanding the challenges and limitations of that setup makes it easier to identify the causes of commonly encountered issues. We will cover a range of diagnostic techniques and examine potential causes such as driver compatibility and container configuration, so that you can identify and address the specific roadblocks that hinder the effective use of Nvidia-smi.

We will also cover troubleshooting methods and best practices for resolving Nvidia-smi-related issues: thorough diagnostics, careful reading of error messages, and logical elimination of possible causes. Step by step, we will address common pitfalls and explain how to overcome them, so that you can make full use of Nvidia-smi within your Windows-based Docker container environment.

Troubleshooting Tips for Nvidia-smi Issue in Windows Docker Environment

In this section, we will explore troubleshooting techniques for problems with Nvidia-smi inside a Windows Docker container. Following these tips can help you resolve issues that prevent Nvidia-smi from working properly in a Windows Docker environment.

- Verify GPU Driver Installation: Ensure that the GPU driver is correctly installed and compatible with the Windows Docker version you are using. It is essential to have the appropriate GPU driver installed to enable Nvidia-smi functionality.

- Check Docker Configuration: Review the Docker configuration settings to ensure that necessary configurations related to GPU usage and device accessibility are correctly set. Make sure that GPU support is enabled in the Docker configuration.

- Confirm Docker Image Compatibility: Ensure that the Docker image being used is compatible with Nvidia-smi and GPU functionalities. Nvidia-smi requires a compatible image containing the necessary components to interact with the GPU hardware in the Docker container.

- Check Docker Version: Verify that you are using the latest version of Docker, as older versions may have compatibility issues with Nvidia-smi and GPU support. Upgrading to the latest Docker version can often resolve such compatibility problems.

- Review System Requirements: Verify that your system meets the minimum requirements for running Nvidia-smi within a Windows Docker container. Check the GPU specifications, OS version, and other prerequisites specified by Nvidia for proper functioning.

- Inspect Resource Allocation: Ensure that the GPU is actually exposed to the Docker container. Docker assigns whole GPU devices to a container (for example through the "--gpus" flag of "docker run") rather than slices of GPU memory or compute cores, so a container started without GPU access will not be able to run Nvidia-smi correctly.

- Double-check Dockerfile: Review the Dockerfile used to build the Docker image and ensure that it includes the necessary instructions and dependencies for Nvidia-smi. Any missing components required by Nvidia-smi should be added in the Dockerfile.

- Seek Community Support: If the above troubleshooting steps do not resolve the issue, consider seeking support from the Docker community or Nvidia forums. Describe your problem in detail, including relevant error messages or log outputs, to get assistance from knowledgeable individuals.

By following these troubleshooting tips, you can overcome obstacles related to Nvidia-smi functionality in a Windows Docker container and ensure smooth operation of GPU-related tasks.
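As a starting point for the "Check Docker Version" tip, the sketch below reads the Docker server version so it can be compared against whatever version your GPU setup requires. It assumes the "docker" CLI is on PATH; the function names are illustrative and not part of any Docker tooling.

```python
import re
import subprocess


def parse_docker_version(text):
    """Extract (major, minor, patch) from a version string; None if absent."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        return None
    return tuple(int(part) for part in match.groups())


def docker_server_version():
    """Ask the Docker daemon for its version; None if Docker is unavailable."""
    try:
        result = subprocess.run(
            ["docker", "version", "--format", "{{.Server.Version}}"],
            capture_output=True,
            text=True,
            timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        # The docker CLI is not installed, not on PATH, or did not answer.
        return None
    if result.returncode != 0:
        # The CLI exists but could not reach the daemon.
        return None
    return parse_docker_version(result.stdout)
```

Calling "docker_server_version()" returns a tuple that compares naturally with a minimum version of your choosing, or None when Docker cannot be queried, which is itself a useful diagnostic.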

Checking System Requirements for NVIDIA-SMI

In order to ensure proper functionality of the NVIDIA System Management Interface (NVIDIA-SMI), it is essential to meet specific system requirements. By checking and verifying these requirements, you can troubleshoot any potential issues related to the NVIDIA-SMI tool.

A crucial requirement for NVIDIA-SMI is a compatible operating system. NVIDIA-SMI is designed to work efficiently with various operating systems, including popular options such as Windows, Linux, and Unix. Therefore, it is important to confirm that your system is running a supported operating system to guarantee smooth operation of NVIDIA-SMI.

An adequate hardware setup is also vital to ensure the proper functioning of NVIDIA-SMI. Make sure your system includes a compatible NVIDIA GPU that meets the minimum requirements specified by NVIDIA. Additionally, check if the GPU drivers are up to date, as outdated drivers may cause issues with NVIDIA-SMI. Ensure that the GPU is properly installed and functioning correctly within your system.

| System Requirements | Description |
|---|---|
| Operating System | Compatible with Windows, Linux, and Unix |
| NVIDIA GPU | Compatible GPU meeting NVIDIA's minimum requirements |
| GPU Drivers | Up-to-date drivers for the installed GPU |

Additionally, check that your system meets any specific software requirements for NVIDIA-SMI. These may include dependencies or libraries required by NVIDIA-SMI to function correctly. Ensure that these software components are installed and properly configured in your system.

By carefully reviewing and confirming that your system meets the necessary requirements for NVIDIA-SMI, you can ensure a seamless experience with the tool and minimize any potential troubleshooting efforts.
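A quick way to gather the basics from the requirements table is a small script that records the operating system and whether the relevant tools can be found. This is a minimal sketch using only the Python standard library; the function names and report keys are made up for illustration, and a tool missing from PATH does not by itself prove that the driver is absent.

```python
import platform
import shutil


def collect_prerequisites(which=shutil.which, system=platform.system):
    """Return a small report on the OS and on tools found on PATH."""
    return {
        "operating_system": system(),
        "nvidia_smi_path": which("nvidia-smi"),
        "docker_path": which("docker"),
    }


def missing_tools(report):
    """List the tools from the report that were not found on PATH."""
    checks = (("nvidia-smi", "nvidia_smi_path"), ("docker", "docker_path"))
    return [name for name, key in checks if report.get(key) is None]
```

Running "missing_tools(collect_prerequisites())" on the host gives a short list of what to install or add to PATH before looking at the container itself. The "which" and "system" parameters exist only so the functions can be exercised without real hardware.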

Verifying NVIDIA Driver Installation

In this section, we will explore the necessary steps to confirm the successful installation of the NVIDIA driver on your system, ensuring that it is properly configured and ready for use.

There are several ways to verify the installation of the NVIDIA driver. One of the most straightforward is to check the driver version in Device Manager. Open Device Manager and expand the Display adapters category. Look for an entry for your NVIDIA GPU, open its properties, and note the driver version shown on the Driver tab. An entry with a driver version confirms that the NVIDIA driver is installed and detected by the system.

Another way to verify the NVIDIA driver installation is through the NVIDIA Control Panel. Access the control panel by right-clicking on an empty area of your desktop and selecting "NVIDIA Control Panel" from the context menu. Once the control panel opens, navigate to the system information section, where you can find details about the installed NVIDIA driver, including the version number.

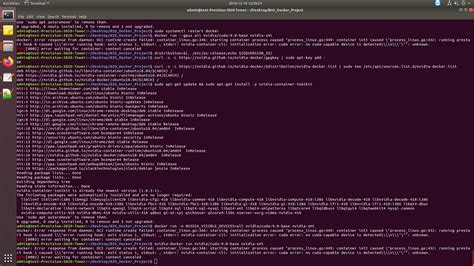

Additionally, you can use the command line interface to check the NVIDIA driver version. Open the command prompt and execute the command "nvidia-smi" to obtain information about the installed NVIDIA driver, including the version. If the command returns the expected output without any errors, it confirms the successful installation of the NVIDIA driver.

These methods let you verify the installation status of the NVIDIA driver on your system. Verifying the driver installation is an essential step when troubleshooting the NVIDIA-SMI tool not functioning correctly in a Windows Docker container.
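The command-line check can also be scripted. The sketch below uses the "--query-gpu" and "--format" options of nvidia-smi to request only the driver version and GPU name, then parses the CSV output. It assumes nvidia-smi is on PATH; the helper names are illustrative.

```python
import subprocess

# Ask nvidia-smi for just the driver version and GPU name, one CSV line per GPU.
QUERY = ["nvidia-smi", "--query-gpu=driver_version,name", "--format=csv,noheader"]


def parse_query_output(text):
    """Turn 'version, name' lines into a list of (driver_version, gpu_name)."""
    rows = []
    for line in text.splitlines():
        if not line.strip():
            continue
        version, _, name = line.partition(",")
        rows.append((version.strip(), name.strip()))
    return rows


def query_driver():
    """Run nvidia-smi; return parsed rows, or None if it is missing or fails."""
    try:
        result = subprocess.run(QUERY, capture_output=True, text=True, timeout=30)
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None
    return parse_query_output(result.stdout)
```

A return value of None from "query_driver()" means the tool could not be run at all, while an empty list means it ran but reported no GPUs. Those two cases usually point to different problems.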

Troubleshooting Docker Configuration for NVIDIA-SMI

In this section, we will explore potential solutions for resolving configuration issues when working with NVIDIA-SMI in a Windows Docker container. We will address the aspects of the Docker setup that may affect the functionality of NVIDIA-SMI.

1. Verifying Docker Compatibility:

Before delving further into troubleshooting, it is essential to ensure that the Docker setup is compatible with the requirements of NVIDIA-SMI. Confirm that the Docker version and configuration align with the specifications recommended for seamless interaction with NVIDIA-SMI in a Windows environment.

2. Checking GPU driver installation:

One potential roadblock to NVIDIA-SMI functionality within a Docker container is an improperly installed or outdated GPU driver. Because the container depends on the GPU driver installed on the host machine, start by confirming that the host driver is correctly set up and that its version is compatible with the Docker GPU support you are using.

3. Verifying NVIDIA Docker runtime:

Another vital aspect to consider is the presence and proper configuration of the NVIDIA Docker runtime within the Docker setup. Verify that the NVIDIA Docker runtime is correctly installed and functioning optimally, as it acts as a bridge between the Docker container and the NVIDIA GPU, enabling proper utilization of NVIDIA-SMI.

4. Validating Docker image configuration:

Ensure that the Docker image used within the container is appropriately configured to utilize the GPU resources. Validate that the relevant modifications are made to the Docker image and its associated configurations, including the appropriate labeling and permissions required to access the NVIDIA GPU hardware.

5. Checking Docker environment variables:

Verify the Docker environment variables related to NVIDIA-SMI and the GPU setup to ensure they are correctly set. These variables play a crucial role in configuring the interaction between the Docker container and NVIDIA-SMI, providing necessary information and permissions for seamless functioning.

6. Troubleshooting network connectivity:

Network connectivity rarely affects NVIDIA-SMI itself, because the tool talks to the local GPU driver rather than to a remote service. Firewall settings or proxy configurations can, however, interfere with related steps such as pulling a GPU-enabled Docker image or downloading drivers, so rule out network-related problems if those steps fail.

7. Updating Docker and NVIDIA-SMI:

Lastly, ensure that both Docker and the NVIDIA driver are updated to their latest versions. NVIDIA-SMI is distributed with the driver, so updating the driver also updates the tool. Updates often include bug fixes, compatibility enhancements, and performance improvements that can directly affect how NVIDIA-SMI behaves within the Docker container.

By thoroughly investigating and addressing these various aspects of Docker configuration, you can troubleshoot the issues related to NVIDIA-SMI and ensure optimal functionality within a Windows Docker container.
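For the environment-variable step, the following sketch inspects two variables that the NVIDIA Container Toolkit reads, NVIDIA_VISIBLE_DEVICES and NVIDIA_DRIVER_CAPABILITIES. It assumes your setup follows those toolkit conventions; the function name and warning texts are illustrative, and a warning is only a hint, since GPU access can also be requested in other ways (for example with the "--gpus" flag).

```python
import os


def check_gpu_environment(env=None):
    """Return human-readable warnings about GPU-related variables."""
    env = os.environ if env is None else env
    warnings = []

    # Empty, "void", or "none" all mean that no GPU is selected.
    devices = env.get("NVIDIA_VISIBLE_DEVICES", "")
    if devices in ("", "void", "none"):
        warnings.append("NVIDIA_VISIBLE_DEVICES does not select any GPU")

    # nvidia-smi needs the "utility" capability (or "all") when the
    # variable is set explicitly; an unset variable is left alone here.
    capabilities = env.get("NVIDIA_DRIVER_CAPABILITIES", "")
    enabled = {item.strip() for item in capabilities.split(",") if item.strip()}
    if capabilities and not ({"utility", "all"} & enabled):
        warnings.append("NVIDIA_DRIVER_CAPABILITIES lacks 'utility'")

    return warnings
```

Run inside the container, "check_gpu_environment()" reads the real environment. Passing a dictionary instead makes it easy to try out different configurations before changing an image or a run command.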

Resolving Common Issues with Nvidia-smi in Windows Docker Container

This section addresses commonly encountered problems when using Nvidia-smi in a Windows Docker container and offers solutions and troubleshooting steps for each.

Issue 1: Nvidia-smi not providing accurate information

Potential cause: In some instances, Nvidia-smi may fail to display accurate data related to GPU usage, memory, or power usage.

Resolution: To resolve this issue, users can try updating their Nvidia GPU drivers, ensuring compatibility with the Docker container.

Issue 2: Limited GPU access for Docker containers

Potential cause: Users may experience limited access to GPU resources within Docker containers, hindering the execution of GPU-dependent processes.

Resolution: This issue can be resolved by properly configuring the Docker container runtime settings and ensuring that GPU resources are properly allocated and accessible to the container.

Issue 3: Nvidia-smi command not recognized

Potential cause: When running the Nvidia-smi command in a Windows Docker container, it may result in a "command not recognized" error.

Resolution: To address this issue, users can install the necessary Nvidia GPU drivers and CUDA libraries within the Docker container, allowing the container to recognize the Nvidia-smi command.

Issue 4: Multiple GPUs not detected by Nvidia-smi

Potential cause: Nvidia-smi may only recognize a single GPU in Windows Docker containers, even when multiple GPUs are available.

Resolution: To overcome this issue, users can check and ensure that proper drivers and configurations are in place to enable recognition of multiple GPUs by Nvidia-smi within the Docker container environment.
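To check the multiple-GPU case concretely, one option is to compare the GPU list reported on the host with the list reported inside the container. The sketch below parses the output of "nvidia-smi -L", which prints one line per GPU. The function name is illustrative and the sample text in the usage note is made up.

```python
import re

# Matches lines such as: GPU 0: <name> (UUID: <uuid>)
GPU_LINE = re.compile(r"^GPU (\d+): (.+?) \(UUID: (.+)\)$")


def parse_gpu_list(text):
    """Parse `nvidia-smi -L` style output into (index, name, uuid) tuples."""
    gpus = []
    for line in text.splitlines():
        match = GPU_LINE.match(line.strip())
        if match:
            gpus.append((int(match.group(1)), match.group(2), match.group(3)))
    return gpus
```

For example, feeding it two lines of the form "GPU 0: Example GPU (UUID: GPU-aaaa)" and "GPU 1: Example GPU (UUID: GPU-bbbb)" yields two tuples. If the host reports more entries than the container, the container was probably started with access to only a subset of the devices.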

FAQ

Why is Nvidia-smi not working in my Windows Docker container?

Nvidia-smi may not be working in your Windows Docker container due to several possible reasons. The most common reasons include incorrect driver installation, incompatible versions of Docker and NVIDIA driver, or missing CUDA toolkit.

How can I check if the Nvidia driver is installed correctly in my Windows Docker container?

To check if the Nvidia driver is installed correctly in your Windows Docker container, run the command "nvidia-smi" in a command prompt within the container. If it shows the GPU information and other details, the driver is installed correctly. Otherwise, there might be an issue with the driver installation.

What can I do if Nvidia-smi is not detecting my GPU in the Windows Docker container?

If Nvidia-smi is not detecting your GPU in the Windows Docker container, you can try several troubleshooting steps. First, ensure that the correct driver version is installed and compatible with the CUDA toolkit version. You can also try updating Docker to the latest version, as well as ensuring that the GPU is properly connected and recognized by the host machine.

What should I do if Nvidia-smi is throwing an error "NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver"?

If Nvidia-smi is throwing this error in the Windows Docker container, it indicates a communication problem between Nvidia-smi and the NVIDIA driver. To resolve this, you can try restarting the Docker service, checking for any conflicting processes that may be using the GPU, or reinstalling the NVIDIA driver.
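When collecting error output from several machines or containers, it can help to sort messages into rough categories before deciding what to try. This is a minimal sketch based on the error texts quoted in this article and on the usual shell wording for a missing command; the category names and the hint table are illustrative.

```python
# Suggested next steps per category, taken from the advice in this article.
HINTS = {
    "driver-communication": "Restart Docker, check for processes holding the GPU, or reinstall the driver.",
    "command-missing": "Check that nvidia-smi is available on PATH in this environment.",
    "unknown": "Read the full message and search the Docker or NVIDIA forums.",
}


def diagnose(output):
    """Map nvidia-smi or shell error text to a rough category."""
    text = output.lower()
    if "couldn't communicate with the nvidia driver" in text:
        return "driver-communication"
    if "not recognized" in text or "not found" in text:
        return "command-missing"
    return "unknown"
```

Looking up "HINTS[diagnose(message)]" then gives a first suggestion for each captured message. The matching is deliberately simple, so treat the result as a starting point rather than a diagnosis.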

Is it possible to use Nvidia-smi in a Windows Docker container without the GPU driver installed on the host machine?

No, it is not possible to use Nvidia-smi in a Windows Docker container without the GPU driver installed on the host machine. The GPU driver on the host machine is required for Nvidia-smi to communicate with the GPU and provide information. Make sure the correct driver is installed and compatible with your CUDA toolkit version.

Why is Nvidia-smi not working in my Windows Docker container?

If Nvidia-smi is not working in your Windows Docker container, there could be several reasons for this. First, make sure that you have the correct version and drivers installed in the container. Also, check if the Nvidia GPU is properly detected by the Docker runtime. Additionally, check if the necessary environment variables (such as CUDA_PATH) are set correctly. Finally, ensure that you have the appropriate permissions to access the GPU within the container.

How can I check if my Nvidia GPU is properly detected by the Docker runtime?

You can check if your Nvidia GPU is properly detected by the Docker runtime by running the command "nvidia-smi" within the container. If the command fails to execute or shows an error, it indicates that the GPU is not properly detected. You can also use the "docker run" command with the "--gpus all" flag to explicitly enable GPU access within the container.
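The check described above can be wrapped in a short script. The sketch below builds a "docker run" command that starts a throwaway container with the "--gpus all" flag and runs "nvidia-smi" in it. The image name is a placeholder you must supply, since this article does not name a specific image, and the function names are illustrative.

```python
import subprocess


def build_gpu_test_command(image, gpus="all"):
    """Build a `docker run` argument list that runs nvidia-smi once."""
    # --rm removes the container afterwards; --gpus requests GPU access.
    return ["docker", "run", "--rm", "--gpus", gpus, image, "nvidia-smi"]


def run_gpu_test(image):
    """Run the test container; return (succeeded, combined output text)."""
    try:
        result = subprocess.run(
            build_gpu_test_command(image),
            capture_output=True,
            text=True,
            timeout=300,
        )
    except (OSError, subprocess.TimeoutExpired) as error:
        return False, str(error)
    return result.returncode == 0, result.stdout + result.stderr
```

Calling "run_gpu_test" with the name of a GPU-enabled image returns whether nvidia-smi ran successfully, along with its output, which is the text to include when asking for help.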