Containers have changed how applications are packaged and delivered, and Kubernetes is a widely used way to orchestrate them. Docker Desktop for Windows ships with a built-in Kubernetes cluster, so you can run both on a single Windows machine.

This guide is for developers, architects, and IT professionals who want to work with Kubernetes locally on Windows. It starts with getting the local cluster running, then covers cluster management, Kubernetes objects, networking and storage, monitoring and troubleshooting, and best practices.

Getting Started with Running Containerized Applications on Your Local Machine

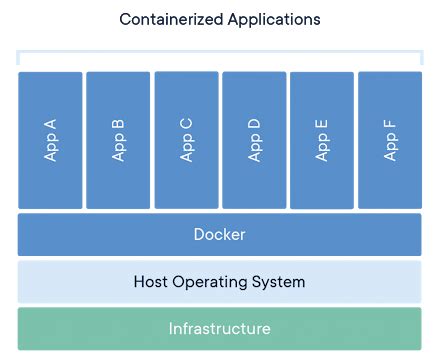

Running containerized applications on your own computer is a convenient way to develop and test them before deploying them elsewhere. This section walks through setting up Kubernetes on a Windows machine with Docker Desktop. Docker Desktop bundles a Kubernetes server and the kubectl client, so once it is installed you only need to enable Kubernetes in its settings; no separate Kubernetes installation is required.
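As a rough illustration, the Python sketch below checks that kubectl is talking to the local cluster. It assumes kubectl is on your PATH and that the kubeconfig context is named `docker-desktop`, which is the name Docker Desktop registers by default; the function names are hypothetical helpers, not part of any tool.

```python
import shutil
import subprocess

# Context name Docker Desktop registers by default (assumed unchanged here).
DOCKER_DESKTOP_CONTEXT = "docker-desktop"


def verification_commands(context=DOCKER_DESKTOP_CONTEXT):
    """Return the kubectl invocations used to verify the local cluster."""
    return [
        ["kubectl", "config", "use-context", context],
        ["kubectl", "cluster-info"],
        ["kubectl", "get", "nodes"],
    ]


def verify_local_cluster(context=DOCKER_DESKTOP_CONTEXT):
    """Run each check in order; return True only if all of them succeed."""
    if shutil.which("kubectl") is None:
        print("kubectl not found on PATH; is Docker Desktop installed?")
        return False
    for command in verification_commands(context):
        result = subprocess.run(command, capture_output=True, text=True)
        print("$", " ".join(command))
        print(result.stdout or result.stderr)
        if result.returncode != 0:
            return False
    return True
```

Calling `verify_local_cluster()` from a script or a Python prompt runs the three commands in turn and stops at the first failure, which usually means Kubernetes has not been enabled yet or is still starting.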

Creating and Managing Clusters

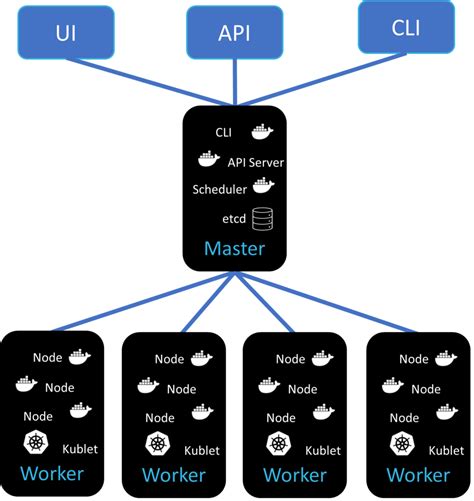

Deploying and managing applications in a distributed environment depends on reliable, scalable infrastructure. This section covers creating and managing clusters, which are the foundation for running containerized applications.

Cluster Creation

- Understanding the importance of cluster architecture

- Exploring different cluster creation methods

- Setting up a cluster using container orchestration tools

- Configuring cluster settings and parameters

Cluster Management

- Monitoring cluster health and performance

- Scaling clusters based on application requirements

- Managing cluster resources efficiently

- Implementing backup and disaster recovery strategies

Cluster Troubleshooting

- Identifying common cluster issues

- Troubleshooting cluster connectivity problems

- Debugging application failures within a cluster

- Utilizing monitoring and logging tools for cluster analysis

With these skills you can deploy and maintain robust containerized environments. A well-designed, well-managed cluster helps provide high availability, efficient resource allocation, and room to scale your applications.
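A basic cluster health check is to confirm that every node reports a Ready condition. The sketch below parses data in the shape returned by `kubectl get nodes -o json`; the sample document is trimmed and hand-written for illustration, and `ready_nodes` is a hypothetical helper name.

```python
import json


def ready_nodes(node_list):
    """Map each node name to True when its Ready condition has status "True"."""
    readiness = {}
    for node in node_list.get("items", []):
        name = node["metadata"]["name"]
        conditions = node.get("status", {}).get("conditions", [])
        readiness[name] = any(
            c.get("type") == "Ready" and c.get("status") == "True"
            for c in conditions
        )
    return readiness


# Trimmed, hand-written sample in the shape of `kubectl get nodes -o json`.
SAMPLE = json.loads("""
{
  "items": [
    {"metadata": {"name": "docker-desktop"},
     "status": {"conditions": [
        {"type": "MemoryPressure", "status": "False"},
        {"type": "Ready", "status": "True"}]}}
  ]
}
""")

print(ready_nodes(SAMPLE))
```

On a real cluster you could feed the function the parsed output of `kubectl get nodes -o json` instead of the sample.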

Exploring Kubernetes Object Management

This section looks at the objects you work with in a Kubernetes cluster. Understanding the main object types and what each one does is the basis for managing containerized applications efficiently.

Diving into Kubernetes Object Types:

- Kubernetes objects are persistent records that describe the desired state of your application. Each object type captures a specific part of the configuration, and the types reference one another, usually through labels and selectors.

- Pods are the smallest deployable units in Kubernetes. A Pod wraps one or more containers that share a network namespace and storage volumes, and it is the unit that gets scheduled and scaled.

- Services give a set of Pods a stable virtual IP address and DNS name. Because Pod IP addresses change as Pods are replaced, a Service hides those changes, load-balances across the matching Pods, and can expose the application outside the cluster.

- Deployments and ReplicaSets manage the lifecycle of Pods declaratively: you state how many replicas you want and Kubernetes works to keep that many running. Deployments add rolling updates and rollbacks on top of ReplicaSets. ReplicationControllers are the older predecessor of ReplicaSets and are rarely needed in new manifests.

- ConfigMaps and Secrets hold configuration and sensitive data separately from container images. Both can be injected into running Pods as environment variables or as files mounted from a volume.

- StatefulSets manage stateful applications. They give each Pod a stable network identity and its own persistent storage that survives rescheduling.
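To see how several of these object types fit together, here is a minimal sketch that builds a ConfigMap, a Deployment, and a Service as Python dictionaries and prints them as JSON, which kubectl accepts alongside YAML. The application name `web`, the `nginx` image tag, and the `GREETING` setting are placeholders chosen for illustration.

```python
import json

APP_LABELS = {"app": "web"}  # hypothetical label that ties the objects together


def config_map(name, data):
    """Build a ConfigMap holding plain key-value configuration."""
    return {"apiVersion": "v1", "kind": "ConfigMap",
            "metadata": {"name": name}, "data": data}


def deployment(name, image, replicas, config_name):
    """Build an apps/v1 Deployment that keeps `replicas` Pods of `image` running."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": APP_LABELS},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the Pod template below.
            "selector": {"matchLabels": APP_LABELS},
            "template": {
                "metadata": {"labels": APP_LABELS},
                "spec": {"containers": [{
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": 80}],
                    # Inject every ConfigMap key as an environment variable.
                    "envFrom": [{"configMapRef": {"name": config_name}}],
                }]},
            },
        },
    }


def service(name):
    """Build a ClusterIP Service that routes to Pods carrying APP_LABELS."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": "ClusterIP", "selector": APP_LABELS,
                 "ports": [{"port": 80, "targetPort": 80}]},
    }


bundle = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        config_map("web-config", {"GREETING": "hello"}),
        deployment("web", "nginx:1.25", 2, "web-config"),
        service("web"),
    ],
}

print(json.dumps(bundle, indent=2))
```

If the script is saved as, say, `manifests.py`, its output can be piped into `kubectl apply -f -` to create all three objects on the local cluster. Most projects write manifests directly in YAML; generating them from code is used here only to keep the example self-contained.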

Manipulating Kubernetes Objects:

- Kubernetes objects can be created, updated, and deleted imperatively (for example with kubectl create or kubectl delete) or declaratively (with kubectl apply against manifest files). Imperative commands are quick for experiments; declarative manifests can be version-controlled and reviewed. Choose whichever suits your workflow and requirements.

- Labels and annotations attach key-value metadata to objects. Labels are used for selection: you can filter and query objects by label to simplify management. Annotations hold non-identifying metadata for tools and people.

- kubectl commands and YAML manifests are the main ways to interact with objects. It pays to learn the structure of a manifest (apiVersion, kind, metadata, spec) and the common kubectl verbs such as get, describe, apply, and delete.

- Helm packages applications as charts, which are reusable, parameterized templates that render to Kubernetes manifests. Charts help when you need to define, package, and deploy complex applications repeatably.
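Label-based filtering is easiest to understand with a small model of it. The sketch below is a simplified, equality-only imitation of what a query like `kubectl get pods -l app=web,tier=frontend` does; real selectors also support `==`, `!=`, and set-based operators, which this toy version does not handle. The Pod names and labels are made up.

```python
def parse_selector(selector):
    """Parse "k1=v1,k2=v2" into a dict. Only single-"=" equality terms are handled."""
    terms = {}
    for term in selector.split(","):
        term = term.strip()
        if not term:
            continue  # an empty selector places no constraints
        key, _, value = term.partition("=")
        terms[key.strip()] = value.strip()
    return terms


def matches(labels, selector):
    """True when every term of the selector is present in `labels`."""
    wanted = parse_selector(selector)
    return all(labels.get(key) == value for key, value in wanted.items())


def select(objects, selector):
    """Return the names of the objects whose labels satisfy the selector."""
    return [
        obj["metadata"]["name"]
        for obj in objects
        if matches(obj["metadata"].get("labels", {}), selector)
    ]


# Hypothetical Pods, reduced to the metadata that matters for selection.
PODS = [
    {"metadata": {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}}},
    {"metadata": {"name": "web-2", "labels": {"app": "web", "tier": "frontend"}}},
    {"metadata": {"name": "db-1", "labels": {"app": "db", "tier": "backend"}}},
    {"metadata": {"name": "job-1"}},
]

print(select(PODS, "app=web,tier=frontend"))
```

The same matching rule is what connects a Service or a Deployment to its Pods, which is why consistent labels matter.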

Once you are comfortable managing these objects, orchestrating and scaling containerized applications becomes much more predictable. Understanding how the objects depend on one another is key to good performance and efficiency.

Exploring Networking and Data Management in Kubernetes Clusters

This section covers networking and data management in a Kubernetes environment: how traffic flows between components, and which mechanisms and tools are available for handling storage.

Networking: Communication is vital in any distributed system, and Kubernetes is no exception. In the Kubernetes networking model every Pod gets its own IP address, and Pods can reach one another across nodes without network address translation. The model is implemented by network plugins that follow the Container Network Interface (CNI) specification; many of them build overlay networks, and some can enforce network policies for security.

Data Storage and Management: Kubernetes provides storage abstractions for persisting data in the cluster. A persistent volume (PV) represents a piece of storage, and a persistent volume claim (PVC) is a request for storage that Kubernetes binds to a matching PV. Volumes can be backed by local storage, network-attached storage (NAS), or cloud-based storage services.
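The relationship between a claim and the Pod that uses it can be sketched as two manifests, built here as Python dictionaries. The names, the `1Gi` size, the image, and the mount path are illustrative assumptions; the claim omits a storage class so that the cluster's default class, if one is configured, is used.

```python
import json


def persistent_volume_claim(name, size):
    """Build a PVC that requests `size` of storage, mountable read-write by one node."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }


def pod_using_claim(pod_name, image, claim_name, mount_path):
    """Build a Pod that mounts the claimed storage at `mount_path`."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [{
                "name": pod_name,
                "image": image,
                # The mount refers to the volume by its name within this Pod.
                "volumeMounts": [{"name": "data", "mountPath": mount_path}],
            }],
            "volumes": [{
                "name": "data",
                # The volume refers to the claim, not to a specific PV.
                "persistentVolumeClaim": {"claimName": claim_name},
            }],
        },
    }


claim = persistent_volume_claim("app-data", "1Gi")
pod = pod_using_claim("app", "nginx:1.25", "app-data", "/var/lib/app")

print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [claim, pod]}, indent=2))
```

The Pod never names a persistent volume directly. It names the claim, and Kubernetes binds the claim to a suitable volume, which keeps the Pod definition portable across clusters with different storage backends.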

Stateful vs. Stateless Applications: Understanding the distinction between stateful and stateless applications is crucial when it comes to data management in Kubernetes. We discuss the challenges and considerations involved in deploying and managing stateful applications, which require persistent storage and data management strategies to ensure data integrity and availability.

Data Persistence and Replication: Replication is essential for fault tolerance and high availability in Kubernetes clusters. ReplicaSets keep a specified number of identical Pods running, and StatefulSets give each replica a stable identity and its own persistent volume. Neither one copies data between replicas: replicating the data itself is the job of the application (for example, a database's own replication) or of the storage backend.

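Data Security: Data security is a paramount concern in any system. Kubernetes provides role-based access control (RBAC) to restrict who can read objects such as Secrets, and it supports encrypting Secret data at rest. Secret values are only base64-encoded by default, so these controls must be configured deliberately to protect the confidentiality and integrity of data in the cluster.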
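The difference between encoding and encryption is worth seeing concretely. The following sketch builds a Secret manifest the way the API expects it, with values base64-encoded under `data`, and then shows that anyone who can read the object can decode it again. The Secret name and password are made-up examples.

```python
import base64


def secret(name, values):
    """Build an Opaque Secret; each value is base64-encoded as the API requires."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": encoded,
    }


demo = secret("db-credentials", {"password": "s3cr3t"})

# base64 is reversible without any key: this is encoding, not encryption.
decoded = base64.b64decode(demo["data"]["password"]).decode("utf-8")
print(demo["data"]["password"], "->", decoded)
```

This is why access to Secrets should be limited through RBAC, and why encryption at rest is worth considering for clusters that hold real credentials.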

Together, these networking and storage mechanisms are what allow the components of a distributed application to communicate reliably and to keep their data when Pods are restarted or rescheduled.

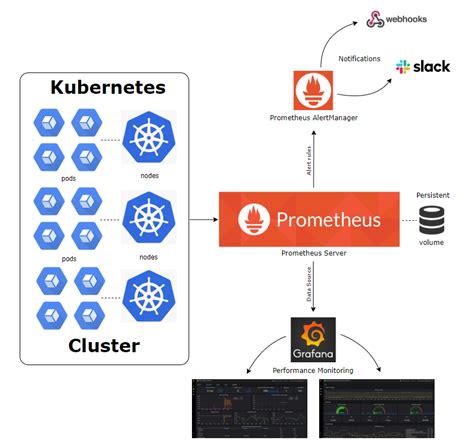

Monitoring and Troubleshooting for Optimal Kubernetes Performance

This section covers strategies for monitoring and troubleshooting a Kubernetes environment. Knowing the health and status of your cluster is crucial for keeping applications stable and reliable, and identifying and resolving issues quickly minimizes downtime and wasted resources.

Monitoring:

Effective monitoring of your Kubernetes cluster involves gathering and analyzing various metrics and logs to gain insights into its overall health and performance. By closely monitoring resource utilization, service availability, error rates, and other relevant metrics, you can proactively identify bottlenecks, optimize resource allocation, and detect any anomalies or potential issues before they impact your applications.

Popular monitoring tools for Kubernetes include Prometheus for collecting metrics and alerting, Grafana for dashboards, and hosted services such as Datadog. With these tools you can build customized monitoring dashboards, define alerting rules, and track the performance of your cluster over time.

Troubleshooting:

Troubleshooting Kubernetes efficiently requires a systematic approach. Using the Kubernetes command-line tool, kubectl, and other diagnostic tools, you can gather relevant information about your cluster, such as pod logs, events, and resource utilization data.

When troubleshooting, it's important to analyze the logs and events to identify any error messages, warning signs, or performance bottlenecks. Additionally, understanding the lifecycles and dependencies of your Kubernetes resources, such as pods, services, and deployments, can greatly aid in troubleshooting and resolving any issues.

In complex scenarios, it may be necessary to perform advanced troubleshooting techniques, such as debugging individual pods, examining network connectivity, or analyzing application-specific metrics. By utilizing these techniques and leveraging the wealth of resources available in the Kubernetes community, you can effectively diagnose and resolve issues, ensuring the optimal performance and stability of your applications.
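One way to make this systematic is to start from the Pod's reported status and let it point you to the next command. The sketch below scans data in the shape of `kubectl get pod <name> -o json` for containers stuck in a waiting state and suggests follow-up kubectl commands. The sample Pod is hand-written, and the helper names are hypothetical.

```python
def unhealthy_containers(pod):
    """Return (container, reason, restarts) for each container in a waiting state."""
    findings = []
    for status in pod.get("status", {}).get("containerStatuses", []):
        waiting = status.get("state", {}).get("waiting")
        if waiting:
            findings.append((
                status["name"],
                waiting.get("reason", "Unknown"),
                status.get("restartCount", 0),
            ))
    return findings


def follow_up_commands(pod_name, container):
    """Suggest kubectl commands for digging further into a failing container."""
    return [
        ["kubectl", "describe", "pod", pod_name],
        # --previous shows logs from the last terminated run of the container.
        ["kubectl", "logs", pod_name, "-c", container, "--previous"],
        ["kubectl", "get", "events", "--sort-by=.lastTimestamp"],
    ]


# Hand-written sample in the shape of `kubectl get pod <name> -o json`.
POD = {
    "metadata": {"name": "web-7d4b9"},
    "status": {"containerStatuses": [
        {"name": "web", "restartCount": 5,
         "state": {"waiting": {"reason": "CrashLoopBackOff"}}},
        {"name": "sidecar", "restartCount": 0,
         "state": {"running": {"startedAt": "2024-01-01T00:00:00Z"}}},
    ]},
}

for name, reason, restarts in unhealthy_containers(POD):
    print(f"{name}: {reason} after {restarts} restarts")
    for command in follow_up_commands(POD["metadata"]["name"], name):
        print("  try:", " ".join(command))
```

A reason such as CrashLoopBackOff means the container keeps exiting shortly after it starts, so the logs from the previous run are usually the most informative place to look.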

Remember, monitoring and troubleshooting are ongoing processes that require continuous attention and adaptation. Regularly reviewing and analyzing the collected metrics and logs, as well as staying updated with the latest best practices and tools, will help you maintain a robust and resilient Kubernetes environment.

Best Practices for Optimizing Kubernetes in Docker on Windows

When working with Kubernetes in Docker on the Windows platform, there are certain best practices that can help ensure efficient and smooth operations. By following these guidelines, you can enhance the performance and reliability of your containerized applications, and make the most out of the powerful capabilities provided by Kubernetes.

- Optimize resource allocation: Efficiently allocate resources such as CPU, memory, and storage to your containers. Analyze resource usage patterns and adjust resource allocations accordingly to ensure optimal performance.

- Use containers for specific tasks: Design your containers to perform specific tasks, rather than including multiple functionalities within a single container. This allows for better isolation, scalability, and maintainability of your applications.

- Implement container security measures: Ensure that your containerized applications are protected from potential security threats. Use secure images, regularly update software packages, and implement appropriate access controls to safeguard your environments.

- Automate container operations: Utilize automation tools and frameworks to streamline container operations, such as deployment, scaling, and monitoring. This helps reduce manual interventions, improve efficiency, and ensure consistent deployment practices.

- Implement container networking: Set up effective networking configurations for your containers to enable seamless communication between different components of your application stack. Utilize container networking plugins or overlays to simplify network management and enhance application performance.

- Regularly monitor and analyze container performance: Monitor resource usage, application logs, and container metrics to identify potential bottlenecks or performance issues. Use monitoring tools to gain insights into your containers' behavior, and proactively address any performance degradation.

- Plan for backup and disaster recovery: Implement backup and disaster recovery strategies for your containers and their associated data. Regularly back up critical data and have plans in place to restore containers in the event of failures or disasters.
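For the resource allocation practice in particular, Kubernetes expresses CPU and memory as quantity strings such as 250m (a quarter of a CPU core) or 128Mi. The sketch below parses a small subset of those formats and checks that a container's requests do not exceed its limits. The values are illustrative, and the parser deliberately ignores the less common suffixes that Kubernetes also accepts.

```python
def cpu_millicores(quantity):
    """Convert a CPU quantity such as "250m" or "0.5" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(round(float(quantity) * 1000))


# Binary suffixes only; a simplification of the full quantity syntax.
_MEMORY_UNITS = {"Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3}


def memory_bytes(quantity):
    """Convert a memory quantity such as "128Mi" to bytes."""
    for suffix, factor in _MEMORY_UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[:-len(suffix)]) * factor
    return int(quantity)  # a bare number is already in bytes


def requests_within_limits(resources):
    """True when both CPU and memory requests are at or below their limits."""
    requests, limits = resources["requests"], resources["limits"]
    return (
        cpu_millicores(requests["cpu"]) <= cpu_millicores(limits["cpu"])
        and memory_bytes(requests["memory"]) <= memory_bytes(limits["memory"])
    )


# The `resources` section of a container spec, with illustrative values.
RESOURCES = {
    "requests": {"cpu": "250m", "memory": "128Mi"},
    "limits": {"cpu": "500m", "memory": "256Mi"},
}

print(requests_within_limits(RESOURCES))
```

Requests tell the scheduler how much capacity to reserve for a container, while limits cap what it may consume. On a single development machine, modest requests leave room for the rest of your workloads.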

By following these best practices, you can optimize the use of Kubernetes in Docker on the Windows platform, ensuring efficient, secure, and high-performing containerized applications.

FAQ

Is Kubernetes in Docker for Windows suitable for small-scale deployments?

Yes, for local development and testing of small workloads. Kubernetes in Docker for Windows provides a lightweight, easy-to-use way to run containerized applications on a Windows machine, which makes it well suited to trying out manifests before deploying them to a larger cluster.

What are the prerequisites for installing Kubernetes in Docker for Windows?

You need Docker Desktop for Windows installed on a 64-bit edition of Windows 10 or Windows 11, with either the WSL 2 backend or Hyper-V enabled, at least 4GB of RAM, and administrative privileges for the installation. Docker Desktop is not supported on Windows Server editions. Once Docker Desktop is running, Kubernetes is enabled from its settings.

Can Kubernetes in Docker for Windows be used in a production environment?

It is not intended for production. The Kubernetes cluster bundled with Docker Desktop runs on a single machine and is designed for local development and testing. For production workloads, use a multi-node cluster, such as a managed Kubernetes service or a self-managed cluster, and consult the official Kubernetes documentation for production best practices.