As technology evolves and businesses seek to optimize their operations, the demand for efficient and scalable infrastructure solutions continues to grow. One such solution is Kubernetes, a powerful container orchestration platform that allows for seamless management and deployment of applications across a distributed system.

However, before diving into the world of Kubernetes, it is crucial to understand the foundation on which it relies: the Linux kernel. The Linux kernel, known for its stability and flexibility, forms the backbone of many modern operating systems.

In order to ensure a smooth and reliable Kubernetes deployment, specific prerequisites must be met at the kernel level. Fine-tuning a Linux distribution to meet these requirements is essential to enable the seamless coordination and management of containers within a cluster.

By optimizing kernel configurations and carefully selecting compatible components, administrators can create a robust environment that not only meets the demands of running Kubernetes, but also enhances the overall performance and security of the system. In this article, we will delve into the intricacies of the Linux kernel prerequisites for running Kubernetes, exploring the key considerations and best practices that system administrators should be aware of.

Understanding the Core of the Linux Operating System

The core of the Linux operating system forms the foundational layer upon which various technologies, such as Kubernetes, are built. By understanding the core principles and components of the Linux kernel, one can gain insights into how it operates and how it interacts with other software and hardware components.

At its essence, the Linux kernel serves as the bridge between software applications and the underlying hardware. It provides the necessary mechanisms for managing system resources, including memory, input/output devices, and process scheduling. This allows applications to run efficiently and smoothly on a Linux-based system.

To delve deeper into the functionality of the Linux kernel, it is crucial to explore key concepts such as process management, memory management, file systems, and device drivers. These components work in harmony to facilitate the execution of processes, allocation of memory, storage of data, and interaction with peripheral devices.

- Process Management: The Linux kernel uses the scheduler and a per-process descriptor (the process control block, called `task_struct` in Linux) to manage and prioritize the execution of many processes concurrently.

- Memory Management: Memory allocation and management are crucial aspects of the Linux kernel. It ensures efficient utilization of physical and virtual memory, enabling seamless execution of programs.

- File Systems: The Linux kernel supports a wide range of file systems, including ext4, XFS, and Btrfs. These file systems provide the structure and organization needed for storing and retrieving data reliably.

- Device Drivers: Device drivers are essential for enabling communication between the kernel and hardware devices. The Linux kernel incorporates numerous drivers to support a variety of devices, from network interfaces to storage controllers.

By grasping the intricacies of the Linux kernel, one can better comprehend the requirements and dependencies it imposes on running technologies like Kubernetes. This understanding becomes invaluable when configuring and optimizing a Linux-based system to ensure optimal performance and stability.

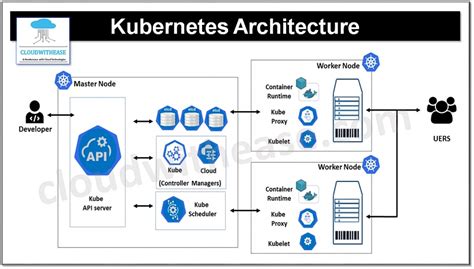

Key Components of Kubernetes

In this section, we will explore the fundamental elements that make up the Kubernetes platform. Understanding these key components is crucial for anyone looking to harness the power of Kubernetes for container orchestration.

Cluster: At the heart of Kubernetes is the cluster, which consists of a set of nodes that work together to run containerized applications. These nodes can be physical or virtual machines, and they communicate with each other to distribute workloads and manage resources efficiently.

Pods: Pods are the smallest deployable units in a Kubernetes cluster. A pod encapsulates one or more containers that share a network namespace and, optionally, storage volumes. Pods are the unit of deployment and scaling in Kubernetes: to scale an application, Kubernetes runs more pod replicas rather than enlarging a single container.

Services: Services enable communication between different parts of an application running in a Kubernetes cluster. They provide a stable networking interface and load balancing capabilities, allowing pods to easily discover and connect to each other.

ReplicaSets: ReplicaSets ensure that a specified number of pod replicas are running at all times. They provide fault tolerance by creating or deleting pods until the actual count matches the desired count, for example replacing a pod that has crashed or was evicted. Changing the desired count itself is done by the user, a Deployment, or an autoscaler.

Deployments: Deployments provide a way to manage the deployment and updates of applications in a Kubernetes cluster. They allow for declarative configuration and easy rollback, making it simple to manage complex application deployments with minimal downtime.

ConfigMaps: ConfigMaps store key-value pairs of configuration data that can be used by pods and containers in a Kubernetes cluster. They allow for easy separation of configuration from application code, making it more flexible to manage and update configurations without rebuilding the application.

Secrets: Secrets are used to store sensitive information, such as passwords or API keys, that pods and containers need to access. They provide a way to distribute these values to the appropriate containers without embedding them in application code or images. By default Secret values are only base64-encoded, so encryption at rest and access control should be configured separately.

Namespaces: Namespaces provide a way to logically divide and isolate resources within a Kubernetes cluster. They allow for better organization and management of resources, ensuring that applications and teams can operate independently without interfering with each other.

These key components form the foundation of Kubernetes, enabling the efficient orchestration and management of containerized applications. Understanding how these components work together is essential for harnessing the full potential of Kubernetes in your infrastructure.
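To make the relationships between these components concrete, here is a minimal sketch that builds a Deployment and a Service manifest as plain Python dictionaries and checks that the Service selector matches the pod labels. The names `web` and `nginx:1.27` and the helper functions are hypothetical placeholders chosen for illustration; the field layout follows the `apps/v1` Deployment and `v1` Service objects.

```python
import json


def deployment(name: str, image: str, replicas: int, labels: dict) -> dict:
    """Build an apps/v1 Deployment; it manages a ReplicaSet, which manages the pods."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,                   # desired pod count
            "selector": {"matchLabels": labels},    # which pods belong to it
            "template": {                           # pod template
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


def service(name: str, labels: dict, port: int) -> dict:
    """Build a v1 Service that routes traffic to pods carrying the given labels."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"selector": labels, "ports": [{"port": port, "targetPort": port}]},
    }


def selects(selector: dict, pod_labels: dict) -> bool:
    """True when every selector key/value pair is present in the pod labels."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    labels = {"app": "web"}  # hypothetical label
    manifests = [deployment("web", "nginx:1.27", 3, labels), service("web", labels, 80)]
    print(json.dumps(manifests, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output could be saved to a file and applied to a test cluster. The point of the sketch is the label matching: Services and ReplicaSets find their pods through labels, not through names.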

Prerequisites for Operating Kubernetes

To effectively operate Kubernetes, certain foundational requirements must be met to ensure a smooth and efficient system. This section will outline the essential components and settings necessary for successfully running Kubernetes.

- Operating System: The chosen operating system should provide the necessary stability, compatibility, and performance for hosting Kubernetes and its associated workloads.

- Kernel Version: The kernel version should be carefully selected to support the features Kubernetes needs. It is crucial to ensure that the kernel includes the necessary drivers and modules for the hardware devices and networking capabilities in use.

- Resource Allocation: Sufficient system resources, such as CPU, memory, and storage, should be allocated to Kubernetes to handle the desired workloads without resource contention or performance degradation.

- Networking Configuration: Proper networking configuration is essential for communication between Kubernetes cluster nodes, as well as with external resources and services. Settings such as IP addressing, routing, and network policies should align with Kubernetes networking requirements.

- Container Runtime: A compatible container runtime, such as containerd or CRI-O, should be installed and configured on each node of the Kubernetes cluster. Docker Engine can still be used, but since Kubernetes 1.24 it requires the separate cri-dockerd adapter.

- Cluster Infrastructure: The underlying infrastructure where Kubernetes is deployed should meet the hardware and software requirements needed for the desired scale, resilience, and performance of the cluster. This includes considerations for storage, network availability, and fault tolerance.

By carefully addressing these prerequisites, administrators can establish a robust foundation for running Kubernetes and effectively manage containerized applications at scale.

Compatibility of Linux Kernel Versions

When deploying Kubernetes on a Linux system, one crucial aspect to consider is which Linux kernel versions are compatible with the Kubernetes release in use. Getting this right is essential for the smooth operation and good performance of containerized applications.

Linux Kernel Version Compatibility:

It is vital to know which kernel versions are recommended or required for the Kubernetes release you plan to run. The Kubernetes documentation and your distribution's release notes describe the kernel features, bug fixes, and security patches that specific Kubernetes features depend on. Matching the Linux kernel version to the Kubernetes release helps avoid compatibility issues and keeps the environment stable.

The Linux kernel, being the core component of the operating system, must be compatible and provide the necessary functionality for the Kubernetes platform to operate optimally. Therefore, understanding the compatibility requirements for the Linux kernel versions when running Kubernetes is crucial to achieve a successful deployment and efficient management of containerized workloads.
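A version check like the one described above can be sketched in a few lines. This is a minimal example, not an official validator: the function names are made up for illustration, and the `MINIMUM_KERNEL` value simply reuses the 3.10 figure quoted in this article's FAQ, so adjust it to whatever your Kubernetes release actually documents.

```python
import platform
import re

# Assumption: floor taken from this article's FAQ, not from an official matrix.
MINIMUM_KERNEL = (3, 10, 0)


def parse_kernel_release(release: str) -> tuple:
    """Extract (major, minor, patch) from a string such as '5.15.0-91-generic'."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch or 0)


def meets_minimum(release: str, minimum: tuple = MINIMUM_KERNEL) -> bool:
    """Return True when the release is at least the given minimum version."""
    return parse_kernel_release(release) >= minimum


if __name__ == "__main__":
    running = platform.release()  # on Linux this is the output of `uname -r`
    try:
        verdict = "ok" if meets_minimum(running) else "too old"
    except ValueError:
        verdict = "could not be parsed"
    print(f"kernel {running}: {verdict}")
```

Comparing tuples of integers avoids the classic mistake of comparing version strings lexically, where "3.9" would sort after "3.10".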

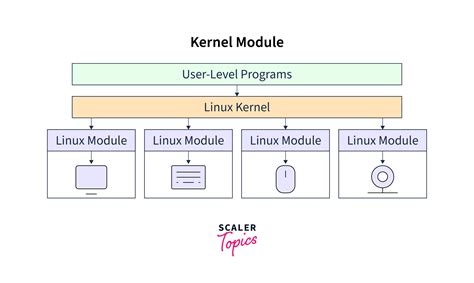

Kernel Modules and Kernel Features

The kernel is the core of the operating system: it executes essential tasks and manages the system's resources. Kubernetes orchestrates containerized applications at scale, but it depends on the kernel of each node to actually run them. To ensure that Kubernetes integrates cleanly with the underlying operating system, the kernel must provide the modules and features that Kubernetes and the container runtime expect.

Kernel modules serve as dynamically loaded components that can be added or removed from the running kernel. These modules offer the ability to extend the functionality of the kernel by providing support for specific hardware devices, file system types, network protocols, and more. In the context of running Kubernetes, an understanding of the required kernel modules is essential to ensure optimal performance and compatibility. By supporting the necessary kernel modules, the operating system can seamlessly interact with the Kubernetes platform and enable the execution of containerized applications.

In addition to kernel modules, kernel features also play a significant role in determining the compatibility of an operating system with Kubernetes. Kernel features encompass a broad range of functionalities implemented within the kernel, such as namespaces, cgroups, and containerization features. These features contribute to the isolation, control, and resource management capabilities necessary for orchestrating containerized applications effectively. Therefore, ensuring the presence and proper configuration of essential kernel features is crucial to enabling a robust Kubernetes environment.

| Kernel Modules | Kernel Features |
|---|---|
| Device Drivers | Namespaces |
| File System Support | Cgroups |
| Network Protocols | Containerization |

In conclusion, the compatibility between the operating system and Kubernetes heavily relies on the presence and correct configuration of kernel modules and kernel features. By ensuring the necessary modules are supported and the essential features are enabled, organizations can optimize their Kubernetes deployments and harness the full potential of containerized applications.
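One way to verify that the necessary modules are present is to compare `/proc/modules` against a list of expected names. The sketch below assumes the modules `overlay` and `br_netfilter`, which common kubeadm setup guides load before installing a container runtime; they are not named in this article, so treat the list as an example to replace with your own.

```python
from pathlib import Path

# Assumption: example module names from common kubeadm setup guides.
EXPECTED_MODULES = ("overlay", "br_netfilter")


def loaded_modules(proc_modules_text: str) -> set:
    """Return module names from /proc/modules-style text (name is the first field)."""
    return {line.split()[0] for line in proc_modules_text.splitlines() if line.strip()}


def missing_modules(proc_modules_text: str, expected=EXPECTED_MODULES) -> list:
    """List expected modules that do not appear among the loaded ones."""
    loaded = loaded_modules(proc_modules_text)
    return [name for name in expected if name not in loaded]


if __name__ == "__main__":
    path = Path("/proc/modules")
    if path.exists():
        print("not loaded as modules:", missing_modules(path.read_text()))
    else:
        print("/proc/modules is not available on this system")
```

Note that a driver compiled directly into the kernel does not appear in `/proc/modules`, so a name reported as missing here is a prompt to investigate, not proof that the feature is absent.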

Minimum Hardware Specifications

In order to efficiently utilize the capabilities of the Linux operating system and effectively run Kubernetes, it is essential to have adequate hardware specifications. These specifications determine the minimum requirements for the system to operate smoothly and ensure optimal performance.

Processor: The central processing unit (CPU) is the brain of the computer, responsible for executing instructions and performing calculations. A powerful CPU with multiple cores and high clock speed is recommended to handle the demands of running Kubernetes efficiently.

Memory: Random-access memory (RAM) plays a crucial role in providing temporary storage for data and instructions that the CPU can access quickly. Sufficient RAM is necessary to accommodate the operating system, Kubernetes, and any running applications or containers. The larger the RAM capacity, the better the system can handle resource-intensive tasks.

Storage: Storage refers to the devices used to store data and applications. Kubernetes requires a certain amount of disk space to install and run its components. It is essential to have enough free disk space to prevent performance issues and ensure smooth operation. Consider using solid-state drives (SSDs) for faster access and better I/O performance.

Network: A stable and reliable network connection is crucial for communication between Kubernetes nodes, as well as connections to external services. The network interface, whether wired or wireless, should support high-speed data transfer and low latency to prevent bottlenecks and ensure optimal performance.

Graphics: Kubernetes itself has no graphics requirement. Cluster nodes typically run headless and are managed remotely through command-line tools or a web dashboard, so a graphics card matters only on the administrator's workstation or when the workloads themselves need GPU acceleration.

Note: These hardware specifications serve as a guideline for running Kubernetes effectively. Depending on the specific use case and workload, additional resources may be required to meet performance requirements.
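A quick sanity check of CPU count and memory can be scripted as shown below. The thresholds of 2 CPUs and 2 GiB are assumptions for illustration (they mirror figures commonly quoted for small kubeadm nodes, not anything stated in this article), and the function names are hypothetical.

```python
import os
from pathlib import Path

# Assumed thresholds for illustration only; tune them to your workload.
MIN_CPUS = 2
MIN_MEM_KIB = 2 * 1024 * 1024  # 2 GiB expressed in KiB


def mem_total_kib(meminfo_text: str) -> int:
    """Return the MemTotal value (in KiB) from /proc/meminfo-style text."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            return int(line.split()[1])
    raise ValueError("MemTotal line not found")


def check_node(cpus: int, mem_kib: int,
               min_cpus: int = MIN_CPUS, min_mem_kib: int = MIN_MEM_KIB) -> list:
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    if cpus < min_cpus:
        problems.append(f"{cpus} CPU(s) found, at least {min_cpus} wanted")
    if mem_kib < min_mem_kib:
        problems.append(f"{mem_kib} KiB RAM found, at least {min_mem_kib} KiB wanted")
    return problems


if __name__ == "__main__":
    meminfo = Path("/proc/meminfo")
    if meminfo.exists():
        found = check_node(os.cpu_count() or 0, mem_total_kib(meminfo.read_text()))
        print(found or "node meets the assumed minimums")
    else:
        print("/proc/meminfo is not available on this system")
```

`MemTotal` is usually a little lower than the installed RAM because the kernel reserves some memory at boot, so a machine sold as "2 GB" may fail a strict 2 GiB check; leave some slack in the threshold if that matters.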

Optimizing the Linux Core for Orchestrating Containerized Applications

In this section, we will explore methods for fine-tuning the foundational element of running and managing containerized workloads: the Linux core. By implementing key optimizations, we can enhance the overall performance and stability of Kubernetes, enabling efficient orchestration of applications and resources.

Maximizing Resource Utilization

One crucial aspect of optimizing the Linux kernel for Kubernetes is ensuring efficient utilization of system resources. Through careful configuration, administrators can strike a balance between resource allocation and performance, leading to improved workload management and scalability. By fine-tuning parameters such as CPU and memory allocation, we can optimize the Linux core to meet the demands of dynamically orchestrated containerized applications.
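CPU and memory limits for containers are enforced by the kernel's control groups, so a useful first step is to see which cgroup version and controllers a node offers. The sketch below relies on the fact that a cgroup v2 (unified) hierarchy exposes a `cgroup.controllers` file at its root; the list of needed controllers is an assumption for illustration.

```python
from pathlib import Path
from typing import Optional

# Assumption: controllers a container runtime would typically want enabled.
NEEDED_CONTROLLERS = ("cpu", "memory", "pids")


def parse_controllers(text: str) -> set:
    """Split the space-separated contents of cgroup.controllers into a set."""
    return set(text.split())


def missing_controllers(text: str, needed=NEEDED_CONTROLLERS) -> list:
    """List needed controllers that the hierarchy does not offer."""
    available = parse_controllers(text)
    return [name for name in needed if name not in available]


def cgroup_version(root: Path = Path("/sys/fs/cgroup")) -> Optional[int]:
    """Return 2 for a unified hierarchy, 1 for a legacy or hybrid layout, None if absent."""
    if (root / "cgroup.controllers").is_file():
        return 2
    if root.is_dir() and any(root.iterdir()):
        return 1
    return None


if __name__ == "__main__":
    version = cgroup_version()
    print("cgroup version:", version)
    if version == 2:
        text = Path("/sys/fs/cgroup/cgroup.controllers").read_text()
        print("missing controllers:", missing_controllers(text))
```

On a cgroup v1 node the controllers are mounted as separate directories instead, so the controller check is only run for version 2 here.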

Improving Network Latency and Throughput

An important consideration in optimizing the Linux kernel for Kubernetes is minimizing network latency and improving overall throughput. By optimizing kernel parameters related to network handling, such as enhancing network stack performance and fine-tuning congestion control algorithms, we can ensure smooth and efficient communication between containers and improve overall network performance within a Kubernetes cluster.
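Kernel network parameters are exposed as files under `/proc/sys`, which makes it straightforward to audit them before changing anything. The following sketch compares current values against a desired set. The parameters and values in `DESIRED` are illustrative assumptions, not tuning recommendations from this article; measure before and after any change.

```python
from pathlib import Path
from typing import Optional

# Illustrative values only (assumptions); choose your own after benchmarking.
DESIRED = {
    "net.ipv4.ip_forward": "1",
    "net.core.somaxconn": "4096",
}


def sysctl_path(name: str, root: str = "/proc/sys") -> Path:
    """Map a dotted sysctl name to its file, e.g. net.ipv4.ip_forward."""
    return Path(root, *name.split("."))


def read_sysctl(name: str, root: str = "/proc/sys") -> Optional[str]:
    """Return the current value as a string, or None if it cannot be read."""
    try:
        return sysctl_path(name, root).read_text().strip()
    except OSError:
        return None


def diff_sysctls(desired: dict, root: str = "/proc/sys") -> dict:
    """Return {name: (wanted, actual)} for every setting that differs."""
    drift = {}
    for name, wanted in desired.items():
        actual = read_sysctl(name, root)
        if actual != wanted:
            drift[name] = (wanted, actual)
    return drift


if __name__ == "__main__":
    for name, (wanted, actual) in diff_sysctls(DESIRED).items():
        print(f"{name}: wanted {wanted}, found {actual}")
```

The script only reads values. Applying them is normally done with `sysctl -w` or a file under `/etc/sysctl.d/`, which keeps the change persistent across reboots. The simple dot-to-slash mapping does not handle names whose components themselves contain dots, such as some VLAN interface names.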

Enhancing Security and Isolation

Secure isolation of containers is a fundamental requirement in any Kubernetes deployment. The kernel supplies the building blocks: namespaces separate what each container can see, while Linux Security Modules such as SELinux and AppArmor, together with seccomp system-call filtering, restrict what it can do. Keeping these features enabled and correctly configured lets administrators enforce strict access control policies and mitigate potential security risks.
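Whether a node offers these isolation features can be inspected from user space. The sketch below lists the namespace types visible under `/proc/self/ns` and parses the active Linux Security Modules from `/sys/kernel/security/lsm`. The `REQUIRED_NAMESPACES` set is an assumption about what a container runtime typically needs, and the helper names are hypothetical.

```python
from pathlib import Path

# Assumption: namespace types a container runtime typically relies on.
REQUIRED_NAMESPACES = ("ipc", "mnt", "net", "pid", "uts")


def available_namespaces(ns_dir: Path = Path("/proc/self/ns")) -> set:
    """Return the namespace types the kernel exposes for the current process."""
    try:
        return {entry.name for entry in ns_dir.iterdir()}
    except OSError:
        return set()


def missing_namespaces(available: set, required=REQUIRED_NAMESPACES) -> list:
    """Return required namespace types that are not available, sorted by name."""
    return sorted(name for name in required if name not in available)


def parse_lsm_list(text: str) -> list:
    """Split the comma-separated contents of /sys/kernel/security/lsm."""
    return [name for name in text.strip().split(",") if name]


if __name__ == "__main__":
    print("missing namespaces:", missing_namespaces(available_namespaces()))
    lsm_file = Path("/sys/kernel/security/lsm")
    try:
        print("active LSMs:", parse_lsm_list(lsm_file.read_text()))
    except OSError:
        print("LSM list not readable (securityfs may not be mounted)")
```

This reports only what the kernel makes available. Whether a given pod actually runs under an AppArmor or seccomp profile is decided by the pod's security settings and the container runtime.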

Tuning File System Performance

The performance of file systems can significantly impact the overall efficiency of containerized workloads. Choosing a file system and I/O scheduler suited to the storage device, and tuning mount options accordingly, can improve I/O performance and reduce latency for applications running on Kubernetes.
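Before changing a disk scheduler it helps to know which one each device currently uses. The kernel reports this in `/sys/block/<device>/queue/scheduler`, with the active scheduler shown in square brackets. The sketch below parses that format; the function names are hypothetical and nothing is modified.

```python
from pathlib import Path
from typing import Optional


def parse_scheduler(text: str) -> tuple:
    """Return (active, available) from text such as '[mq-deadline] kyber bfq none'."""
    active: Optional[str] = None
    available = []
    for token in text.split():
        if token.startswith("[") and token.endswith("]"):
            token = token[1:-1]  # strip the brackets marking the active scheduler
            active = token
        available.append(token)
    return active, available


def block_device_schedulers(sys_block: Path = Path("/sys/block")) -> dict:
    """Map each block device name to its active scheduler (None if unmarked)."""
    result = {}
    try:
        devices = sorted(sys_block.iterdir())
    except OSError:
        return result
    for device in devices:
        try:
            text = (device / "queue" / "scheduler").read_text()
        except OSError:
            continue  # device without a scheduler file
        result[device.name] = parse_scheduler(text)[0]
    return result


if __name__ == "__main__":
    for name, scheduler in block_device_schedulers().items():
        print(f"{name}: {scheduler}")
```

Which scheduler is best depends on the device and workload, so the output is a starting point for measurement, not a verdict.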

By implementing these optimizations, we can harness the full potential of the Linux core, enabling Kubernetes to seamlessly orchestrate containerized applications and maximize resource utilization, network performance, security, and file system efficiency.

FAQ

What are the Linux kernel requirements for running Kubernetes?

The Linux kernel requirements for running Kubernetes depend on the specific version of Kubernetes you are using and on the features you enable. Generally, Kubernetes runs on any Linux kernel released in the last few years. Kernel 3.10 is best treated as a historical floor: individual features need newer kernels, for example cgroup v2 support, for which the Kubernetes documentation calls for kernel 5.8 or later.

Can I run Kubernetes on an older Linux kernel?

While it may be possible to run Kubernetes on older Linux kernel versions, kernel 3.10 or later is recommended for performance and compatibility reasons. Running Kubernetes on older kernels may result in limited functionality and potential issues. In practice, the safest choice is the newest kernel that your distribution supports and maintains with security updates.

Is it necessary to upgrade the Linux kernel for running Kubernetes?

It is not always necessary to upgrade the Linux kernel for running Kubernetes. If your kernel already provides the features required by your Kubernetes release and the components you use, you can keep it. However, if you are running a kernel older than 3.10, upgrade to at least that version, and preferably to a current kernel supported by your distribution, because newer Kubernetes features depend on newer kernel functionality.