Keeping up with the ever-changing demands of modern cloud computing can be a daunting task. As technology continues to evolve, so do the requirements for hosting applications and managing resources efficiently. One notable area where these changes are happening is in the realm of containerization. The practice of deploying applications within isolated environments has exploded in popularity in recent years, with Docker emerging as a leading solution for container management.

However, even a capable container platform runs into size limits. Docker containers offer portability, isolation, and easy scaling, but they are constrained by the CPU, memory, and disk capacity of the underlying host. This is where increasing the resources available to containers on Amazon Linux 2 becomes useful.

By increasing the resources allocated to Docker containers, you can run larger workloads and more demanding processes. Many of the limits applied to a container can be raised later, provided the host has capacity to spare.

This article looks at techniques for expanding the capabilities of Docker containers on Amazon Linux 2: allocating resources sensibly, making better use of storage, and monitoring the result.

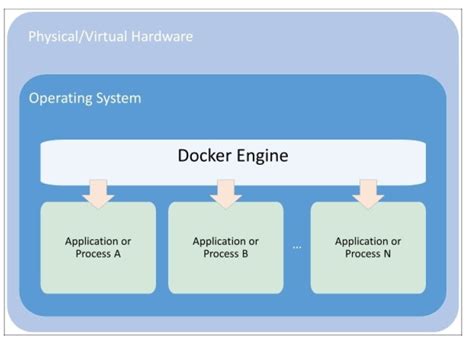

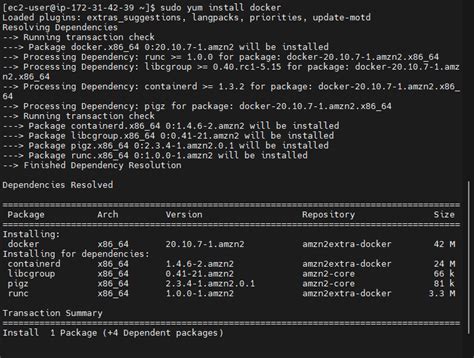

Understanding Docker Containers in Amazon Linux 2

This section explains how Docker containers work within the Amazon Linux 2 operating system environment. With a clearer picture of how containers operate in this context, you can use and manage containerized applications more effectively.

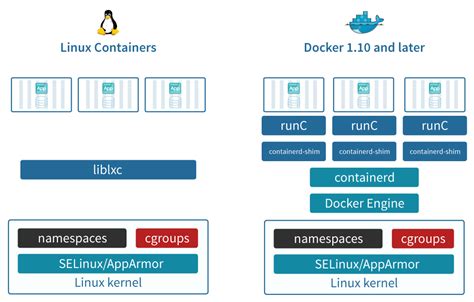

To begin, it is essential to grasp the fundamental concept of containerization, which involves encapsulating applications and their dependencies, allowing them to run consistently in different computing environments. Docker, as a widely-used containerization platform, offers a streamlined approach to package applications, along with their required libraries and dependencies, into self-contained units known as containers.

By isolating applications and their dependencies from the underlying host system, Docker containers provide consistency and portability across computing environments, making applications easier to deploy and scale. On Amazon Linux 2, as on other Linux hosts, containers share the host operating system kernel while remaining isolated from one another, which keeps them lightweight.

- Efficient Utilization of Resources: Docker containers in Amazon Linux 2 optimize the utilization of system resources by efficiently sharing the kernel with the host operating system. This enables containers to operate with minimal overhead and provides flexibility in managing workloads.

- Enhanced Security: By isolating applications and their dependencies from the host system, Docker containers in Amazon Linux 2 offer enhanced security measures. The isolation ensures that any potential vulnerabilities or issues within a container are contained, preventing them from affecting the host system or other containers.

- Easy Scalability: Docker containers in Amazon Linux 2 enable seamless scalability of applications. Users can easily replicate and deploy containers across multiple hosts, allowing for efficient distribution of workloads and improved performance.

- Improved Development and Deployment Workflow: Docker containers facilitate a streamlined development and deployment workflow. Developers can build and test applications within containers, ensuring consistent and reproducible environments. Once built, these containers can be transported and deployed in any compatible computing environment with ease.

By gaining a comprehensive understanding of how Docker containers function within the operating system of Amazon Linux 2, users will be equipped with the knowledge and insights to effectively leverage the benefits of containerization for their applications.

Benefits of Using Docker Containers in Amazon Linux 2

In this section, we will explore the advantages of utilizing Docker containers in the context of Amazon Linux 2, showcasing the various benefits brought forth by this innovative technology.

- Enhanced Portability: Docker containers in Amazon Linux 2 offer remarkable portability, allowing applications to be effortlessly moved across different environments and platforms. This flexibility simplifies the deployment process and enables seamless transitions between development, testing, and production environments.

- Improved Resource Utilization: Docker containers make effective use of CPU, memory, and storage. Because containers share the host kernel rather than each running a full operating system, more of the capacity of an Amazon Linux 2 host goes to the applications themselves, which supports efficient scaling.

- Isolated Environments: Docker containers provide a high level of isolation, enabling multiple applications or services to run independently without interfering with each other. This isolation ensures that any changes or issues in one container do not impact the functionality or stability of other containers, establishing a robust and secure environment.

- Rapid Deployment and Rollback: Docker containers facilitate quick and seamless application deployments. With the ability to package all dependencies and configurations within a container, developers can easily distribute and deploy applications consistently across various environments. Additionally, Docker containers support easy rollback options, allowing for quick restoration to a previous version in case of any issues.

- Efficient Collaboration: Docker containers in Amazon Linux 2 streamline collaboration between developers and operations teams. By creating a standardized environment, containers enable developers to build, test, and share applications without worrying about differences in underlying system configurations. This fosters collaboration, accelerates development cycles, and improves overall productivity.

These are just a few of the many benefits that Docker containers bring to the Amazon Linux 2 ecosystem. By leveraging containerization technology, developers and organizations can enjoy enhanced portability, resource utilization, isolation, deployment speed, and collaboration, ultimately leading to improved efficiency and scalability in their applications.

Enhancing Storage Capacity for Containers on Amazon Linux 2

This section explores strategies for increasing the amount of storage available to containers running on the Amazon Linux 2 operating system.

- Understanding the Importance of Expanding Container Storage

- Choosing the Right Approach

- Utilizing Efficient Storage Management Techniques

Having ample storage capacity is crucial for the smooth operation of containers on Amazon Linux 2. In this section, we will delve into different methods to enhance the storage capabilities of your containers without compromising performance or stability.

- Understanding the Importance of Expanding Container Storage: Gain insights into why expanding container storage is necessary for accommodating growing data and application needs, as well as for optimizing performance and resource utilization.

- Choosing the Right Approach: Explore various strategies to increase container storage capacity, such as utilizing external storage systems, adjusting disk space allocation, or implementing distributed file systems.

- Utilizing Efficient Storage Management Techniques: Learn best practices for optimizing storage management, including efficient data deduplication, compression techniques, and leveraging available storage optimization tools and services.
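As a rough illustration of the "Choosing the Right Approach" item, the sketch below assembles a `docker run` command that keeps application data on a named volume and, optionally, requests a larger root filesystem. The helper name `build_run_command`, the image, the container name, and the volume name are all hypothetical. The `--storage-opt size=` option is only honoured by some storage drivers; with `overlay2` it requires an xfs backing filesystem mounted with the `pquota` option, so treat that part as an assumption about your host.

```python
from typing import List, Optional


def build_run_command(
    image: str,
    name: str,
    volume: Optional[str] = None,
    mount_path: str = "/data",
    rootfs_size: Optional[str] = None,
) -> List[str]:
    """Return a `docker run` argument list with optional storage settings."""
    cmd = ["docker", "run", "--detach", "--name", name]
    if volume:
        # A named volume keeps data outside the container's writable layer.
        cmd += ["--mount", f"type=volume,source={volume},target={mount_path}"]
    if rootfs_size:
        # Assumption: the host's storage driver supports a per-container size.
        cmd += ["--storage-opt", f"size={rootfs_size}"]
    cmd.append(image)
    return cmd


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    print(" ".join(build_run_command("nginx:latest", "web",
                                     volume="web-data", rootfs_size="20G")))
```

The function only builds the argument list; passing it to `subprocess.run` would execute it on a host where Docker is installed.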

Why you might need to expand the capacity of your Docker container

Understanding the reasons behind the need to increase the capability of your Docker environment is crucial for optimizing your application's performance and scalability. The ability to adjust the size and capacity of your Docker container is often essential for accommodating changes in your application's demands and requirements.

- Meeting increased resource demands: As your application grows, it might require more system resources, such as CPU, memory, or storage space. Expanding the capacity of your Docker container allows you to allocate additional resources and prevent performance bottlenecks.

- Enhancing application performance: Increasing the size of your Docker container can result in improved performance by providing more room for your application to operate efficiently. This is particularly important when your application performs resource-intensive tasks or handles a large amount of data.

- Facilitating future scalability: By increasing the capacity of your Docker container in advance, you ensure that your application can handle future growth smoothly. This proactive approach eliminates the risk of unexpected capacity limitations down the line and minimizes the disruption to your application's operation.

- Enabling efficient deployment of new features: Expanding the size of your Docker container provides the necessary space for deploying new features and functionalities without compromising the performance of the existing application. This flexibility allows for seamless updates and enhancements without disrupting the user experience.

- Improving fault tolerance and high availability: Capacity can also be increased by running additional container instances across multiple nodes rather than enlarging a single container. This redundancy helps your application remain resilient and available in the face of failures or spikes in demand.

Considering these factors, expanding the capacity of your Docker container becomes imperative for maintaining a reliable and scalable application environment. By understanding the underlying reasons for doing so, you can effectively plan for future growth and optimize performance based on your application's unique requirements.

Optimizing the Capacity of Your Container: A Step-by-Step Approach

In this section, we will delve into the process of improving the efficiency and maximizing the available resources within your container. By employing a systematic and thoughtful approach, you can enhance the capacity of your container without compromising its performance or stability.

- Performing a Comprehensive Analysis of Resource Utilization

- Implementing Intelligent Resource Allocation Strategies

- Optimizing Application and Workload Performance

- Balancing Workload Distribution

- Monitoring and Fine-Tuning

Before embarking on the journey to expand your container's capacity, it is crucial to conduct a thorough assessment of resource utilization. By understanding how your container currently utilizes CPU, memory, and storage, you can identify potential areas for improvement and make informed decisions about capacity enhancement.
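One way to start such an assessment is to capture the output of `docker stats --no-stream --format "{{json .}}"`, which prints one JSON object per container, and flag containers that are close to their memory limit. The sketch below parses that kind of output. The sample data is made up for illustration, and the 80% threshold is an arbitrary assumption.

```python
import json
from typing import Dict, List

# Hypothetical sample in the shape of `docker stats` JSON output.
SAMPLE = """\
{"Name":"web","CPUPerc":"12.50%","MemPerc":"91.20%","MemUsage":"467MiB / 512MiB"}
{"Name":"worker","CPUPerc":"3.10%","MemPerc":"22.00%","MemUsage":"225MiB / 1GiB"}
"""


def parse_stats(lines: str) -> List[Dict[str, object]]:
    """Convert JSON-lines stats output into name/cpu/mem records."""
    rows = []
    for line in lines.splitlines():
        if not line.strip():
            continue
        raw = json.loads(line)
        rows.append({
            "name": raw["Name"],
            "cpu": float(raw["CPUPerc"].rstrip("%")),
            "mem": float(raw["MemPerc"].rstrip("%")),
        })
    return rows


def near_limit(rows: List[Dict[str, object]], mem_threshold: float = 80.0) -> List[str]:
    """Return the names of containers at or above the memory threshold."""
    return [r["name"] for r in rows if r["mem"] >= mem_threshold]


if __name__ == "__main__":
    print(near_limit(parse_stats(SAMPLE)))  # ['web']
```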

One of the key steps in maximizing your container's potential is choosing a resource allocation strategy. Vertical scaling raises the CPU or memory limits of an existing container, horizontal scaling runs additional copies of it, and dynamic resource allocation adjusts either of these in response to measured demand. Matching the strategy to the specific needs of your container helps you use resources fully without wasting them.
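For vertical scaling of a single container, the usual tool is `docker update`. The following sketch builds such a command; the helper name `build_update_command` and the container name are hypothetical. It sets `--memory-swap` to the same value as `--memory`, which is one possible choice (it leaves the container no swap) and avoids Docker rejecting a memory limit that exceeds the existing memory-plus-swap limit.

```python
from typing import List, Optional


def build_update_command(
    container: str,
    memory: Optional[str] = None,
    cpus: Optional[float] = None,
) -> List[str]:
    """Return a `docker update` argument list for new memory/CPU limits."""
    if memory is None and cpus is None:
        raise ValueError("specify at least one of memory or cpus")
    cmd = ["docker", "update"]
    if memory is not None:
        # Raise the memory+swap ceiling together with the memory limit.
        cmd += ["--memory", memory, "--memory-swap", memory]
    if cpus is not None:
        cmd += ["--cpus", str(cpus)]
    cmd.append(container)
    return cmd


if __name__ == "__main__":
    # Hypothetical container name "web".
    print(" ".join(build_update_command("web", memory="2g", cpus=1.5)))
    # docker update --memory 2g --memory-swap 2g --cpus 1.5 web
```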

In order to effectively increase the capacity of your container, it is essential to optimize the performance of your applications and workloads. This involves identifying and eliminating any bottlenecks or inefficiencies within your containerized environment. By fine-tuning your configurations, optimizing code, and implementing caching mechanisms, you can significantly enhance the overall performance and efficiency of your container.

Achieving optimal resource utilization also entails balancing workload distribution across your containerized environment. By evenly distributing the workload among multiple containers, you can prevent resource overload on a single instance and ensure that all containers operate at their full potential. Implementing load balancing mechanisms and leveraging container orchestration tools can greatly assist in achieving this balance.
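To make the idea of even distribution concrete, here is a minimal sketch of a greedy "least-loaded first" placement. It is not how any particular load balancer or orchestrator works; the workload names, costs, and container names are invented for the example.

```python
import heapq
from typing import Dict, List, Tuple


def distribute(workloads: Dict[str, float], containers: List[str]) -> Dict[str, List[str]]:
    """Assign each workload to the currently least-loaded container."""
    heap: List[Tuple[float, str]] = [(0.0, c) for c in containers]
    heapq.heapify(heap)
    placement: Dict[str, List[str]] = {c: [] for c in containers}
    # Placing the heaviest workloads first tends to give a more even result.
    for name, cost in sorted(workloads.items(), key=lambda kv: kv[1], reverse=True):
        load, target = heapq.heappop(heap)
        placement[target].append(name)
        heapq.heappush(heap, (load + cost, target))
    return placement


if __name__ == "__main__":
    jobs = {"a": 4, "b": 3, "c": 2, "d": 1}  # hypothetical relative costs
    print(distribute(jobs, ["c1", "c2"]))
    # {'c1': ['a', 'd'], 'c2': ['b', 'c']}
```

In this example both containers end up with a total cost of 5.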

Once you have implemented the necessary optimizations, it is crucial to continually monitor and fine-tune your container's performance. By utilizing monitoring tools and employing proactive alerting mechanisms, you can address any performance degradation or resource spikes promptly. Regularly revisiting and tuning your configurations and resource allocation strategies will help maintain an efficient and resilient container environment in the long run.

By following these step-by-step guidelines, you can successfully enhance the capacity of your container, making the most of the available resources and improving overall performance. With a mindful approach to resource utilization and continuous optimization, you can ensure that your container environment meets the demands of your applications and workloads effectively.

Considerations for resizing the capacity of your Docker environment

In the process of scaling your Docker environment to meet the growing demands of your applications, it is essential to consider various factors that might impact the effective resizing of your container capacity. By understanding these considerations, you can ensure a seamless expansion of your Docker ecosystem without compromising its stability and performance.

| Consideration | Explanation |
|---|---|
| Resource Utilization Metrics | Before resizing your container capacity, it is crucial to analyze resource utilization metrics, such as CPU, memory, and disk usage. By assessing the current resource consumption patterns, you can accurately determine the required capacity increase and avoid over-provisioning resources. |
| Application Requirements | Understanding the specific requirements of your applications is vital in deciding the appropriate container size. Different applications may have unique resource needs, such as higher memory allocation or increased CPU power. By aligning the container size with the application requirements, you can optimize performance and enable efficient resource allocation. |
| Networking Considerations | Resizing container capacity may affect the networking aspects of your Docker environment. Ensure that your network infrastructure can support the increased container size and that you have considered factors such as network bandwidth, latency, and security requirements. |
| Data Storage | Expanding the container size may impact data storage requirements. Evaluate the storage capacity and scalability of your data storage solution to accommodate the increased container size and ensure seamless data access and management for your applications. |
| Orchestration and Management | Consider the implications of resizing on the orchestration and management of your Docker environment. Ensure that your container orchestration platform can handle the increased capacity and that you have planned for the necessary administrative and monitoring tasks. |

By carefully considering these factors, you can effectively resize the capacity of your Docker environment and support the growing demands of your applications, ultimately facilitating scalable and efficient software delivery.
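The "Resource Utilization Metrics" consideration can be turned into simple arithmetic: take the observed peak, add some headroom, and cap the result at what the host can actually spare. The sketch below does this for memory. The 25% default headroom and the function name `recommend_limit_mib` are assumptions for illustration, not a recommendation from Docker or AWS.

```python
import math


def recommend_limit_mib(
    peak_usage_mib: float,
    host_available_mib: float,
    headroom: float = 0.25,
) -> int:
    """Suggest a memory limit: peak usage plus headroom, capped by the host."""
    if peak_usage_mib < 0 or host_available_mib < 0 or headroom < 0:
        raise ValueError("inputs must be non-negative")
    target = math.ceil(peak_usage_mib * (1 + headroom))
    # Never recommend more than the host can provide.
    return int(min(target, host_available_mib))


if __name__ == "__main__":
    print(recommend_limit_mib(800, host_available_mib=4096))  # 1000
    print(recommend_limit_mib(800, host_available_mib=900))   # 900
```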

Monitoring and optimizing Docker container size on the Amazon Linux 2 platform

In this section, we will explore the techniques and strategies for effectively monitoring and optimizing the size of Docker containers running on the Amazon Linux 2 platform. By taking a proactive approach to managing the container size, you can minimize resource usage, improve performance, and reduce costs.

- Understanding the importance of container size optimization

- Implementing container size monitoring tools and techniques

- Analyzing container dependencies and usage patterns

- Identifying and removing unnecessary dependencies and files

- Implementing container size optimization best practices

- Optimizing container networking and storage

- Using container orchestration frameworks for efficient resource allocation

- Automating container size monitoring and optimization processes

- Considerations for scaling container size in a dynamic environment

By following the steps outlined in this section, you will be able to effectively monitor and optimize the size of your Docker containers on the Amazon Linux 2 platform, ensuring efficient resource utilization and improved overall performance.
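For the step of identifying unnecessary files, a quick inventory of which directories take the most space is often enough to get started. The following sketch does roughly what `du -s` does for each immediate subdirectory of a path, using only the Python standard library. Where you point it (for example a build context or an unpacked image filesystem) is up to you; the function name `largest_dirs` is hypothetical.

```python
import os
from typing import List, Tuple


def largest_dirs(root: str, top: int = 5) -> List[Tuple[str, int]]:
    """Return the largest immediate subdirectories of root as (name, bytes)."""
    sizes = []
    for entry in os.scandir(root):
        if not entry.is_dir(follow_symlinks=False):
            continue
        total = 0
        for dirpath, _dirnames, filenames in os.walk(entry.path):
            for fname in filenames:
                path = os.path.join(dirpath, fname)
                if os.path.islink(path):
                    continue  # do not count symlink targets
                try:
                    total += os.path.getsize(path)
                except OSError:
                    pass  # file vanished or is unreadable; skip it
        sizes.append((entry.name, total))
    return sorted(sizes, key=lambda item: item[1], reverse=True)[:top]


if __name__ == "__main__":
    for name, size in largest_dirs("."):
        print(f"{size / 2**20:8.1f} MiB  {name}")
```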

Troubleshooting common issues when adjusting the storage capacity of Docker containers

When managing the storage capacity of your Docker containers, there are various challenges that might arise. In this section, we will explore some of the common issues that you may encounter and how to troubleshoot them effectively.

- Insufficient disk space: One of the most common problems when adjusting container storage capacity is running out of disk space. This can occur due to a variety of reasons, such as large log files, data accumulation, or inefficient storage allocation. To resolve this issue, you can start by analyzing the disk space usage using commands like `df -h` or `du -sh`. Removing unnecessary files, optimizing storage allocation, and increasing disk size can help mitigate this problem.

- Performance degradation: Increasing the storage capacity of Docker containers can sometimes lead to performance degradation. This can be attributed to various factors, including inadequate system resources, inefficient disk I/O, or excessive container processes. To address this issue, consider optimizing resource allocation, monitoring performance metrics, and streamlining container processes. Implementing caching mechanisms and utilizing high-performance storage options can also enhance overall performance.

- Connectivity issues: Adjusting container storage capacity can disrupt network connectivity, for example when the change involves restarting the Docker daemon or recreating containers. This can result in communication failures between containers, services, or external systems. To troubleshoot connectivity issues, ensure that relevant firewall rules, network configurations, and DNS settings are correctly configured. Checking logs, monitoring network traffic, and verifying container networking configurations can help identify and resolve connectivity problems.

- Data integrity concerns: Increasing container storage size may raise concerns about data integrity. For example, expanding volumes associated with databases or critical file systems may introduce potential risks to data consistency and reliability. To address this, consider taking appropriate backup measures, implementing redundancy strategies, and performing thorough testing before making any changes. Regularly monitoring data integrity and performing validation checks can further minimize the risk of data corruption.

- Compatibility issues: Modifying container storage capacity can sometimes introduce compatibility problems with existing applications or frameworks. This can occur due to reliance on specific storage technologies, dependencies on storage paths, or rigid storage configurations. Before adjusting container storage, thoroughly assess the compatibility impact and plan accordingly. Testing in isolated environments, utilizing container orchestration platforms, and employing compatibility validation tools can help mitigate potential compatibility issues.

By understanding and troubleshooting these common issues, you can effectively manage and adjust the storage capacity of your Docker containers to meet the evolving requirements of your applications and infrastructure.
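The disk space check from the first item above can also be scripted. This is a minimal sketch that approximates what `df` reports for a single path, using `shutil.disk_usage` from the Python standard library. The 85% warning threshold is an arbitrary assumption, and the percentage may differ slightly from `df` because `df` accounts for reserved blocks.

```python
import shutil


def percent_used(total: int, used: int) -> float:
    """Return used space as a percentage of total, rounded to one decimal."""
    return round(used / total * 100, 1) if total else 0.0


def check_disk(path: str = "/", warn_at: float = 85.0) -> str:
    """Summarize disk usage for the filesystem that contains `path`."""
    usage = shutil.disk_usage(path)
    pct = percent_used(usage.total, usage.used)
    status = "WARN" if pct >= warn_at else "OK"
    free_mib = usage.free // 2**20
    return f"{status}: {path} is {pct}% full ({free_mib} MiB free)"


if __name__ == "__main__":
    print(check_disk("/"))
```

On a Docker host, pointing `check_disk` at `/var/lib/docker` (Docker's default data directory) shows the filesystem that images and container layers actually consume.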

FAQ

Can I change the size of a Docker container in Amazon Linux 2?

Yes. If "size" means the CPU and memory available to a container, you can raise them by modifying the container's resource constraints. Disk space is handled separately, typically by growing the host storage that backs Docker's data directory or by moving data onto volumes.

What are the steps to increase the size of a Docker container in Amazon Linux 2?

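To increase the CPU and memory available to a Docker container in Amazon Linux 2, use the `docker update` command and specify the new limits, for example with the `--memory` and `--cpus` options. On Linux hosts these limits can be changed while the container is running, so stopping and restarting it is generally not required. If the new memory limit exceeds the container's existing memory-plus-swap limit, set `--memory-swap` in the same command.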

Will increasing the size of a Docker container affect its performance?

Increasing the size of a Docker container can potentially improve its performance, especially if the previous resource limits were too low. However, it is important to consider the available resources on the host system and ensure that there is enough capacity to handle the increased container size.

Are there any limitations to increasing the size of a Docker container in Amazon Linux 2?

There are certain limitations to consider when increasing the size of a Docker container in Amazon Linux 2. For example, the host system must have enough resources available to handle the increased container size. Additionally, there may be specific limits imposed by the underlying infrastructure or cloud provider, such as maximum memory or CPU limits.