Developers need a reliable way to package, deploy, and run their applications across different environments. Containerization has become one of the most widely adopted answers: a container bundles an application with its dependencies into a lightweight, portable, self-contained unit, and this has changed how many teams build and deploy software. This article examines how operating system containers, usually just called containers, work in the Docker ecosystem.

Operating system containers provide a standardized and efficient way to run applications. Each container encapsulates an application and all its dependencies in a single isolated unit, so the application behaves consistently across development, test, and production hosts regardless of what else is installed on them. In the common case, a container does not boot its own operating system the way a virtual machine does: it shares the host's kernel, which isolates containers from one another and restricts the resources each can use. This gives faster startup and better resource utilization than virtual machines, but it also means a container needs a compatible kernel: a Linux container needs a Linux kernel, and a Windows container needs a Windows kernel.

In this article, we dissect the inner workings of containers and how they provide isolation, security boundaries, and scalability. We explore namespaces and control groups (cgroups), the kernel features that containerization is built on, and then cover image layers, container runtimes, and orchestration, all of which play a role in running containerized applications successfully. The goal is a clear picture of the mechanics underneath these tools.

Understanding the Fundamentals of Containerization Technology in Docker

This section covers the core principles of containerization and why they matter in the Docker ecosystem. As demand grew for lightweight, isolated environments that start faster than full virtual machines, containers became a standard part of modern application development and deployment.

- Introduction to Containerization: Understand the concept of containerization and its key advantages in terms of efficiency, portability, and scalability. Explore how it differs from traditional virtualization.

- Container Engines: Learn how a container engine creates, manages, and runs containers. Docker Engine (dockerd) is itself such an engine; on Linux it delegates container lifecycle management to containerd, which in turn invokes a low-level runtime such as runc.

- Isolation and Resource Allocation: Examine how containers are isolated from one another while sharing resources efficiently, including namespaces (which limit what a process can see) and control groups (which limit what it can use).

- Image Packaging and Distribution: Explore the process of packaging applications into container images and their subsequent distribution across different environments. Gain insights into the use of registries and repositories.

- Network and Storage Management: Examine the networking and storage capabilities within containerized environments. Discover how Docker enables efficient networking between containers and the host system, as well as the various storage options available.

- Security and Compliance: Assess the security aspects of containerization and understand the measures that Docker takes to ensure the integrity of containerized applications. Explore topics such as image signing, access control, and vulnerability scanning.

With these fundamentals in place, you will have a foundation for using both Windows and Linux containers effectively, and for making your development and deployment workflows more efficient and your applications more portable and scalable.

Exploring the differences between Windows and Linux containers

Windows and Linux containers share the same Docker tooling, but they differ in how they are isolated, which images they can run, and how they are networked. This section compares the two and shows how each caters to its own operating system.

| Feature | Windows Containers | Linux Containers |
|---|---|---|
| Host operating system | Run on Windows hosts (Windows Server, or Windows 10/11 with the Containers feature enabled). | Run on Linux hosts. On Windows and macOS, Docker Desktop runs them inside a lightweight Linux VM. |
| Isolation | Support two modes: process isolation, which shares the host kernel and separates containers with namespace and resource-control isolation in the Windows kernel, and Hyper-V isolation, which runs each container in a lightweight utility VM with its own kernel for a stronger boundary. | Share the host kernel and rely on Linux namespaces for isolation and control groups (cgroups) for resource limits. |
| Image compatibility | Require Windows base images such as Nano Server or Server Core. With process isolation, the image's Windows version generally must match the host's; Hyper-V isolation relaxes this. Linux images cannot run as Windows containers. | Images built on any distribution (Alpine, Debian, Ubuntu, and others) generally run on any Linux host with a compatible kernel, because only the kernel is shared, and without virtualization overhead. |
| Networking | Use the Host Networking Service (HNS) and the Hyper-V virtual switch, with network drivers such as nat, transparent, overlay, and l2bridge. | Use Linux networking primitives (network namespaces, veth pairs, bridges, and iptables or nftables rules), with drivers such as bridge, host, overlay, and macvlan. |
| Size and performance | Base images are large (Server Core is measured in gigabytes), and startup is slower, especially with Hyper-V isolation. | Minimal base images such as Alpine are a few megabytes, and containers often start in well under a second, making efficient use of resources. |

By understanding these differences, developers and system administrators can choose the right type of container for their requirements and constraints. In practice, the application's target operating system usually decides: Windows-specific workloads, such as .NET Framework or IIS applications, need Windows containers, while most other workloads run on lighter-weight Linux containers.
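Isolation is one difference you can see directly on the command line: a Windows daemon accepts `docker run --isolation=process` or `--isolation=hyperv`, while Linux containers always share the host kernel, so there is no alternative mode to request. The sketch below is a hypothetical helper, not part of Docker, that assembles (but does not execute) a `docker run` argument list; treating "untrusted" workloads as the reason to choose Hyper-V isolation is an assumption made for illustration.

```python
# Hypothetical helper (not part of Docker): builds `docker run` arguments
# that select an isolation mode appropriate to the daemon's OS.


def isolation_args(host_os: str, untrusted: bool = False) -> list[str]:
    """Return extra `docker run` arguments that select the isolation mode."""
    if host_os == "windows":
        # hyperv: each container gets its own kernel in a utility VM (stronger
        # boundary, slower start); process: the container shares the host kernel.
        return ["--isolation=hyperv" if untrusted else "--isolation=process"]
    if host_os == "linux":
        # Linux containers always share the host kernel via namespaces and
        # cgroups, so the flag is simply omitted.
        return []
    raise ValueError(f"unsupported host OS: {host_os!r}")


def run_command(image: str, host_os: str, untrusted: bool = False) -> list[str]:
    """Assemble (but do not execute) a `docker run` command line."""
    return ["docker", "run", "--rm", *isolation_args(host_os, untrusted), image]


if __name__ == "__main__":
    print(run_command("mcr.microsoft.com/windows/nanoserver:ltsc2022", "windows", untrusted=True))
    print(run_command("alpine:3.20", "linux"))
```

Keeping the OS decision in one function makes it easy to pass the result to `subprocess.run` later, or to swap in a different policy for when Hyper-V isolation is worth its startup cost.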

Facilitating Deployment with Docker

Docker makes containers practical for everyday application deployment by wrapping the underlying kernel isolation features in a consistent toolchain for building, shipping, and running them. This streamlined environment simplifies deployment in several ways.

One of the primary advantages of Docker is the isolation it provides, enabling applications to run independently within their own containers. This isolation ensures that each application has its own set of dependencies, libraries, and configurations, minimizing conflicts and enhancing compatibility.

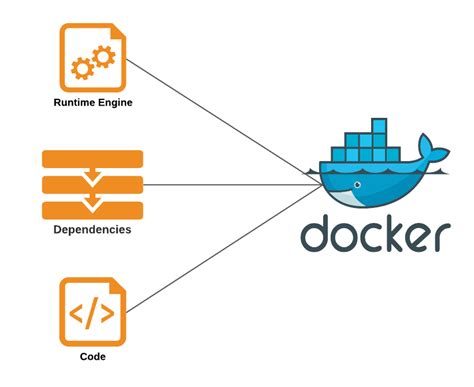

Additionally, Docker simplifies the deployment process by enabling the creation of container images, which encapsulate all the necessary components required to run an application. These images act as self-contained units that can be easily replicated, shared, and deployed across various environments.

- Docker lets you cap each container's CPU and memory usage, so several applications can share a host efficiently without one starving the others.

- Because containers share the host kernel instead of booting a guest operating system, they start quickly and add little overhead, which makes deployments fast.

- Docker provides a standard platform for managing deployments: images are versioned with tags, updated by replacing containers, and monitored through a single CLI and API.

- Docker's ecosystem adds tools that extend the deployment process, such as Docker Compose for multi-container applications and Docker Swarm for clustering, and Docker-built images also run on orchestrators such as Kubernetes.

In conclusion, Docker simplifies application deployment by building on the benefits of containerization: it isolates applications, produces portable container images, controls resource usage, and manages all of this from one platform. Together, these capabilities make it a strong foundation for efficient and scalable deployment.

Exploring the Architecture of Windows Containers within the Docker Ecosystem

This section looks at the architecture underlying Windows containers in the Docker ecosystem, to show how Windows containers operate and how they enable isolated, lightweight application deployments.

At the core of Windows containers lies containerization itself: encapsulating applications and their dependencies in isolated runtime environments. These containers provide a portable and consistent runtime environment, so an application behaves the same on any Windows host running a compatible version.

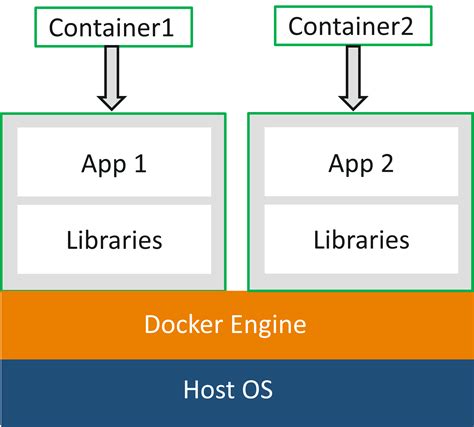

Windows containers rely on a layered architecture made up of several key components. The host operating system forms the foundation: its kernel, driven through the Host Compute Service (HCS), creates the isolated environments and divides resources among the containers.

- The container host is the physical or virtual machine running Windows with the Containers feature enabled. It runs the container engine, which mediates between the containers and the host operating system.

- The container runtime engine manages the lifecycle of individual containers: it creates, starts, and stops them, acting as a bridge between the host operating system and the containers themselves. Orchestration across many hosts is a separate layer, handled by tools such as Kubernetes or Docker Swarm.

- Container images are the building blocks of Windows containers. Each image is a standalone, layered package containing all the components and dependencies a specific application needs, built on a Windows base image such as Nano Server or Server Core. Images can be pulled and deployed on any compatible Windows host, which promotes flexibility and scalability.

On each host, Windows containers are managed by Docker Engine, which provides the commands and API for building, distributing, and running containers and makes the process more efficient and user-friendly.

Understanding this architecture shows what each layer contributes, which helps developers reason about isolation, compatibility, and deployment when building scalable, portable applications with Windows containers.

Advantages of Using Windows Containers in Docker

Using Windows containers in Docker offers several advantages that give organizations more flexibility, scalability, and reliability in managing their applications and services.

1. Lightweight and Isolated Environments: Windows containers ensure lightweight and isolated environments that enable the encapsulation of applications and their dependencies. This encapsulation allows for easy sharing and deployment, eliminating conflicts between various components and simplifying the development and deployment process.

2. Portability and Compatibility: Windows container images run on any Windows host with a compatible version, whether on-premises or in the cloud. This lets organizations move applications and services between these environments while keeping their behavior consistent, provided the target host runs Windows.

3. Rapid Deployment and Scalability: With Docker's Windows containers, organizations can quickly deploy and scale applications, using resources efficiently as demand changes. Rapid provisioning simplifies deployment and shortens downtime, and when combined with an orchestrator that restarts or replaces failed instances, it supports high availability.

4. Enhanced Security and Resource Management: Windows containers benefit from Docker's controls over access, permissions, and network configuration, and for untrusted workloads Hyper-V isolation provides a stronger boundary than process isolation. Containerization also enables per-container resource limits and keeps potentially vulnerable components isolated from the rest of the system.

5. Simplified Application Maintenance: By utilizing Windows containers, organizations can easily update and maintain their applications without disrupting the entire system. Containers can be individually managed and updated, reducing the complexity and improving the reliability of the maintenance process.

Overall, Windows containers in Docker help organizations build and deploy Windows applications efficiently, strengthen security, and run those applications consistently across Windows environments.

Exploring the architecture and advantages of Linux containers in Docker

This section covers the structure and benefits of Linux containers in the Docker ecosystem and how they contribute to Docker's overall effectiveness.

Understanding the Architecture:

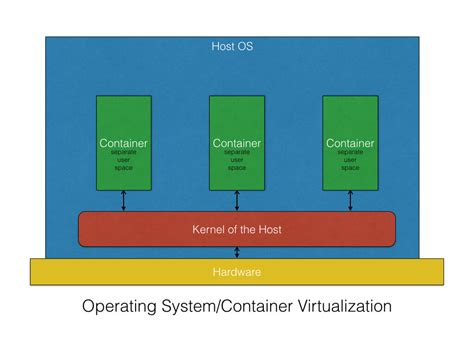

Linux containers isolate applications from the host system using two Linux kernel features: namespaces, which give each container its own view of process IDs, mounts, network interfaces, hostnames, and more, and control groups (cgroups), which limit the CPU, memory, and I/O each container can use. Linux Containers (LXC) is one userspace toolset built on these features; Docker originally used LXC but replaced it with its own libcontainer library, which now lives on in the runc runtime. As a result, each container has its own file system, network interfaces, and process space while sharing the host kernel.
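You can observe namespace isolation from inside any Linux process. Each entry in `/proc/<pid>/ns` is a symbolic link whose target, such as `net:[4026531840]`, names a namespace type and an inode number, and two processes share a namespace exactly when those targets are equal. The minimal sketch below assumes a Linux host with `/proc` mounted; the helper names are illustrative.

```python
import os
import re

# A namespace link target looks like "net:[4026531840]": type, then inode.
_NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a /proc/<pid>/ns/* link target into (namespace type, inode)."""
    match = _NS_LINK.match(target)
    if match is None:
        raise ValueError(f"not a namespace link: {target!r}")
    return match["kind"], int(match["inode"])


def namespaces(pid: str = "self") -> dict[str, int]:
    """Map each ns entry (net, pid, mnt, ...) to its inode (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for entry in sorted(os.listdir(ns_dir)):
        _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))
        result[entry] = inode
    return result


def shared_namespaces(a: dict[str, int], b: dict[str, int]) -> set[str]:
    """Entries in which two processes are NOT isolated from each other."""
    return {entry for entry in a.keys() & b.keys() if a[entry] == b[entry]}
```

Printing `namespaces()` on the host and again inside a container typically shows different `net`, `pid`, and `mnt` inodes; starting the container with `--network host` or `--pid host` makes the corresponding entry match the host again.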

Advantages of Linux Containers in Docker:

Linux containers in Docker offer numerous advantages that have led to their widespread adoption in the software development community. First, because they are lightweight, containers are easier to deploy, scale, and manage than traditional virtual machines. They also provide a consistent, reproducible runtime environment across systems, which greatly reduces "it works on my machine" problems (the host kernel is still shared, so kernel-level differences can remain).

Furthermore, Linux containers enable efficient resource utilization, allowing many containers to run on a single host while remaining isolated. This improves efficiency and cost-effectiveness, particularly in cloud computing and microservices architectures. Containers can also be started, stopped, and restarted quickly, which speeds up development, testing, and deployment cycles.
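Control groups are what enforce the per-container limits that make this sharing safe. The sketch below assumes a host using cgroup v2, where Docker gives each container a private cgroup namespace by default, so a process inside a container started with `--memory 512m` can read its own limit from `/sys/fs/cgroup/memory.max`. That file holds either a byte count or the literal string `max` when no limit is set; the helper names are illustrative.

```python
from pathlib import Path
from typing import Optional

# cgroup v2 interface file holding the memory limit for this process's cgroup.
MEMORY_MAX = "/sys/fs/cgroup/memory.max"


def parse_memory_max(text: str) -> Optional[int]:
    """Return the limit in bytes, or None when the cgroup is unlimited ("max")."""
    value = text.strip()
    return None if value == "max" else int(value)


def current_memory_limit(path: str = MEMORY_MAX) -> Optional[int]:
    """Read the caller's memory limit; only meaningful on cgroup v2 hosts."""
    return parse_memory_max(Path(path).read_text())


def describe(limit: Optional[int]) -> str:
    """Human-readable form of a limit in MiB (Docker's "m" suffix means MiB)."""
    return "unlimited" if limit is None else f"{limit / (1024 * 1024):.0f} MiB"
```

Inside a container started with `docker run --memory 512m`, `current_memory_limit()` would be expected to return 536870912 (512 * 1024 * 1024 bytes), while a container started without `--memory` reports `max`.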

In conclusion, understanding the architecture and advantages of Linux containers in Docker provides valuable insights into their crucial role in modern software development. Leveraging the isolation and efficiency of Linux containers allows for streamlined application management, increased scalability, and enhanced resource utilization, ultimately contributing to the success of Docker as a containerization platform.

An overview of containerization technologies used in Windows and Linux

In this section, we will delve into the fundamental concepts of containerization technologies employed in both the Windows and Linux operating systems. Rather than focusing solely on Docker, we will explore the broader landscape of containerization, examining the different methods and approaches used to isolate and manage applications within containers. By understanding the key principles and mechanisms behind these technologies, we can gain insights into how they offer enhanced portability, scalability, and efficiency for modern software development and deployment.

The Process of Building and Running Containers on the Windows Platform with Docker

This section walks through creating and running containers with Docker on the Windows platform, step by step, from identifying requirements to managing running containers.

1. Identifying Container Requirements

- Understanding the specific software requirements and dependencies of the application to be containerized.

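- Ensuring compatibility with the Windows platform and selecting an appropriate Windows base image (for example, Nano Server for small, modern applications or Server Core for applications that need more of the Windows API, such as .NET Framework apps), with a version that matches the host when using process isolation.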

2. Configuring Container Environment

- Defining the desired configurations and settings for the container.

- Specifying networking options, storage, security constraints, and resource limits.

3. Writing the Dockerfile

- Creating a Dockerfile that defines the instructions for building the container image.

- Using instructions such as `FROM`, `COPY`, `RUN`, and `WORKDIR` to add the necessary files and dependencies, configure the environment, and set up the application.

4. Building the Image and Running the Container

- Running `docker build` to generate the container image from the Dockerfile.

- Verifying that the image was created, for example with `docker images`.

- Starting a container from the image with `docker run`, specifying any required runtime parameters such as port mappings, resource limits, or the isolation mode (`--isolation=process` or `--isolation=hyperv`).

5. Interacting with the Container

- Opening an interactive command prompt inside the container, for example with `docker exec -it <container> cmd`, to perform administrative tasks.

- Using `docker exec` to run additional one-off commands inside a running container.

- Reading the container's logs with `docker logs` to troubleshoot issues or monitor application behavior.

6. Managing Containers

- Monitoring the container's resource usage and performance with `docker stats`.

- Stopping, starting, and restarting containers as needed with `docker stop`, `docker start`, and `docker restart`.

- Removing containers that are no longer required with `docker rm`.
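The steps above can be sketched end to end. The Python below writes a minimal Windows Dockerfile and lists the commands for steps 4 to 6 in order. The image name `myapp`, the `app` folder, and `app.cmd` (assumed to be a long-running script) are hypothetical placeholders, and the `ltsc2022` Nano Server tag assumes a Windows Server 2022 host, since with process isolation the base image version should match the host. The `` # escape=` `` directive makes the backtick the Dockerfile escape character so that Windows paths can use backslashes.

```python
import subprocess
from pathlib import Path

# Hypothetical placeholders: adjust the image name, container name, and app files.
BASE_IMAGE = "mcr.microsoft.com/windows/nanoserver:ltsc2022"
IMAGE = "myapp:1.0"
NAME = "myapp"

# The escape directive must be the first line; it lets C:\app use a backslash.
DOCKERFILE = f"""\
# escape=`
FROM {BASE_IMAGE}
WORKDIR C:\\app
COPY app/ .
CMD ["cmd", "/c", "app.cmd"]
"""

COMMANDS = [
    ["docker", "build", "-t", IMAGE, "."],             # 4: build the image
    ["docker", "images", IMAGE],                       # 4: verify it exists
    ["docker", "run", "-d", "--name", NAME,
     "--isolation=process", "--memory", "1g", IMAGE],  # 4: start a container
    ["docker", "exec", NAME, "cmd", "/c", "dir"],      # 5: run a command inside
    ["docker", "logs", NAME],                          # 5: read its output
    ["docker", "stats", "--no-stream", NAME],          # 6: one resource snapshot
    ["docker", "stop", NAME],                          # 6: stop it
    ["docker", "rm", NAME],                            # 6: remove it
]


def write_dockerfile(project_dir: str) -> Path:
    """Write the Dockerfile into the project directory (next to app/)."""
    path = Path(project_dir) / "Dockerfile"
    path.write_text(DOCKERFILE, encoding="utf-8")
    return path


def run_workflow(dry_run: bool = True) -> None:
    """Print the commands, or execute them in order when dry_run is False."""
    for cmd in COMMANDS:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)  # stop at the first failing step
```

Running with `dry_run=True` first is a cheap way to review the exact command lines before executing them on a container host.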

By following these steps, users can effectively build and run containers on the Windows platform using Docker. Understanding the process and the various tools and options available will allow developers and administrators to efficiently leverage containerization technology to manage and deploy applications.

Creating and Deploying Linux Containers in Docker: Step-by-step Guide

This guide walks through creating and deploying Linux containers in Docker, step by step, from installation to troubleshooting. Along the way, it shows how containerization makes computing environments agile and portable and shares practical advice for each stage.

Step 1: Installation and Setup

Start by installing Docker and setting up your development environment. On Linux, install Docker Engine from your distribution's packages or from Docker's own repositories; on Windows and macOS, Docker Desktop provides the engine and runs Linux containers inside a lightweight VM.
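After installing, it helps to confirm that the Docker daemon is reachable and is running Linux containers (Docker Desktop on Windows can be switched to Windows containers). A minimal sketch, assuming the `docker` CLI is on the `PATH`, calls `docker version --format '{{.Server.Os}}'`, which prints the daemon's operating system; the function names are illustrative.

```python
import shutil
import subprocess
from typing import Optional


def docker_server_os(timeout: float = 30.0) -> Optional[str]:
    """Return the daemon's OS ("linux" or "windows"), or None if unavailable."""
    if shutil.which("docker") is None:
        return None  # CLI not installed or not on PATH
    try:
        proc = subprocess.run(
            ["docker", "version", "--format", "{{.Server.Os}}"],
            capture_output=True, text=True, timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return None
    if proc.returncode != 0:
        return None  # daemon not running, or no permission to reach it
    return proc.stdout.strip() or None


def ready_for_linux_containers(server_os: Optional[str]) -> bool:
    """True only when a reachable daemon reports Linux as its OS."""
    return server_os == "linux"


if __name__ == "__main__":
    os_name = docker_server_os()
    if ready_for_linux_containers(os_name):
        print("Docker is ready for Linux containers.")
    else:
        print(f"Docker is not ready for Linux containers (daemon OS: {os_name}).")
```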

Step 2: Creating a Dockerfile

The Dockerfile is at the heart of Linux container creation. It lists, in order, the instructions Docker follows to assemble an image: the base image to start from, the files to copy in, the commands to run, and the default command to start. Learning its syntax lets you customize your containers' contents and behavior.
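As an illustration, the sketch below writes a minimal Dockerfile for a hypothetical Python web application (`app.py` listening on port 8000, with dependencies listed in `requirements.txt`); these file names and the `python:3.12-slim` base image are assumptions. `RUN` and `COPY` each add a filesystem layer, while `EXPOSE` and `CMD` only record image metadata. Copying `requirements.txt` before the rest of the source lets Docker reuse the cached dependency layer when only application code changes.

```python
from pathlib import Path

# Minimal Dockerfile for a hypothetical Python app (app.py, requirements.txt).
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
EXPOSE 8000
CMD ["python", "app.py"]
"""


def write_dockerfile(project_dir: str) -> Path:
    """Write the Dockerfile at the root of the build context and return its path."""
    path = Path(project_dir) / "Dockerfile"
    path.write_text(DOCKERFILE, encoding="utf-8")
    return path
```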

Step 3: Building the Container Image

Building turns your Dockerfile into a container image. `docker build` executes each instruction in order, caches the resulting layers so that unchanged steps are reused on later builds, and tags the finished image so you can run it.
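The build itself is a single command. This hypothetical helper assembles the `docker build` arguments: `-t` names and tags the image, `-f` is added only when a non-default Dockerfile is wanted (otherwise Docker uses `Dockerfile` at the root of the context), the optional `--platform` targets an OS/architecture such as `linux/amd64`, and the build context comes last.

```python
import subprocess
from typing import Optional


def build_command(tag: str, context: str = ".", dockerfile: Optional[str] = None,
                  platform: Optional[str] = None) -> list[str]:
    """Assemble a `docker build` command line (hypothetical helper)."""
    cmd = ["docker", "build", "-t", tag]
    if dockerfile is not None:
        cmd += ["-f", dockerfile]  # default is <context>/Dockerfile
    if platform is not None:
        cmd += ["--platform", platform]  # e.g. "linux/amd64" or "linux/arm64"
    cmd.append(context)  # the build context is the final positional argument
    return cmd


def build(tag: str, **options) -> None:
    """Run the build, raising CalledProcessError if Docker reports a failure."""
    subprocess.run(build_command(tag, **options), check=True)
```

After `build("myapp:1.0")` succeeds, `docker images myapp` should list the new tag.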

Step 4: Running the Container

A single `docker run` command starts a container from your newly built image. Its options let you publish ports (`-p host_port:container_port`), give the container a name (`--name`), and run it in the background (`-d`); afterward, `docker ps`, `docker stop`, and `docker rm` help you manage your running containers.
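These options map directly onto `docker run` flags. The hypothetical helper below builds the command line for an image such as `myapp:1.0`, publishing host port 8080 to container port 8000 in the usage example (both ports are assumptions carried over from the example app), with optional environment variables passed through `-e`.

```python
import subprocess
from typing import Mapping, Optional


def run_command(image: str, name: str, ports: Mapping[int, int],
                env: Optional[Mapping[str, str]] = None,
                detach: bool = True) -> list[str]:
    """Assemble a `docker run` command line (hypothetical helper)."""
    cmd = ["docker", "run"]
    if detach:
        cmd.append("-d")  # run in the background and print the container ID
    cmd += ["--name", name]
    for host_port, container_port in sorted(ports.items()):
        cmd += ["-p", f"{host_port}:{container_port}"]  # host:container
    for key, value in sorted((env or {}).items()):
        cmd += ["-e", f"{key}={value}"]
    cmd.append(image)  # the image (and any command override) comes last
    return cmd


def start(image: str, name: str, ports: Mapping[int, int]) -> str:
    """Start the container and return the ID that `docker run -d` prints."""
    result = subprocess.run(run_command(image, name, ports),
                            capture_output=True, text=True, check=True)
    return result.stdout.strip()
```

For example, after `start("myapp:1.0", "web", {8080: 8000})`, the app should be reachable at `http://localhost:8080`, and `docker ps` lists the container as `web`.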

Step 5: Deploying the Container

Once your container runs locally, you can deploy it to other environments, including on-premises servers and cloud platforms. The usual approach is to push the image to a registry, such as Docker Hub or a private registry, and then pull and run it on the target host. We also discuss best practices and considerations that help the deployment integrate cleanly with your infrastructure.
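The registry-based path can be sketched as two short command lists: one for the build machine (retag and push) and one for the target host (pull, replace, start). In this sketch the registry `registry.example.com`, the repository `team/myapp`, and the helper names are hypothetical, and `docker login` to the registry is assumed to have been done on both machines.

```python
def remote_ref(registry: str, repository: str, version: str) -> str:
    """Fully qualified image reference, e.g. registry/repository:version."""
    return f"{registry}/{repository}:{version}"


def push_commands(local_image: str, remote: str) -> list[list[str]]:
    """Commands for the build machine: retag the local image, then upload it."""
    return [
        ["docker", "tag", local_image, remote],
        ["docker", "push", remote],
    ]


def deploy_commands(remote: str, name: str,
                    host_port: int, container_port: int) -> list[list[str]]:
    """Commands for the target host: download, replace, and start."""
    return [
        ["docker", "pull", remote],
        # Removes any previous instance; exits non-zero if none exists,
        # so callers should tolerate a failure of this step.
        ["docker", "rm", "-f", name],
        ["docker", "run", "-d", "--name", name, "--restart", "unless-stopped",
         "-p", f"{host_port}:{container_port}", remote],
    ]
```

Pinning an explicit version such as `1.0` rather than `latest` makes it clear which image each host is running and simplifies rollbacks.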

Step 6: Monitoring and Scaling

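Docker includes flexible tools for monitoring and scaling Linux containers. `docker stats` reports live CPU and memory usage, the `--cpus` and `--memory` flags cap what each container may consume, and Docker Compose or an orchestrator can run several replicas of a service. Together, these let you manage resources efficiently, improve performance, and track the health and status of your containers.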
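`docker stats` can also feed simple automated checks. The sketch below, assuming a Linux host, takes one snapshot with `--no-stream` and a custom `--format` template, then flags containers above an 80% CPU or memory threshold; the thresholds and helper names are illustrative. Note that CPU percentages can exceed 100% on multi-core hosts.

```python
import subprocess

# Tab-separated fields requested from `docker stats` via a Go template.
STATS_FORMAT = "{{.Name}}\t{{.CPUPerc}}\t{{.MemPerc}}"


def _percent(field: str) -> float:
    """Convert "12.34%" to 12.34; treat unavailable values ("--") as 0.0."""
    value = field.strip().rstrip("%")
    return 0.0 if value in ("", "--") else float(value)


def parse_stats(output: str) -> dict[str, tuple[float, float]]:
    """Map container name -> (CPU %, memory %) from `docker stats` output."""
    usage = {}
    for line in output.splitlines():
        if not line.strip():
            continue
        name, cpu, mem = line.split("\t")
        usage[name] = (_percent(cpu), _percent(mem))
    return usage


def over_threshold(usage: dict[str, tuple[float, float]],
                   cpu_pct: float = 80.0, mem_pct: float = 80.0) -> list[str]:
    """Names of containers above either threshold (candidates to scale or tune)."""
    return sorted(name for name, (cpu, mem) in usage.items()
                  if cpu > cpu_pct or mem > mem_pct)


def sample() -> dict[str, tuple[float, float]]:
    """Take one snapshot of all running containers."""
    out = subprocess.run(
        ["docker", "stats", "--no-stream", "--format", STATS_FORMAT],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_stats(out)
```

When a service stays above its thresholds, options include raising its `--cpus` or `--memory` limits or running more replicas, for example with `docker compose up --scale <service>=3`.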

Step 7: Troubleshooting and Debugging

Finally, we cover techniques for resolving common issues that arise during the container lifecycle: `docker logs` shows a container's output, `docker inspect` reveals its configuration and exit status, and `docker exec` lets you investigate a running container from the inside.
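A frequent troubleshooting task is working out why a container stopped. `docker inspect` exposes the container's state through Go templates, including `.State.Status`, `.State.ExitCode`, and `.State.OOMKilled`. The sketch below interprets those fields; the wording of the diagnoses is illustrative. It relies on the shell convention that an exit code above 128 means the process was terminated by signal (code minus 128), so 137 usually indicates SIGKILL.

```python
import subprocess

# Three space-separated state fields requested from `docker inspect`.
INSPECT_FORMAT = "{{.State.Status}} {{.State.ExitCode}} {{.State.OOMKilled}}"


def explain_exit(status: str, exit_code: int, oom_killed: bool) -> str:
    """Turn a container's recorded state into a short diagnosis."""
    if status == "running":
        return "still running; check `docker logs` for application errors"
    if oom_killed:
        return "killed for exceeding its memory limit; raise --memory or reduce usage"
    if exit_code == 0:
        return "exited normally"
    if exit_code > 128:
        # Convention: 128 + N means the main process died from signal N.
        return f"terminated by signal {exit_code - 128}"
    return f"application error (exit code {exit_code}); check `docker logs`"


def diagnose(container: str) -> str:
    """Query Docker for the container's state and explain it."""
    out = subprocess.run(
        ["docker", "inspect", "--format", INSPECT_FORMAT, container],
        capture_output=True, text=True, check=True,
    ).stdout
    status, code, oom = out.split()
    return explain_exit(status, int(code), oom == "true")
```

For instance, `docker stop` sends SIGTERM and then SIGKILL after a grace period, so a container whose process ignored SIGTERM would be expected to report signal 9.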

By following this step-by-step guide, you will understand how to create and deploy Linux containers in Docker and gain the confidence to apply containerization in your own development workflow.

Enhancing the Efficiency of Containerization in Docker

Using Windows and Linux containers well is key to getting the most out of containerization. The following best practices and strategies help you use them effectively in the Docker ecosystem.

| Best Practice | Key Considerations |
|---|---|
| Container image optimization | Optimizing images for both Windows and Linux conserves resources, reduces image size, and speeds up deployment and scaling. |
| Version control and image tagging | Versioning images and tagging them consistently makes changes easier to manage, track, and roll back, keeping updates and releases consistent. |
| Security and vulnerability management | Adopting robust security practices, updating images regularly, and monitoring for vulnerabilities significantly reduces risk and helps keep containerized environments secure. |
| Resource allocation | Allocating and limiting resources such as CPU, memory, and storage per container improves performance and avoids bottlenecks or waste. |
| Scalability and load balancing | Load balancing combined with an orchestration platform such as Kubernetes or Docker Swarm enables smooth scaling, better availability, and efficient resource use. |
| Monitoring and logging | Comprehensive monitoring and logging help analyze container performance, identify bottlenecks, troubleshoot issues, and keep operations running smoothly. |
| Automated testing and CI/CD integration | Automated tests and continuous integration/continuous deployment (CI/CD) pipelines make deployments of containerized applications fast and reliable, reducing downtime and improving overall efficiency. |

By following these best practices and considering the unique characteristics of Windows and Linux containers, organizations can harness the full potential of Docker containerization, achieve optimal performance, reduce overheads, and enable agile and scalable software development and deployment processes.

FAQ

What are Windows and Linux containers?

Windows and Linux containers are lightweight, isolated environments for running applications and services efficiently on their respective operating systems. Windows containers run on Windows hosts (Windows Server or Windows 10/11), and Linux containers run on Linux hosts.

How does Docker enable the functioning of Windows or Linux containers?

Docker provides a platform for creating and managing containers. It uses containerization technology to isolate applications and package them with their dependencies into portable containers, which can then run on any host whose operating system matches the container's: Linux containers on Linux, Windows containers on Windows.

What is the difference between Windows and Linux containers in Docker?

The main difference lies in the underlying operating system. Windows containers use Windows as the base OS and have compatibility with Windows-specific tools and libraries, while Linux containers use Linux as the base OS and have compatibility with Linux-specific tools and libraries.

Can you run Windows containers on Linux or vice versa?

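Not natively. A container shares its host's kernel, so a Windows container needs a Windows kernel and a Linux container needs a Linux kernel. On Windows, Docker Desktop runs Linux containers inside a lightweight Linux VM (using WSL 2 or Hyper-V) and lets you switch between Linux and Windows container modes, but Windows containers cannot run on a Linux host. Docker checks an image's platform against the daemon's and refuses to run images built for a different operating system.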

What are some benefits of using Windows or Linux containers in Docker?

Both Windows and Linux containers in Docker offer benefits such as improved scalability, faster deployment, resource efficiency, isolation of applications, and easier management and versioning of software components.