Building small, reliable Docker images is a core skill for Linux developers. A large part of that skill is knowing how to add modules, meaning the packages, libraries, and plugins your application depends on, to an image through the Dockerfile without bloating it. Done well, this keeps containers lightweight while improving their stability and functionality.

Embracing Dynamic Extension

Dynamic extension, often referred to as module loading, lets a Docker image adapt to different environments. Instead of baking every optional component into the image, the Dockerfile installs a common core, and the application loads optional modules or plugins at runtime as the target platform requires.

Optimizing Resource Utilization

Efficient module loading techniques enable you to judiciously manage your Docker image's resource consumption. With the ability to dynamically load and unload modules, you can finely tailor your container's functionality and minimize unnecessary overhead. This optimization not only enhances resource utilization but also contributes to faster startup times and improved overall performance.

Enhancing Flexibility and Adaptability

Incorporating module loading capabilities in your Dockerfile unlocks a world of possibilities, empowering your containerized applications to seamlessly adapt and evolve. With the ability to selectively load modules at runtime, you can effortlessly achieve a modular architecture that facilitates seamless upgrades, plugin integration, and customization. This flexibility allows your Docker image to serve as a foundation for a wide variety of applications and user requirements.

By loading modules efficiently, you can build Docker images that are adaptable and resource-efficient. The sections below cover how images are built, the main methods for adding modules, common problems, and best practices.

Introduction to Dockerfile and Creation of Docker Images

In this section, we will explore the fundamental concepts of the Dockerfile and the process of creating Docker images. A Dockerfile is a text file that contains a set of instructions for building a Docker image. It serves as a blueprint for creating consistent and reproducible containers that encapsulate applications and their dependencies.

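Creating a Docker image involves constructing a layered filesystem. Instructions that change the filesystem, such as `RUN`, `COPY`, and `ADD`, each add a new layer, while instructions such as `ENV` or `CMD` only record metadata. Layers are incremental and cached: when an instruction and its inputs are unchanged, the builder reuses the existing layer, which makes rebuilds faster.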
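The effect of layer caching depends on instruction order. The following is a minimal sketch, assuming a Python application with a `requirements.txt` and a `main.py` in the build context (both file names are illustrative, not taken from this article):

```dockerfile
# Sketch: place rarely changing steps first so their layers stay cached.
FROM python:3.12-slim

WORKDIR /app

# The dependency manifest changes rarely. Copying it on its own means the
# install layer below is reused until requirements.txt itself changes.
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Application source changes often. Only the layers from here on are rebuilt.
COPY . .

# main.py is a hypothetical entry point.
CMD ["python", "main.py"]
```

If the `COPY . .` line came before the `pip install` step, every source change would invalidate the dependency layer and force a full reinstall.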

The Dockerfile consists of a series of instructions that define the environment and steps required to build an image. These instructions include selecting a base image (`FROM`), installing software packages (`RUN`), copying files (`COPY`), setting environment variables (`ENV`), documenting the ports the application listens on (`EXPOSE`), and defining the command the container runs (`CMD` or `ENTRYPOINT`).
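As an illustration, the instructions listed above might be combined as follows. This is a sketch rather than a recommended template; the `start.sh` script, the `APP_HOME` variable, and port 8080 are assumptions made for the example:

```dockerfile
# Base image to build on
FROM debian:bookworm-slim

# Environment variable available during the build and in the container
ENV APP_HOME=/opt/app

# Install a package and remove the apt package lists in the same layer
RUN apt-get update \
    && apt-get install -y --no-install-recommends curl \
    && rm -rf /var/lib/apt/lists/*

# Copy a (hypothetical) start script from the build context
COPY start.sh ${APP_HOME}/start.sh

# Document the listening port; this does not publish it to the host
EXPOSE 8080

# Command executed when a container starts from this image
CMD ["/bin/sh", "/opt/app/start.sh"]
```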

By structuring the Dockerfile correctly, we can create lightweight and efficient images that include only the components needed to run our applications. Optimizing the Dockerfile can lead to faster builds, smaller image sizes, and improved security.

Once the Dockerfile is ready, we build the image with the `docker build` command of the Docker command-line interface (CLI), for example `docker build -t myapp .`, where `myapp` is a tag of your choosing. The CLI sends the Dockerfile and the build context to the builder, which executes the instructions in order and produces a Docker image.

In conclusion, understanding Dockerfile and the process of creating Docker images is crucial for effectively using Docker containers. It allows developers and system administrators to package their applications and dependencies into portable and isolated environments, enabling easy deployment and scalability.

Understanding the Process of Incorporating Components in a Docker Image

When building a Docker image, it is essential to have a comprehensive understanding of how to incorporate various components or modules into the image. Utilizing these components enables you to enhance the functionality and capabilities of the image, adapting it precisely to your requirements. This section aims to shed light on the intricacies of incorporating modules in a Dockerfile, highlighting the process and importance of this integration.

| Steps in Incorporating Modules | Key Aspects to Consider |
|---|---|

By comprehending the process and considering the key aspects, you will be able to execute the incorporation of modules effectively. This understanding empowers you to optimize the capabilities and performance of your Docker image, resulting in an efficient and robust environment for your applications.

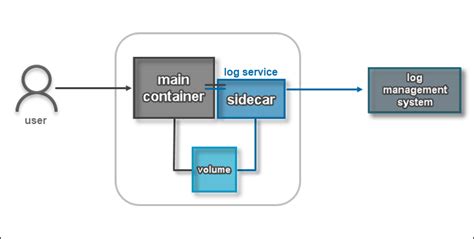

Methods for Incorporating Additional Functionality within Docker Containers

A base image rarely contains everything an application needs. The missing pieces are added as modules: packages, plugins, libraries, tools, and other dependencies that extend the functionality of the base image. This section explores four methods for loading modules within a Dockerfile, each of which customizes and expands what the resulting container can do.

Method 1: Utilizing Package Managers

A widely adopted approach to loading modules within a Dockerfile is leveraging package managers, such as apt-get (for Debian-based systems) or yum (for Red Hat-based systems). Package managers enable the installation of specific software packages or libraries, along with their dependencies, from predefined repositories. By including appropriate package manager commands in the Dockerfile, users can easily load modules and ensure their availability within the container environment.
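A minimal sketch of the package manager approach on a Debian-based image is shown below. The packages `curl` and `ca-certificates` are arbitrary examples chosen for illustration:

```dockerfile
FROM debian:bookworm-slim

# Update the package index, install the packages, and delete the index
# in a single RUN so the cleanup actually reduces the layer size.
# --no-install-recommends skips optional packages that are not required.
RUN apt-get update \
    && apt-get install -y --no-install-recommends \
        ca-certificates \
        curl \
    && rm -rf /var/lib/apt/lists/*
```

Running `apt-get update` and `apt-get install` in the same instruction also avoids installing from a stale, cached package index.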

Method 2: Utilizing Source Code Compilation

Another method for loading modules involves compiling the source code of the desired software directly within the Dockerfile. This approach allows for fine-grained control over the installation process and ensures that the modules are tailored to the specific requirements of the container. By downloading the source code from trusted repositories or official websites, users can use build tools like make or cmake within the Dockerfile to compile and install modules, resulting in an optimized and customized environment.
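Compiling from source could look like the sketch below. It assumes a hypothetical source tree `./mymodule` in the build context whose Makefile provides default and `install` targets; real projects may need a `./configure` or `cmake` step first:

```dockerfile
FROM debian:bookworm-slim

# Install the compiler toolchain (gcc, make, and related tools)
RUN apt-get update \
    && apt-get install -y --no-install-recommends build-essential \
    && rm -rf /var/lib/apt/lists/*

# Hypothetical source directory copied from the build context
COPY ./mymodule /usr/src/mymodule
WORKDIR /usr/src/mymodule

# Compile using all available cores, then install
RUN make -j"$(nproc)" && make install
```

The toolchain remains in the resulting image with this approach, which is one reason source builds are often combined with multi-stage builds.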

Method 3: Utilizing External Scripts

For complex module loading requirements or cases where additional configuration is necessary, utilizing external scripts within the Dockerfile can be a viable solution. These scripts can be written in any desired programming language, such as Bash or Python, and can incorporate module installation commands as well as any necessary configuration steps. By executing these scripts within the Dockerfile, users can ensure a streamlined and automated process for loading modules while maintaining flexibility and customization.
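The script-based approach can be sketched as follows, assuming a hypothetical POSIX shell script named `install-modules.sh` in the build context that performs the installation and configuration steps:

```dockerfile
FROM debian:bookworm-slim

# install-modules.sh is a hypothetical script supplied with the project
COPY install-modules.sh /tmp/install-modules.sh

# Execute the script during the build; a non-zero exit status
# from the script fails the build at this step.
RUN sh /tmp/install-modules.sh
```

Keeping the logic in a script makes it testable outside Docker, at the cost that any change to the script invalidates the cache for this step and everything after it.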

Method 4: Utilizing Pre-built Docker Images

A convenient method for loading modules is by utilizing pre-built Docker images that are specifically designed for the desired functionality. These images, often referred to as "base images" or "supplementary images," already include the required modules and dependencies. By selecting an appropriate pre-built image as the base image within the Dockerfile, users can directly inherit the desired modules, eliminating the need for explicit module loading steps. This method offers simplicity and ease of use, particularly for commonly required functionality.
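For example, an application that needs Node.js does not have to install it manually. The sketch below starts from the official `node` image; the file `server.js` and the presence of a `package-lock.json` (required by `npm ci`) are assumptions for the example:

```dockerfile
# The official image already contains the node runtime and npm
FROM node:20-slim

WORKDIR /app

# Install production dependencies from the lockfile
COPY package*.json ./
RUN npm ci --omit=dev

# Copy the application source
COPY . .

# server.js is a hypothetical entry point
CMD ["node", "server.js"]
```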

By employing these diverse methods for loading modules within a Dockerfile, users can effectively customize and enhance the capabilities of Docker containers to match their specific requirements and use cases. Whether through package managers, source code compilation, external scripts, or pre-built images, Docker provides a flexible and extensible platform for incorporating additional functionality into containers.

The Significance of Properly Loading Modules

Loading modules correctly plays a pivotal role in ensuring the smooth functioning and optimal performance of a system. Properly importing modules is essential for effectively incorporating additional functionalities, enhancing security measures, and maintaining stability within a system.

The process of loading modules refers to incorporating external software components or extensions into a system to expand its capabilities. By correctly loading modules, system administrators can bolster the functionality and versatility of their applications, empowering them to handle diverse tasks and requirements.

Loading modules correctly not only enables the integration of new features but also assists in managing security vulnerabilities. By carefully selecting and importing only trusted and secure modules, developers can mitigate the risks associated with potential security breaches and safeguard the integrity of their system.

Furthermore, loading modules correctly promotes system stability and efficient resource management. By ensuring that the necessary modules and dependencies are loaded in a systematic and organized manner, administrators can avoid conflicts, minimize crashes, and optimize the overall performance of their system.

In conclusion, understanding the importance of loading modules correctly is crucial for system administrators and developers. By diligently incorporating modules, system functionality can be expanded, security measures can be enhanced, and stability can be maintained. Therefore, it is of utmost importance to prioritize the proper loading of modules to ensure the smooth operation and success of any system.

Troubleshooting Common Issues with Loading Modules in Dockerfile

When working with Docker and managing modules within your Dockerfile, it is not uncommon to encounter various issues that can hinder the loading and functionality of these modules. This section aims to address some of the most common issues you may face, providing troubleshooting tips and solutions to overcome them.

One common issue is the inability to import or load specific modules, which can be caused by incorrect module naming, missing dependencies, or conflicts with other modules or libraries. To resolve this, double-check the module names and ensure they are spelled correctly and match the required naming conventions. Additionally, verify that all necessary dependencies are installed, and if conflicts arise, try using different versions or alternative modules that offer similar functionality.
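One way to surface such problems early is to import the module during the build, so a missing dependency fails the build rather than the running container. A small sketch, using the Python package `requests` purely as an example:

```dockerfile
FROM python:3.12-slim

RUN pip install --no-cache-dir requests

# Smoke test: the build stops here if the module cannot be imported
RUN python -c "import requests; print(requests.__version__)"
```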

Another common problem is permission errors when loading modules. These usually occur because the process in the container runs as a non-root user that cannot read the module's files, or because the container lacks a Linux capability the module needs. To fix the first case, set file ownership at build time, for example with `COPY --chown`. For the second, grant only the specific capability with `docker run --cap-add` rather than running the container with `--privileged`.
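File ownership problems can often be avoided in the Dockerfile itself. The following sketch creates an unprivileged user and gives it ownership of the application files; the user name `app` and the file `main.py` are arbitrary choices for the example:

```dockerfile
FROM python:3.12-slim

# Create an unprivileged user with a home directory
RUN useradd --create-home --shell /usr/sbin/nologin app

WORKDIR /home/app

# Copy the files and assign ownership to the unprivileged user
COPY --chown=app:app . .

# Later instructions and the running container use this user
USER app

CMD ["python", "main.py"]
```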

In some cases, the module loading process may result in performance issues or slowdowns, affecting the overall efficiency of your applications. This can be caused by several factors, such as large module sizes, inefficient code implementation, or high resource consumption. To improve performance, consider optimizing your code, implementing caching mechanisms, or using smaller, more lightweight modules where possible.

Lastly, it is crucial to keep your modules and dependencies up to date to ensure compatibility and avoid potential issues. Regularly check for updates from module developers or maintainers, and incorporate them into your Dockerfile's build process. Additionally, make sure to thoroughly test any new modules or updates before deploying them to production environments, to identify and address any compatibility or functionality issues beforehand.
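Updates are easier to control when versions are pinned explicitly. In the sketch below, the build argument name `REQUESTS_VERSION` and the pinned version are illustrative assumptions:

```dockerfile
FROM python:3.12-slim

# Pinned version with a default; override at build time with
#   docker build --build-arg REQUESTS_VERSION=<version> .
ARG REQUESTS_VERSION=2.32.3

RUN pip install --no-cache-dir "requests==${REQUESTS_VERSION}"
```

Because the version is part of the `RUN` instruction's input, changing it invalidates the cached layer and triggers a fresh install.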

By troubleshooting these common issues with loading modules in your Dockerfile, you can ensure smooth and efficient module integration, enhancing the overall reliability and performance of your Docker containers and applications.

Best Practices for Incorporating Additional Functionality in Docker Image Build Process

In the context of creating Docker images, it is often necessary to include additional functionality by loading modules and dependencies. This can enhance the capabilities of the resulting image and enable the execution of specific tasks or applications.

When incorporating modules into the Docker image build process, it is important to follow best practices to ensure efficiency and maintainability. These practices involve selecting appropriate modules, managing dependencies effectively, and optimizing the build process.

1. Selecting Suitable Modules: A crucial aspect of incorporating modules is selecting the most suitable ones for the desired functionality. It is important to carefully evaluate the requirements of the project or application and choose modules that fulfill those needs. This involves considering factors such as compatibility, performance, and security.

2. Managing Dependencies: Modules often have dependencies on other components or libraries. It is essential to properly manage these dependencies to ensure the smooth functioning of the modules within the Docker image. This can be achieved by using package managers, like apt or yum, to install the required dependencies. Additionally, it is advisable to avoid unnecessary dependencies to minimize the overall size of the Docker image.

3. Optimizing the Build Process: To ensure efficient and streamlined module loading, optimizing the Docker image build process is crucial. This can involve techniques such as multi-stage builds, where only necessary components are included in the final image. Furthermore, leveraging caching mechanisms can significantly reduce build time by reusing previously built layers.

4. Version Control and Updates: Regularly updating and versioning the loaded modules is essential to keep the Docker image up to date and secure. This can involve following release notes and security advisories to ensure timely updates. Additionally, maintaining a version control system for the Dockerfile and associated scripts can aid in tracking changes and rolling back if necessary.
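The multi-stage technique from point 3 can be sketched as follows. The example assumes a Go application with `go.mod` and `go.sum` in the build context; the stage name `build` and the output path are arbitrary:

```dockerfile
# Build stage: contains the full Go toolchain
FROM golang:1.22 AS build
WORKDIR /src

# Download dependencies first so this layer is cached between source edits
COPY go.mod go.sum ./
RUN go mod download

# Compile a statically linked binary
COPY . .
RUN CGO_ENABLED=0 go build -o /out/app .

# Final stage: only the compiled binary is carried over
FROM debian:bookworm-slim
COPY --from=build /out/app /usr/local/bin/app
ENTRYPOINT ["/usr/local/bin/app"]
```

The compiler, source code, and downloaded dependencies stay in the build stage, so the final image contains little beyond the base image and the binary.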

By following these best practices, developers can ensure that the Docker image incorporates the required additional functionality effectively, promotes maintainability, and optimizes the build process.

FAQ

Why do I need to load modules in a Dockerfile?

Containers share the kernel of the host, so kernel modules are never part of a Docker image. If your application relies on a feature provided by a kernel module, that module must be loaded into the host kernel before, or while, the container runs. The Dockerfile's role is limited to installing the user-space tools and libraries that use the feature.

How can I load modules in a Dockerfile?

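A Dockerfile is not the right place to load kernel modules. A `RUN modprobe` instruction executes in an unprivileged build container and typically fails; even if it succeeded, it would only affect the kernel of the build machine and would not be recorded in the image. Instead, load the module on the host with the `modprobe` command followed by the name of the module before starting the container. If the container itself must load the module, install the `kmod` package in the image, start the container with `--cap-add SYS_MODULE`, mount the host's `/lib/modules` directory read-only, and call `modprobe` from the container's entrypoint script.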

What should I do if a module fails to load in a Docker image?

If a module fails to load, there are several things you can try. First, make sure the module is available for the kernel version running on the host (shown by `uname -r`), since the image has no kernel of its own. Check that the modules it depends on are present; `modprobe` resolves dependencies automatically when the host's module directory is visible. If the module is loaded from inside the container, confirm that the container has the `SYS_MODULE` capability and access to `/lib/modules`. Finally, review the host's kernel log with `dmesg` for the specific error.

Can I load multiple modules in a Dockerfile?

Yes, but as with a single module, this happens on the host or at container start, not during the build. `modprobe -a` accepts several module names in one call, or you can issue one `modprobe` command per module. Dockerfile syntax itself has no loops or conditional statements; if modules must be loaded selectively, put that logic in a shell script, such as the container's entrypoint.