HDF5 is a file format and library for storing and managing large, structured datasets. In this article, we walk through installing HDF5 in a Docker image based on Alpine Linux 3.13.

Docker gives the HDF5 installation an isolated, reproducible environment: the dependencies and configuration are captured in the image, which makes the application portable across systems. Alpine Linux 3.13 is a small base image, so building on it keeps the final image lightweight.

Throughout this step-by-step tutorial, we will demonstrate how to install and configure HDF5 within a Docker container running on Linux Alpine 3.13. We will explore the fundamental concepts of Docker, guiding you through the process of setting up your development environment. With our in-depth explanations and practical examples, even those new to Docker and HDF5 will be able to follow along seamlessly.

By the end of this tutorial, you will have not only obtained a comprehensive understanding of how to integrate HDF5 into a Dockerized Linux Alpine 3.13 environment but also gained the knowledge to adapt this process to suit your specific requirements. So let's dive in and unlock the immense potential that HDF5 offers for managing and analyzing your data!

Setting up a Docker Environment

In this section, we will explore the process of creating a Docker environment tailored for installing and running HDF5 using the Linux Alpine 3.13 distribution. By following these steps, you will be able to set up a self-contained and efficient environment that allows for easy deployment and management of HDF5 within a Docker container.

To begin, Docker must be installed on the host system. The host can run Alpine Linux 3.13, but any system that runs Docker will do, because Alpine is only required inside the image. Once Docker is installed, we will configure the environment by creating an Alpine-based Docker image. This image will serve as the foundation for hosting HDF5 and any additional dependencies or customizations.
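If the host itself runs Alpine, Docker can usually be installed from the distribution's package repositories. The sketch below wraps the commands in a hypothetical helper function, `install_docker_on_alpine`. It assumes a root shell, OpenRC as the init system, and that the community repository is enabled in `/etc/apk/repositories`. On other host systems, follow Docker's own installation instructions instead.

```shell
# Hypothetical helper: install and start Docker on an Alpine host.
# Assumes root privileges and an enabled community repository.
install_docker_on_alpine() {
    apk add docker                # Docker engine and CLI
    rc-update add docker default  # start the daemon at boot (OpenRC)
    service docker start          # start the daemon now
}
```

After calling `install_docker_on_alpine`, running `docker version` should show both a client and a server section if the daemon is reachable.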

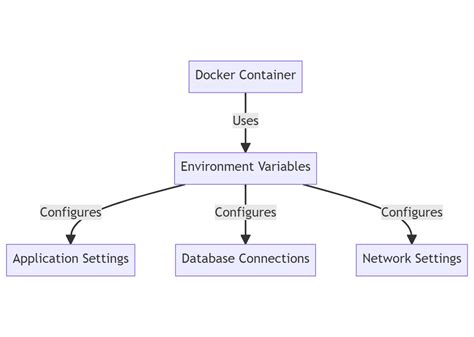

In order to ensure the image is optimized for HDF5, we will explore common practices such as minimizing the image size, installing necessary packages and libraries, and setting up the appropriate environment variables. These steps will ensure a smooth installation and operation of HDF5 within the Docker container.

Additionally, we will cover best practices for managing Docker images and containers, including techniques for monitoring and troubleshooting. By following these guidelines, you will be able to maintain a reliable and scalable Docker environment for your HDF5 installation.
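As a starting point for monitoring and troubleshooting, the standard Docker CLI already covers the common questions: is the container running, what did it print, and how much space do the images take. The helper names below (`inspect_container`, `docker_disk_usage`) are hypothetical and only group existing Docker commands.

```shell
# Hypothetical helper: summarize the state of one container.
# Usage: inspect_container <container-name>
inspect_container() {
    docker ps -a --filter "name=$1"   # running, exited, or restarting?
    docker logs --tail 50 "$1"        # last 50 lines of output
    docker stats --no-stream "$1"     # one-shot CPU and memory snapshot
}

# Hypothetical helper: show how much disk space images and containers use.
docker_disk_usage() {
    docker image ls
    docker system df
}
```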

Finally, we will discuss the benefits of using a Docker environment for HDF5, such as improved portability, reproducibility, and ease of collaboration. We will also touch upon advanced topics, such as integrating HDF5 with other Docker containers or orchestration tools.

By the end of this section, you will have a well-configured Docker environment ready for installing HDF5, setting the stage for the subsequent steps in our comprehensive guide.

Downloading and Configuring the Alpine 3.13 Image

In this section, we will explore the process of acquiring and setting up the Alpine 3.13 image for our Docker environment. This image serves as the foundation for our HDF5 installation and provides a lightweight and secure Linux distribution that we can work with.

First, we need to download the Alpine 3.13 image. Open your preferred terminal or command line interface and execute the following command:

| Command | Description |
|---|---|
| `docker pull alpine:3.13` | Downloads the Alpine 3.13 image from Docker Hub. |

Once the image is downloaded, we can proceed to configure it according to our requirements. To do this, we need to create a Dockerfile that outlines the necessary instructions for building our customized image.

Open a text editor and create a new file called Dockerfile. Inside this file, we will define the steps to configure the Alpine 3.13 image. Here is an example of a basic Dockerfile for our setup:

| Instruction | Description |
|---|---|
| `FROM alpine:3.13` | Sets the base image for our Docker image as Alpine 3.13. |
| `RUN apk update && apk upgrade` | Updates and upgrades the packages in the Alpine image. |
| `RUN apk add --no-cache build-base` | Installs the necessary build tools for compiling HDF5. |
| `RUN apk add --no-cache hdf5-dev` | Installs the HDF5 development package. |

Feel free to customize the Dockerfile according to your specific requirements.
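The same file can also be created from the shell. The sketch below collects the instructions from the table into one `RUN` instruction, which produces a single layer and tends to keep the image smaller. It assumes that `hdf5-dev` is available in the repositories enabled in the base image; if `apk` cannot find it, `apk search hdf5` inside a container shows which HDF5 packages are on offer.

```shell
# Write the Dockerfile described above into the current directory.
cat > Dockerfile <<'EOF'
FROM alpine:3.13

# One RUN instruction means one layer; --no-cache avoids storing the apk index.
RUN apk upgrade --no-cache \
 && apk add --no-cache build-base hdf5-dev
EOF
```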

Save the Dockerfile and return to your terminal or command line interface. Navigate to the directory where the Dockerfile is located and execute the following command to build your customized Alpine 3.13 image:

| Command | Description |
|---|---|
| `docker build -t my_alpine:3.13 .` | Builds a new Docker image based on the Dockerfile in the current directory and tags it as my_alpine:3.13. |

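With the image built, you now have a customized Alpine 3.13 image that contains the build tools and the HDF5 headers and libraries provided by the hdf5-dev package. The sections that follow describe building HDF5 from source instead, which is useful when you need a different version or custom build options.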
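A quick way to confirm what ended up in the image is to run a few read-only commands in throwaway containers. This is a minimal sketch: `check_image` is a hypothetical helper name, and the image tag is the one used in the build command above.

```shell
IMAGE="my_alpine:3.13"

# Hypothetical helper: run a few read-only checks inside throwaway containers.
check_image() {
    docker run --rm "$1" cat /etc/alpine-release   # prints the Alpine version
    docker run --rm "$1" apk info -e hdf5-dev      # prints the name if installed
    docker run --rm "$1" gcc --version             # gcc comes with build-base
}
```

Call it as `check_image "$IMAGE"`. The `--rm` flag removes each container as soon as its command exits.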

Installing HDF5 Dependencies

In order to successfully install HDF5 in a Docker image with Linux Alpine 3.13, it is essential to first ensure that all the necessary dependencies are in place. These dependencies are crucial for the proper functioning of HDF5 and its various features.

Here is a step-by-step guide to installing the HDF5 dependencies:

- Update the package index: `apk update`

- Install the build tools required for compiling HDF5: `apk add build-base`

- Install the C library development files: `apk add libc-dev`

- Install the Fortran compiler: `apk add gfortran`

- Install the zlib compression library: `apk add zlib-dev`

- Install the bzip2 compression library: `apk add bzip2-dev`

- Install the szip compression library: `apk add szip-dev`

- Install the libjpeg-turbo library: `apk add libjpeg-turbo-dev`

By following these steps and installing the necessary dependencies, you will be ready to proceed with the installation of HDF5 in your Docker image. These dependencies ensure that HDF5 functions optimally and provides a reliable environment for performing data analysis and manipulation.
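The individual commands above can be combined into one step. The sketch below uses a hypothetical helper, `install_hdf5_build_deps`, that first reports any package name `apk` cannot find and then installs the whole set. The package names are copied from the list above and are not verified against a specific Alpine release: `libc-dev` is normally pulled in by `build-base` already, and some names may not exist in the repositories you have enabled.

```shell
# Package names copied from the list above; adjust to what your release offers.
HDF5_BUILD_DEPS="build-base libc-dev gfortran zlib-dev bzip2-dev szip-dev libjpeg-turbo-dev"

# Hypothetical helper: report unknown package names, then install the set.
install_hdf5_build_deps() {
    apk update
    for pkg in $HDF5_BUILD_DEPS; do
        # "apk search -e" matches the exact package name only
        if [ -z "$(apk search -e "$pkg")" ]; then
            echo "not found in enabled repositories: $pkg" >&2
        fi
    done
    # Intentionally unquoted so each name becomes its own argument.
    apk add $HDF5_BUILD_DEPS
}
```

If `apk add` fails because one name is unknown, remove or replace that name in `HDF5_BUILD_DEPS` and run the helper again.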

Downloading and Compiling HDF5

In this section, we will explore the process of acquiring and compiling HDF5, a widely used library and file format for storing and managing large amounts of numerical data. By following the steps outlined below, you will be able to obtain the source code and compile HDF5 in your Alpine Linux 3.13 environment.

Here are the steps to download and compile HDF5:

- Visit the official HDF5 website to find the latest version of the software.

- Download the HDF5 source code tarball to your local machine.

- Extract the contents of the tarball using the appropriate command.

- Navigate to the extracted directory.

- Configure the HDF5 build by specifying any desired options and settings.

- Compile the HDF5 source code using the make command.

- Verify the successful compilation of HDF5 by running the appropriate tests.

- Install HDF5 onto your system using the make install command.

By following these steps carefully, you will have successfully downloaded and compiled HDF5 on your Linux Alpine 3.13 system. This will enable you to take advantage of the powerful features provided by HDF5 for managing and manipulating large datasets.
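The steps above can be sketched as a small build script. This is an outline under stated assumptions, not a definitive recipe: `HDF5_TARBALL_URL` is a hypothetical variable that you must set to the tarball URL taken from the official download page, `build-hdf5.sh` is an arbitrary file name, and the tarball is assumed to contain a single top-level directory.

```shell
# Write a build script that can be copied into the image or run in a container.
cat > build-hdf5.sh <<'EOF'
#!/bin/sh
# Usage: HDF5_TARBALL_URL=<url from the HDF5 download page> sh build-hdf5.sh
set -eu

: "${HDF5_TARBALL_URL:?set HDF5_TARBALL_URL to the source tarball URL}"
PREFIX="${PREFIX:-/usr/local}"

mkdir -p /tmp/hdf5-src && cd /tmp/hdf5-src
wget -O hdf5.tar.gz "$HDF5_TARBALL_URL"      # download the source tarball
tar -xzf hdf5.tar.gz --strip-components=1    # extract into this directory
./configure --prefix="$PREFIX"               # configure the build
make -j"$(nproc)"                            # compile
make check                                   # run the bundled tests
make install                                 # install under $PREFIX
EOF
chmod +x build-hdf5.sh
```

The `make check` step can take a long time. Leaving it out speeds up image builds at the cost of skipping the library's own test suite.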

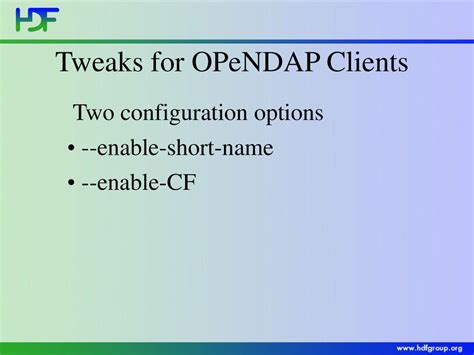

Configuring HDF5 Installation Options

In this section, we will explore various options for configuring the installation of HDF5 in your Docker image with Linux Alpine 3.13. By understanding and leveraging these options, you can tailor the HDF5 installation to meet the specific requirements of your project.

Setting Installation Prefix

One of the primary options to consider is the installation prefix, which determines the directory where HDF5 will be installed on your system. By specifying a custom prefix, you can organize the HDF5 installation according to your desired file structure and naming conventions.
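As a minimal sketch, the prefix is passed to `configure`, and the same path is then added to the environment so that later steps can find the tools and shared libraries. The location `/opt/hdf5` is only an example.

```shell
PREFIX=/opt/hdf5   # example install location, not a required path

# Run from the extracted HDF5 source directory.
if [ -x ./configure ]; then
    ./configure --prefix="$PREFIX"
else
    echo "no ./configure here; change into the HDF5 source directory first" >&2
fi

# After "make install", make the tools and libraries visible to later steps.
export PATH="$PREFIX/bin:$PATH"
export LD_LIBRARY_PATH="$PREFIX/lib${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"
```

In a Dockerfile, the two `export` lines would typically become `ENV` instructions so that they persist in the final image.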

Selecting Compression Libraries

HDF5 supports various compression algorithms for efficient storage and retrieval of data. Depending on your needs, you can choose the compression libraries to include during the installation process. This allows you to optimize HDF5's performance and disk space consumption based on the types of datasets you plan to work with.

Enabling Parallel I/O Support

If you anticipate working with large datasets and require high-performance data access, enabling parallel I/O support in HDF5 can speed up read and write operations by distributing the work across multiple processes or nodes. Parallel HDF5 is built on MPI, so an MPI implementation and its compiler wrapper must be installed before configuring the build, and applications must themselves use MPI to benefit from it.
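A parallel build is requested with the `--enable-parallel` configure option while compiling with the MPI compiler wrapper. The sketch below assumes that an MPI implementation providing `mpicc` is already installed in the image; which Alpine package supplies it is not covered here.

```shell
PARALLEL_ARGS="--enable-parallel"

# Run from the extracted HDF5 source directory, with mpicc on the PATH.
if command -v mpicc >/dev/null 2>&1 && [ -x ./configure ]; then
    CC=mpicc ./configure $PARALLEL_ARGS
else
    echo "mpicc or ./configure not found; skipping parallel configuration" >&2
fi
```

By default, the parallel build cannot be combined with the C++ interface, so leave out `--enable-cxx` when using this option.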

Customizing Chunk Layout

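HDF5 can store a dataset in chunks to optimize storage and access. Note that chunking is not an installation option: the chunk shape and any compression filter are chosen per dataset when it is created, or changed afterwards by rewriting the file with a tool such as h5repack. Matching these parameters to the access patterns of your datasets can improve access efficiency and reduce storage overhead. What the build does determine is which compression filters are available at all.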
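For existing files, the `h5repack` tool that ships with HDF5 can rewrite a dataset with a different chunk shape and compression filter. The sketch below wraps it in a hypothetical helper, `rechunk`. The chunk dimensions must match the rank of the dataset, and the gzip filter is only available if the library was built with zlib.

```shell
# Hypothetical helper: copy a file, giving one dataset a new chunk shape
# and gzip compression level.
# Usage: rechunk <in.h5> <out.h5> <dataset> <chunk-dims> <gzip-level>
#   e.g. rechunk in.h5 out.h5 /values 100x100 6
rechunk() {
    h5repack -l "$3:CHUNK=$4" -f "$3:GZIP=$5" "$1" "$2"
}
```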

Specifying File Format Versions

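HDF5 introduces new features and file format changes with each major release. At build time, the configure option --with-default-api-version selects which API version the library's compatibility macros map to by default, which helps when compiling applications written against an older release. The range of file format versions used when writing files is not fixed at installation: applications set it at run time (in the C API, through H5Pset_libver_bounds), which lets you keep files readable by older tools.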

Enabling Optional Features

In addition to the core functionality, HDF5 offers optional features and plugins that provide extended capabilities. Depending on your requirements, you may want to include or exclude specific features during installation to keep the resulting HDF5 installation lean and focused on your project's needs.

By understanding and leveraging these configuration options, you can customize the HDF5 installation in your Docker image and strike a balance between performance, functionality, and compatibility for your specific use case.
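Several of the options discussed in this section can be combined in one `configure` call. The selection below is illustrative only: the prefix is an example path, `--enable-fortran` needs a Fortran compiler, and the accepted values for `--with-default-api-version` depend on the HDF5 release being built (`v110` assumes 1.10 or later). Running `./configure --help` in the source directory lists what your version supports.

```shell
# Illustrative choices only; see "./configure --help" for the full list.
CONFIGURE_ARGS="--prefix=/opt/hdf5 \
--with-zlib \
--enable-cxx \
--enable-fortran \
--with-default-api-version=v110"

if [ -x ./configure ]; then
    # Intentionally unquoted so the string splits into separate arguments.
    ./configure $CONFIGURE_ARGS
else
    echo "would run: ./configure $CONFIGURE_ARGS"
fi
```

At the end of its run, `configure` prints a summary of the selected features, which is a convenient place to check that each option was picked up.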

Building HDF5 in the Docker Image

In this section, we will explore the process of constructing HDF5 within the Docker image to enable the utilization of its powerful features. Throughout this process, we will delve into the intricacies and steps required to successfully build HDF5, ensuring a seamless integration into your environment.

- Begin by setting up the necessary dependencies and tools required for building HDF5. These include the installation of essential libraries and packages, which will enable smooth compilation and execution of HDF5 within the Docker image.

- Next, we will obtain the source code for HDF5, acquiring the latest version from the official repository. It is crucial to ensure that the appropriate version is selected for compatibility and optimal performance.

- Once the source code is obtained, we will proceed to configure the build settings according to the specific requirements of your environment. This step involves customizing options, enabling desired features, and tailoring the build process to suit your needs.

- After the configuration is complete, we will initiate the build process by executing the designated commands. This step will compile the HDF5 source code, generating the necessary binaries and libraries that will be integrated into the Docker image.

- Upon successful completion of the build process, we will proceed to install HDF5 within the Docker image. This step involves copying the generated binaries and libraries into the appropriate directories, ensuring their accessibility and seamless integration into the image.

- Finally, we will perform necessary tests and verifications to ensure that HDF5 is functioning correctly within the Docker image. This step involves executing sample applications or scripts that utilize HDF5, validating its performance and verifying the successful integration.

By following these steps, you will be able to construct and incorporate HDF5 into your Docker image effortlessly. Building HDF5 within the Docker image allows for convenient utilization of its extensive capabilities, providing a powerful tool for data management and analysis within your Linux Alpine 3.13 environment.
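One way to put these steps into an image is a multi-stage build: the first stage compiles HDF5, and the second stage copies only the installed files, so the compilers do not end up in the final image. The sketch below makes several assumptions: `HDF5_TARBALL_URL` is a hypothetical build argument that you must supply, `Dockerfile.hdf5-src` and `/opt/hdf5` are arbitrary names, the tarball has a single top-level directory, and only the C library with zlib support is built.

```shell
# Write a multi-stage Dockerfile under a separate name.
cat > Dockerfile.hdf5-src <<'EOF'
# Stage 1: compile HDF5 from source.
FROM alpine:3.13 AS builder
# Hypothetical build argument; pass the real tarball URL at build time.
ARG HDF5_TARBALL_URL
RUN apk add --no-cache build-base zlib-dev tar wget
WORKDIR /tmp/hdf5
RUN wget -O hdf5.tar.gz "$HDF5_TARBALL_URL" \
 && tar -xzf hdf5.tar.gz --strip-components=1 \
 && ./configure --prefix=/opt/hdf5 --with-zlib \
 && make -j"$(nproc)" \
 && make install

# Stage 2: copy only the installed files into a clean image.
FROM alpine:3.13
RUN apk add --no-cache zlib
COPY --from=builder /opt/hdf5 /opt/hdf5
ENV PATH="/opt/hdf5/bin:${PATH}" \
    LD_LIBRARY_PATH="/opt/hdf5/lib"
EOF
```

The image could then be built with `docker build -f Dockerfile.hdf5-src --build-arg HDF5_TARBALL_URL=<url> -t my_hdf5:src .`, where the tag is again just an example. If the tools in the final image report missing shared objects, add the corresponding runtime packages to the second stage.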

Verifying the HDF5 Installation

In this section, we will validate the successful installation of HDF5 in the Docker image running Linux Alpine 3.13. Verifying the installation ensures that all necessary components of HDF5 are properly installed and configured.

To begin the verification process, we will check for the presence of HDF5 libraries and executables. This can be done by running the following command in the terminal:

h5dump -V

If the command returns the version number of HDF5, it indicates that the installation was successful. However, if an error message or command not found message appears, it suggests that the installation was not completed correctly.
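To check several tools in one go, a short loop over the tool names is enough. This sketch only reports what is on the `PATH`. Depending on how HDF5 was installed, the `h5cc` compiler wrapper may or may not be present.

```shell
# Report which HDF5 command-line tools are on the PATH.
missing=0
for tool in h5dump h5ls h5cc; do
    if command -v "$tool" >/dev/null 2>&1; then
        echo "found:   $tool -> $(command -v "$tool")"
    else
        echo "missing: $tool"
        missing=$((missing + 1))
    fi
done
echo "$missing of 3 tools missing"

# If the compiler wrapper exists, print the start of the build configuration.
if command -v h5cc >/dev/null 2>&1; then
    h5cc -showconfig | head -n 20
fi
```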

Additionally, we can verify the HDF5 installation by inspecting an HDF5 file. Assuming a file named example.h5 already exists in the current directory, run:

h5dump example.h5

This command prints the structure and contents of example.h5. To display only the header information (groups, datasets, datatypes, and dataspaces) without the data, add the -H option. If the output is displayed correctly, it confirms that the HDF5 installation is functional.

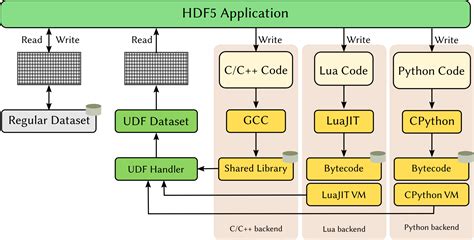

Furthermore, we can test the HDF5 installation by writing a simple C or Python program that utilizes the HDF5 library. By compiling and executing the program, we can ensure that the HDF5 library is accessible and functioning as expected.
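A minimal version of such a test might look like the sketch below. It writes a small C program that creates `example.h5` with one 2x3 integer dataset, then compiles and runs it if the `h5cc` wrapper is available. The file name, the dataset name `/values`, and the data are arbitrary choices for illustration.

```shell
# Write a small C program that creates example.h5 with one 2x3 integer dataset.
cat > write_example.c <<'EOF'
#include <hdf5.h>

int main(void)
{
    int data[2][3] = {{1, 2, 3}, {4, 5, 6}};
    hsize_t dims[2] = {2, 3};

    hid_t file  = H5Fcreate("example.h5", H5F_ACC_TRUNC, H5P_DEFAULT, H5P_DEFAULT);
    hid_t space = H5Screate_simple(2, dims, NULL);
    hid_t dset  = H5Dcreate2(file, "/values", H5T_NATIVE_INT, space,
                             H5P_DEFAULT, H5P_DEFAULT, H5P_DEFAULT);
    if (file < 0 || space < 0 || dset < 0)
        return 1;

    herr_t status = H5Dwrite(dset, H5T_NATIVE_INT, H5S_ALL, H5S_ALL,
                             H5P_DEFAULT, data);

    H5Dclose(dset);
    H5Sclose(space);
    H5Fclose(file);
    return status < 0 ? 1 : 0;
}
EOF

# Compile and run only if the h5cc wrapper is available.
if command -v h5cc >/dev/null 2>&1; then
    h5cc -o write_example write_example.c && ./write_example && h5dump example.h5
else
    echo "h5cc not found; try: gcc -o write_example write_example.c -lhdf5" >&2
fi
```

If the program compiles, runs, and `h5dump` prints the six values, the headers, the library, and the tools are all working together.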

In conclusion, verifying the HDF5 installation is crucial to ensure that the Docker image with Linux Alpine 3.13 is correctly equipped with HDF5 and its components. By performing these verification steps, we can confidently proceed with utilizing HDF5 for data storage and manipulation in our development environment.

Testing HDF5 with a Simple Example

In this section, we will explore how to test the functionality of HDF5 using a straightforward example. Working through a simple application of HDF5 gives you a clearer picture of its capabilities and potential applications.

Firstly, we will define a sample dataset that we will use for testing purposes. This dataset consists of various data points that represent a hypothetical experiment's results. We will employ HDF5 to store and manage this data efficiently, ensuring easy accessibility and robustness.

Next, we will walk through the process of creating an HDF5 file using the HDF5 library. We will define a hierarchical structure for our data, specifying groups, datasets, and attributes that enable logical organization and efficient storage of information.

Once we have created the HDF5 file, we will demonstrate how to retrieve and manipulate data from it. We will explore retrieving specific datasets or subsets of the data to demonstrate the flexibility and power provided by the HDF5 library.
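Without writing any code, the command-line tools that ship with HDF5 can already list a file's structure and print part of a dataset. The helper names below (`list_objects`, `dump_subset`) are hypothetical wrappers around `h5ls` and `h5dump`. The file and dataset names in the usage comment are placeholders.

```shell
# Hypothetical helper: list every group and dataset in a file, recursively.
# Usage: list_objects <file>
list_objects() {
    h5ls -r "$1"
}

# Hypothetical helper: print a rectangular subset (hyperslab) of one dataset.
# Usage: dump_subset <file> <dataset> <start> <count>
#   e.g. dump_subset example.h5 /values "0,1" "2,2"
dump_subset() {
    h5dump -d "$2" -s "$3" -c "$4" "$1"
}
```

The start and count arguments are comma-separated lists with one entry per dimension, so the example call would select a 2x2 block beginning at row 0, column 1 of a two-dimensional dataset.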

In addition to data retrieval, we will cover how to modify and update existing datasets within an HDF5 file. This capability allows for dynamic updates to the stored data, making HDF5 a versatile choice for handling evolving datasets.

Finally, we will discuss the importance of thoroughly testing and validating HDF5 functionality. We will outline best practices for testing HDF5, including stress-testing the library, checking for data integrity, and ensuring compatibility with different operating systems and HDF5 versions.

By following this approach and conducting rigorous testing, you can confidently leverage HDF5 for your specific data storage and management requirements.

FAQ

What is HDF5?

HDF5 stands for Hierarchical Data Format version 5. It is a file format and library for storing and managing large amounts of heterogeneous data.

Why would I need to install HDF5 in a Docker image?

You might need to install HDF5 in a Docker image if you are working on a project that requires HDF5 functionality and you want to use Docker for containerization and deployment.

What is Alpine Linux 3.13?

Alpine Linux is a lightweight Linux distribution built around musl libc and BusyBox, known for its small size and security focus; 3.13 is one of its releases. It is often used as a base image for Docker containers.

Can HDF5 be used with other programming languages?

Yes, HDF5 can be used with multiple programming languages. The HDF5 distribution includes APIs for C, C++, Fortran, and Java, and third-party bindings exist for other languages, such as h5py for Python. This allows developers to work with HDF5 files and data using their preferred programming language.