In modern computing, managing system resources efficiently matters as much as the software that runs on them. Containerization addresses part of that problem by offering a flexible, scalable way to deploy and manage applications.

One containerization platform that has changed how applications are built, packaged, and distributed is Docker. By running applications inside lightweight, isolated containers that carry their own dependencies, Docker avoids many of the dependency and compatibility problems of traditional deployment methods. But what about caching? How can we make data retrieval in a containerized environment faster and more efficient?

Caching is a fundamental component of any high-performance system. This article looks at one caching tool in particular: nscd, the Name Service Cache Daemon. Although often overlooked, nscd caches the results of name service lookups, including hostname resolution as well as user and group queries. Answering repeated requests from its cache avoids repeating the underlying query, which can shorten response times when the same names are looked up often.

In addition to nscd, we look at the hosts file (/etc/hosts), which is present on every Linux system. Strictly speaking it is a static table that maps IP addresses to hostnames rather than a cache, but it can serve a similar purpose: a name listed there is typically resolved locally without a DNS query. The trade-off is that entries must be maintained by hand and become wrong if the address behind a name changes.

The sections below cover the basics of caching, what nscd and the hosts file do, and how both can be used with Docker containers on Linux to improve lookup performance.

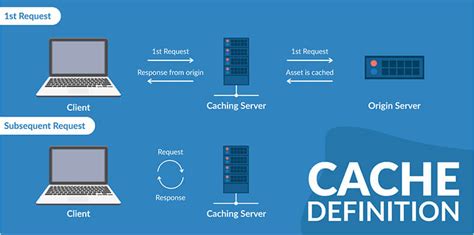

Exploring the Basics of Caching

In this section, we delve into the fundamental concepts of caching. We explore the importance and benefits of caching in a technological context, emphasizing its role in optimizing system performance and improving user experience.

Caching is a powerful technique utilized in various computing environments to expedite data retrieval and enhance responsiveness. By storing frequently accessed data in a dedicated cache, redundant requests to the primary data source can be minimized, resulting in faster access times and reduced latency.
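The idea can be sketched in a few lines of Python. This is a minimal illustration, not how nscd or any particular library is implemented: the class name `TTLCache`, the `fetch` callback, and the injectable `clock` are all hypothetical names chosen for this example.

```python
import time
from typing import Callable, Dict, Tuple


class TTLCache:
    """Tiny time-to-live cache: entries expire `ttl` seconds after being stored."""

    def __init__(self, fetch: Callable[[str], str], ttl: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch    # slow lookup against the primary data source
        self._ttl = ttl
        self._clock = clock    # injectable so expiry can be exercised in tests
        self._entries: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str) -> str:
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and cached[0] > now:
            return cached[1]   # hit: the primary source is not contacted
        value = self._fetch(key)   # miss or expired entry: ask the source
        self._entries[key] = (now + self._ttl, value)
        return value
```

A second `get` for the same key within the TTL returns the stored value without calling `fetch`; once the TTL has passed, the next `get` goes back to the source and refreshes the entry.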

With these principles in mind, it is easier to see where nscd and the hosts file fit on a Linux host and inside Docker containers: both allow name lookups to be answered locally instead of sending each one to a remote server.

Caches are commonly held in memory or on disk. Whichever is used, two questions have to be answered when implementing one: when a cached entry is invalidated, and how far it may drift from the primary source before it is refreshed (cache consistency).

By the end of this section, readers will have a solid understanding of the underlying principles of caching and how it can be leveraged to optimize various systems, including Linux and Docker.

The Role of nscd in Enhancing Performance

nscd, the Name Service Cache Daemon, is a glibc daemon that caches the results of name service requests. It keeps separate caches for databases such as passwd, group, and hosts, each with configurable lifetimes for successful and failed lookups. Later requests for the same name are answered from the cache, which reduces the time and resources spent on repeated lookups. The benefit is most noticeable when the backing service is slow or remote, such as LDAP or a distant DNS server.
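To make the per-database settings concrete, the sketch below parses a small fragment in the style of /etc/nscd.conf. The option names (`enable-cache`, `positive-time-to-live`, `negative-time-to-live`) are taken from the nscd.conf format; the values are illustrative only, and the helper `parse_nscd_conf` is a hypothetical function written for this example.

```python
from typing import Dict

SAMPLE_NSCD_CONF = """\
# Illustrative values only; not a recommended configuration.
threads                 4
enable-cache            hosts   yes
positive-time-to-live   hosts   3600
negative-time-to-live   hosts   20
enable-cache            passwd  yes
positive-time-to-live   passwd  600
"""


def parse_nscd_conf(text: str) -> Dict[str, Dict[str, str]]:
    """Collect per-service settings as {service: {option: value}}."""
    settings: Dict[str, Dict[str, str]] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()   # drop comments and blank lines
        fields = line.split()
        if len(fields) != 3:                  # skip global options such as "threads 4"
            continue
        option, service, value = fields
        settings.setdefault(service, {})[option] = value
    return settings


conf = parse_nscd_conf(SAMPLE_NSCD_CONF)
print(conf["hosts"])
```

The positive time-to-live applies to successful lookups and the negative one to failed lookups, so a short negative value limits how long a "not found" answer is remembered.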

Understanding the Hosts File in Linux

In this section, we look at the hosts file in Linux. The hosts file, /etc/hosts, is a plain text file that maps IP addresses to hostnames so that the system can resolve those names locally. On most systems it is consulted before DNS, in the order set by the hosts line in /etc/nsswitch.conf. Understanding how it works gives you more control over name resolution and can remove DNS round trips for names you look up often.

1. Introduction to the hosts file

- Explanation of the purpose and importance of the hosts file

- How it differs from DNS servers and why it is useful in certain scenarios

2. Structure and syntax

- Overview of the file format and its components

- Understanding the role of IP addresses and hostnames in the file

- Rules for formatting entries and comments

3. Editing the hosts file

- Location of the hosts file (/etc/hosts on Linux distributions)

- Using text editors to modify the file

- Permissions and security considerations

4. Common use cases and best practices

- Mapping local hostnames for development and testing purposes

- Blocking access to specific websites or malicious domains

- Overriding DNS resolution for faster and more reliable network connections

5. Troubleshooting and potential issues

- Debugging common mistakes and typos in the hosts file

- Dealing with conflicts between the hosts file and DNS servers

- Restoring the default hosts file in case of errors

By delving into the intricacies of the hosts file in Linux, you can gain a deeper understanding of how network name resolution works and how to utilize this knowledge to optimize your Linux system's performance and security.
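The file format described in the outline above is simple enough to parse in a few lines: each entry is an IP address followed by one or more names, and `#` starts a comment. The sketch below assumes that format; the sample entries use reserved documentation addresses and a made-up hostname, and `parse_hosts` is a hypothetical helper, not a system API.

```python
from typing import Dict, List

SAMPLE_HOSTS = """\
127.0.0.1   localhost
::1         localhost ip6-localhost
# Hypothetical development entry
192.0.2.10  api.example.test api   # canonical name, then an alias
"""


def parse_hosts(text: str) -> Dict[str, List[str]]:
    """Map each hostname or alias to the list of addresses that name it."""
    table: Dict[str, List[str]] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()   # strip comments and whitespace
        if not line:
            continue
        address, *names = line.split()        # first field is the address
        for name in names:
            table.setdefault(name.lower(), []).append(address)
    return table


hosts = parse_hosts(SAMPLE_HOSTS)
print(hosts["api.example.test"])
```

Running the same function on the contents of a real /etc/hosts is a quick way to spot typos or duplicate names when troubleshooting.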

Leveraging Docker and nscd for Enhancing Caching Performance

This section looks at how Docker containers and nscd (Name Service Cache Daemon) can be used together to improve caching performance. Combining containerization with the caching capabilities of nscd can improve overall system efficiency, reduce load on the network, and shorten response times for frequently accessed resources.

| Subtopic | Description |
| --- | --- |
| Introduction to Docker | Explore the key concepts and benefits of Docker for application deployment and management. Discover how Docker containers isolate applications and their dependencies, providing a lightweight and portable environment for deploying caching solutions. |
| The Role of Caching in Performance Optimization | Understand the significance of caching in improving overall system performance. Learn how caching mechanisms can minimize redundant requests, reduce latency, and enhance the scalability of applications. |
| Understanding nscd and Its Functionality | Examine the purpose and features of nscd (Name Service Cache Daemon). Gain insights into how nscd caches frequently accessed information such as host lookups, user (passwd) entries, and group information, thereby accelerating subsequent lookup requests. |
| Integration of Docker and nscd | Discover the integration possibilities of Docker containers with nscd to optimize caching performance. Learn how to configure Dockerized applications to take advantage of nscd for efficient caching of frequently requested data. |
| Performance Benchmarks and Case Studies | Explore real-world performance benchmarks and case studies highlighting the effectiveness of leveraging Docker and nscd for improved caching performance. Gain insights into the quantifiable benefits that organizations can achieve by adopting this caching approach. |
| Best Practices and Considerations | Obtain best practices and considerations for effectively implementing Docker and nscd caching solutions. Understand the potential challenges and how to overcome them while ensuring the security and integrity of cached data. |

By employing Docker and nscd for caching optimization, organizations can achieve significant performance enhancements, increase application responsiveness, and deliver an improved user experience. This section will provide a comprehensive guide to leverage these technologies effectively and maximize the benefits of caching in Linux environments.
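One concrete integration point is Docker's `--add-host` option for `docker run`, which adds a hostname-to-address entry to the container's /etc/hosts. The sketch below only builds the argument list and does not start a container; the function name `docker_run_argv`, the image name, and the address are hypothetical values chosen for illustration.

```python
from typing import Dict, List


def docker_run_argv(image: str, extra_hosts: Dict[str, str]) -> List[str]:
    """Build a `docker run` argument list with one --add-host per mapping."""
    argv = ["docker", "run", "--rm"]
    for hostname, address in sorted(extra_hosts.items()):
        argv += ["--add-host", f"{hostname}:{address}"]   # host:ip pair
    argv.append(image)
    return argv


# Hypothetical image name and address, for illustration only.
argv = docker_run_argv("example/app:latest", {"db.internal": "192.0.2.20"})
print(" ".join(argv))
```

The resulting list can be passed to `subprocess.run(argv, check=True)` on a machine with Docker installed. As with any hosts entry, the mapping is static, so it suits addresses that rarely change.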

Improving Performance and Efficiency: Effective Strategies for Caching in Linux Docker Environments

In order to optimize the performance and efficiency of applications running in Linux Docker environments, implementing caching mechanisms is crucial. Caching plays a vital role in reducing the response time of an application by storing frequently accessed data and serving it quickly when requested.

1. Employing Caching for Enhanced Performance

By utilizing caching techniques in Linux Docker environments, applications can experience significant improvements in performance. Caching can reduce the time taken to fetch data from external sources or execute expensive computational tasks, therefore enhancing the overall response time of the application.

2. Choosing the Appropriate Caching Mechanism

It is important to carefully select the optimal caching mechanism for your specific Linux Docker environment. There are various options available such as in-memory caching, file-based caching, and distributed caching systems. Each mechanism offers its own set of advantages and limitations, making it essential to evaluate and choose the most suitable one.

3. Implementing Caching Layers in Docker Containers

One effective strategy for caching in Linux Docker environments is to implement caching layers within Docker containers. This approach allows for isolating and optimizing caching processes within individual containers, ensuring better resource utilization and minimizing overall system overhead.

4. Leveraging Caching Frameworks and Libraries

Incorporating well-established caching frameworks and libraries can greatly simplify the implementation and management of caching in Linux Docker environments. These frameworks provide ready-to-use functionalities, advanced caching strategies, and robust performance optimizations, enabling developers to efficiently leverage caching benefits.

5. Leveraging Caching in Container Orchestration

Container orchestration platforms such as Kubernetes can deploy, configure, and scale cache services alongside the applications that use them. By using these capabilities, administrators can run caching layers as ordinary workloads within Linux Docker environments.

6. Regular Monitoring and Fine-tuning

To ensure the continued effectiveness of caching in Linux Docker environments, it is essential to regularly monitor and fine-tune the caching configuration. Monitoring key performance metrics, analyzing cache hit rates, and adjusting caching strategies can help optimize the cache utilization and further improve application performance.
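Cache hit rate is the simplest of these metrics to compute: hits divided by total requests. The sketch below uses Python's `functools.lru_cache`, whose `cache_info()` reports hit and miss counters, as a stand-in for whatever cache is actually deployed. `slow_lookup` is a placeholder that fabricates an address from the name length; a real implementation would query a resolver or another backend.

```python
from functools import lru_cache


def slow_lookup(name: str) -> str:
    """Stand-in for an expensive lookup; returns a made-up address."""
    return "192.0.2." + str(len(name))


@lru_cache(maxsize=256)
def cached_lookup(name: str) -> str:
    return slow_lookup(name)


def hit_rate() -> float:
    """Fraction of calls served from the cache so far (0.0 when unused)."""
    info = cached_lookup.cache_info()
    total = info.hits + info.misses
    return info.hits / total if total else 0.0


for name in ["a.test", "a.test", "b.test", "a.test"]:
    cached_lookup(name)
print(f"hit rate: {hit_rate():.0%}")
```

A persistently low hit rate suggests the cache is too small, the time-to-live is too short, or the workload rarely repeats lookups, in which case caching adds little. For nscd itself, `nscd -g` prints the daemon's own statistics.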

In conclusion, implementing efficient caching mechanisms in Linux Docker environments is crucial for enhancing application performance, reducing response times, and improving overall system efficiency. By adopting best practices and leveraging appropriate caching techniques, developers and administrators can unlock the full potential of caching in their Dockerized applications.

FAQ

What is Docker on Linux?

Docker is an open-source platform that allows developers to automate the deployment and management of applications within containers. On Linux, each container is an isolated, lightweight environment that shares the host's kernel, so an application can run without a complete guest operating system of its own.

How does caching work in Linux?

Caching in Linux involves storing frequently accessed data in a fast and easily accessible location, such as memory or disk, to improve performance. When data is requested, the system first checks whether it is already in the cache and, if so, serves it from there instead of fetching it from the slower source again, resulting in faster response times.

What is nscd in Linux and how does it optimize caching?

nscd stands for Name Service Cache Daemon. It is a system daemon, shipped with glibc, that caches the results of name service requests such as hostname, user, and group lookups. Answering repeated requests from its cache avoids repetitive and time-consuming lookups, which can noticeably improve performance when the underlying name service is slow.