As applications and their data spread across more environments, businesses need simple, reliable ways to manage and access their files. Containerization has become a widely adopted strategy for teams seeking agility, scalability, and better use of hardware resources.

By combining Docker with Windows, organizations can package applications into containers that run in isolated environments. Containers are a lightweight way to package and deploy applications, letting developers ship software quickly and reliably across development, test, and production environments.

Much of the practical value of Docker and Windows containers, however, depends on how easily files can be moved into, out of, and between these environments. Choosing the right file access approach removes a common source of friction and makes containers easier to use for day-to-day development and collaboration.

Understanding the Concept of Containerization

Containerization has become a popular way to simplify the deployment, scaling, and management of applications. It provides an efficient, lightweight way to run applications consistently across different environments and reduces conflicts between dependencies.

Containerization refers to the encapsulation of an application and its dependencies, including the runtime environment, libraries, and configuration, into a self-contained unit known as a container. Containers are isolated from one another and from the host system, and they can be deployed without complex setup on any host whose kernel matches the container's operating system: Windows containers run on Windows hosts, while Linux containers run on Linux hosts (or, on Windows and macOS, inside a Linux virtual machine such as the one Docker Desktop manages).

Because the application is abstracted from the underlying infrastructure, it behaves the same way wherever the container runs, which makes it portable and easy to scale. Rather than installing and configuring each application directly on individual physical or virtual machines, developers ship a single image that contains everything the application needs.

Docker, one of the most popular containerization platforms, streamlines the process of creating, distributing, and running containers. It provides a standardized format for packaging applications and their dependencies, along with tools for managing the lifecycle of containers. With Docker, developers can easily build, deploy, and scale applications, enabling faster and more efficient software delivery.
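As a rough illustration of that lifecycle, the sketch below assembles the Docker CLI commands for building an image, running it as a container, and then stopping and removing it. The tag `myapp:dev` and container name `myapp-1` are hypothetical, and the helper only builds argument lists; on a machine with Docker installed you could pass each one to `subprocess.run`.

```python
from typing import List


def lifecycle_commands(tag: str, name: str, context: str = ".") -> List[List[str]]:
    """Return docker CLI invocations for a basic container lifecycle.

    Nothing is executed here; each inner list is an argv suitable for
    subprocess.run(cmd, check=True) on a host with Docker installed.
    """
    return [
        ["docker", "build", "-t", tag, context],        # package the app into an image
        ["docker", "run", "-d", "--name", name, tag],   # start a detached container
        ["docker", "stop", name],                        # stop the running container
        ["docker", "rm", name],                          # remove the stopped container
    ]


if __name__ == "__main__":
    # Print the commands instead of running them.
    for cmd in lifecycle_commands("myapp:dev", "myapp-1"):
        print(" ".join(cmd))
```

Returning plain lists keeps the sketch testable without Docker and avoids shell-quoting issues with Windows paths.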

Value of Employing Docker Windows Containers

Unlocking the Potential for Seamless Application Deployment

Businesses that want faster, more predictable software delivery increasingly turn to Docker Windows containers. These containers package an application and its dependencies into a standalone, lightweight unit, which streamlines the application lifecycle and makes deployment across Windows environments faster and more repeatable.

Bolstering Portability and Flexibility

By using Docker Windows containers, organizations gain portability and flexibility in how they deploy applications. Containers encapsulate the runtime environment, including application code, libraries, and dependencies, so the application behaves consistently regardless of how the host is configured. Teams can therefore deploy the same image to different Windows servers with fewer compatibility issues and a shorter time-to-market. One caveat applies: with process isolation, the Windows version of the container image must be compatible with the host's Windows version, so images are usually built on base images that match the target hosts.

Enhancing Resource Efficiency

Docker Windows containers use resources efficiently because, when run with process isolation, they share the host's kernel instead of each booting a full operating system. (Hyper-V isolation instead gives each container its own lightweight virtual machine and kernel, trading some density for stronger isolation.) Companies can therefore host more applications per server, which can reduce costs by requiring fewer physical or virtual machines.
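On Windows hosts, the `--isolation` option of `docker run` selects the isolation mode (`process`, `hyperv`, or `default`). The sketch below builds a `docker run` command with an explicit mode; the helper name is hypothetical, the image name is supplied by the caller, and which modes actually work depends on your Windows edition and Docker version.

```python
from typing import List

# Isolation values accepted by `docker run --isolation` on Windows hosts.
VALID_ISOLATION = {"default", "process", "hyperv"}


def run_with_isolation(image: str, isolation: str = "process") -> List[str]:
    """Build a `docker run` argv with an explicit Windows isolation mode."""
    if isolation not in VALID_ISOLATION:
        raise ValueError(f"unsupported isolation mode: {isolation!r}")
    # --rm removes the container when it exits, keeping the host tidy.
    return ["docker", "run", "--rm", f"--isolation={isolation}", image]
```

For example, `run_with_isolation("mcr.microsoft.com/windows/nanoserver:ltsc2022", "hyperv")` yields a command that starts the container in its own lightweight VM rather than sharing the host kernel.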

Streamlining Collaboration and DevOps Practices

Docker Windows containers foster collaboration and align with modern DevOps practices. Encapsulating applications and dependencies within containers enables seamless sharing of development environments, ensuring consistency across teams. Moreover, the containerization approach facilitates the creation of reproducible builds, making it easier for developers and operations teams to collaborate and deploy applications reliably across development, testing, and production environments.

Strengthening Security and Isolation

Docker Windows containers isolate applications from one another and from the host. Packaging an application in a container limits how far a vulnerability or dependency conflict can spread. Containerized applications can also run with limited privileges, reducing the attack surface and the impact of a security breach. The strength of the boundary depends on the isolation mode: process-isolated containers share the host kernel, so Hyper-V isolation is generally recommended when running untrusted code.
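Windows base images include a low-privilege account, `ContainerUser`, alongside `ContainerAdministrator`. The sketch below uses Docker's `--user` option so the container process runs under the less privileged account. The helper is hypothetical, the image name is a placeholder, and you should confirm that the account exists in the image you use.

```python
from typing import List, Optional


def run_as_user(image: str, user: str = "ContainerUser",
                command: Optional[List[str]] = None) -> List[str]:
    """Build a `docker run` argv that starts the container process as `user`."""
    argv = ["docker", "run", "--rm", "--user", user, image]
    if command:
        # Optional command (and arguments) to run inside the container.
        argv.extend(command)
    return argv
```

Passing `command=["cmd", "/c", "whoami"]` is a quick way to check which account the process actually runs as.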

Driving Scalability and Manageability

With Docker Windows containers, organizations can scale their applications up or down based on demand, allocating resources dynamically as requirements change. Orchestration platforms such as Kubernetes, which supports Windows nodes, simplify deploying and managing containers at scale.

In conclusion, adopting Docker Windows containers gives organizations streamlined application deployment, portability, resource efficiency, support for collaborative DevOps practices, application isolation, and manageable scaling. Used well, this technology provides a robust toolset for optimizing the software development lifecycle.

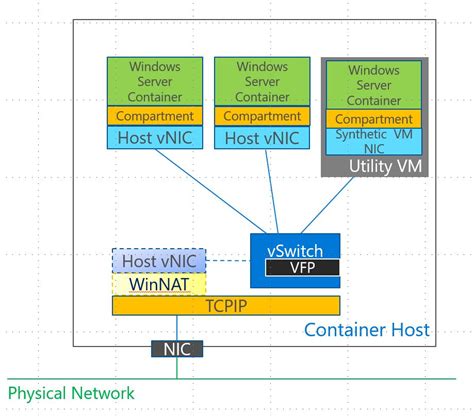

Exploring the Pathways to Achieve Connectivity with the Resources Inside a Windows Container

Efficient workflows depend on knowing how to connect external file systems with Windows containers. This section covers the main techniques for reaching the files inside a Windows container so that developers can read and modify them from outside.

Utilizing Volumes for File Accessibility in Docker Windows Containers

This section explains how to use volumes to work with data in a Windows container running on Docker. Volumes give containers persistent storage that Docker manages, that survives container removal, and that can be shared between containers, which avoids copying files in and out of each container by hand.

A volume provides a dedicated storage location outside the container's writable layer. You create a named volume and mount it at a path inside the container; files written to that path persist after the container is removed and can be mounted into other containers. Named volumes are stored under Docker's own data directory on the host, so when the container needs to see a specific host folder, use a bind mount instead, as described below.
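A minimal sketch of that workflow: create a named volume with `docker volume create`, then mount it into a container with `--mount type=volume`. The helper, the volume name `app-data`, the target `C:\data`, and the image are hypothetical; the function only returns the commands.

```python
from typing import List


def volume_commands(volume: str, target: str, image: str) -> List[List[str]]:
    """Return argv lists that create a named volume and mount it into a container."""
    create = ["docker", "volume", "create", volume]
    # type=volume tells Docker to mount a named, Docker-managed volume at `target`.
    run = ["docker", "run", "--rm",
           "--mount", f"type=volume,source={volume},target={target}",
           image]
    return [create, run]
```

Running the second command again with a different image mounts the same data, which is how volumes let several containers share files.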

Benefits of Utilizing Volumes

Volumes simplify file sharing and collaboration: several containers can mount the same volume, and data written to it outlives any single container. Because a volume is storage on the host that the container reads and writes directly, no separate synchronization step is needed. Volumes are also managed by Docker rather than exposed at an arbitrary host path, which keeps containerized data separate from the rest of the host's file system.

Volumes also offer some control over access. A volume can be mounted read-only into containers that only need to consume its data, and the underlying files remain subject to NTFS access control lists. In addition, volumes fit into existing workflows and tools without complex workarounds or changes to established development practices.
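The simplest access restriction Docker itself applies to a mount is read-only mode. The sketch below builds the value for `--mount` and appends the `readonly` option when requested; the helper name is hypothetical, it handles only `bind` and `volume` mounts, and it does not escape commas inside paths.

```python
def mount_spec(mount_type: str, source: str, target: str, readonly: bool = False) -> str:
    """Build the value for Docker's --mount flag."""
    if mount_type not in ("bind", "volume"):
        raise ValueError("this sketch only handles bind and volume mounts")
    parts = [f"type={mount_type}", f"source={source}", f"target={target}"]
    if readonly:
        # The container can read files under `target` but not modify them.
        parts.append("readonly")
    return ",".join(parts)
```

For instance, `["docker", "run", "--mount", mount_spec("volume", "app-data", "C:\\data", readonly=True), "image-name"]` gives a consumer container read-only access to the shared volume.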

Understanding File Accessibility in Windows Containers with Bind Mounts

Bind mounts are a common way to manage an application's data in Windows containers. A bind mount links a directory on the host machine to a path inside the container, giving straightforward access to the data without complex configuration.

With a bind mount, the container reads and writes the host's own files: the host directory appears inside the container as if it were part of the container's file system. Windows containers support bind-mounting directories but not individual files, so to expose a single configuration file, mount the directory that contains it. Developers can then retrieve or modify the data from either side, which streamlines development and deployment.
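The sketch below builds a bind-mount `docker run` command and, before doing so, applies two checks that, as we understand Docker's Windows mount validation, Docker also enforces: the host source must be an existing directory, and the destination cannot be the root of the container's `C:` drive. The helper name and image are hypothetical.

```python
import ntpath
import os
from typing import List


def bind_mount_run(host_dir: str, container_dir: str, image: str) -> List[str]:
    """Build a `docker run` argv that bind-mounts host_dir at container_dir."""
    if not os.path.isdir(host_dir):
        # Windows containers bind-mount directories, so require an existing one.
        raise FileNotFoundError(f"host directory not found: {host_dir}")
    # normcase lowercases and converts "/" to "\", so "C:/", "C:\" and "C:" all match.
    if ntpath.normcase(container_dir).rstrip("\\") == "c:":
        raise ValueError("cannot bind-mount over C:\\ in the container")
    return ["docker", "run", "--rm",
            "--mount", f"type=bind,source={host_dir},target={container_dir}",
            image]
```

Failing early on the host gives a clearer message than waiting for `docker run` to reject the mount.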

Whether you are sharing source code, supplying configuration files, or keeping persistent data, bind mounts simplify interaction between the host and the container. Understanding how they behave in Windows containers helps developers make full use of them.

Effective Strategies for Managing File Access in Docker Windows Containers

Managing file access efficiently is a critical part of running Docker containers on Windows. The following strategies help optimize file access and avoid common disruptions.

1. Leveraging Mount Points: Mount points map specific host directories or Docker volumes into the container at chosen paths. Choosing them deliberately, for example one mount for source code and another for application data, keeps file access predictable and efficient.

2. Implementing Shared Volumes: Mounting the same host directory (a bind mount) or the same named volume into several containers lets the host and every container read and modify the same files. This is convenient for collaboration and removes the need to keep separate copies in sync.

3. Utilizing Container APIs: When a directory was not mounted in advance, Docker's own interfaces still give access to the container's file system. The `docker cp` command and the corresponding archive endpoints of the Docker Engine API copy files and directories between the host and a container, and `docker exec` runs commands inside a running container, for example to list, edit, or delete files. Copying into or out of a running Hyper-V-isolated container may not be supported, in which case the container has to be stopped first.

4. Optimizing Network Storage: When files live on shared storage such as a NAS or SAN, the storage can be made available to containers, for example by mapping an SMB share on the host (Windows supports this through SMB global mappings) and mounting the resulting path into the container. Because network storage adds latency, a high-performance storage system and a fast, reliable network path help limit bottlenecks.

5. Employing Caching Mechanisms: Developers can cache frequently accessed files in the container's local storage, or in a dedicated caching layer, to avoid repeated network access. This speeds up file retrieval and lowers latency, at the cost of refreshing cached copies when the source files change.
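The caching strategy above can be sketched as a minimal read-through cache: the first request for a file copies it from a slower source directory (for example, a network share mounted into the container) into a local cache directory, and later requests use the local copy. The class name and directories are hypothetical, and the sketch deliberately ignores invalidation, so a changed source file stays stale until its cached copy is deleted.

```python
import os
import shutil


class ReadThroughCache:
    """Serve files from a local cache directory, filling it from a source directory on a miss."""

    def __init__(self, source_dir: str, cache_dir: str) -> None:
        self.source_dir = source_dir
        self.cache_dir = cache_dir
        self.hits = 0
        self.misses = 0
        os.makedirs(cache_dir, exist_ok=True)

    def path_for(self, relative_path: str) -> str:
        """Return a local path for relative_path, copying it into the cache if needed."""
        cached = os.path.join(self.cache_dir, relative_path)
        if os.path.exists(cached):
            self.hits += 1
            return cached
        self.misses += 1
        os.makedirs(os.path.dirname(cached), exist_ok=True)
        # copy2 also preserves timestamps, which a staleness check could compare later.
        shutil.copy2(os.path.join(self.source_dir, relative_path), cached)
        return cached

    def read_bytes(self, relative_path: str) -> bytes:
        """Read a file's contents through the cache."""
        with open(self.path_for(relative_path), "rb") as f:
            return f.read()
```

Keeping the cache directory inside the container's local storage means the first read pays the network cost and later reads do not.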

By implementing these best practices for file access in Docker Windows containers, developers can ensure smooth operations and efficient handling of files within the container environment. Leveraging strategies such as mount points, shared volumes, container APIs, network storage optimization, and caching mechanisms empowers users to seamlessly interact with files, fostering a productive and streamlined development process.

FAQ

How can I access files in a Docker Windows container?

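The most common approach is to mount storage into the container. A bind mount maps a host directory to a directory inside the container, while a named volume provides storage that Docker manages. Either way, files under the mounted path can be read and modified from inside the container, and with a bind mount they can also be edited directly on the host.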

What is the process for mapping a host directory to a directory inside a Docker Windows container?

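To map a host directory to a directory inside a Docker Windows container, use the `-v` (`--volume`) or `--mount` flag with `docker run`. For example, `docker run -v C:/host-directory:C:/container-directory image-name` maps `C:/host-directory` on the host to `C:/container-directory` inside the container; the equivalent `--mount` form is `docker run --mount type=bind,source=C:/host-directory,target=C:/container-directory image-name`. Backslash paths such as `C:\host-directory` work as well. The host directory must already exist, and the target cannot be the root of the container's `C:` drive.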
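Because Windows paths contain a drive-letter colon, the `-v` value `C:/host-directory:C:/container-directory` has more colons than the simple `source:target` pattern suggests. The hypothetical parser below shows how such a value splits into source, target, and an optional `ro`/`rw` mode. It is a simplified illustration, not Docker's own parser, which also accepts named volumes, named pipes, and other path forms.

```python
import re
from typing import NamedTuple, Optional

# Simplified pattern for a Windows -v spec: <drive path>:<drive path>[:ro|:rw].
_WIN_SPEC = re.compile(
    r"^(?P<source>[A-Za-z]:[\\/][^:]*)"
    r":(?P<target>[A-Za-z]:[\\/][^:]*)"
    r"(?::(?P<mode>ro|rw))?$"
)


class VolumeSpec(NamedTuple):
    source: str
    target: str
    mode: Optional[str]


def parse_windows_v_spec(spec: str) -> VolumeSpec:
    """Split a host:container[:mode] spec whose paths both start with drive letters."""
    match = _WIN_SPEC.match(spec)
    if match is None:
        raise ValueError(f"not a drive-letter bind spec: {spec!r}")
    return VolumeSpec(match["source"], match["target"], match["mode"])
```

Each path is anchored on a drive letter followed by `:` and a slash, which is what lets the drive colons be told apart from the separator colons.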

Can I modify files inside a Docker Windows container and see the changes on my host machine?

Yes. A bind mount does not copy files: the host and the container work on the same directory, so changes made on either side are visible on the other immediately. This applies only to paths under the mounted directory; changes elsewhere in the container are written to the container's own writable layer and are not visible on the host.

Is it possible to access files from a Docker Windows container on a different machine?

Yes, but a mount alone does not make this possible. Windows containers do not support Docker's `host` network mode, and their default network driver is `nat` rather than `bridge`. To let another machine reach files in a container, run a service inside the container that serves them (for example, a web server), publish its port with `-p host-port:container-port`, and allow incoming connections on that host port in the Windows Firewall. Alternatively, keep the files on a network share that both the container host and the other machine can access.
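A minimal sketch of the port-publishing step: the helper below builds a detached `docker run` command that publishes a container port on the host with `-p`. The helper name, the ports (8080 on the host, 80 in the container), and the image are hypothetical, and the firewall rule is a separate step that is not shown.

```python
from typing import List


def publish_command(image: str, host_port: int, container_port: int) -> List[str]:
    """Build a `docker run` argv that publishes container_port on host_port."""
    for port in (host_port, container_port):
        if not 1 <= port <= 65535:
            raise ValueError(f"port out of range: {port}")
    # -p host:container forwards traffic arriving at the host port into the container.
    return ["docker", "run", "-d", "-p", f"{host_port}:{container_port}", image]
```

Other machines would then connect to the host's address on port 8080, not to the container's internal `nat` address.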