Developers need reliable ways to build and deploy their software. Containerization has changed how applications are packaged and shipped: an application and everything it needs to run are bundled into one unit that behaves the same on a developer laptop, a test server, and a production host.

When a solution contains several .NET projects, a flexible and reliable way to build and deploy them becomes essential. Docker addresses this by letting developers package each application together with its dependencies into a lightweight, portable container.

The challenge lies in managing and orchestrating multiple interconnected .NET projects within a Docker environment so that they build, start, and communicate with each other reliably. This article explores strategies and best practices for containerizing and deploying such projects.

Throughout this article, we look at techniques for streamlining development workflows with containers, from using Docker Compose to manage multi-container setups to integrating continuous integration and delivery pipelines.

Overview of Containerization Technology

This section gives an overview of containerization: the general idea behind it, why it matters, and the benefits it offers compared with traditional deployment.

Table 1: Comparison of Traditional and Containerized Approaches

| Traditional Approach | Containerized Approach |
|---|---|
| Depends on a specific operating system and its configuration | Runs consistently on any host with a compatible container runtime and kernel |
| Often a monolithic architecture | Well suited to modular, independently scalable services |
| Full virtual machines, each running its own guest operating system | Containers that share the host operating system's kernel |
| Manual, error-prone deployment and configuration | Repeatable, scriptable deployment from versioned images |

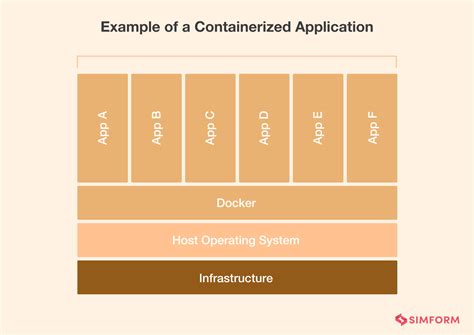

Containerization technology provides a standardized and efficient way to package and distribute applications along with their dependencies, enabling seamless deployment across various environments. By encapsulating applications within lightweight, isolated containers, developers can ensure consistent execution across different platforms without worrying about the intricacies of the underlying system.
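For a .NET project, this packaging is usually described in a Dockerfile. The Python helper below is a minimal sketch that renders a multi-stage Dockerfile for one ASP.NET Core project: the first stage restores and publishes with the .NET SDK image, and the second copies only the published output into the smaller ASP.NET runtime image. The helper name `render_dockerfile`, the `<project>/<project>.csproj` folder layout inside the build context, the example project `Orders.Api`, and the default 8.0 image tags are all assumptions for illustration.

```python
def render_dockerfile(project: str, dotnet_version: str = "8.0") -> str:
    """Render a multi-stage Dockerfile for one ASP.NET Core project.

    Assumes (hypothetically) that the build context contains
    <project>/<project>.csproj and that the entry assembly is <project>.dll.
    """
    sdk = f"mcr.microsoft.com/dotnet/sdk:{dotnet_version}"
    runtime = f"mcr.microsoft.com/dotnet/aspnet:{dotnet_version}"
    lines = [
        # Stage 1: restore and publish using the full SDK image.
        f"FROM {sdk} AS build",
        "WORKDIR /src",
        # Copy only the project file first so the restore layer stays cached
        # until the project's dependencies change.
        f"COPY {project}/{project}.csproj {project}/",
        f"RUN dotnet restore {project}/{project}.csproj",
        "COPY . .",
        f"RUN dotnet publish {project}/{project}.csproj -c Release -o /app/publish",
        # Stage 2: ship only the published output on the smaller runtime image.
        f"FROM {runtime} AS final",
        "WORKDIR /app",
        "COPY --from=build /app/publish .",
        f'ENTRYPOINT ["dotnet", "{project}.dll"]',
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile("Orders.Api"))  # hypothetical project name
```

Copying the `.csproj` file and running `dotnet restore` before copying the rest of the source lets Docker reuse the cached restore layer between builds. A project with `ProjectReference` entries would also need those referenced project files copied before the restore step.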

Containerization also encourages breaking applications into smaller, manageable components. Containers pair naturally with microservice architectures, in which each service is developed, tested, and deployed independently; a monolith can be containerized too, but packaging services separately is what makes independent release cycles possible. This modular approach can shorten development cycles and make it easier for teams to work in parallel.

Traditional virtualization also carries overhead: each virtual machine runs a full guest operating system with its own kernel. Containers instead share the host operating system's kernel and isolate processes with kernel features such as namespaces and control groups (cgroups), so they use less memory and disk and typically start in seconds rather than minutes.

Container tooling also makes deployment and configuration repeatable. The same image moves through building, testing, deployment, and scaling, and each step can be scripted, which reduces the time and effort needed to go from development to production.

In conclusion, containerization provides a portable, modular, and efficient way to package and deploy applications. Its low overhead and repeatable deployments make it a strong choice for developers who want to improve productivity and scalability while keeping behavior consistent across environments.

Understanding Docker and its role in software development

Docker is a widely used platform for building and running containers. Unlike a virtual machine platform, it does not emulate hardware; instead, it packages an application and its dependencies into a portable, isolated environment called a container, which can be deployed consistently across computing environments.

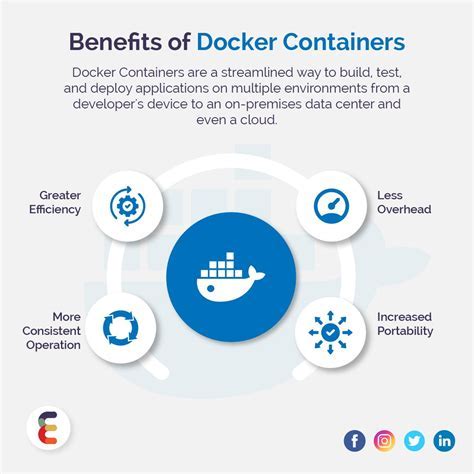

Docker lets developers build, test, and deploy applications quickly and reliably. Unlike virtual machines, Docker containers are lightweight because they share the host machine's operating system kernel, which keeps resource usage low. Each container is isolated and self-contained, so an application's dependencies and configuration stay the same across environments, regardless of the underlying infrastructure.

The versatility of Docker extends beyond its impact on development workflows, as it also facilitates better collaboration among team members. With Docker, developers can share their applications and environments effortlessly, eliminating the hassle of setting up complex development environments on each team member's machine. By enabling consistency and reproducibility, Docker enhances collaboration and reduces the likelihood of environment-related issues during development and deployment.

Docker also makes applications highly portable. Developers can build a container image on a local machine, push it to a registry, and run the same image on cloud providers such as AWS or Azure or on on-premises servers, provided the host's CPU architecture matches the image (or a multi-architecture image is used). This makes it straightforward to move applications between environments for scaling, debugging, and deployment.

In conclusion, Docker simplifies building, testing, and deploying applications. Its benefits include efficient resource utilization, consistency across environments, easier collaboration, and portability. By using Docker, developers can streamline their workflows and deliver software faster and more reliably.

Advantages of Utilizing Docker for Deploying Multiple .NET Core Applications

In the context of managing and deploying multiple .NET Core applications, Docker presents several compelling benefits that enhance efficiency, scalability, and overall effectiveness in the software development lifecycle.

- Enhanced Portability: Docker packages .NET Core applications, along with their dependencies and runtime environment, into self-contained containers. The same image runs on any host with a compatible container runtime, such as a Linux server or a Windows or macOS machine running Docker Desktop, which simplifies migration and reduces compatibility issues.

- Improved Scalability: Containers let multiple .NET Core applications share hardware efficiently. Because each component runs in its own container, individual parts of a complex architecture can be scaled independently, so resources go where the load actually is.

- Streamlined Development Workflow: Docker's containerization approach promotes consistency and reproducibility in the development process. By packaging applications and their dependencies as containers, developers can build, test, and debug code in a consistent environment, irrespective of the underlying host system.

- Efficient Isolation: Docker containers isolate processes, file systems, and networks, which improves security and reduces conflicts between different .NET Core projects. Because each project ships with its own runtime and libraries, upgrading a dependency in one project does not break another, although containers on the same host still share its kernel, CPU, and memory.

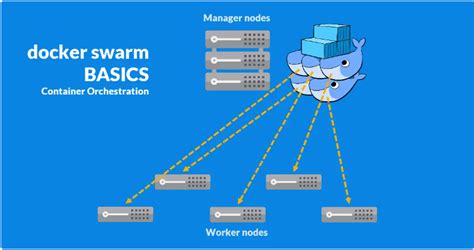

- Simplified Deployment and Management: Docker works with orchestration tools that manage many containers at once. Docker Compose defines and runs multi-container applications on a single host, while platforms such as Kubernetes automate scaling, load balancing, and health monitoring across clusters, supporting continuous delivery of multiple applications.
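On a single host, the independent scaling described above can be tried with the `--scale SERVICE=NUM` option of `docker compose up`. The sketch below only builds that command line (and optionally runs it through `subprocess`); the helper names and the service names `orders-api` and `catalog-api` are hypothetical and assume a Compose file that defines those services.

```python
from __future__ import annotations

import subprocess


def compose_scale_command(replicas: dict[str, int]) -> list[str]:
    """Build a `docker compose up` command with a replica count per service."""
    cmd = ["docker", "compose", "up", "--detach"]
    for service, count in sorted(replicas.items()):
        if count < 0:
            raise ValueError(f"replica count for {service!r} must be >= 0")
        # One --scale flag per service, so each component scales independently.
        cmd += ["--scale", f"{service}={count}"]
    return cmd


def scale(replicas: dict[str, int], dry_run: bool = True) -> None:
    cmd = compose_scale_command(replicas)
    if dry_run:
        print(" ".join(cmd))  # show the command instead of running it
    else:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical services: three API replicas, one catalog replica.
    scale({"orders-api": 3, "catalog-api": 1})
```

A service that publishes a fixed host port cannot run more than one replica on the same host, because the replicas would compete for that port; such services usually sit behind a reverse proxy or omit the host port.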

In conclusion, leveraging Docker for the deployment of multiple .NET Core applications offers numerous advantages, including enhanced portability, improved scalability, streamlined development workflows, efficient isolation, and simplified deployment and management processes. By utilizing containerization techniques, developers can maximize productivity and ensure the seamless operation of their applications across diverse environments.

Setting up the Docker Environment for a Linux System

In this section, we discuss how to configure the Docker environment on a Linux-based operating system and walk through the steps needed to establish a functional Docker setup.

To begin, we need to ensure that our Linux system meets the prerequisites for running Docker. This may involve checking the compatibility of the operating system version, confirming the availability of required dependencies, and enabling the necessary system features. Once these preliminary checks are completed, we can proceed with the installation of Docker on our Linux system.
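A few of these checks can be scripted. The sketch below, using only the Python standard library, reports whether the host is Linux, whether the `docker` CLI is on the `PATH`, and whether `docker info` can reach a running daemon. The function name `check_prerequisites` is hypothetical, and the daemon check assumes `docker info` exits with a non-zero status when it cannot connect, which is its usual behavior.

```python
from __future__ import annotations

import platform
import shutil
import subprocess


def check_prerequisites() -> dict[str, bool]:
    """Report basic facts about the host before or after installing Docker."""
    results = {
        "is_linux": platform.system() == "Linux",
        "docker_cli_on_path": shutil.which("docker") is not None,
        "daemon_reachable": False,
    }
    if results["docker_cli_on_path"]:
        try:
            # `docker info` needs a running daemon; it typically exits non-zero
            # when it cannot connect or lacks permission on the socket.
            proc = subprocess.run(
                ["docker", "info"], capture_output=True, text=True, timeout=10
            )
            results["daemon_reachable"] = proc.returncode == 0
        except (subprocess.TimeoutExpired, OSError):
            pass  # treat a hung or failing CLI as "daemon not reachable"
    return results


if __name__ == "__main__":
    print("kernel release:", platform.release())
    for check, ok in check_prerequisites().items():
        print(f"{check}: {'ok' if ok else 'missing'}")
```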

The installation process typically involves adding Docker's official package repository for your distribution and then installing the Docker Engine packages with the system package manager. We will provide step-by-step instructions for obtaining and installing Docker, taking into account the characteristics of a Linux-based environment.

After the successful installation of Docker, we will delve into the process of configuring the Docker environment. This includes setting up user permissions, managing Docker services, and configuring network settings as per the specific requirements of our Linux system.
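On a typical installation, the daemon is managed as a systemd service (for example `sudo systemctl enable --now docker`), and its Unix socket is owned by the `docker` group. Docker's post-installation steps suggest adding your user to that group with `sudo usermod -aG docker $USER` so commands run without `sudo`; note that this membership is effectively root-equivalent. The sketch below checks whether the current session already has that group; the function name is hypothetical.

```python
import grp
import os


def user_in_docker_group(group_name: str = "docker") -> bool:
    """Return True if the current process already holds the group's GID."""
    try:
        gid = grp.getgrnam(group_name).gr_gid
    except KeyError:
        # The group does not exist (for example, Docker is not installed yet).
        return False
    # os.getgroups() reflects the *current session*, so a user just added with
    # usermod must log out and back in before this returns True.
    return gid == os.getegid() or gid in os.getgroups()


if __name__ == "__main__":
    if user_in_docker_group():
        print("docker commands can run without sudo")
    else:
        print("run: sudo usermod -aG docker $USER, then log out and back in")
```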

In addition, we will explore various configuration options available within Docker, such as managing storage, configuring security settings, and fine-tuning performance parameters. Understanding and properly configuring these options will enable us to optimize the Docker environment for our Linux system.
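Daemon-wide options such as logging and storage live in `/etc/docker/daemon.json`. The sketch below renders that file's content with log rotation for the default `json-file` driver and an optional `data-root` that moves images, containers, and volumes to another disk. The function name and the default sizes are assumptions; after writing the file, the daemon must be restarted (for example `sudo systemctl restart docker`), and the new log settings apply only to containers created afterwards.

```python
import json
from typing import Optional


def render_daemon_config(
    max_log_size: str = "10m",
    max_log_files: int = 3,
    data_root: Optional[str] = None,
) -> str:
    """Render daemon.json content with log rotation and storage settings."""
    config = {
        # Rotate container logs so long-running services cannot fill the disk.
        "log-driver": "json-file",
        # Docker expects log-opts values as strings, including max-file.
        "log-opts": {"max-size": max_log_size, "max-file": str(max_log_files)},
    }
    if data_root is not None:
        # Store images, containers, and volumes under this directory instead.
        config["data-root"] = data_root
    return json.dumps(config, indent=2, sort_keys=True)


if __name__ == "__main__":
    print(render_daemon_config(data_root="/mnt/docker-data"))  # example path
```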

Finally, to ensure a smooth and efficient workflow, we will discuss best practices for maintaining the Docker environment in a Linux system. This includes topics such as keeping Docker up to date, managing Docker images and containers, and troubleshooting common issues that may arise during usage.
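Routine cleanup keeps disk usage in check (`docker system df` shows where the space goes). The sketch below builds two prune commands that remove stopped containers and dangling images older than a cutoff, using the `--force` and `--filter until=...` options of `docker container prune` and `docker image prune`. The helper names are hypothetical, and the default is a dry run that only prints the commands.

```python
from __future__ import annotations

import subprocess


def cleanup_commands(older_than_hours: int = 24) -> list[list[str]]:
    """Commands removing stopped containers and dangling images older than N hours."""
    until = f"until={older_than_hours}h"
    return [
        # Removes stopped containers created before the cutoff.
        ["docker", "container", "prune", "--force", "--filter", until],
        # Without --all, only dangling (untagged) images are removed.
        ["docker", "image", "prune", "--force", "--filter", until],
    ]


def run_cleanup(older_than_hours: int = 24, dry_run: bool = True) -> None:
    for cmd in cleanup_commands(older_than_hours):
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_cleanup()  # prints the commands; pass dry_run=False to execute them
```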

Table of Contents:

1. Introduction
2. Prerequisites
3. Installation Process
4. Configuring the Docker Environment
5. Advanced Configuration Options
6. Best Practices for Maintenance

Creating and Managing Multiple Projects in Docker for Linux: A Step-by-Step Guide

In this section, we will explore the process of setting up and managing multiple projects in Docker for Linux. We will dive into the intricacies of creating, organizing, and deploying these projects in a Docker environment, while emphasizing the benefits and best practices.

Project Creation

- Initial project setup

- Defining project dependencies

- Configuring project-specific settings
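Project dependencies within a solution are declared as `ProjectReference` items in each `.csproj` file. The sketch below reads those items from SDK-style project files (no XML namespace) and uses Python's `graphlib.TopologicalSorter` (Python 3.9+) to list projects so each one appears after the projects it references. This shows, for example, which shared projects a service's Dockerfile must copy. The function names and the `Orders.Api`/`Shared` projects are hypothetical, and project names are assumed to match their `.csproj` file names.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET
from graphlib import TopologicalSorter
from pathlib import PureWindowsPath


def project_references(csproj_xml: str) -> list[str]:
    """Return the names of projects referenced by an SDK-style .csproj file."""
    refs = []
    for ref in ET.fromstring(csproj_xml).iter("ProjectReference"):
        include = ref.get("Include", "")
        if include:
            # Include paths often use backslashes (..\Shared\Shared.csproj);
            # PureWindowsPath accepts both separators.
            refs.append(PureWindowsPath(include).stem)
    return refs


def build_order(projects: dict[str, str]) -> list[str]:
    """Order projects so each appears after every project it references."""
    graph = {name: project_references(xml) for name, xml in projects.items()}
    # Raises graphlib.CycleError if projects reference each other in a loop.
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    solution = {
        "Orders.Api": r'<Project Sdk="Microsoft.NET.Sdk.Web"><ItemGroup>'
                      r'<ProjectReference Include="..\Shared\Shared.csproj" />'
                      r'</ItemGroup></Project>',
        "Shared": '<Project Sdk="Microsoft.NET.Sdk" />',
    }
    print(build_order(solution))  # prints ['Shared', 'Orders.Api']
```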

Managing Project Dependencies

- Exploring the concept of project dependencies

- Installing and managing external libraries and frameworks

- Understanding version management and conflicts
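A common source of conflicts is the same NuGet package referenced at different versions by different projects. The sketch below collects `PackageReference` items with a `Version` attribute from several SDK-style `.csproj` files and reports packages whose versions disagree. References whose version is set elsewhere (a `<Version>` child element or central package management) are skipped. The function names and the `Contoso.Logging` package are hypothetical.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET
from collections import defaultdict


def package_versions(projects: dict[str, str]) -> dict[str, dict[str, str]]:
    """Map each package id to {project name: referenced version}."""
    versions: dict[str, dict[str, str]] = defaultdict(dict)
    for project, csproj_xml in projects.items():
        for ref in ET.fromstring(csproj_xml).iter("PackageReference"):
            package, version = ref.get("Include"), ref.get("Version")
            # Only references with an inline Version attribute are considered.
            if package and version:
                versions[package][project] = version
    return dict(versions)


def find_conflicts(projects: dict[str, str]) -> dict[str, dict[str, str]]:
    """Return only packages referenced at more than one distinct version."""
    return {
        package: usages
        for package, usages in package_versions(projects).items()
        if len(set(usages.values())) > 1
    }


if __name__ == "__main__":
    projects = {
        "Orders.Api": '<Project><ItemGroup><PackageReference '
                      'Include="Contoso.Logging" Version="2.0.0" /></ItemGroup></Project>',
        "Catalog.Api": '<Project><ItemGroup><PackageReference '
                       'Include="Contoso.Logging" Version="1.0.0" /></ItemGroup></Project>',
    }
    for package, usages in find_conflicts(projects).items():
        print(package, usages)
```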

Organizing Multiple Projects

- Choosing a suitable project structure

- Utilizing containerization to isolate projects

- Implementing effective naming conventions
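One simple naming convention is to derive each image and Compose service name from its .NET project name. Docker repository names must be lowercase, so the sketch below lowercases the project name and replaces dots, underscores, and other symbols with single hyphens, optionally prefixing a registry namespace. The function name and the `contoso` namespace are hypothetical.

```python
import re


def image_name(project: str, namespace: str = "") -> str:
    """Derive a lowercase, hyphenated image name from a .NET project name."""
    # Collapse every run of characters outside [a-z0-9] into one hyphen.
    name = re.sub(r"[^a-z0-9]+", "-", project.lower()).strip("-")
    if not name:
        raise ValueError(f"cannot derive an image name from {project!r}")
    return f"{namespace}/{name}" if namespace else name


if __name__ == "__main__":
    for project in ["Orders.Api", "Catalog.Worker", "Shared_Contracts"]:
        print(project, "->", image_name(project, namespace="contoso"))
```

Applying one rule everywhere means the project folder, image, and Compose service for a component can be found from any one of the three names.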

Deploying Projects in Docker

- Containerizing each project

- Building and managing custom Docker images

- Orchestrating multiple projects using Docker Compose
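A Compose file ties the containerized projects together. The sketch below renders a minimal `compose.yaml` with a `build` section per service, an optional published port, and `depends_on` entries. It assumes .NET 8 or later ASP.NET Core images, which listen on port 8080 by default, and that each `dockerfile` path is relative to its build `context`. The function name, service names, and paths are hypothetical.

```python
from __future__ import annotations


def render_compose(services: dict[str, dict]) -> str:
    """Render compose.yaml text for several .NET services.

    Each spec holds "dockerfile", an optional "context" (default "."),
    an optional host "port", and an optional "depends_on" list.
    """
    lines = ["services:"]
    for name, spec in services.items():
        unknown = set(spec.get("depends_on", [])) - set(services)
        if unknown:
            raise ValueError(f"{name} depends on undefined services: {sorted(unknown)}")
        lines += [
            f"  {name}:",
            "    build:",
            f"      context: {spec.get('context', '.')}",
            f"      dockerfile: {spec['dockerfile']}",
        ]
        if spec.get("port"):
            # Map the host port to 8080, the default port of .NET 8+ images.
            lines += ["    ports:", f'      - "{spec["port"]}:8080"']
        if spec.get("depends_on"):
            # Controls start order only; it does not wait for readiness.
            lines.append("    depends_on:")
            lines += [f"      - {dep}" for dep in spec["depends_on"]]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_compose({
        "orders-api": {"context": "src", "dockerfile": "Orders.Api/Dockerfile",
                       "port": 5001, "depends_on": ["catalog-api"]},
        "catalog-api": {"context": "src", "dockerfile": "Catalog.Api/Dockerfile"},
    }))
```

Running `docker compose up --build` next to the generated file builds every image and starts the services, dependencies first. Because `depends_on` only orders startup, a service that must wait until another is ready needs a health check or retry logic.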

This step-by-step guide outlines how developers and system administrators can create and manage multiple projects within Docker for Linux. Following these best practices keeps projects organized and makes each service easier to build, deploy, and scale independently.


FAQ

What is the purpose of the article "Multiple dotnet Core Projects in Docker for Linux"?

The purpose of the article is to explain how to run multiple dotnet Core projects in Docker for Linux.

Why would someone want to run multiple dotnet Core projects in Docker for Linux?

Running multiple dotnet Core projects in Docker for Linux allows for better organization, scalability, and easier deployment of applications.


Can Docker be used with other operating systems besides Linux?

Yes. Docker Desktop runs on Windows and macOS, where Linux containers run inside a lightweight Linux virtual machine; Windows can also run native Windows containers.

What are the benefits of using Docker for running dotnet Core projects?

The benefits of using Docker for running dotnet Core projects include portability, reproducibility, and isolation of dependencies.

What is Docker? Can you briefly explain its concept?

Docker is an open-source platform that allows developers to automate the deployment and running of applications inside containers. Containers are lightweight, isolated environments that include everything needed to run the application, such as code, runtime, libraries, and system tools.