Container images make it practical to move a complete Linux user-space environment between machines that run different operating systems. Instead of rebuilding an environment by hand on each platform, an image can be exported once on one system and loaded on another, which changes how software images are transported.

With this technique, users can exchange operating system images between systems without the laborious manual steps and many of the compatibility issues that have long complicated cross-platform work. The key is exporting the image as a single tar archive. This file is an image archive rather than a running container, and it can be copied to another machine and loaded there.

At the heart of this approach is the image itself. It packages an application together with the Linux user-space files it needs (libraries, tools, and configuration, but not the kernel) into one transportable unit. This simplifies moving an environment between machines and removes much of the manual configuration that would otherwise be repeated on each system.

This article looks at one specific case: transferring a Linux image, packaged as a tar archive, to a Windows machine. Each step is described with explanations, so readers can see how the approach works and where it is useful for exchanging data between operating systems.

Docker and its Role in Containerization

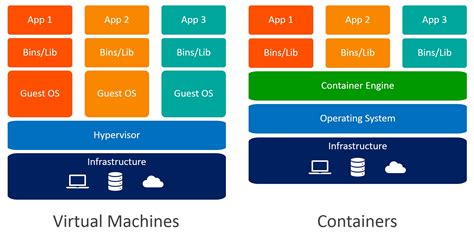

Containerization is a revolutionary technology that has transformed the way applications are deployed and run. At the forefront of this movement is Docker, a powerful platform that enables the creation and management of lightweight, isolated containers. By utilizing containerization, organizations can achieve greater scalability, flexibility, and efficiency in their software development and deployment processes.

One of the key advantages of Docker is its ability to package an application and all of its dependencies into a single image, which can then be deployed and run as a container on any system with a compatible Docker engine. For Linux images on Windows, Docker Desktop runs the containers inside a Linux environment such as WSL 2. This reduces the need for complex configuration and setup, making it easier to deploy applications across different environments. Additionally, Docker containers are isolated from each other and from the underlying host system, providing a consistent runtime environment.

| Benefits of Docker in Containerization |
|---|
| 1. Portability: Docker containers can be easily moved between different systems, allowing applications to run consistently regardless of the underlying infrastructure. |
| 2. Efficiency: Docker's lightweight nature results in faster application startup times and reduced resource usage. |
| 3. Scalability: Docker's ability to scale horizontally allows applications to handle increased workloads by spinning up additional containers. |
| 4. Version control: Docker enables version control for both the application code and its dependencies, making it easier to manage updates and rollbacks. |

In conclusion, Docker plays a crucial role in containerization by providing a robust platform for creating, managing, and deploying containers. By leveraging the benefits of Docker, organizations can streamline their software development processes, improve application portability, and enhance overall operational efficiency.

Transferring Linux Images to Windows Using Docker

When it comes to migrating Linux images to Windows, Docker provides an efficient solution. This section explores the process of transferring Linux images to Windows using Docker: the image is exported to a tar archive on the Linux machine, copied across, and loaded on the Windows machine.

A Step-by-Step Guide on Transferring Linux Images across Different Operating Systems

In this guide, we walk through the process of transferring a Linux image from one operating system to another. The steps below outline how to export the image to a tar archive on the source machine, move that archive to the target machine, and import it there, so that you understand each stage of migrating a Linux image from Linux to Windows.

Step 1: Preparation

Before diving into the image transfer process, it is crucial to ensure you have the necessary tools and configurations in place. This step discusses the essential prerequisites, including the relevant software, network settings, and compatibility considerations to guarantee a successful image transfer.

Step 2: Image Export

Learn how to export Linux images from the source operating system, preserving all the necessary data and configurations. Explore different techniques for creating compressed archives or tarballs that can be easily transferred to the target environment.
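
As a minimal sketch of the export step, the snippet below wraps the `docker save -o` command in Python. The image name `myapp:1.0` and the file name `myapp.tar` are hypothetical placeholders, and the helper names are illustrative only, not part of Docker.

```python
import subprocess
from pathlib import Path


def build_save_command(image: str, archive: Path) -> list[str]:
    """Return the argument list for `docker save -o <archive> <image>`."""
    return ["docker", "save", "-o", str(archive), image]


def export_image(image: str, archive: Path) -> Path:
    """Write `image` to a tar archive using the local Docker CLI.

    Assumes `docker` is on PATH; raises CalledProcessError if the
    command fails (for example, when the image does not exist).
    """
    subprocess.run(build_save_command(image, archive), check=True)
    return archive
```

Calling `export_image("myapp:1.0", Path("myapp.tar"))` on the source machine would produce the archive to transfer. Keeping the command construction in its own function makes it easy to inspect without running Docker.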

Step 3: Network Communication

This step delves into establishing network communication between the source and target operating systems. Discover various methods for establishing a secure connection and transferring the exported Linux image across different networks.
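
Whichever transfer method is chosen (the text does not prescribe one), it can help to confirm that the archive arrived intact. The sketch below computes a SHA-256 checksum that can be calculated on both machines and compared. The function name and chunk size are illustrative assumptions.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks.

    Reading in chunks keeps memory use flat even for large archives.
    """
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # iter() with a sentinel stops when read() returns empty bytes.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

If the digest computed on the Windows side differs from the one computed on the Linux side, the file was changed or truncated in transit and should be copied again.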

Step 4: Image Import

Once the image has been successfully transferred to the target operating system, it is time to import and integrate it with the new environment. This step describes the procedures for unpacking the exported image, ensuring compatibility, and configuring the necessary settings for seamless integration.
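
A minimal sketch of the import step, assuming Docker is installed on the target machine and the archive was produced by `docker save`. It wraps `docker load -i` and collects the image names reported by the CLI. The `Loaded image: ` prefix reflects what the Docker CLI typically prints for tagged images and is treated here as an assumption, not a guaranteed interface. The helper names are hypothetical.

```python
import subprocess
from pathlib import Path

LOADED_PREFIX = "Loaded image: "  # assumed CLI output prefix for tagged images


def build_load_command(archive: Path) -> list[str]:
    """Return the argument list for `docker load -i <archive>`."""
    return ["docker", "load", "-i", str(archive)]


def parse_loaded_images(output: str) -> list[str]:
    """Extract image names from lines that start with the assumed prefix."""
    return [
        line[len(LOADED_PREFIX):].strip()
        for line in output.splitlines()
        if line.startswith(LOADED_PREFIX)
    ]


def import_image(archive: Path) -> list[str]:
    """Load the archive with the local Docker CLI and return loaded names."""
    result = subprocess.run(
        build_load_command(archive), check=True, capture_output=True, text=True
    )
    return parse_loaded_images(result.stdout)
```

On the Windows machine, `import_image(Path("myapp.tar"))` would load the archive; `myapp.tar` is a placeholder file name.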

Step 5: Testing and Verification

Conduct comprehensive testing and verification activities to ensure the successful transfer of the Linux image. This step covers the necessary tests to confirm that the image has been imported correctly and is functioning as expected within the new operating system.
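
One check that needs no running Docker engine is to look inside the transferred archive itself. The sketch below assumes the archive was written by `docker save`, which includes a `manifest.json` file listing each image's tags under a `RepoTags` key. Archives produced by other tools may be laid out differently, and the function name is hypothetical.

```python
import json
import tarfile
from pathlib import Path


def archive_repo_tags(archive: Path) -> list[str]:
    """Return the image tags recorded in a `docker save` archive.

    Raises KeyError if the archive has no manifest.json member.
    """
    with tarfile.open(archive) as tar:
        member = tar.extractfile("manifest.json")
        if member is None:
            raise ValueError("manifest.json is not a regular file")
        manifest = json.load(member)
    tags: list[str] = []
    for entry in manifest:
        # RepoTags may be null for untagged images, hence the `or []`.
        tags.extend(entry.get("RepoTags") or [])
    return tags
```

Comparing the returned tags with the image name that was exported gives a quick sanity check before or after loading the archive.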

Step 6: Troubleshooting and Fine-Tuning

In case of any challenges or issues encountered during the image transfer process, this step provides troubleshooting tips and techniques to identify and resolve common problems. Additionally, it covers ways to fine-tune the transferred Linux image to optimize its performance within the target operating system.

By following this step-by-step guide, you will gain the knowledge and skills to transfer Linux images between different operating systems and to integrate them smoothly into the target environment.

Optimizing Docker for Cross-Platform Compatibility

In the context of transferring software packages between different operating systems, it is essential to optimize Docker for cross-platform compatibility. The ability to seamlessly migrate applications across various environments can greatly enhance productivity and flexibility in software development and deployment processes. This section explores strategies and best practices for ensuring smooth transitions and efficient utilization of Docker for cross-platform deployments.

Ensuring Compatibility

One of the primary challenges in achieving cross-platform compatibility involves addressing the inherent differences and dependencies between operating systems. By understanding the unique characteristics of each environment, developers can employ effective techniques for optimizing Docker for compatibility across various platforms. This can include identifying and managing system-specific packages, libraries, and configurations.

Containerization Techniques

Containerization lies at the core of Docker's functionality and plays a crucial role in cross-platform compatibility. It enables the encapsulation of applications along with their dependencies into portable containers, which can be seamlessly transferred between different operating systems. By adopting efficient containerization techniques, such as utilizing lightweight base images and minimizing the number of layers, developers can optimize Docker's performance and portability.

Versioning and Compatibility

To ensure smooth operations across different environments, it is vital to maintain meticulous versioning and compatibility practices. This involves accurately documenting the versions of Docker, operating systems, and any additional software components utilized within the containers. Additionally, developers should regularly test their applications on various platforms to identify and address compatibility issues in a proactive manner.

Adopting Cross-Platform Tooling

Utilizing cross-platform tools and technologies can significantly streamline the process of optimizing Docker for compatibility. Tools such as Docker Compose, Kubernetes, and Jenkins provide developers with powerful capabilities for managing and orchestrating containers across different operating systems. By leveraging these tools, teams can simplify the deployment process and minimize compatibility challenges.

Continuous Integration and Testing

By integrating continuous integration and automated testing practices into the software development lifecycle, teams can identify and rectify compatibility issues at an early stage. Automated testing scripts can be utilized to validate the behavior and performance of applications in various environments, ensuring that Docker containers function optimally across different platforms. This approach helps establish a robust and reliable cross-platform compatibility pipeline.

Conclusion

Optimizing Docker for cross-platform compatibility is a critical aspect of modern software development. By focusing on ensuring compatibility, employing effective containerization techniques, maintaining versioning practices, adopting cross-platform tooling, and implementing continuous integration and testing, developers can harness the full potential of Docker for seamless application deployment across different operating systems.

Exploring Strategies to Ensure Successful Transfer of Linux Images to Windows Systems

Introduction:

In an increasingly interconnected digital landscape, the ability to transfer Linux images to Windows systems is becoming an important part of efficient data management. This section examines strategies that can be used to carry out this process and ensure a smooth transition between the two operating systems.

Understanding the Compatibility Challenges:

Transferring Linux images to Windows systems poses compatibility challenges because the two operating systems have different kernels and file systems. A Linux image expects a Linux kernel, so the Windows system needs a way to provide one before the image can run. The strategies below address these differences.

Implementing Virtualization:

One effective approach to address compatibility challenges is the utilization of virtualization technology. By creating a virtualized environment on the Windows system, it becomes possible to run Linux images seamlessly. This allows for the preservation of Linux-specific configurations, dependencies, and functionalities while ensuring a harmonious coexistence between the two operating systems.

Adopting Cross-Platform Containerization:

A promising strategy for the smooth transfer of Linux images to Windows systems involves the adoption of cross-platform containerization solutions. By encapsulating the required Linux components and dependencies within lightweight containers, the image carries its own user-space environment, so the same image runs unchanged on any host that provides a Linux kernel, including a Windows host that supplies one through virtualization. This approach facilitates the straightforward deployment of Linux images on Windows, offering users the benefits of containerization while mitigating compatibility concerns.
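
A small sketch of one compatibility check: comparing the operating system and CPU architecture recorded in an image's metadata with what the target is expected to run. It assumes the metadata comes from `docker image inspect`, whose JSON output includes `Os` and `Architecture` keys. The function name and the default values are illustrative assumptions.

```python
def platform_problems(
    inspect_data: dict,
    expected_os: str = "linux",
    expected_arch: str = "amd64",
) -> list[str]:
    """Return human-readable mismatches between image and target platform.

    `inspect_data` is one image object from `docker image inspect` output.
    An empty list means the recorded platform matches the expectation.
    """
    problems: list[str] = []
    actual_os = inspect_data.get("Os")
    actual_arch = inspect_data.get("Architecture")
    if actual_os != expected_os:
        problems.append(f"image OS is {actual_os!r}, expected {expected_os!r}")
    if actual_arch != expected_arch:
        problems.append(
            f"image architecture is {actual_arch!r}, expected {expected_arch!r}"
        )
    return problems
```

Running a check like this before the transfer catches the case where an image built for one CPU architecture is about to be moved to a machine with another.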

Ensuring File System Compatibility:

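An essential aspect of successfully transferring Linux images to Windows systems is ensuring file system compatibility. Windows utilizes the NTFS file system, while Linux often employs ext-based file systems. The exported image is a plain tar archive, so it can be stored on and copied through an NTFS volume like any other file. The Linux file system details inside the image, such as permissions and symbolic links, matter only once the image is loaded, at which point Docker Desktop keeps image data inside its Linux environment rather than directly on NTFS. Where files must be shared directly between the two file systems, third-party tools can help.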

Conclusion:

The ability to transfer Linux images to Windows systems is a critical capability in today's diverse computing landscape. By employing virtualization, cross-platform containerization, and addressing file system compatibility, users can ensure a smooth transition and harness the full potential of both Linux and Windows operating systems within their infrastructures.

The Significance of Efficient Packaging and Distribution of Containerized Content

In the modern era of software development and deployment, containerization has emerged as a revolutionary approach to streamline the packaging and distribution of applications across platforms. An integral component of this process is the efficient management of container images, ensuring their seamless transfer and availability across diverse environments.

Containerization, often described as lightweight virtualization, enables developers to encapsulate applications and their dependencies into self-contained units known as containers. These containers can then be deployed, scaled, and orchestrated with considerable flexibility and efficiency.

One critical aspect of containerization is the packaging and distribution of container images, which contain all the necessary components and configurations to instantiate the desired application environment. Handling these images efficiently is crucial for rapid deployment across different computing platforms.

- Enhancing Portability: By efficiently packaging container images, developers can achieve a high degree of portability, allowing applications to run consistently across diverse operating systems and cloud providers.

- Streamlining Deployment: Effective image distribution facilitates streamlined deployment processes, enabling rapid provisioning and replication of application environments without the need for complex setup and configuration.

- Optimizing Resource Utilization: Efficient packaging and distribution of containerized content minimize the resource overheads associated with network transmission and storage, optimizing overall system performance.

- Enabling Scalability: Seamless image transfer enables effortless scaling of applications, empowering organizations to handle fluctuating workloads and accommodate the ever-changing demands of their users.
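
To illustrate the point about reducing transfer and storage overhead, the sketch below gzip-compresses an exported image archive before it is copied. According to the Docker documentation, `docker load` accepts gzip-compressed archives, so the file does not need to be decompressed by hand on the target. The function name is a hypothetical helper.

```python
import gzip
import shutil
from pathlib import Path


def compress_archive(archive: Path) -> Path:
    """Write a gzip-compressed copy of `archive` next to it.

    For example, myapp.tar becomes myapp.tar.gz; the original is kept.
    """
    compressed = archive.with_name(archive.name + ".gz")
    with archive.open("rb") as src, gzip.open(compressed, "wb") as dst:
        # Stream the data so large archives are never held fully in memory.
        shutil.copyfileobj(src, dst)
    return compressed
```

How much space this saves depends on the image contents; layers that already hold compressed data shrink very little.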

In conclusion, the efficient packaging and distribution of container images play a vital role in the successful adoption and utilization of containerization technologies. By optimizing the transfer and availability of these images, developers can ensure consistent application deployment, enhanced portability, and efficient resource utilization, ultimately enabling organizations to deliver robust and scalable solutions.

FAQ

What is Docker?

Docker is an open-source platform that allows developers to automate the deployment, scaling, and management of applications using containerization.

How does Docker send a Linux image to Windows?

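The docker save command packages the Linux image as a tar archive. Docker does not send the file itself, so the archive is copied to the Windows machine with any file transfer method. On Windows, the docker load command imports the archive, and Docker Desktop then runs the resulting Linux containers inside a Linux environment, typically Windows Subsystem for Linux 2 (WSL 2).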

Why would someone want to send a Linux image to Windows using Docker?

There could be various reasons for sending a Linux image to Windows using Docker. One possible reason is to allow developers to easily test and run Linux-based applications on their Windows machines without needing a separate virtual machine or dual-boot setup.

What is the advantage of using Docker for sending a Linux image to Windows?

Using Docker for sending a Linux image to Windows brings the advantage of encapsulating the Linux user-space environment within an image, making it easy to deploy and manage Linux applications on Windows without dependency conflicts on the host.

Are there any limitations or compatibility issues when sending a Linux image from Docker to Windows?

While Docker provides a seamless way to send Linux images to Windows, it's important to note that not all Linux applications may be compatible with Windows. Some applications may have dependency issues or may not function properly due to differences in the Windows and Linux operating systems.