Running the same software across different systems usually means dealing with mismatched libraries, configurations, and tooling. Containers address this by packaging an application together with its dependencies so that it behaves the same way wherever it runs.

This article looks at Docker on Linux and at how to connect containers to code that is mounted read-only (RO). It covers containerization basics, installing and configuring Docker, working with images, deploying applications, and managing containers.

Mounting code read-only keeps a container from modifying it, which guards against accidental changes and makes runs more reproducible. The FAQ at the end of the article covers the read-only options in more detail.

Understanding Containerization: An Introduction to Docker

Organizations need efficient ways to develop, deploy, and manage applications. Docker, a widely used containerization platform, addresses this need by packaging applications into containers.

This section introduces containerization and explains how Docker works. Docker uses lightweight, isolated containers to standardize how applications are packaged, deployed, and run across different environments.

- What is a Container?

- Benefits of Containerization

- How Does Docker Work?

A container is a lightweight, standalone executable unit that encapsulates an application and its runtime dependencies. Think of it as a virtual environment that isolates the application from the underlying system, making it portable and reliable across different environments.

Containerization improves portability, scalability, and resource efficiency. Because an application and its dependencies ship together in one container, the same image can be deployed on different Linux distributions, cloud platforms, and development machines, as long as the host provides a compatible kernel and CPU architecture.

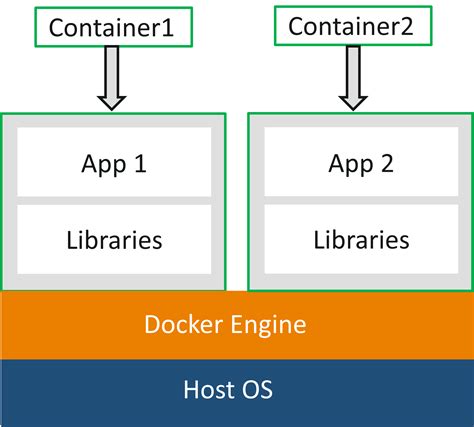

Docker packages applications into standardized containers. Containers are created from Docker images, which are read-only, layered templates that contain the application and its dependencies.

Running an image creates a container: one or more processes with their own view of the filesystem, network, and process tree. The Linux kernel isolates these processes from the host and from other containers using namespaces and control groups (cgroups). Because the image carries the application's dependencies, the application runs consistently across different computing environments.

In conclusion, Docker provides a flexible and efficient solution for deploying applications in a consistent and reproducible manner. By leveraging the power of containerization, Docker enables organizations to build, ship, and run applications seamlessly across different platforms, making it an indispensable tool in the modern software development landscape.

Diving into the fundamentals of containerization technology and its functionality

In this section, we will explore the foundational concepts and features of containerization technology, delving deep into its functionality and benefits. By understanding the basics of containerization, you can harness its power to streamline development processes, improve efficiency, and enhance scalability.

Containerization technology allows applications to be packaged with all their dependencies into isolated and lightweight units known as containers. These containers provide a consistent and reproducible environment across different platforms, making it easier to deploy and run applications seamlessly.

| Key Features of Containerization |
|---|
| 1. Resource Isolation |
| 2. Process Segregation |
| 3. Portable and Reproducible |
| 4. Efficient Resource Utilization |

One of the primary advantages of containerization is resource isolation. On Linux, namespaces give each container its own view of processes, network interfaces, and the filesystem, while control groups (cgroups) can cap the CPU, memory, and I/O that a container may use. These limits are not applied by default; they are configured per container. Isolation reduces interference between containers, which improves the stability and security of applications.
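
Resource limits are usually passed to `docker run` when a container is started. The following is a minimal sketch, not a definitive implementation: it only assembles the command line, the helper name `limited_run_args` is hypothetical, and the image `nginx:alpine` and the limit values are example choices. The flags `--cpus`, `--memory`, and `--pids-limit` are standard `docker run` options.

```python
import shlex


def limited_run_args(image, cpus=None, memory=None, pids_limit=None):
    """Build a `docker run` argument list with optional resource limits.

    Hypothetical helper for illustration; it does not start a container.
    """
    args = ["docker", "run", "--rm"]
    if cpus is not None:
        args += ["--cpus", str(cpus)]              # CPU share, e.g. 1.5 cores
    if memory is not None:
        args += ["--memory", memory]               # memory cap, e.g. "512m"
    if pids_limit is not None:
        args += ["--pids-limit", str(pids_limit)]  # cap on number of processes
    args.append(image)
    return args


# Example values only; print the command instead of executing it.
print(shlex.join(limited_run_args("nginx:alpine", cpus=1.5, memory="512m")))
```

Building the arguments as a list keeps each value a separate argument, so it can be handed to `subprocess.run` without shell quoting issues.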

Furthermore, containerization allows for process segregation, enabling multiple applications to run independently within their own containers. This segregation eliminates conflicts between different applications and facilitates better management and monitoring capabilities.

Another key aspect of containerization is its portability and reproducibility. Containers can be moved between development, testing, and production environments without reinstalling dependencies on each host. Because containers share the host's kernel, the host must still provide a compatible Linux kernel and CPU architecture. Within that constraint, developers get a consistent environment and a simpler deployment process.

Additionally, containerization optimizes resource utilization by leveraging the lightweight nature of containers. Compared to traditional virtual machines, containers require fewer resources, leading to more efficient use of hardware and improved scalability.

In conclusion, understanding the basics of containerization technology is crucial for harnessing its functionality and reaping the benefits it offers. By leveraging containerization, organizations can streamline their development workflows, improve application deployment, and achieve greater scalability and flexibility.

Benefits of using Docker in Linux environments

In the world of Linux environments, employing Docker brings a multitude of advantages that streamline development processes and enhance the efficiency of software applications. By leveraging the power of containerization, Docker enables easier application deployment, improved scalability, and simplified maintenance.

Enhanced Portability: Docker allows for the creation of lightweight and self-contained containers, which can be easily deployed across different Linux distributions and hardware platforms. This level of portability simplifies the migration of applications between development, testing, and production environments, reducing the risk of compatibility issues.

Isolated Environments: With Docker, each application runs in its own container, isolated from other applications and from the host system. This isolation improves security, because many faults and conflicts stay confined to the individual container. Containers share the host's kernel, however, so the isolation is weaker than that of a virtual machine and should be treated as one layer of defense rather than a complete boundary.

Efficient Resource Utilization: Docker optimizes the utilization of system resources by sharing the host's kernel among multiple containers. This lightweight approach minimizes the overhead and improves performance, allowing for the deployment of numerous containers on a single Linux instance, without consuming excessive memory or CPU power.

Faster Deployment: Docker simplifies the process of application deployment by packaging all necessary dependencies, libraries, and configuration files within a container. This eliminates the need for manual setup and configuration on multiple machines, resulting in faster and more consistent deployment across different Linux environments.

Scalability and Versatility: Docker enables horizontal scalability by allowing the creation of multiple containers, each running a specific service or component of an application. This modular architecture makes it easy to scale up or down based on demand, ensuring optimal performance and resource utilization without disrupting the entire system.

Easier Maintenance and Updates: With Docker, updating or making changes to an application becomes a seamless process. By simply replacing or updating the container image, the updated version of the application is readily available, eliminating the need for manual intervention on each machine. This automated approach greatly simplifies maintenance tasks and reduces the risk of errors or inconsistencies.

Community Support and Extensibility: Docker benefits from a vibrant and active community that continuously develops and shares new container images, tools, and best practices. This community-driven ecosystem allows for easy integration with other technologies and makes Docker a highly extensible and flexible solution for Linux environments.

Overall, the adoption of Docker in Linux environments offers numerous advantages, ranging from enhanced portability and resource utilization to simplified deployment and maintenance. By leveraging the power of containerization, developers and system administrators can streamline their workflows, achieve higher efficiency, and create more resilient and scalable software applications.

Exploring the Advantages of Docker for Linux Software Development

In this section, we will delve into the numerous benefits that Docker brings to the table when it comes to Linux application development. By utilizing Docker, developers can streamline their workflow, increase efficiency, and enhance collaboration with their team members.

Simplifying the Development Environment:

One of the key advantages of using Docker for Linux development is the ability to create a consistent and reproducible environment across different machines. Developers can package their code along with all required dependencies into a container, allowing them to easily share the same environment with colleagues or seamlessly switch between different projects.

Enhanced Portability and Scalability:

With Docker, Linux developers can encapsulate their applications and dependencies into lightweight containers that can be deployed on any Linux machine that runs Docker and matches the image's CPU architecture. This portability allows the application to move from development to testing or production environments without changes. Docker also makes it easy to scale by starting multiple instances of the application to handle increasing workloads.

Isolation and Security:

By leveraging Docker's containerization, Linux developers can isolate their applications so that they do not interfere with other processes or the underlying operating system. This isolation adds a layer of security, since many problems inside a container stay contained. Docker also offers security features such as resource limits, dropping Linux capabilities, and seccomp profiles that restrict the system calls a container may make.

Streamlined Collaboration:

Docker simplifies collaboration for Linux developers by providing a standardized format for packaging and distributing applications. Team members can easily share their Docker images, allowing others to quickly set up the same development environment with minimal configuration. This promotes seamless collaboration, as it eliminates the need for manual setup and ensures consistency across the team.

Efficient Resource Utilization:

With Docker, Linux developers can optimize resource utilization by running multiple containers on the same machine, each with its own isolated environment. This eliminates the need for running separate virtual machines for each application, resulting in reduced overhead and improved efficiency. Docker's lightweight nature also allows for faster container startup times and better overall performance.

Conclusion:

Overall, Docker provides significant advantages for Linux development, offering simplified development environments, enhanced portability, heightened security, streamlined collaboration, and efficient resource utilization. By harnessing Docker's capabilities, developers can focus more on coding and less on managing complex environments, leading to increased productivity and accelerated software delivery.

Understanding the concept of containerization and its significance in the Linux environment

Containerization is a way to package and isolate software applications along with their dependencies, so that they run reliably and consistently across different computing environments.

At the heart of containerization are containers: lightweight, portable units that encapsulate an application and its runtime environment, including libraries, dependencies, and configuration settings. Containers provide a standardized, self-contained environment, so the application behaves the same on any host that offers a compatible Linux kernel.

The use of containers in the Linux environment has revolutionized software development and deployment processes. By leveraging the power of containerization, developers can easily package their code into containers, eliminating the need for manual setup and configuration of the runtime environment. This not only saves time and effort but also reduces the chances of conflicts and inconsistencies that often arise when moving applications between different systems.

Furthermore, containers offer a level of isolation, enabling multiple applications to run concurrently on the same Linux host without interfering with each other. Each container operates within its own isolated environment, providing a high degree of security and preventing any potential conflicts or breaches caused by one application affecting another.

In addition, containers allow for easy scalability and flexibility. They can be easily deployed and replicated across different environments, whether it be on-premises servers, cloud platforms, or even virtual machines. This makes it possible to rapidly scale applications, adapt to varying workload demands, and seamlessly integrate new features or updates.

In summary, the concept of containerization, exemplified by containers, has revolutionized the way software applications are developed, deployed, and managed in the Linux ecosystem. By providing a standardized and isolated runtime environment, containers ensure that applications can run consistently across different environments and offer numerous benefits, including easier deployment, scalability, flexibility, and enhanced security.

Installing and Configuring Docker on a Linux System

In this section, we will explore the process of setting up and configuring Docker on a Linux-based operating system. We will discuss the steps involved in installing Docker, as well as the necessary configurations to ensure its smooth operation.

Step 1: Checking Linux Version

Before installing Docker, it is important to verify that your Linux system meets the necessary requirements. Use the command line to check the version of your Linux distribution and ensure compatibility with Docker.
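
Most current Linux distributions describe themselves in `/etc/os-release`, so the version check can be scripted. The sketch below is one possible approach and assumes that file exists on the system; the helper names `parse_os_release` and `read_os_release` are hypothetical, and the sample text is made up for the demonstration.

```python
import shlex


def parse_os_release(text):
    """Parse os-release style content into a dict of KEY -> value."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, raw = line.partition("=")
        parts = shlex.split(raw)          # removes shell-style quoting
        info[key] = parts[0] if parts else ""
    return info


def read_os_release(path="/etc/os-release"):
    """Read and parse the os-release file (assumed to exist at `path`)."""
    with open(path, encoding="utf-8") as fh:
        return parse_os_release(fh.read())


# Made-up sample content, so the example runs on any machine.
sample = 'NAME="Ubuntu"\nVERSION_ID="22.04"\nID=ubuntu\n'
info = parse_os_release(sample)
print(info["ID"], info["VERSION_ID"])
```

On a real system, `read_os_release()` returns the same kind of dictionary, and the `ID` and `VERSION_ID` values can be compared against the distributions listed in Docker's installation documentation.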

Step 2: Downloading Docker

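Once you have confirmed compatibility, the next step is to make the Docker packages available to your package manager. This typically means downloading Docker's repository signing key, adding it to the package manager's trusted keys, and then adding the Docker package repository itself so that the packages can be found.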

Step 3: Installing Docker

After obtaining the Docker package, the installation process can begin. Using the package manager, install the Docker engine and its dependencies on your Linux system. Ensure that you have the necessary permissions for the installation.

Step 4: Verifying Docker Installation

Once the installation is complete, it is important to verify that Docker has been successfully installed on your Linux system. Use simple commands to check the Docker version and ensure that the engine is functioning properly.
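
The verification can be scripted as well. This is a minimal sketch that assumes the `docker` CLI is on the `PATH`; the function name `docker_server_version` is hypothetical. It uses `docker version --format '{{.Server.Version}}'`, which only succeeds when the CLI can reach the Docker daemon, so it checks both the client and the engine.

```python
import shutil
import subprocess


def docker_server_version(executable="docker", timeout=10):
    """Return the Docker Engine version string, or None if unavailable."""
    if shutil.which(executable) is None:
        return None                       # CLI not installed or not on PATH
    try:
        result = subprocess.run(
            [executable, "version", "--format", "{{.Server.Version}}"],
            capture_output=True, text=True, timeout=timeout,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None                       # CLI present, daemon not reachable
    return result.stdout.strip() or None


version = docker_server_version()
print("Docker Engine:", version if version else "not available")
```

Returning `None` rather than raising keeps the check usable in setup scripts that need to decide whether to continue with the installation steps.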

Step 5: Configuring Docker

In order to optimize the Docker environment and customize it for your needs, it is necessary to configure certain aspects. This includes configuring Docker to start on system boot, setting up networking options, and adjusting resource limits, among other configurations.

Step 6: Adding Users to Docker Group

To allow non-root users to run Docker commands, add them to the docker group. Group membership takes effect at the user's next login. Members of the docker group can effectively gain root privileges on the host, so add only trusted users.
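
Users are commonly added with `sudo usermod -aG docker <user>`. Whether a given account already belongs to the group can be checked with Python's standard `grp` and `pwd` modules. The sketch below is illustrative only; `user_in_group` is a hypothetical helper, and it assumes the group is named `docker`, which is the default name used by Docker's packages.

```python
import grp
import pwd


def user_in_group(username, group="docker"):
    """Return True if `username` is a member of `group` on this system."""
    try:
        entry = grp.getgrnam(group)
    except KeyError:
        return False                      # group does not exist
    if username in entry.gr_mem:
        return True                       # listed as a supplementary member
    try:
        # A user's primary group is not listed in gr_mem, so compare GIDs.
        return pwd.getpwnam(username).pw_gid == entry.gr_gid
    except KeyError:
        return False                      # user does not exist


print("root in docker group:", user_in_group("root"))
```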

Step 7: Testing Docker

After completing the installation and configuration process, it is important to perform some tests to ensure that Docker is running correctly. Create a simple Docker container and verify its functionality to ensure that the setup was successful.
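
A common smoke test is to run the small `hello-world` image from Docker Hub, which prints a greeting and exits. The sketch below wraps that test in a function; the name `hello_world_ok` is hypothetical, and the check assumes network access so the image can be pulled on first use.

```python
import shutil
import subprocess


def hello_world_ok(executable="docker", timeout=120):
    """Run the hello-world image and report whether it printed its greeting."""
    if shutil.which(executable) is None:
        return False                      # Docker CLI not found
    try:
        result = subprocess.run(
            [executable, "run", "--rm", "hello-world"],
            capture_output=True, text=True, timeout=timeout,
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    # The image prints a message that begins with "Hello from Docker!".
    return result.returncode == 0 and "Hello from Docker!" in result.stdout
```

Calling `hello_world_ok()` after installation returns `True` only when the CLI, the daemon, and image pulling all work, which covers most of the setup in one step.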

In this section, we have discussed the step-by-step process of installing and setting up Docker on a Linux system. By following these instructions, you will be able to successfully install Docker and configure it to meet your specific requirements.

A step-by-step guide to install and configure the versatile containerization platform in a Linux setting

In this section, we will delve into the step-by-step process of setting up and configuring Docker on a Linux environment, allowing you to leverage the power and flexibility of containerization. By following these instructions, you will be able to successfully deploy Docker and harness its vast array of features to streamline your development workflows and enhance the scalability and portability of your applications.

To begin, we will outline the prerequisites for installing Docker on Linux and provide guidance on ensuring your system meets these requirements. We will then guide you through the installation process, detailing the necessary commands and considerations for different Linux distributions. Following the installation, we will explain how to conveniently configure Docker, customizing its settings according to your specific needs and preferences.

The guide will also cover post-installation steps, such as setting up Docker to run without superuser privileges, enabling you to seamlessly integrate Docker into your daily development tasks. We will explore important security considerations, including user management and access control mechanisms, to ensure the safe and controlled usage of Docker containers on your Linux system.

Furthermore, we will provide an overview of commonly used Docker commands, empowering you to effortlessly manage containers, images, and networks. We will explain how to pull and push Docker images from public and private repositories, enabling fast and efficient collaboration with other developers. Additionally, we will introduce the concept of Dockerfiles and provide practical examples of creating and building custom Docker images for your projects.

To consolidate your knowledge and understanding, we will offer troubleshooting tips and address common issues that may arise during the installation and configuration process. By the end of this guide, you will have a comprehensive understanding of how to install, configure, and utilize Docker in your Linux environment, empowering you to optimize your development workflow and take full advantage of the benefits containerization provides.

| Table of Contents |
|---|
| 1. Prerequisites |
| 2. Installation Process |
| 3. Configuration and Customization |
| 4. Post-Installation Steps |
| 5. Security Considerations |
| 6. Essential Docker Commands |
| 7. Working with Docker Images |
| 8. Creating Custom Docker Images |
| 9. Troubleshooting and Common Issues |

Introduction to Docker Images

In this section, we will explore the fundamental concepts of working with Docker images. Docker images serve as the building blocks for deploying applications and services in a lightweight and isolated manner. By understanding how to work with Docker images, you will be able to streamline the application deployment process, improve scalability, and efficiently manage dependencies.

What are Docker images?

Docker images are self-contained packages that include everything needed to run a piece of software, including the code, runtime environment, system tools, libraries, and dependencies. They provide a standardized way of packaging software and its dependencies into a single unit that can be easily shared, replicated, and deployed.

Why use Docker images?

Using Docker images offers several benefits. They allow you to isolate your applications and their dependencies, ensuring that they run consistently across different environments. Docker images also enable easy version control, enabling you to roll back to previous versions if needed. Additionally, Docker images provide a fast and efficient way to distribute and deploy applications, as they can be easily transferred between different machines without requiring complex setup procedures.

Working with Docker images

To work with Docker images, you will need to use the Docker CLI (Command-Line Interface). The Docker CLI provides a set of commands that allow you to build, list, pull, and push Docker images. You can customize and configure your Docker images through the use of Dockerfiles, which are text files that define the instructions for building the image.

Building Docker images

To build a Docker image, you need to create a Dockerfile that describes the steps and dependencies required to build the image. The Dockerfile includes commands such as copying files, running commands, and setting environment variables. Once the Dockerfile is created, you can use the Docker CLI to build the image by running the docker build command.
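
To make the build step concrete, the sketch below generates a small Dockerfile and the matching `docker build` command. Everything specific in it is an assumption for illustration: the helper names, the base image `python:3.12-slim`, the `/app` directory, the `main.py` entry point, and the tag `myapp:1.0`. The Dockerfile instructions used (`FROM`, `WORKDIR`, `COPY`, `ENV`, `CMD`) correspond to the copying, environment, and command steps described above.

```python
import shlex


def render_dockerfile(base_image, app_dir="/app", entry="main.py"):
    """Return Dockerfile text for a simple Python application (example only)."""
    lines = [
        f"FROM {base_image}",             # base image to build on
        f"WORKDIR {app_dir}",             # working directory inside the image
        "COPY . .",                       # copy the build context into it
        "ENV PYTHONUNBUFFERED=1",         # set an environment variable
        f'CMD ["python", "{entry}"]',     # default command for containers
    ]
    return "\n".join(lines) + "\n"


def build_args(tag, context="."):
    """Return the `docker build` argument list for a tag and build context."""
    return ["docker", "build", "--tag", tag, context]


print(render_dockerfile("python:3.12-slim"))
print(shlex.join(build_args("myapp:1.0")))
```

Saving the generated text as `Dockerfile` in the project directory and running the printed command from that directory would build the image.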

Using Docker Hub

Docker Hub is a cloud-based registry that provides a wealth of pre-built Docker images for you to use. It allows you to easily find and pull images from the Docker community, reducing the need for you to build your images from scratch. You can pull these images using the docker pull command.
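
When no tag is given, `docker pull` uses the tag `latest`, so pinning a tag explicitly makes pulls more predictable. The sketch below shows one way to normalize an image reference before pulling. The helper names are hypothetical, and the parsing is deliberately simplified: it handles `name` and `name:tag`, including registry hosts with a port, but not digest references such as `name@sha256:...`.

```python
def split_image_ref(ref):
    """Split 'name[:tag]' into (name, tag). Digest references are not handled."""
    last_slash = ref.rfind("/")
    last_colon = ref.rfind(":")
    # A colon only marks a tag if it comes after the last slash; otherwise
    # it belongs to a registry host and port such as "localhost:5000".
    if last_colon > last_slash:
        return ref[:last_colon], ref[last_colon + 1:]
    return ref, "latest"


def pull_args(ref):
    """Return a `docker pull` argument list with an explicit tag."""
    name, tag = split_image_ref(ref)
    return ["docker", "pull", f"{name}:{tag}"]


for example in ("ubuntu", "ubuntu:22.04", "localhost:5000/app"):
    print(pull_args(example))
```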

Conclusion

In this section, we have explored the basics of Docker images and their importance in the containerization and deployment of applications. By understanding how to work with Docker images, you can leverage the power of containerization to streamline your development and deployment processes.

Mastering the Creation, Pulling, and Management of Docker Images in a Linux Environment

This section covers the core concepts and techniques for building, pulling, and managing Docker images on Linux.

Understanding the Art of Image Creation

Creating an image means choosing a base image, installing and configuring dependencies, and adding your software. This part covers the essential steps and best practices for building efficient images that match your software's requirements.

Effortlessly Pulling Images from Various Sources

Docker images can be pulled from many repositories and registries, including Docker Hub and private registries. This part covers finding and retrieving suitable images, selecting versions with tags, and keeping the pull process efficient so images integrate cleanly into your Linux environment.

Streamlined Management of Docker Images at Scale

As the number of images grows, they need to be organized, categorized, and updated systematically. This part covers strategies for managing multiple images, monitoring them, and updating them when needed, so that a large library of Docker images stays maintainable.

Together, these skills let you create, pull, and manage Docker images on Linux and streamline your software deployment.

Containerizing and Deploying an Application on Linux using Docker

In this section, we will explore the process of containerizing and deploying a Linux application using Docker. We will dive into how containers provide a lightweight and isolated environment to run applications, eliminating compatibility issues and simplifying the deployment process.

First, we will discuss the concept of containerization and its significance in modern application development. Containerization encapsulates an application and its dependencies into a single package called a container, ensuring that it can run consistently across different environments.

- Next, we will explore the Docker platform, which is one of the most popular tools for containerization. Docker provides a comprehensive set of tools and features that simplify the containerization and deployment process.

- We will examine the steps involved in containerizing a Linux application with Docker. This includes creating a Dockerfile, which defines the application's requirements and configuration, pulling the necessary base image, and building the container image.

- Once the application is containerized, we will delve into the process of deploying the container to a Linux host. We will explore different deployment options, such as local deployment, deploying to a remote server, or deploying to a cloud-based container orchestration platform like Kubernetes.

- Furthermore, we will discuss best practices for monitoring and managing Docker containers to ensure optimal performance and scalability. This involves techniques like scaling containers, monitoring resource usage, and implementing container health checks.
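
For a local deployment, the steps in the list above often come down to a single `docker run` invocation with a name, a published port, a restart policy, and a health check. The following is a sketch under stated assumptions: the helper `deploy_args`, the image `myapp:1.0`, the container name `web`, the ports, and the health command are all hypothetical, and the health command assumes `curl` is available inside the image. The flags themselves are standard `docker run` options.

```python
import shlex


def deploy_args(image, name, host_port, container_port, health_cmd=None):
    """Build a `docker run` argument list for a long-running service."""
    args = [
        "docker", "run", "--detach",                      # run in background
        "--name", name,
        "--publish", f"{host_port}:{container_port}",     # host:container port
        "--restart", "unless-stopped",                    # restart on failure/boot
    ]
    if health_cmd:
        args += ["--health-cmd", health_cmd, "--health-interval", "30s"]
    args.append(image)
    return args


# Hypothetical service; the command is printed, not executed.
cmd = deploy_args("myapp:1.0", "web", 8080, 8000,
                  health_cmd="curl -f http://localhost:8000/health")
print(shlex.join(cmd))
```

The same argument list could target a remote host by setting the `DOCKER_HOST` environment variable, while orchestration platforms such as Kubernetes express these settings in their own manifests.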

By the end of this section, you will have a clear understanding of how to containerize and deploy a Linux application using Docker. This knowledge will empower you to leverage containerization technology to streamline your application deployment process and achieve greater flexibility and efficiency in your development workflow.

A Practical Guide to Packaging and Deploying Linux Applications with Containerization

Containerization has revolutionized the way applications are packaged and deployed, providing a lightweight and efficient solution for software distribution. In this tutorial, we will explore the process of packaging and deploying Linux applications using containerization technology.

We will begin by discussing the benefits of containerization and how it simplifies the deployment process. Docker, a popular containerization platform, will be used as our tool of choice for this tutorial. We will explore the basics of Docker and how it can be leveraged to package and distribute Linux applications.

- Understanding the Docker image format: We will delve into the details of Docker images, discussing the layers that make up an image and how they contribute to efficient storage and distribution.

- Building Docker images: You will learn how to create Docker images by writing Dockerfiles. We will cover common instructions and best practices for optimizing the image build process.

- Packaging Linux applications: We will explore different strategies for packaging Linux applications using Docker. This includes bundling dependencies, configuring environment variables, and managing application-specific settings.

- Deploying Dockerized applications: Once the images are built, we will demonstrate how to deploy them to various environments. We will discuss different deployment options, such as local development environments, testing environments, and production servers.

- Managing containerized applications: We will cover the essentials of managing containerized applications, including monitoring and scaling containers, updating application versions, and troubleshooting common issues.

- Securing Dockerized applications: Security is a crucial aspect of any application deployment. We will discuss best practices for securing Docker containers and protecting your Linux applications from potential vulnerabilities.
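
The layers mentioned in the first item of the list above can be inspected directly: `docker image inspect <image>` prints a JSON array whose entries include a `RootFS.Layers` list of layer digests. The sketch below parses that JSON; the function name `layer_digests` is hypothetical, and the sample input uses made-up placeholder digests so the example runs without Docker.

```python
import json


def layer_digests(inspect_output):
    """Return the layer digests from `docker image inspect` JSON output."""
    data = json.loads(inspect_output)
    if not data:
        return []
    return data[0].get("RootFS", {}).get("Layers", [])


# Placeholder data shaped like real output; the digests are not real.
sample = json.dumps([
    {"RootFS": {"Type": "layers", "Layers": ["sha256:aaa", "sha256:bbb"]}}
])
print(len(layer_digests(sample)), "layers")
```

Images that share a base image share its layer digests, which is why pulling a second image built on the same base usually transfers less data.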

By the end of this tutorial, you will have a solid understanding of how to leverage Docker and containerization technology to package and deploy Linux applications. You will be equipped with the necessary knowledge to streamline your application deployment process and ensure scalability, efficiency, and security.

Managing Containers in the Linux Environment

Container management plays a crucial role in the efficient utilization of resources in the Linux environment. This section will explore various strategies and techniques for managing containers, optimizing their performance, and ensuring their reliability and security.

| Section | Description |
|---|---|
| Container Orchestration | Discover how container orchestration platforms, such as Kubernetes and Docker Swarm, simplify the deployment, scaling, and management of containers across a cluster of Linux hosts. |
| Container Monitoring | Learn about the importance of monitoring container metrics and logs to identify performance bottlenecks, troubleshoot issues, and ensure the smooth operation of containerized applications. |
| Container Networking | Explore different networking options available for containers in Linux, including bridge networks, overlay networks, and host networks. Understand how to create and manage network connections between containers and external networks. |
| Container Storage | Discover various storage solutions and techniques for managing persistent data in containers. Learn about volume management, storage drivers, and container-specific storage services. |
| Container Security | Explore best practices for securing containers in the Linux environment. Understand the importance of container isolation, image security scanning, and implementing access control measures. Ensure the integrity and confidentiality of your containerized applications. |
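
As an illustration of the networking and storage rows in the table, the sketch below assembles the commands for creating a user-defined bridge network and a named volume, then starting a container attached to both. The helper name and the names `appnet`, `appdata`, `myapp:1.0`, and `/var/lib/app` are hypothetical; the subcommands and flags are standard Docker CLI options.

```python
import shlex


def network_and_volume_commands(network, volume, image, mount_target):
    """Return the Docker commands for a bridge network, a volume, and a container."""
    return [
        # User-defined bridge network for container-to-container traffic.
        ["docker", "network", "create", "--driver", "bridge", network],
        # Named volume for data that should outlive the container.
        ["docker", "volume", "create", volume],
        # Container attached to the network with the volume mounted.
        ["docker", "run", "--detach", "--network", network,
         "--mount", f"type=volume,source={volume},target={mount_target}",
         image],
    ]


# Hypothetical names; the commands are printed, not executed.
for cmd in network_and_volume_commands("appnet", "appdata", "myapp:1.0", "/var/lib/app"):
    print(shlex.join(cmd))
```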

By mastering the management techniques discussed in this section, developers and system administrators can effectively leverage the power of containers in the Linux environment, enabling seamless deployments, efficient resource utilization, and improved application performance.

FAQ

What is the purpose of the article "Connecting Docker Linux Code — RO"?

The purpose of the article "Connecting Docker Linux Code — RO" is to provide information and guidelines on how to connect Docker containers running on Linux to read-only code.

Why is it important to mount code read-only in Docker containers on Linux?

Mounting code read-only improves the security and stability of containers. It prevents processes inside the container from modifying the code and reduces the risk of accidental changes or deletions.

How can I connect Docker containers to read-only code on Linux?

To connect Docker containers to read-only code on Linux, you can mount the code into the container as a read-only volume or bind mount. You can also pass the "--read-only" flag to "docker run", which makes the container's root filesystem read-only. Paths that must remain writable, such as temporary directories, can then be provided with "--tmpfs" or a separate writable volume.
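
The read-only options can be combined in one `docker run` command. The sketch below only builds the argument list and is an illustration rather than a definitive setup: the helper `read_only_run_args`, the host path `/srv/code`, the target `/app`, and the image `myapp:1.0` are hypothetical. It uses `--read-only` for the root filesystem, `--tmpfs` for a writable scratch directory, and a bind mount with the `readonly` option for the code.

```python
import os
import shlex


def read_only_run_args(image, code_dir, target="/app", tmpfs=("/tmp",)):
    """Build a `docker run` argument list that mounts code read-only."""
    source = os.path.abspath(code_dir)            # bind mounts need absolute paths
    args = ["docker", "run", "--rm", "--read-only"]   # read-only root filesystem
    for path in tmpfs:
        args += ["--tmpfs", path]                 # in-memory writable directory
    # Bind-mount the code directory; `readonly` blocks writes from the container.
    args += ["--mount", f"type=bind,source={source},target={target},readonly"]
    args.append(image)
    return args


# Hypothetical paths and image; the command is printed, not executed.
print(shlex.join(read_only_run_args("myapp:1.0", "/srv/code")))
```

The shorter volume syntax `-v /srv/code:/app:ro` expresses the same read-only bind mount.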

What are the advantages of using read-only code in Docker containers?

Using read-only code in Docker containers offers increased security by preventing modifications to the code, improved stability by eliminating accidental changes or deletions, and better reproducibility of containerized applications.

Are there any potential drawbacks or limitations to using read-only code in Docker containers?

While read-only code offers several benefits, it limits the ability to make runtime changes or store application data inside the container. Applications that write logs, caches, or temporary files need a writable location, so plan for the application's requirements in advance to ensure proper functionality.

What is Docker Linux Code — RO?

It is not a separate tool. The name refers to the topic of this article: connecting Docker containers running on a Linux operating system to code that is mounted read-only (RO).