Imagine a world where operating systems can seamlessly communicate and collaborate, transcending the boundaries of their individual environments. In this realm, Windows and Linux work together in perfect harmony, exchanging data and resources effortlessly, fostering a truly integrated ecosystem.

This article looks at how to enable that dialogue between Windows and Linux, covering the underlying concepts and the configuration each side requires. Along the way, we explore the interoperability principles and techniques that matter most to developers and system administrators.

By establishing a bridge between Windows and Linux, we open the floodgates to a wealth of opportunities, allowing for the free flow of ideas, functionalities, and capabilities. Gone are the days of isolation and restricted collaboration, as this connectivity presents a new frontier where two titans merge their powers.

Spanning the Divide: This article uncovers the mechanisms and strategies to configure communication channels between Windows and Linux. We navigate through the complexities of this integration process, exploring the usage of various tools, techniques, and protocols that pave the way for a harmonious coexistence.

So, join us on this journey as we embark on a quest to unleash the true potential of Windows and Linux interoperability. Together, let us discover the art of bridging the gap and creating a seamless and efficient collaboration between these two giants of the operating system world.

Understanding the Fundamentals of Containerization Technology

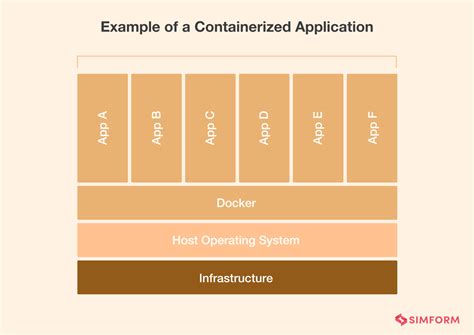

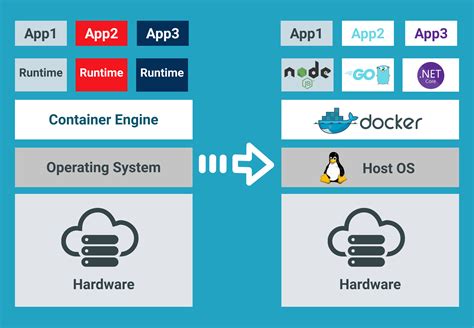

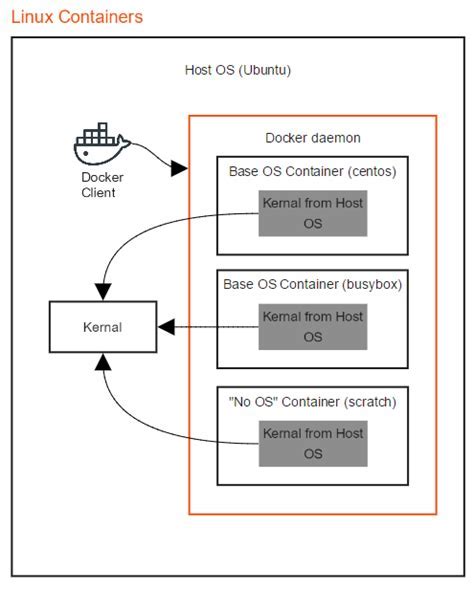

The concept of containerization has revolutionized software development and deployment by providing a lightweight, isolated environment for running applications. This technology enables the packaging of an application along with its dependencies in a single, self-contained unit known as a container. Containers are designed to be portable and run consistently across different computing environments.

One of the key benefits of containerization is its ability to abstract the underlying infrastructure and operating system, allowing applications to run seamlessly on diverse platforms, including Windows, Linux, and macOS. By leveraging containerization, developers and system administrators can ensure consistent behavior of their applications across various environments without the need to worry about the specific configurations and dependencies of each host system.

| Advantage of containerization | Description |
|---|---|
| 1. Isolation | Containers provide encapsulation, preventing conflicts with other applications and dependencies. |
| 2. Portability | Containers can easily be moved between different hosts and environments, providing flexibility and scalability. |
| 3. Consistency | Applications running in containers behave consistently regardless of the underlying host system. |

Containerization technology is implemented through container engines or runtimes, with Docker being one of the most popular and widely used solutions. Docker simplifies the process of creating, deploying, and managing containers by providing a user-friendly interface and comprehensive toolset.

With a solid understanding of the fundamentals of containerization, you'll be well-equipped to explore the specific configuration and communication aspects of Docker containers in a Windows environment.

Exploring the Advantages of Utilizing Docker in a Windows Environment

In this section, we will delve into the various benefits that arise from harnessing the power of Docker within a Windows operating system. By leveraging the capabilities of Docker, users can unlock a range of advantages that enhance development processes, increase efficiency, and foster collaboration.

Enhanced Portability: Docker allows for the creation of containers that encapsulate all the necessary dependencies, libraries, and configurations required to run an application. This portability ensures that the application can be easily deployed and executed across different environments, irrespective of the underlying infrastructure.

Efficient Resource Management: Docker provides a lightweight and isolated runtime environment, enabling the allocation of specific resources to each container. This eliminates conflicts between applications and optimizes resource utilization, resulting in improved performance and scalability.

Rapid Deployment and Testing: Leveraging Docker's ability to create consistent and reproducible environments, developers can quickly deploy applications on various machines for testing and validation purposes. This accelerated deployment process promotes faster iteration cycles and enables software teams to identify and resolve issues more efficiently.

Isolation and Security: Docker containers offer a higher level of isolation by segregating applications and their dependencies, minimizing the risk of interference and potential security breaches. By encapsulating applications within containers, Docker provides an additional layer of protection against unauthorized access and potential vulnerabilities.

Collaboration and Scalability: Docker's image-based approach allows for easy sharing and distribution of applications, fostering collaboration among development teams. Furthermore, the scalability of Docker enables applications to be seamlessly deployed and scaled across multiple instances, facilitating the growth and expansion of projects.

Improved Developer Productivity: With Docker, developers can focus on coding and building applications rather than spending valuable time on complex environment setup and configuration. The encapsulation of dependencies and pre-configured environments significantly reduces the time required to set up development environments, increasing overall productivity.

By harnessing the manifold benefits of Docker within a Windows environment, organizations and developers can optimize their workflows, simplify application deployment, enhance collaboration, and ultimately deliver high-quality software solutions efficiently.

Configuring Docker for Windows

In this section, we will explore the necessary steps to set up Docker for Windows, allowing seamless communication between different environments and enabling efficient containerization of applications.

First, it is crucial to establish a proper configuration for Docker in the Windows operating system. By configuring the settings, you can optimize the performance and ensure compatibility with your requirements. This process involves adjusting various parameters and options to create an environment that facilitates the smooth operation of Docker containers.

One key aspect of configuring Docker for Windows is determining the resource allocation for the containers. By allocating appropriate resources, such as CPU and memory, you can ensure that the containers have enough capacity to run efficiently without impacting the overall performance of the host system. This step is crucial in maintaining a balance between the needs of the containerized applications and the availability of resources on the Windows machine.
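As a rough illustration of per-container resource allocation, the sketch below builds a `docker run` command that uses the `--cpus` and `--memory` flags. The helper name `build_run_command` and the image name are hypothetical, chosen only for this example, and the function merely assembles the argument list so that it can be inspected without Docker being installed.

```python
from typing import List, Optional


def build_run_command(image: str,
                      cpus: Optional[float] = None,
                      memory: Optional[str] = None) -> List[str]:
    """Build a `docker run` argument list with optional resource limits.

    `build_run_command` is a hypothetical helper for illustration only.
    """
    command = ["docker", "run", "--rm"]
    if cpus is not None:
        # --cpus limits how much CPU the container may use (e.g. 1.5 CPUs).
        command += ["--cpus", str(cpus)]
    if memory is not None:
        # --memory caps the container's RAM (e.g. "512m" or "2g").
        command += ["--memory", memory]
    command.append(image)
    return command


if __name__ == "__main__":
    # Print the command rather than running it, so no Docker install is needed.
    print(" ".join(build_run_command("nginx:latest", cpus=1.5, memory="512m")))
```

The resulting list can be passed to `subprocess.run` once Docker is available. These flags limit individual containers; the total resources available to Docker Desktop itself are configured separately in its settings.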

Moreover, it is essential to consider networking configuration in Docker for Windows. This involves establishing connectivity between containers and external systems, enabling seamless communication between different parts of your application architecture. By configuring the networking options, you can create isolated environments for your containers while allowing them to interact with other components as needed.

Additionally, ensuring security is paramount when configuring Docker for Windows. By implementing appropriate security measures, such as setting up user permissions and restricting access to sensitive data, you can protect your containers and the host system from potential threats. This step is crucial in creating a secure and reliable environment for containerized applications.

In conclusion, configuring Docker for Windows is a crucial step in establishing a seamless and efficient containerization environment. By adjusting resource allocation, networking settings, and security measures, you can create a stable and secure platform for running your applications, promoting efficient communication and collaboration between different components of your system.

Installing Docker on a Windows Machine

In this section, we will explore the process of setting up and installing Docker on a Windows machine to enable containerization of applications. Containerization allows for the efficient and isolated running of applications, enabling developers to package their applications with all the necessary components and dependencies.

Check system requirements: Before installing Docker, ensure that your Windows machine meets the necessary hardware and software requirements. This includes a supported 64-bit edition of Windows, sufficient memory and storage, and hardware virtualization enabled in the firmware (BIOS/UEFI), since Docker Desktop runs Linux containers through either the WSL 2 or the Hyper-V backend.

Download Docker: Visit the Docker website and download the Docker Desktop for Windows installer. Ensure that you choose the appropriate version for your operating system architecture.

Install Docker: Once the installer is downloaded, run it and follow the on-screen instructions to complete the installation. During the installation process, Docker will set up various components, including the Docker Engine, Docker CLI, and the Docker Compose tool for managing multi-container applications.

Configure Docker settings: After the installation is complete, launch Docker Desktop and access its settings. Here, you can customize various aspects of Docker, such as resource allocation, network settings, and integration with other tools and platforms.

Verify installation: To ensure Docker is properly installed and functioning, open a command prompt and run `docker version`. This command will display the installed version and various information about the Docker installation.
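If you would rather script the verification step, a minimal sketch along the following lines may help. It assumes the Docker CLI is on the `PATH` under the name `docker`; the function name `docker_client_version` is hypothetical. It relies on the `--format` option of `docker version` to print only the client version.

```python
import shutil
import subprocess
from typing import Optional


def docker_client_version(executable: str = "docker") -> Optional[str]:
    """Return the Docker client version string, or None if unavailable.

    Hypothetical helper: it only wraps the `docker version` CLI call.
    """
    if shutil.which(executable) is None:
        return None  # the CLI is not installed or not on PATH
    try:
        result = subprocess.run(
            [executable, "version", "--format", "{{.Client.Version}}"],
            capture_output=True,
            text=True,
            timeout=15,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    version = result.stdout.strip()
    return version or None
```

Calling `docker_client_version()` returns a version string when the CLI responds and `None` otherwise, which makes it easy to use as a precondition check in setup scripts.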

By following these steps, you will be able to successfully install Docker on your Windows machine, allowing you to leverage the power of containerization for your development and deployment processes.

Configuring Docker to establish connection with an external Linux server

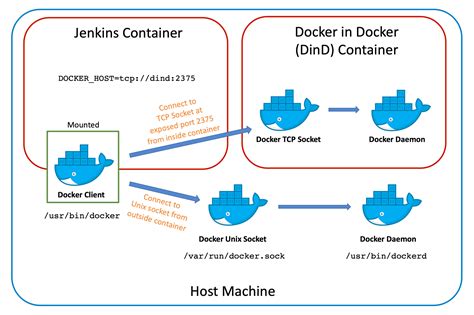

In this section, we will explore the process of setting up the necessary configuration to establish communication between Docker, running on a Windows environment, and a remote Linux server. Our primary aim is to enable seamless interaction between the two entities by configuring the appropriate networking settings.

Establishing basic connectivity: Before we can configure anything else, it is crucial to confirm that the Windows machine running Docker can actually reach the external Linux host over the network. This involves checking the network settings on both sides to ensure smooth and uninterrupted communication.

Networking considerations: Here, we will delve into the various networking considerations that need to be taken into account when configuring Docker to communicate with an external Linux server. From understanding IP addresses and subnets to dealing with port mappings and network modes, we will cover all aspects to ensure a robust connection.

Securing the connection: Additionally, it is imperative to prioritize security when configuring Docker to communicate with an external Linux host. We will explore the implementation of secure protocols and authentication mechanisms to protect our communication channels from potential threats.

Optimizing performance: Finally, we will discuss strategies for optimizing the performance of the connection between Docker and the external Linux server. By fine-tuning various parameters and leveraging container orchestration tools, we can ensure efficient and speedy communication for our application.
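One common way to connect the Docker CLI on Windows to a Docker daemon on a remote Linux server is the `DOCKER_HOST` environment variable with an `ssh://` URL. The sketch below only prepares such an environment; the function name, user name, and address are hypothetical placeholders, and it assumes SSH access to the server is already working.

```python
import os
from typing import Dict, Optional


def remote_docker_env(user: str, host: str,
                      port: Optional[int] = None) -> Dict[str, str]:
    """Copy the current environment and point DOCKER_HOST at a remote daemon.

    Hypothetical helper; `user` and `host` are placeholders for your server.
    """
    target = f"ssh://{user}@{host}"
    if port is not None:
        # Only needed when sshd listens on a non-default port.
        target += f":{port}"
    env = dict(os.environ)  # copy, so the calling process is not modified
    env["DOCKER_HOST"] = target
    return env
```

The returned mapping can be passed as `env=` to `subprocess.run(["docker", "ps"], env=...)`, in which case the command lists containers on the Linux server rather than on the local machine.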

Setting up connectivity with an outside Linux system

In order to establish seamless communication between your Windows environment and an external Linux host, a configuration process must be carried out. This allows for the secure and efficient transfer of data and resources across different operating systems.

Firstly, it is essential to configure the network settings on both the Windows and Linux systems. This involves ensuring that the IP addresses are properly assigned and that the network connection is enabled. By establishing a network connection, you can enable the exchange of information between the two systems.
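As a quick sanity check on the addressing, the following sketch uses Python's standard `ipaddress` module to test whether two addresses fall inside the same network. The addresses and the `same_subnet` name are hypothetical examples. Hosts on different subnets can still communicate if routing is in place, so treat a negative result as a hint rather than a verdict.

```python
import ipaddress


def same_subnet(address_a: str, address_b: str, network: str) -> bool:
    """Return True if both addresses belong to the given network (CIDR form)."""
    # strict=False tolerates a host address such as "192.168.1.10/24".
    net = ipaddress.ip_network(network, strict=False)
    return (ipaddress.ip_address(address_a) in net
            and ipaddress.ip_address(address_b) in net)


if __name__ == "__main__":
    # Hypothetical Windows and Linux addresses on a home or office LAN.
    print(same_subnet("192.168.1.10", "192.168.1.20", "192.168.1.0/24"))
```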

Next, you need to set up the necessary protocols that will facilitate communication. One commonly used protocol is SSH (Secure Shell), which provides a secure channel for remotely accessing a Linux system. By configuring SSH, you can establish a secure connection between the Windows host and the external Linux system, allowing for remote management and file transfer.

Once the protocols are set up, it is vital to configure the firewall settings on both systems. Firewalls serve as a protective barrier, filtering incoming and outgoing network traffic. By configuring the firewalls on both the Windows and Linux systems to allow the necessary communication ports and protocols, you can ensure that the connection between the two systems remains secure and unhindered.

Lastly, it is crucial to verify the connectivity between the Windows and Linux systems after the configuration process. This can be done by testing the connection using various tools and commands, such as ping or SSH. Verifying the connectivity ensures that the communication has been successfully established and allows for any troubleshooting or further configuration if necessary.
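Besides `ping` and an interactive SSH session, connectivity can be verified programmatically by attempting a TCP connection to the port of interest. The sketch below is one minimal way to do that with the standard library; the function name `can_connect` is hypothetical, and port 22 is assumed only because it is the default SSH port.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host name and opens the socket.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False
```

For example, `can_connect("linux-host.example", 22)` (a placeholder host name) indicates whether the SSH port is reachable. A `False` result usually points to a firewall rule, a stopped service, or a routing problem.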

Configuring the Linux host for establishing Docker connectivity

In this section, we will explore the steps required to configure the Linux host in order to establish a seamless connection with the appropriate software and tools used for Docker management and deployment.

First and foremost, it is important to ensure that the Linux host is equipped with all the necessary dependencies and software packages. The installation of these components will enable the system to effectively communicate with the external environment and facilitate the smooth functioning of Docker containers.

Once the dependencies are installed, the next step involves configuring the essential network settings on the Linux host. This includes assigning a suitable IP address, subnet mask, and gateway that are compatible with the Windows machine and the surrounding network infrastructure. These network settings will facilitate the establishment of a reliable and secure communication channel for Docker operations.

In addition to the network settings, proper configuration of the firewall and security measures on the Linux host is crucial. By configuring the firewall rules and enabling the necessary ports, we can ensure that Docker traffic can flow freely between the Linux host and the external environment without any hindrance.

Furthermore, it is important to consider other factors such as optimizing network performance, enabling network packet forwarding, and configuring DNS settings on the Linux host. These measures will enhance overall connectivity and enable Docker containers to effectively interact with external services and resources.
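To illustrate the packet forwarding point, the sketch below reads the kernel setting that Linux exposes at `/proc/sys/net/ipv4/ip_forward`, where a value of `1` means IPv4 forwarding is enabled. The function name is hypothetical, and the path parameter exists only so the check can be pointed at a different file for testing.

```python
from pathlib import Path


def ip_forwarding_enabled(path: str = "/proc/sys/net/ipv4/ip_forward") -> bool:
    """Return True if the kernel reports IPv4 forwarding as enabled."""
    try:
        # The file contains "1" when forwarding is on and "0" when it is off.
        return Path(path).read_text().strip() == "1"
    except OSError:
        # The file is missing or unreadable (for example, on a non-Linux system).
        return False


if __name__ == "__main__":
    print("IPv4 forwarding enabled:", ip_forwarding_enabled())
```

Run on the Linux host, this gives a quick read-only check before troubleshooting container traffic that fails to reach external services.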

In conclusion, by carefully configuring the Linux host and ensuring proper network settings, security measures, and other necessary configurations, we can establish a robust and seamless connectivity environment for Docker operations.

Establishing a secure connection between Windows and Linux

In this section, we will explore the process of establishing a safe and secure connection between a Windows operating system and a Linux environment.

Ensuring a reliable and protected connection between Windows and Linux is crucial for various reasons, such as efficient data transfer, secure remote access, and seamless collaborative work. To achieve this, we need to implement robust security mechanisms that guarantee the confidentiality, integrity, and authenticity of the communication.

One common approach to establishing a secure connection between Windows and Linux is through the implementation of secure shell (SSH) protocols. SSH provides a secure channel over an unsecured network, allowing us to exchange information between the Windows and Linux systems in a protected manner. With SSH, we can establish encrypted connections, authenticate users, and securely transfer data between the two platforms.

To enable SSH communication between Windows and Linux, we need to configure the necessary SSH server and client components on each respective system. On the Linux side, we typically find OpenSSH, a widely used implementation of the SSH protocol suite. On the Windows side, there are several SSH client options available, such as PuTTY or the OpenSSH client included with current versions of Windows 10 and Windows 11, which can be run from PowerShell or the command prompt.

Once the SSH components are set up on both ends, we can secure the connection with key-based authentication: a key pair is generated on the Windows machine, and the public key is added to the authorized keys of the target user on the Linux system, while the private key never leaves Windows. This ensures that only holders of the private key can authenticate, and SSH's encryption prevents unauthorized interception or tampering of data. With a secure connection established, we can confidently and securely transfer files, run remote commands, or perform other tasks between Windows and Linux environments.
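Key pairs for SSH are commonly created with `ssh-keygen` (for example `ssh-keygen -t ed25519`), and the public key is appended to `~/.ssh/authorized_keys` on the Linux side. To avoid retyping the connection details afterwards, many people add an entry to the SSH client configuration file. The sketch below renders such an entry; the alias, address, user, key path, and function name are all hypothetical placeholders.

```python
def ssh_config_entry(alias: str, hostname: str, user: str,
                     identity_file: str) -> str:
    """Render a Host block suitable for an OpenSSH client config file."""
    lines = [
        f"Host {alias}",
        f"    HostName {hostname}",
        f"    User {user}",
        f"    IdentityFile {identity_file}",
        # Offer only the key named above instead of every key in the agent.
        "    IdentitiesOnly yes",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder values; replace them with your own host and key.
    print(ssh_config_entry("linux-docker", "192.0.2.10", "deploy",
                           "~/.ssh/id_ed25519"))
```

With an entry like this saved in the client's `config` file, `ssh linux-docker` connects using the configured user and key.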

In summary, establishing a secure connection between a Windows operating system and a Linux environment is crucial for efficient and protected communication. By implementing SSH protocols and configuring the necessary components on both ends, we can ensure the confidentiality, integrity, and authenticity of the data exchanged between the two platforms.

FAQ

What is the purpose of Docker in Windows?

The purpose of Docker in Windows is to provide a platform for running and managing containerized applications. It allows developers to package their applications and their dependencies into isolated containers, ensuring consistent and predictable execution across different environments.

How can I configure communication between Docker in Windows and an external Linux host?

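To configure communication between Docker in Windows and an external Linux host, first make sure the Windows machine can reach the Linux host over the network, for example on the same LAN or through a routed or VPN connection, and that the firewalls on both sides allow the ports you need. Containers running on the Windows machine can then typically reach services on the Linux host by its IP address or hostname. Note that a Docker bridge network exists only on a single host and cannot span two machines. To manage a Docker daemon running on the Linux host from Windows, point the Docker CLI at it over SSH, for example with a Docker context or the DOCKER_HOST environment variable.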

What are the benefits of using Docker in Windows with an external Linux host?

Using Docker in Windows with an external Linux host offers several benefits. Firstly, it allows developers to utilize the Windows operating system for development while leveraging the power and stability of Linux for hosting containers. Additionally, it provides flexibility in terms of hosting options, as containers can be deployed on both Windows and Linux hosts in a hybrid environment.

Can I use Docker in Windows with multiple external Linux hosts?

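Yes, you can use Docker in Windows with multiple external Linux hosts. Each host needs to be reachable over the network, and you can define a separate Docker context for each one so that the Docker CLI on Windows can switch between them. If containers on different hosts need to share a network, bridge networks are not enough because they are local to one host; an overlay network, such as the one provided by Docker Swarm mode, is designed for that purpose. This allows for distributed deployment scenarios and load balancing across multiple hosts.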
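One way to address several Linux hosts from a single Windows machine is to register a Docker context per host with `docker context create`. The sketch below only generates the commands; the context names, SSH targets, and function name are hypothetical, and it assumes each host is reachable over SSH.

```python
from typing import Dict, List


def context_create_commands(hosts: Dict[str, str]) -> List[List[str]]:
    """Build one `docker context create` command per (name, SSH target) pair.

    Hypothetical helper: `hosts` maps a context name to "user@host".
    """
    commands = []
    for name, ssh_target in sorted(hosts.items()):
        commands.append([
            "docker", "context", "create", name,
            "--docker", f"host=ssh://{ssh_target}",
        ])
    return commands


if __name__ == "__main__":
    # Placeholder hosts; print the commands instead of executing them.
    example = {"staging": "deploy@192.0.2.10", "prod": "deploy@192.0.2.20"}
    for command in context_create_commands(example):
        print(" ".join(command))
```

After the contexts exist, `docker --context staging ps` runs a command against one host, and `docker context use staging` makes that host the default target.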