Efficient, repeatable workflows matter to any business that ships software, and containerization has changed how applications are developed and deployed. Windows is one of the platforms that supports this model, giving developers and operators tooling for building and running containers.

Containerization is often mentioned alongside virtualization, isolation, and encapsulation. The terms are related rather than interchangeable: a container packages an application and its dependencies into a self-contained unit that runs in isolation and behaves the same way across environments. Windows supports containerized applications with an emphasis on scalability, security, and flexibility.

Lightweight, portable containers help developers keep applications consistent and reliable regardless of the underlying infrastructure. Windows, long known for its widespread adoption, now supports container technology natively, so developers can use it to build and ship their solutions.

The Enduring Influence of Containerization in Continuous Execution of Commands within the Windows Environment

The "docker run" command is a flexible and efficient way to execute commands within the Windows operating system. It uses containerization to provide an isolated and persistent environment for executing commands and running applications. Examining this approach shows how containerization has changed the way tasks are performed within the Windows ecosystem.

Understanding the fundamentals of executing containerized applications on the Windows operating system

In this section, we explore the core concepts behind running containerized applications on the Windows platform. We keep terminology to a minimum while looking at the mechanics of running containers and the key components involved.

Analyzing the Container Execution Process

First, let's examine how containerized applications are executed on the Windows operating system. We will break the process down into several fundamental steps and give an overview of each one without diving into technical jargon.

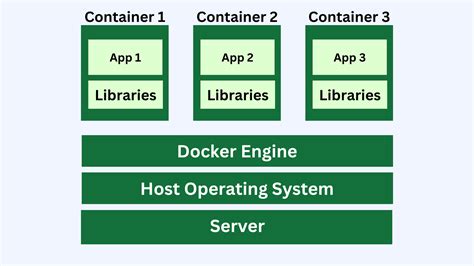

The container execution process on Windows involves several key stages, including setting up the necessary runtime environment, initializing the container image, and executing the desired application within the isolated container environment. By comprehending these steps, one can gain a better understanding of how container instances are managed and provisioned on Windows systems.
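The stages above can be sketched as a sequence of Docker CLI calls. This is only a rough mapping, not a description of what the engine does internally: `docker pull` obtains the image, `docker create` prepares an isolated container from it, and `docker start` runs the application. The image name, the container name `demo`, and the helper `lifecycle_commands` are illustrative assumptions.

```python
from typing import List


def lifecycle_commands(image: str, name: str, command: List[str]) -> List[List[str]]:
    """Return the docker CLI calls for the three stages, in order."""
    return [
        # Stage 1: make sure the image is available locally.
        ["docker", "pull", image],
        # Stage 2: prepare an isolated container from the image.
        ["docker", "create", "--name", name, image, *command],
        # Stage 3: start the container and attach to its output.
        ["docker", "start", "--attach", name],
    ]


# Illustrative values; any Windows base image would work the same way.
steps = lifecycle_commands(
    "mcr.microsoft.com/windows/nanoserver:ltsc2022",
    "demo",
    ["cmd", "/c", "echo", "hello"],
)
for argv in steps:
    print(" ".join(argv))
```

In everyday use, `docker run` performs these stages in a single step; splitting them apart here only makes each stage visible.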

Key Components Involved in Container Execution

Various elements play crucial roles in the execution of containerized applications. Understanding each component makes the mechanics of the "docker run" process on Windows easier to follow.

Among the essential components involved are the container runtime, container images, and the container orchestrator. While these terms may sound technical, we will explore their significance in a simplified manner, shedding light on their individual contributions to the successful execution of containerized applications.

Exploring the Advantages of Utilizing Docker in Windows Tasks

In today's rapidly evolving technological landscape, businesses are constantly searching for innovative solutions to optimize their workflows and maximize efficiency. This has led to the rise of containerization technologies like Docker, which offer a wide array of advantages for various operating systems, including Windows.

| Enhanced Efficiency | Streamlined Deployment | Improved Scalability |
|---|---|---|
| By employing Docker within Windows tasks, organizations can achieve enhanced efficiency through the isolation and lightweight nature of containers. Their ability to package applications and their dependencies into discrete units enables streamlined deployment and reduces the need for extensive configuration. | With Docker, organizations can simplify the deployment process of Windows tasks by encapsulating all necessary components within a container. This reduces the risk of compatibility issues and allows for consistent deployment across different environments. | The scalability of applications can be easily managed when using Docker in Windows tasks. The containerized approach allows for horizontal scaling, enabling organizations to increase or decrease the number of instances to meet the demands of their workload. |

Additionally, Docker facilitates easier collaboration and portability of Windows tasks. By encapsulating the application and its dependencies within a container, developers can share the container image, ensuring consistent execution across different development environments. This portability also enables simplified migration and maintenance of applications, reducing downtime and enhancing overall efficiency.

In conclusion, the utilization of Docker in Windows tasks brings numerous benefits to organizations, including enhanced efficiency, streamlined deployment, improved scalability, and simplified collaboration and portability. By utilizing these advantages, businesses can optimize their workflows, reduce operational complexities, and pave the way for future growth and innovation.

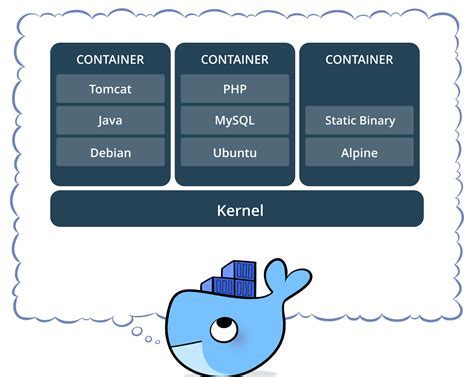

Docker vs traditional virtualization: A comparison in the Windows environment

In this section, we will compare Docker containers with traditional virtualization methods in the context of a Windows environment. We will explore the fundamental differences and examine their respective advantages and drawbacks.

- Resource Efficiency: Docker containers utilize shared operating system resources, allowing for higher resource utilization compared to traditional virtualization solutions that require dedicated resources for each virtual machine.

- Start-up Time: Docker containers can be started almost instantly due to their lightweight nature, whereas traditional virtual machines may require significant time for booting up an entire operating system.

- Isolation: Docker containers employ OS-level virtualization, enabling application-level isolation without the need for a full operating system. Traditional virtualization, on the other hand, provides complete isolation by emulating hardware, resulting in stronger isolation but also increased overhead.

- Portability: Docker containers are highly portable and can be moved between systems with little effort, provided the host can run the image's operating system and version. This makes them convenient for development and deployment. Traditional virtual machines may require additional configuration adjustments when migrating between different host environments.

- Management: Docker offers a more streamlined management process through its Dockerfile and Docker Compose tools, allowing for automatic deployment and scaling of containers. Traditional virtualization often requires manual configurations and management of virtual machines.

Overall, while traditional virtualization provides stronger isolation and a more comprehensive virtualization environment, Docker containers offer higher resource efficiency, faster start-up times, easier portability, and simpler management. The choice between the two approaches depends on the specific requirements and priorities of the Windows environment.

A Step-by-Step Guide: Embracing Docker Containerization in Windows Workflows

In this section, we will provide a comprehensive walkthrough on how to seamlessly integrate Docker containerization into your Windows operations. By adopting this approach, you can harness the capabilities of containerization technology to streamline and enhance your workflow.

Understanding the Power of Containerization:

Containerization enables the isolation and organization of applications and their dependencies within lightweight virtual environments. By leveraging containers, you can achieve consistent and reproducible deployments, ensure application portability, and enhance overall resource utilization.

Setting Up the Environment:

Before running Docker containers in your Windows tasks, certain prerequisites need to be fulfilled. These may include installing Docker Engine, ensuring compatibility with Windows, and configuring network settings. We will guide you through each step with detailed explanations and clear instructions.
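One prerequisite worth checking from a script is whether the Docker daemon is reachable and which container type it is currently set up to run. The sketch below assumes the `docker` CLI is on the PATH and uses `docker info --format "{{.OSType}}"`, which reports values such as `windows` or `linux`. The helper names `daemon_os_type` and `_cli_runner` are hypothetical, and the runner is injectable so the logic can be exercised without Docker installed.

```python
import subprocess
from typing import Callable, List, Optional

Runner = Callable[[List[str]], str]


def _cli_runner(argv: List[str]) -> str:
    """Run a command and return its standard output as text."""
    completed = subprocess.run(argv, capture_output=True, text=True, check=True)
    return completed.stdout


def daemon_os_type(runner: Runner = _cli_runner) -> Optional[str]:
    """Return the daemon's container OS type, or None if Docker is unavailable."""
    try:
        output = runner(["docker", "info", "--format", "{{.OSType}}"])
    except (OSError, subprocess.CalledProcessError):
        # Covers a missing docker executable and a daemon that is not running.
        return None
    return output.strip().lower() or None


# Dry run with a stubbed runner instead of a real Docker installation.
print(daemon_os_type(lambda argv: "windows\n"))
```

Calling `daemon_os_type()` with no arguments queries the real daemon; a result of `None` suggests Docker is not installed or not running.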

Creating a Docker Container:

Once the environment is prepared, you can proceed with creating and configuring your Docker container. This involves defining the desired image, specifying resource allocation, and configuring network connectivity. We will walk you through the necessary steps, ensuring a seamless setup process.
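As a minimal sketch of that configuration step, the settings mentioned above (image, resource allocation, network connectivity) can be collected in one place and turned into a `docker run` argument list. The `ContainerSpec` class and its field names are hypothetical; the flags it emits (`--name`, `--network`, `--memory`, `--cpus`, `--publish`, `--detach`) are standard `docker run` options.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ContainerSpec:
    image: str
    name: str
    network: Optional[str] = None          # name of an existing Docker network
    memory: Optional[str] = None           # e.g. "512m"
    cpus: Optional[float] = None           # e.g. 1.5
    ports: Dict[int, int] = field(default_factory=dict)  # host port -> container port

    def to_argv(self) -> List[str]:
        """Build the docker run command line for this specification."""
        argv = ["docker", "run", "--detach", "--name", self.name]
        if self.network:
            argv += ["--network", self.network]
        if self.memory:
            argv += ["--memory", self.memory]
        if self.cpus is not None:
            argv += ["--cpus", str(self.cpus)]
        for host_port, container_port in sorted(self.ports.items()):
            argv += ["--publish", f"{host_port}:{container_port}"]
        argv.append(self.image)  # the image always comes after the options
        return argv


# Illustrative values only.
spec = ContainerSpec(
    image="mcr.microsoft.com/windows/servercore:ltsc2022",
    name="web",
    network="appnet",
    memory="512m",
    cpus=1.5,
    ports={8080: 80},
)
print(" ".join(spec.to_argv()))
```

Keeping the specification separate from the command line makes it easier to review or version the configuration before anything is started.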

Running the Docker Container in Windows Tasks:

With the container properly configured, the final step is to execute it within your Windows tasks. This may involve running specific commands, automating tasks through scripts, or integrating containerized applications into existing workflows. We will provide detailed instructions and examples to empower you to effectively incorporate Docker containers into your operational processes.
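For script-driven automation, one possible pattern is a small wrapper that runs a one-off containerized command and reports whether it succeeded. The sketch below uses `docker run --rm`, which removes the container once it exits. The function names `task_argv` and `run_task` are hypothetical, and the runner is injectable so the example can be dry-run without Docker.

```python
import subprocess
from typing import Callable, List, Sequence


def task_argv(image: str, command: Sequence[str]) -> List[str]:
    """Command line for a throwaway container that runs a single command."""
    return ["docker", "run", "--rm", image, *command]


def run_task(
    image: str,
    command: Sequence[str],
    runner: Callable[[List[str]], int] = subprocess.call,
) -> bool:
    """Run the task and return True when the container exits with code 0."""
    exit_code = runner(task_argv(image, command))
    return exit_code == 0


def dry_run(argv: List[str]) -> int:
    """Stub runner: print the command instead of executing it."""
    print("would run:", " ".join(argv))
    return 0


ok = run_task("mcr.microsoft.com/windows/nanoserver:ltsc2022", ["cmd", "/c", "ver"], runner=dry_run)
print("succeeded:", ok)
```

With the default runner, `run_task` invokes the real Docker CLI, so a scheduler or script can branch on the boolean result.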

Understanding Key Considerations:

Throughout the guide, we will address common challenges and considerations that may arise when working with Docker containers in Windows tasks. This includes managing storage, networking, and security aspects, as well as troubleshooting potential issues. By being aware of these factors, you can optimize your containerized workflows and ensure smooth operations.

Unlock the Potential: Transform Your Windows Tasks with Docker Containers

By following this step-by-step guide, you will gain the knowledge and skills to use Docker containerization in your Windows tasks. Containerization can make your workflows more flexible, scalable, and efficient.

Optimizing Docker Performance on the Windows Platform: Key Recommendations

In this section, we will explore essential guidelines for enhancing the efficiency and productivity of Docker containers when utilizing the Windows operating system. By following these best practices, users can ensure smoother execution, improved resource utilization, and enhanced overall performance of their containerized applications.

1. Container Image Optimization: To enhance the performance of Docker containers on Windows, it is important to optimize the container images by reducing their size. This can be achieved by minimizing the number of unnecessary layers, removing unused dependencies, and utilizing lightweight base images. By keeping the container image size to a minimum, users can significantly reduce startup time and improve resource utilization.

2. Host OS Configuration: Optimizing the underlying Windows host operating system can have a direct impact on the performance of Docker containers. Users should ensure that host machines have sufficient resources, including CPU, memory, and storage. Additionally, optimizing the host OS by disabling unnecessary services and configurations can free up system resources and enhance Docker container performance.

3. Networking Best Practices: Efficient networking configurations can greatly influence the performance of Docker containers. Users should consider utilizing user-defined networks instead of relying on the default network (named "nat" on Windows hosts and "bridge" on Linux hosts), as they offer better isolation. It is also recommended to leverage Docker's built-in networking features, such as name resolution between containers on the same user-defined network and automatic IP address assignment, to simplify container communication.

4. Resource Limitations: Setting appropriate resource limits for Docker containers is crucial for maintaining optimal performance. Users should define CPU and memory limits to prevent one container from overpowering others and causing performance degradation. By effectively allocating resources, users can avoid contention and ensure stable and efficient container execution.

5. Container Monitoring and Logging: Regularly monitoring container metrics, such as CPU, memory, and network usage, can provide valuable insights into performance bottlenecks and resource utilization. Additionally, implementing robust logging mechanisms within containers helps facilitate troubleshooting and identification of potential performance issues.
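To make the resource-limit recommendation concrete, the following sketch checks that the memory limits planned for a set of containers fit within a host budget before anything is started. It assumes limits are written in the form accepted by `docker run --memory` (an integer with an optional `b`, `k`, `m`, or `g` suffix). The helpers `parse_memory` and `fits_host` are hypothetical and handle whole numbers only.

```python
from typing import Iterable

_UNITS = {"b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_memory(limit: str) -> int:
    """Convert a limit such as "512m" or "2g" into a number of bytes."""
    text = limit.strip().lower()
    if text and text[-1] in _UNITS:
        return int(text[:-1]) * _UNITS[text[-1]]
    return int(text)  # a bare number is taken as bytes


def fits_host(limits: Iterable[str], host_budget: str) -> bool:
    """True when the combined container limits stay within the host budget."""
    total = sum(parse_memory(limit) for limit in limits)
    return total <= parse_memory(host_budget)


# Illustrative plan: two containers on a host with 2 GiB set aside for them.
print(fits_host(["512m", "1g"], "2g"))
print(fits_host(["2g", "1g"], "2g"))
```

A check like this catches over-commitment at planning time, before one container can starve the others.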

| Best Practice | Description |
|---|---|
| Container Image Optimization | Minimize image size and remove unnecessary dependencies |
| Host OS Configuration | Optimize host machine resources and disable unnecessary services |
| Networking Best Practices | Utilize user-defined networks and leverage Docker's networking features |
| Resource Limitations | Set appropriate CPU and memory limits for containers |
| Container Monitoring and Logging | Regularly monitor container metrics and implement robust logging mechanisms |

By adhering to these best practices, developers and system administrators can optimize the performance of Docker containers on the Windows platform, resulting in efficient resource utilization, improved application stability, and enhanced overall user experience.
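The monitoring practice can be sketched by parsing the output of `docker stats --no-stream --format "{{json .}}"`, which prints one JSON object per container. The sample lines below are made up for illustration and only include the `Name` and `CPUPerc` fields; the helper names and the 80% threshold are assumptions, and real output carries more fields.

```python
import json
from typing import Iterable, List


def cpu_percent(stats_line: str) -> float:
    """Extract the CPU percentage from one JSON line of docker stats output."""
    record = json.loads(stats_line)
    return float(record["CPUPerc"].rstrip("%"))


def busy_containers(stats_lines: Iterable[str], threshold: float) -> List[str]:
    """Names of containers whose CPU usage is above the threshold."""
    busy = []
    for line in stats_lines:
        if cpu_percent(line) > threshold:
            busy.append(json.loads(line)["Name"])
    return busy


# Hypothetical sample output, not captured from a real host.
sample = [
    '{"Name": "web", "CPUPerc": "82.50%"}',
    '{"Name": "worker", "CPUPerc": "3.10%"}',
]
print(busy_containers(sample, threshold=80.0))
```

In practice the lines would come from running the `docker stats` command on a schedule and feeding its output to `busy_containers`.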

Troubleshooting common issues with "docker run" in Windows

When working with containerization on Windows systems, it is important to be aware of the challenges that can arise when using the "docker run" command. By understanding and addressing these common issues, you can ensure smooth and efficient execution of your Docker containers.

One of the challenges that may occur is related to container initialization. Sometimes, when you run a Docker container, it may fail to initialize properly and result in errors or unexpected behavior. This can be caused by various factors, such as incorrect configuration settings, incompatible dependencies, or conflicts with other running processes. To troubleshoot this issue, it is recommended to carefully review the container's configuration and verify that all required dependencies are properly installed and compatible.

Another common issue is related to networking problems. Docker containers rely on network connectivity to communicate with other containers or external services. If a container fails to establish a connection or experiences intermittent network issues, it can disrupt the expected behavior of your application. In such cases, it is essential to check the network configuration, ensure that the required ports are open, and verify that the container has access to the necessary network resources.

Additionally, resource constraints can lead to problems when running Docker containers. If a container consumes excessive memory, CPU, or disk space, it may impact the performance or stability of other containers or the host system itself. To address this issue, it is important to monitor resource usage, optimize container configurations, and allocate appropriate resources to ensure smooth operations.

Lastly, permissions and access rights can sometimes be a source of trouble when running Docker containers on Windows. In certain scenarios, containers may fail to access required files or directories due to inadequate permissions. It is crucial to review and adjust the access rights to necessary resources, both within the container and on the host system, to avoid any permission-related conflicts.
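When a container fails to initialize, a first diagnostic step is often to look at the state recorded by `docker inspect <container>`, which prints a JSON array containing a `State` object for each container. The sketch below interprets a few of its fields (`Running`, `ExitCode`, `Error`, `OOMKilled`). The `diagnose` helper and the sample document are hypothetical, and whether `OOMKilled` is populated may depend on the platform.

```python
import json


def diagnose(inspect_json: str) -> str:
    """Summarize the State section of docker inspect output for one container."""
    state = json.loads(inspect_json)[0]["State"]
    if state.get("OOMKilled"):
        return "killed for exceeding its memory limit"
    if state.get("Error"):
        return f"failed to start: {state['Error']}"
    if state.get("Running"):
        return "running"
    return f"exited with code {state.get('ExitCode')}"


# Hypothetical, heavily trimmed inspect output.
sample_inspect = """
[{"State": {"Status": "exited", "Running": false,
            "ExitCode": 1, "Error": "", "OOMKilled": false}}]
"""
print(diagnose(sample_inspect))
```

A non-zero exit code with an empty `Error` field usually points at the application itself, in which case `docker logs <container>` is the next place to look.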

In conclusion, troubleshooting common issues with the "docker run" command in Windows involves addressing container initialization problems, resolving networking issues, managing resource constraints, and ensuring proper permissions and access rights. By understanding and resolving these challenges, you can maximize the efficiency and reliability of your Docker containers on Windows systems.

Integrating Docker with existing Windows workflows

Enhancing the efficiency and productivity of existing Windows workflows can be achieved through the seamless integration of Docker containerization technology. By incorporating Docker into established processes, organizations can unlock a myriad of benefits without disrupting their current systems and practices.

- Facilitating Resource Optimization: Docker enables the efficient utilization of hardware resources by packaging applications and their dependencies into self-contained containers. This eliminates the need for deploying and managing each application separately, reducing operational overhead and boosting overall performance.

- Streamlining Development Environments: Leveraging Docker allows developers to create consistent and reproducible environments across multiple Windows machines. By encapsulating the application and all its dependencies within a container, developers can ensure that their code is executed in the same controlled environment, regardless of the underlying host system.

- Enabling Agility and Scalability: With Docker, organizations can easily scale their applications, adapt to changing demands, and respond to market needs quickly. By leveraging containerization, businesses gain the ability to deploy, update, and roll back applications efficiently, ensuring seamless continuity and minimizing downtime.

- Enhancing Collaboration: Docker promotes better collaboration between developers, system administrators, and other stakeholders involved in the software development and deployment lifecycle. Sharing containerized applications with standardized dependencies allows for simplified testing, debugging, and deployment, reducing potential conflicts and communication gaps.

Integrating Docker into existing Windows workflows opens up a world of possibilities for organizations seeking to streamline processes, optimize resources, and foster an environment of collaboration and agility. By harnessing the power of containerization, businesses can revolutionize their workflows without sacrificing the familiarity and robustness of their Windows-based systems.

Security considerations when utilizing Docker within a Windows environment

Securing Docker containers on Windows requires a comprehensive approach that addresses potential vulnerabilities and protects sensitive data. The following considerations help organizations safeguard their systems against cyber threats.

- Utilize robust authentication mechanisms: Employing strong authentication techniques such as multi-factor authentication and access control lists helps mitigate unauthorized access to Docker containers and the underlying Windows environment.

- Implement container isolation: Running Windows containers with Hyper-V isolation places each container inside a lightweight virtual machine with its own kernel, which strengthens the boundary between containers and the host and limits the impact of potential security breaches.

- Regularly update and patch Docker images: Keeping Docker images up to date with the latest security patches and software updates helps protect against known vulnerabilities and ensures the inclusion of robust security features.

- Monitor container runtime behavior: Implementing tools and frameworks that monitor the runtime behavior of Docker containers allows for the detection of any suspicious activities or unauthorized access attempts.

- Implement network segmentation: Employing network segmentation techniques and isolating Docker containers within separate network segments helps limit the potential spread of threats and enhances overall network security.

- Employ container image scanning: Utilizing container image scanning tools can help identify any potential security risks or vulnerabilities present within Docker images before deploying them into a production environment.

- Regularly conduct security assessments and audits: Performing routine security assessments and audits helps identify any existing vulnerabilities, assess the effectiveness of implemented security measures, and proactively address emerging threats.
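Two of the items above, container isolation and network segmentation, can be checked mechanically before a container is launched. The sketch below inspects a planned `docker run` argument list for `--isolation hyperv` and for a non-default `--network`. The policy itself and the helper names are assumptions made for illustration, not an official Docker feature, and the scan is deliberately simple.

```python
from typing import List, Optional, Sequence

DEFAULT_NETWORKS = {"nat", "bridge", "default"}


def option_value(argv: Sequence[str], flag: str) -> Optional[str]:
    """Return the value of a flag written as '--flag value' or '--flag=value'."""
    for index, arg in enumerate(argv):
        if arg == flag and index + 1 < len(argv):
            return argv[index + 1]
        if arg.startswith(flag + "="):
            return arg.split("=", 1)[1]
    return None


def policy_violations(argv: Sequence[str]) -> List[str]:
    """List the ways a planned docker run command breaks the example policy."""
    problems = []
    if option_value(argv, "--isolation") != "hyperv":
        problems.append("container is not using Hyper-V isolation")
    network = option_value(argv, "--network")
    if network is None or network in DEFAULT_NETWORKS:
        problems.append("container is on a default network")
    return problems


# Illustrative commands; "appnet" and "img" are placeholder names.
print(policy_violations(["docker", "run", "--isolation=hyperv", "--network", "appnet", "img"]))
print(policy_violations(["docker", "run", "img"]))
```

A check of this kind fits naturally into a deployment script or review step, so that commands violating the policy are flagged before they run.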

By adhering to these security considerations and implementing robust security measures, organizations can ensure a secure and resilient Docker environment within the Windows operating system. Vigilance in addressing potential vulnerabilities and proactive security practices are key to protecting sensitive data and maintaining the integrity of Docker deployments.

Exploring the Evolving Landscape of Containerization in Windows Workflows

The rapid evolution of containerization technology has brought forth exciting possibilities for Windows workflows, allowing for greater efficiency, scalability, and portability in software development and deployment. As organizations continue to leverage containerization solutions for various tasks, it is imperative to stay abreast of the future trends and developments shaping the utilization of containers in Windows environments.

1. Adoption of Immutable Infrastructure: One noteworthy trend is the growing adoption of immutable infrastructure, which embraces the concept of treating infrastructure as a disposable asset. Instead of making ad hoc changes to individual servers, the focus shifts towards deploying containers with pre-configured setups, allowing for easier management, rapid scalability, and improved resilience.

2. Embracing Microservices Architecture: Microservices architecture is gaining prominence as organizations strive for modular, scalable, and loosely coupled systems. By breaking down monolithic applications into smaller, more manageable components, containers facilitate the deployment and orchestration of microservices, enabling enhanced flexibility and agility.

3. Enhanced Security Paradigms: As containerization matures in Windows workflows, security concerns become paramount. Future developments aim to reinforce container security through enhanced isolation mechanisms, fine-grained access controls, and improved vulnerability management. This ensures that containers remain secure and reliable, even when handling sensitive applications or data.

4. Integration of Machine Learning and Artificial Intelligence: The integration of machine learning and artificial intelligence techniques within containerization frameworks opens up new possibilities in optimizing resource allocation, automating orchestration, and streamlining workflows. The ability to intelligently manage containers based on historical and real-time data analysis will further enhance performance, cost efficiency, and overall productivity.

5. Development of Cross-Platform Solutions: As containerization technologies continue to evolve, there is an increasing emphasis on cross-platform compatibility. Future developments may enable seamless deployment of containers across different operating systems, including Windows, Linux, and macOS, providing developers with greater flexibility in their choice of infrastructure.

By understanding and embracing these emerging trends and developments, organizations can optimize their utilization of containerization in Windows tasks, unlocking newfound efficiency, scalability, and resilience in their workflows.

FAQ

What is Docker and how does it work in Windows?

Docker is an open-source platform that allows developers to automate the deployment and scaling of applications using containers. In Windows, Docker works by leveraging the native Windows containerization technology to create lightweight, isolated environments for running applications.

Can I use Docker on Windows to run Linux-based applications?

Yes. Docker Desktop for Windows can run Linux containers inside a lightweight Linux virtual machine, using either the WSL 2 backend or Hyper-V. This allows you to take advantage of the benefits of Docker even if you're developing or running applications that are designed for Linux.

What are the advantages of using Docker in Windows?

There are several advantages of using Docker in Windows. First, Docker provides a consistent development and production environment, ensuring that your applications behave the same way across different machines. Second, it allows for easy application scaling and deployment, making it ideal for modern cloud-native architectures. Lastly, Docker simplifies the management and packaging of applications, making it easier to distribute and collaborate on software projects.