When different projects depend on different CUDA versions, being able to keep several versions side by side is a real advantage. On Windows 11, Docker offers a practical way to do this: each CUDA version lives in its own container image, so developers and researchers can switch between versions without reinstalling toolkits on the host. The result is a simpler workflow and the flexibility to move between CUDA releases as projects require.

Streamlined Development Environments

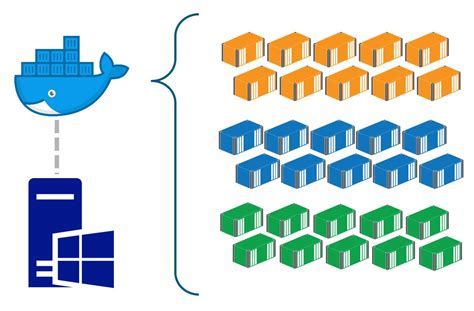

Within the ever-evolving landscape of GPU-accelerated computing, compatibility issues often arise when transitioning between different versions of CUDA. However, by incorporating Docker into the Windows 11 ecosystem, developers can establish streamlined development environments that are isolated, reproducible, and readily accessible. With Docker's containerization capabilities, each CUDA version can be encapsulated within its own self-contained environment, eliminating concerns about version conflicts and facilitating efficient code compilation, testing, and deployment.

Unleashing Collaboration and Innovation

Collaboration is the cornerstone of progress, and Docker for Windows 11 further amplifies the collaborative potential in relation to CUDA development. With the ability to store multiple CUDA versions in Docker containers, teams can effortlessly share their work and collaborate on projects without the hurdles presented by conflicting software environments. This newfound agility fosters interdisciplinary collaboration and empowers researchers, developers, and data scientists to collectively explore the boundaries of GPU computing, driving innovation to new horizons.

Diverse Storage Options in Docker for Windows 11: A Comprehensive Overview

When it comes to managing storage options in Docker for Windows 11, there are numerous alternatives available that cater to various requirements and scenarios. In this guide, we will explore the diverse range of storage solutions that can be leveraged within the Docker environment to enhance performance, scalability, and flexibility.

| Storage Solution | Description |
|---|---|
| Alternate Versions | Discover how to effectively handle multiple iterations or releases of software libraries, such as CUDA, within Docker for Windows 11. |
| Parallel Storage | Explore the implementation of parallel storage systems that can leverage the full potential of multi-core processors. |
| Virtualized File Systems | Learn how to set up and optimize virtualized file systems to efficiently store and retrieve data within containers. |
| Distributed Storage | Discover strategies for managing distributed storage solutions in Docker for Windows 11, enabling seamless sharing and synchronization of data across multiple containers. |
| External Volume Mounting | Explore the concept of external volume mounting and understand how it can be utilized to persist data between container instances and the host system. |
| Efficient Data Backups | Discover best practices for backing up critical data within Docker for Windows 11, ensuring data integrity and recoverability. |

By delving into these various storage options, Docker for Windows 11 users can tailor their containerized environments to suit their specific needs, optimizing resource utilization and increasing overall efficiency.
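As an illustration of external volume mounting, the following is a minimal sketch that assembles a `docker run` command with a bind mount, so files written by the container remain on the host after the container exits. The helper name `bind_mount_run_command`, the host path, and the image tag are hypothetical choices for this example, not values taken from this guide.

```python
def bind_mount_run_command(image, host_dir, container_dir, command):
    """Build a `docker run` argument list that bind-mounts host_dir.

    The --mount syntax is used instead of -v so that a Windows drive
    letter (which contains a colon) cannot be confused with the
    host:container separator.
    """
    mount = f"type=bind,source={host_dir},target={container_dir}"
    return ["docker", "run", "--rm", "--mount", mount, image, *command]


if __name__ == "__main__":
    # Hypothetical host folder and image tag, used only for illustration.
    cmd = bind_mount_run_command(
        image="nvidia/cuda:12.2.0-base-ubuntu22.04",
        host_dir=r"C:\Users\me\cuda-data",
        container_dir="/workspace/data",
        command=["ls", "/workspace/data"],
    )
    print(" ".join(cmd))
```

The function only builds the argument list. Passing it to `subprocess.run` would execute it on a machine where Docker is installed.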

What is CUDA and why is it crucial for the latest version of Windows?

Modern computing demands powerful tools that can efficiently handle complex tasks and accelerate processing. CUDA, a parallel computing platform and programming model developed by NVIDIA, is a key technology that achieves this goal. It revolutionizes how graphics processing units (GPUs) are utilized, enabling high-performance parallel computing on a wide range of applications.

In the context of Windows 11, CUDA plays a vital role in GPU-accelerated work. By harnessing the parallel processing capabilities of GPUs, CUDA significantly boosts the performance of applications ranging from scientific simulations and data analysis to machine learning and artificial intelligence. CUDA support on Windows 11 comes from the NVIDIA driver and the CUDA Toolkit rather than from the operating system itself, so a compatible NVIDIA GPU and an up-to-date driver are required.

With CUDA available on Windows 11, users can put their compatible NVIDIA graphics cards to work on parallel workloads. This results in faster simulations, quicker data processing, and improved graphics rendering. Whether it's accelerating complex calculations, training deep learning models, or rendering lifelike 3D visuals, CUDA plays a critical role in making full use of the available hardware.

- Harnesses the immense processing power of GPUs for parallel computing

- Enhances performance and efficiency in various domains

- Empowers faster simulations, data processing, and graphics rendering

- Optimizes resource utilization for complex calculations and AI models

In conclusion, CUDA is a fundamental technology for using the parallel processing capabilities of GPUs on Windows 11. With a compatible NVIDIA graphics card and driver, users can achieve substantial gains in performance and efficiency across various computing domains.

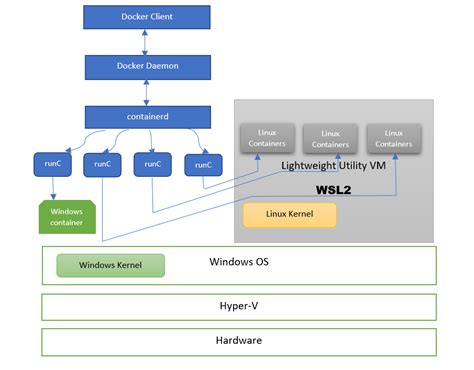

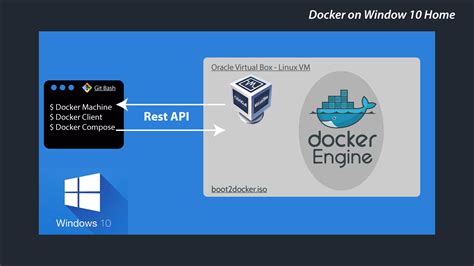

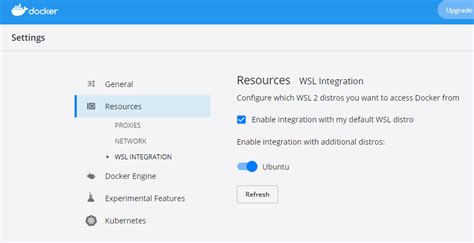

Setting Up Docker on the Latest Windows OS

Learn how to conveniently install and configure Docker on your Windows 11 operating system, enabling seamless management of multiple versions of CUDA, a parallel computing platform.

This section provides a step-by-step guide to installing Docker, a powerful containerization platform, on your newly upgraded or freshly installed Windows 11 environment. By following these instructions, you will gain a solid understanding of the necessary steps to set up Docker and leverage its capabilities for managing different CUDA versions.

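- Ensure your Windows 11 system meets the prerequisites for Docker installation, including hardware virtualization and, for GPU access from containers, the WSL 2 backend and a current NVIDIA driver.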

- Download the latest version of Docker compatible with your Windows 11 OS.

- Run the Docker installer and follow the on-screen instructions for a smooth installation process.

- Configure Docker settings based on your specific requirements.

- Test the Docker installation to ensure it is functioning correctly.

- Explore Docker's command-line interface (CLI) and familiarize yourself with its basic commands.

- Discover how to pull and run Docker images containing different versions of CUDA for your parallel computing needs.

- Optimize Docker performance by adjusting resource allocations and container configurations.

- Maintain and manage your Docker environment easily through Docker Compose and other helpful tools.

- Implement best practices for securing your Docker setup and maintaining the integrity of your CUDA environments.

By the end of this installation guide, you will have a fully functional Docker setup on your Windows 11 system, ready to store and manage multiple versions of CUDA effortlessly. With Docker's containerization capabilities, you can easily switch between different CUDA versions and maximize your parallel computing workflows.
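The "test the installation" step can be sketched as a short list of checks. The code below is a minimal sketch, not an official procedure: the function names are hypothetical, and the image tag `nvidia/cuda:12.2.0-base-ubuntu22.04` is an assumed example that you may need to replace with a tag available to you. The third check assumes GPU access is configured for Docker.

```python
import shutil
import subprocess

# Assumed example tag; substitute whichever CUDA image you actually use.
CUDA_TEST_IMAGE = "nvidia/cuda:12.2.0-base-ubuntu22.04"


def verification_commands(cuda_image=CUDA_TEST_IMAGE):
    """Return the commands used to sanity-check a Docker installation."""
    return [
        # 1. Is the CLI installed and on PATH?
        ["docker", "--version"],
        # 2. Can the daemon pull and run a trivial container?
        ["docker", "run", "--rm", "hello-world"],
        # 3. Can a CUDA container see the GPU through the host driver?
        ["docker", "run", "--rm", "--gpus", "all", cuda_image, "nvidia-smi"],
    ]


def run_checks(commands):
    """Run each command and return (command, succeeded) pairs."""
    if shutil.which("docker") is None:
        raise RuntimeError("docker executable not found on PATH")
    results = []
    for cmd in commands:
        completed = subprocess.run(cmd, capture_output=True, text=True)
        results.append((cmd, completed.returncode == 0))
    return results


if __name__ == "__main__":
    # Printing only; call run_checks(verification_commands()) to execute.
    for cmd in verification_commands():
        print(" ".join(cmd))
```

If the third command fails while the first two succeed, the Docker installation itself is probably fine and the problem more likely lies in GPU access (driver or backend configuration).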

Understanding the concept of containerization

In the world of modern software development, the concept of containerization has emerged as a powerful tool for managing and deploying applications. It offers a lightweight and efficient way to package software, along with all its dependencies, into a single, self-contained unit. This allows the application to be easily moved between different computing environments without being affected by the underlying system configurations and settings.

Containerization achieves this by abstracting the application and its dependencies from the host operating system. It creates an isolated environment, known as a container, that encapsulates everything the application needs to run successfully. This includes the runtime, libraries, system tools, and the user-space files of an operating system distribution; the kernel itself is shared with the host.

By employing containerization, developers can ensure their applications run consistently and reliably across various platforms, without the need to worry about conflicting dependencies or configuration issues. It provides a standardized approach to software deployment, making it easier to scale and distribute applications, as well as facilitating collaboration between development teams.

In addition to its portability and flexibility, containerization also offers improved resource utilization, as containers can be scaled up or down as needed. This allows for efficient allocation of computing resources, reducing costs and optimizing performance.

Overall, the concept of containerization revolutionizes the way applications are developed, deployed, and managed. It simplifies the process of software distribution, improves system compatibility, and enhances the overall efficiency of the development lifecycle.

Storing Multiple Iterations of CUDA with Docker on the Latest Windows Operating System

In the ever-evolving landscape of data processing and parallel computing, CUDA has emerged as a powerful platform for GPU-accelerated applications. However, as new versions of CUDA are released, compatibility issues may arise, making it crucial to have the ability to store and manage multiple iterations of CUDA.

With the advent of Windows 11, a new opportunity presents itself to leverage the capabilities of Docker, a popular containerization technology, for efficient management and deployment of multiple CUDA versions. By encapsulating each CUDA version within its own Docker container on the Windows 11 platform, users can easily switch between different CUDA environments without conflict.

Utilizing Docker containers allows for isolation and separation of different CUDA versions, ensuring that applications developed on one version can be easily maintained and supported even when new versions are introduced. This not only streamlines the development process but also provides a reliable and efficient solution for managing and testing CUDA-based workflows.

- Enhanced Compatibility: By using Docker containers, users can avoid conflicts caused by the coexistence of different CUDA versions on the same system, ensuring maximum compatibility and smooth execution of GPU-accelerated applications.

- Efficient Version Control: Docker's containerization technology facilitates the easy installation and management of multiple CUDA versions, enabling effortless switching between different environments for seamless development and testing.

- Reproducibility and Portability: With Docker, CUDA-based applications can be packaged and deployed across different systems without worrying about compatibility constraints, ensuring consistent behavior and reproducible results.

- Isolation and Security: Because each CUDA version is encapsulated in its own Docker container, problems in one environment are less likely to affect the host system or other containers. Containers share the host kernel, however, so they should not be treated as a complete security boundary.

With the combination of Docker and the latest Windows 11 operating system, users can take full advantage of the vast opportunities offered by CUDA, ensuring efficient utilization of GPU resources while effectively managing and storing multiple versions of this powerful computing platform.
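One way to picture "one container per CUDA version" is a small lookup table from version to image. The sketch below is illustrative only: the `CUDA_IMAGES` table and both function names are hypothetical, and the two `nvidia/cuda` tags are assumed examples. The `devel` image flavor is chosen because it ships the `nvcc` compiler.

```python
# Hypothetical version-to-image table; the tags are assumed examples.
CUDA_IMAGES = {
    "11.8": "nvidia/cuda:11.8.0-devel-ubuntu22.04",
    "12.2": "nvidia/cuda:12.2.0-devel-ubuntu22.04",
}


def image_for(cuda_version):
    """Look up the image registered for a CUDA version such as "11.8"."""
    try:
        return CUDA_IMAGES[cuda_version]
    except KeyError:
        known = ", ".join(sorted(CUDA_IMAGES))
        raise ValueError(
            f"no image registered for CUDA {cuda_version}; known: {known}"
        ) from None


def nvcc_version_command(cuda_version):
    """Build a one-off command that prints the compiler version in that image.

    No --gpus flag is passed because `nvcc --version` does not need a GPU.
    """
    return ["docker", "run", "--rm", image_for(cuda_version), "nvcc", "--version"]


if __name__ == "__main__":
    for version in sorted(CUDA_IMAGES):
        print(" ".join(nvcc_version_command(version)))
```

Switching versions then amounts to changing the key passed to `nvcc_version_command`. Nothing on the host has to be reinstalled.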

Setting up and managing containers for application deployment

When it comes to deploying applications, having a reliable and efficient system is crucial. Docker provides a versatile solution for setting up and managing containers, which can greatly simplify the deployment process.

Containers offer a lightweight and isolated environment for running applications, allowing them to be portable across different operating systems and hardware configurations. This means that you can easily package all the dependencies and required libraries for your application into a single container, ensuring consistency and reducing the chances of compatibility issues.

Setting up Docker containers involves creating a Dockerfile, which serves as a blueprint for building the container image. This file specifies the base image, sets up the necessary configurations and dependencies, and defines the commands that will be executed when the container is created. By utilizing the appropriate instructions and parameters in the Dockerfile, you can efficiently build and customize your container image.
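A Dockerfile for this purpose can take the CUDA base image as a build argument, so one file serves several CUDA versions. The following is a minimal sketch under stated assumptions: the Dockerfile content, the application name `myapp`, and the default tag are all hypothetical, and the Python helper only assembles the `docker build` command.

```python
# Hypothetical Dockerfile content, kept as a string for illustration.
DOCKERFILE = """\
# Build argument selects the CUDA base image tag (default is an assumed example).
ARG CUDA_TAG=12.2.0-devel-ubuntu22.04
FROM nvidia/cuda:${CUDA_TAG}
WORKDIR /workspace
COPY . /workspace
CMD ["nvcc", "--version"]
"""


def build_command(cuda_tag, app_name="myapp", context_dir="."):
    """Build a `docker build` command that tags the image by CUDA version."""
    # "11.8.0-devel-ubuntu22.04" -> "11.8.0"
    version = cuda_tag.split("-", 1)[0]
    return [
        "docker", "build",
        "--build-arg", f"CUDA_TAG={cuda_tag}",
        "-t", f"{app_name}:cuda-{version}",
        context_dir,
    ]


if __name__ == "__main__":
    print(DOCKERFILE)
    print(" ".join(build_command("11.8.0-devel-ubuntu22.04")))
```

Building once per tag yields images such as `myapp:cuda-11.8.0` and `myapp:cuda-12.2.0`, which can sit side by side in the local image store.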

Once the container image is built, it can be deployed on any Docker-compatible environment, including local machines, cloud platforms, or even on a cluster of servers. Docker provides a robust set of commands and tools for managing containers, such as creating, starting, stopping, and removing containers. These commands can be easily executed using the Docker CLI or accessed through Docker's user-friendly graphical interface.
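The create, start, stop, and remove operations mentioned above map onto four Docker CLI subcommands. This sketch collects them for a single named container; the function name, the container name, and the image tag are hypothetical, and `sleep infinity` is used only to keep the example container alive.

```python
def lifecycle_commands(name, image):
    """Return the CLI commands for each stage of a container's life."""
    return {
        # Create the container without starting it.
        "create": ["docker", "create", "--name", name, "--gpus", "all",
                   image, "sleep", "infinity"],
        # Start the previously created container.
        "start": ["docker", "start", name],
        # Stop it gracefully.
        "stop": ["docker", "stop", name],
        # Delete the stopped container (the image is kept).
        "remove": ["docker", "rm", name],
    }


if __name__ == "__main__":
    commands = lifecycle_commands("cuda-dev", "nvidia/cuda:12.2.0-devel-ubuntu22.04")
    for stage, cmd in commands.items():
        print(f"{stage:>6}: {' '.join(cmd)}")
```

Removing a container does not remove its image, so a CUDA version stays available locally until the image itself is deleted.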

In addition to managing individual containers, Docker also offers powerful features for orchestrating and scaling applications. Docker Swarm and Kubernetes are popular container orchestration platforms that can help automate the deployment, scaling, and management of containerized applications across multiple hosts.

- Efficiently package and deploy applications

- Isolate applications and their dependencies

- Create custom container images with Dockerfile

- Manage containers using Docker CLI or graphical interface

- Orchestrate and scale applications with Docker Swarm or Kubernetes

By leveraging Docker's capabilities for setting up and managing containers, developers and system administrators can streamline the application deployment process and ensure consistent performance and reliability across different environments.

Best Practices for Utilizing Docker and CUDA on the Latest Windows Environment

Efficiently leveraging the combination of Docker and CUDA on the advanced Windows 11 platform requires adherence to a set of best practices. These practices ensure smooth operation and maximum productivity, allowing for optimal utilization of resources without compromising performance. Here, we present essential guidelines for effectively harnessing the power of Docker and CUDA on Windows 11.

- Optimize Resource Allocation: Prioritize resource allocation by striking the right balance between Docker containers and CUDA versions. Find the optimum distribution of resources that allows for smooth and efficient execution of CUDA-enabled applications, guaranteeing high-performance computing even in complex scenarios.

- Enable Isolation with Containers: Leverage the containerization capabilities of Docker to isolate different versions of CUDA and prevent conflicts between them. By encapsulating each CUDA version in its own container, you can ensure that applications relying on a specific CUDA version run smoothly without interference from other versions, promoting stability and consistency in your development workflow.

- Ensure Compatibility: Containers use the NVIDIA driver installed on the Windows 11 host, so verify that this driver supports every CUDA version you plan to run. Consult NVIDIA's CUDA compatibility documentation and choose container images whose CUDA version your driver supports, so that each environment can use the GPU without errors.

- Regularly Update Docker and CUDA: Keep both Docker and CUDA up to date to benefit from the latest enhancements, optimizations, and bug fixes. Regular updates ensure that your development environment remains secure, stable, and capable of leveraging the newest features offered by Docker and CUDA, empowering you to stay ahead in your application development.

- Performance Monitoring and Optimization: Monitor and optimize the performance of your Dockerized CUDA applications by leveraging built-in tools or third-party solutions. Utilize monitoring tools to gain insights into resource utilization, bottlenecks, and potential optimization opportunities. By closely monitoring performance metrics, you can fine-tune your setup, improving efficiency and overall application performance.

Adhering to these best practices enables developers to harness the combined power of Docker and CUDA on the cutting-edge Windows 11 environment effectively. By optimizing resource allocation, ensuring compatibility, and leveraging containerization, developers can create an efficient and stable workflow for CUDA-enabled application development, promoting productivity and enabling seamless execution of complex computing tasks.
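The resource allocation and monitoring practices can be sketched with two standard Docker CLI features: the `--cpus` and `--memory` flags of `docker run`, and `docker stats`. The function names and the example values below are hypothetical. On the WSL 2 backend, the overall ceiling for all containers is typically set at the WSL level, so per-container limits divide what WSL already provides.

```python
def limited_run_command(image, cpus, memory, command):
    """Build a `docker run` command with CPU and memory limits.

    cpus   -- number of CPUs as a number, e.g. 4 or 1.5
    memory -- limit with a unit suffix, e.g. "8g"
    """
    return [
        "docker", "run", "--rm", "--gpus", "all",
        "--cpus", str(cpus),
        "--memory", memory,
        image, *command,
    ]


def stats_command(container_names):
    """Build a one-shot `docker stats` command for the given containers."""
    return ["docker", "stats", "--no-stream", *container_names]


if __name__ == "__main__":
    # Assumed example image and limits.
    run = limited_run_command(
        "nvidia/cuda:11.8.0-devel-ubuntu22.04", 4, "8g", ["nvidia-smi"]
    )
    print(" ".join(run))
    print(" ".join(stats_command(["cuda-11-8", "cuda-12-2"])))
```

`docker stats` reports CPU and memory per container. GPU utilization is not part of that output and would have to be read from a tool such as `nvidia-smi`.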

Troubleshooting common issues with utilizing Docker on the latest Windows operating system

In this section, we will explore some common challenges that users may encounter when working with Docker on the latest version of the Windows operating system, specifically in the context of managing multiple versions of CUDA. We will discuss potential solutions and steps to resolve these issues effectively.

1. Compatibility conflicts: One of the common problems that users might face with running Docker containers on Windows 11 is compatibility conflicts. These conflicts can arise when attempting to utilize multiple versions of CUDA simultaneously. It is crucial to understand the underlying factors that may cause these conflicts and how to mitigate them.

2. Resource allocation: Another issue that often arises is inadequate resource allocation, leading to performance degradation or system instability. Docker containers relying on different CUDA versions require the appropriate allocation of system resources to function optimally. We will explore strategies to ensure efficient resource management to avoid potential bottlenecks.

3. Networking and connectivity problems: Networking issues can hinder the smooth functioning of Docker containers, especially when managing multiple CUDA versions. Ensuring proper connectivity and network configuration is essential for efficient communication between containers and host systems. We will discuss troubleshooting techniques to tackle networking-related problems.

4. Dependency management: Managing dependencies within Docker containers can be complex, especially when dealing with multiple versions of CUDA. Issues related to package conflicts, dependencies resolution, and compatibility can arise. We will explore strategies and best practices to effectively manage dependencies to maintain container stability.

5. Performance optimization: Maximizing performance while utilizing different CUDA versions in Docker containers can be challenging. In this section, we will delve into techniques to optimize the performance of Docker containers, including fine-tuning resource allocation, leveraging GPU capabilities, and optimizing application workflows.
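For the compatibility conflicts in item 1, a common cause is a container whose CUDA version is newer than the host driver supports. The sketch below reads the `CUDA Version` field that `nvidia-smi` prints in its header and compares it with the version a container needs. It is a deliberately conservative check under stated assumptions: the function names are hypothetical, and NVIDIA's minor-version compatibility rules may allow some combinations that this simple comparison rejects.

```python
import re


def driver_cuda_version(nvidia_smi_output):
    """Extract the (major, minor) CUDA version reported by nvidia-smi."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", nvidia_smi_output)
    if match is None:
        raise ValueError("no 'CUDA Version' field found in nvidia-smi output")
    return int(match.group(1)), int(match.group(2))


def container_is_supported(container_cuda, nvidia_smi_output):
    """Return True if the container's CUDA version does not exceed the driver's.

    container_cuda -- version string such as "11.8" or "11.8.0"
    """
    parts = container_cuda.split(".")
    wanted = (int(parts[0]), int(parts[1]))
    return wanted <= driver_cuda_version(nvidia_smi_output)


if __name__ == "__main__":
    # Sample header line in the format nvidia-smi prints; values are illustrative.
    sample = "| NVIDIA-SMI 535.104.05   Driver Version: 535.104.05   CUDA Version: 12.2     |"
    for version in ("11.8.0", "12.2", "12.4"):
        print(version, container_is_supported(version, sample))
```

When the check fails, updating the host NVIDIA driver is usually the first thing to try, since the container cannot bring its own driver.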

Conclusion: By understanding and addressing these common issues faced when using Docker on the latest Windows operating system, users can minimize disruptions and enhance their experience in managing multiple CUDA versions effectively. Implementing troubleshooting strategies and best practices will enable smooth and efficient operation of Docker containers with diverse CUDA requirements.

Exploring the Future Advancements in Docker Compatibility on the Latest Windows Platform

In this section, we will delve into the potential future developments and advancements in the seamless integration of Docker with the latest version of the Windows operating system. We will explore how Docker can continue to enhance its compatibility and functionality on the Windows 11 platform, ensuring efficient storage and management of various software versions, including those of CUDA.

One of the key areas of focus for Docker on Windows 11 could be the optimization of resource allocation and utilization. Docker could offer improved performance by using the available system resources, such as CPU, memory, and disk space, more efficiently. Advances in resource allocation might help keep operation smooth even when many software versions, including different CUDA versions, are stored side by side.

Another potential development in Docker for Windows 11 could be the enhancement of containerization technology. Docker may explore ways to make the process of containerization more streamlined and flexible, allowing users to easily create, deploy, and manage containers that encapsulate specific software versions. This would provide users with a convenient way to store and access multiple versions of CUDA within separate containers, eliminating potential compatibility issues.

Furthermore, Docker for Windows 11 might focus on improving security and isolation features. By implementing robust security protocols, such as secure container sandboxes and access controls, Docker can ensure that different software versions, including various CUDA versions, remain isolated and protected from external threats. This would enable users to confidently store and utilize multiple CUDA versions without compromising system integrity or the privacy of their data.

| Potential Future Developments in Docker for Windows 11 |
|---|
| 1. Optimization of resource allocation and utilization |
| 2. Enhancement of containerization technology |
| 3. Improvement of security and isolation features |

In summary, the future developments in Docker for Windows 11 aim to provide users with an enhanced experience of storing and managing multiple versions of software, including CUDA. These advancements may involve optimizing resource allocation, refining containerization technology, and strengthening security measures to ensure efficient and secure storage of different CUDA versions.

FAQ

Can Docker for Windows 11 store multiple versions of CUDA?

Yes, Docker for Windows 11 can store multiple versions of CUDA. Docker allows you to create separate containers for different versions of CUDA, making it easy to switch between them as needed.

Why would I need to store multiple versions of CUDA using Docker for Windows 11?

There are several reasons why you might need to store multiple versions of CUDA. For example, you may have different applications or projects that require specific versions of CUDA. By using Docker, you can easily manage and switch between different CUDA versions without interfering with other projects or applications.

How do I create separate containers for different versions of CUDA in Docker for Windows 11?

To create separate containers for different versions of CUDA in Docker for Windows 11, you can utilize Docker images that contain the specific CUDA version you need. By pulling these images and running them in separate containers, you can effectively store and manage multiple versions of CUDA.

Can I run applications that use different versions of CUDA simultaneously in Docker for Windows 11?

Yes, Docker for Windows 11 allows you to run applications that use different versions of CUDA simultaneously. Since each CUDA version is isolated within its own container, you can run multiple containers with different CUDA versions simultaneously without conflicts.
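As a rough sketch of running several CUDA versions at once, the code below builds one detached `docker run` command per version, each with its own container name. The function name, the naming scheme, and the image tags are hypothetical. All containers share the same physical GPU, so isolation applies to the software stack rather than to GPU capacity.

```python
def detached_run_commands(images_by_version):
    """Build one detached `docker run` command per CUDA version.

    images_by_version -- mapping such as {"11.8": "<image tag>", ...}
    Each container gets a unique name derived from its version.
    """
    commands = []
    for version, image in sorted(images_by_version.items()):
        name = "cuda-" + version.replace(".", "-")
        commands.append([
            "docker", "run", "-d", "--name", name,
            "--gpus", "all", image, "sleep", "infinity",
        ])
    return commands


if __name__ == "__main__":
    # Assumed example tags.
    images = {
        "12.2": "nvidia/cuda:12.2.0-devel-ubuntu22.04",
        "11.8": "nvidia/cuda:11.8.0-devel-ubuntu22.04",
    }
    for cmd in detached_run_commands(images):
        print(" ".join(cmd))
```

Once the containers are running, `docker exec` with the matching container name runs a command inside the desired CUDA environment.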

What are the benefits of using Docker for storing multiple versions of CUDA in Windows 11?

Using Docker for storing multiple versions of CUDA in Windows 11 offers several benefits. Firstly, it simplifies the management of different CUDA versions, allowing for easy switching between versions as needed. Additionally, it enables isolation, ensuring that applications using different CUDA versions do not interfere with each other. Lastly, Docker provides a lightweight and efficient way to store and run multiple CUDA versions without the need for separate virtual machines or installations.
