Perplexing. Bewildering. Unforeseen. These are the words that come to mind when a running program stops without warning. Businesses rely on programs executing reliably, and the stability and continuity of operations are vital. Yet now and then a process terminates suddenly and for no obvious reason, leaving disrupted work and unanswered questions that can puzzle even experienced engineers and developers.

It is against this backdrop that the following story unfolds.

Imagine, if you will, an intricate system of interconnected processes, each carrying out its designated task and working in harmony toward a common goal. Every thread is carefully coordinated and communication flows seamlessly through the system, so everything progresses smoothly. Somewhere in this intricate tapestry, however, a flaw lies dormant, waiting for the right moment to reveal itself.

The Unexpected End of a Running Environment

Imagine a containerized application, isolated in its own environment, that is suddenly and involuntarily halted. The container's main process, expected to run indefinitely, comes to an abrupt and unforeseen end. In this case, the host is a powerful server running Linux, the open-source operating system known for its agility and versatility.

Understanding the causes of unexpected shutdowns

In the ever-evolving world of technology, it is not uncommon for systems to experience sudden and unpredicted terminations. These unexpected shutdowns can lead to significant disruptions, loss of data, and frustrated users. To address this issue and prevent future occurrences, it is crucial to comprehend the underlying causes behind these abrupt terminations.

Vulnerable Environments:

One potential cause of unexpected shutdowns lies in the susceptibility of the system's environment. Factors such as insufficient resources, incompatible software configurations, or outdated hardware can create an unstable foundation, leading to sudden failures.

Software Glitches:

Software glitches or bugs are another significant contributor to abrupt terminations. Errors within the code, memory leaks, or conflicts between different software components can trigger crashes, forcing the system to shut down unexpectedly.

Hardware Failures:

Hardware malfunctions can also play a role in sudden system terminations. Issues such as faulty memory modules, overheating processors, or power supply problems can cause the entire system to abruptly shut down, potentially resulting in data loss or corruption.

Insufficient System Maintenance:

Regular system maintenance is crucial for a stable and reliable environment. Neglecting routine updates or security patches, or failing to monitor system logs, increases the likelihood of unexpected shutdowns. Over time, these neglected tasks leave the system weakened and more prone to sudden failures.

User Actions:

Lastly, user actions can inadvertently cause unexpected shutdowns. Accidentally triggering critical system functions, permission conflicts caused by insufficient user privileges, or inexperienced users attempting complex tasks can all result in sudden terminations.

Understanding the numerous potential causes of unexpected shutdowns is the first step towards implementing effective prevention strategies. By addressing issues related to vulnerable environments, rectifying software glitches, maintaining hardware, prioritizing regular system maintenance, and providing proper user training, it becomes possible to minimize the occurrence of abrupt terminations and create a more seamless and reliable computing experience.

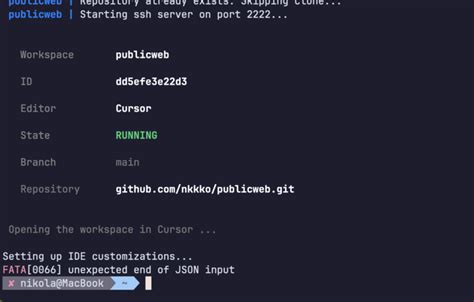

Understanding Common Error Messages and Their Meanings

In the context of troubleshooting issues with software deployment and execution, it is crucial to understand the meaning behind common error messages. These messages serve as valuable clues in identifying and resolving issues that arise during the execution of programs or services.

Once you are familiar with the most frequently encountered error messages, you can diagnose and address many problems quickly. What matters is understanding the underlying issue each message points to and its likely fix, rather than getting lost in unfamiliar terminology.

Without further ado, let us delve into some commonly encountered error messages, exploring their possible meanings and suggesting potential troubleshooting steps.
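The exit code Docker reports for a stopped container is often the most compact error message you will get, so it is a good first one to learn. The sketch below decodes the common cases. The reserved codes 125, 126 and 127 follow the `docker run` documentation, codes above 128 follow the shell convention of 128 plus the signal number, and the `describe_exit_code` helper and its hint texts are our own illustrative assumptions.

```python
import signal

# Exit statuses that `docker run` reserves for its own failures.
DOCKER_RESERVED = {
    125: "Docker itself failed to run the container (e.g. an invalid option)",
    126: "the container command could not be invoked (e.g. not executable)",
    127: "the container command could not be found",
}

# Illustrative hints for signals that commonly end containers.
SIGNAL_HINTS = {
    "SIGKILL": "killed forcibly: often the kernel OOM killer, `docker kill`, "
               "or a `docker stop` whose grace period ran out",
    "SIGTERM": "stopped on request, e.g. by `docker stop` or an orchestrator",
    "SIGSEGV": "segmentation fault inside the application",
}


def describe_exit_code(code: int) -> str:
    """Return a short human-readable explanation of a container exit code."""
    if code == 0:
        return "exited normally"
    if code in DOCKER_RESERVED:
        return DOCKER_RESERVED[code]
    if code > 128:
        # Shell convention: 128 + N means "terminated by signal N".
        signum = code - 128
        try:
            name = signal.Signals(signum).name
        except ValueError:
            return f"killed by unknown signal {signum}"
        hint = SIGNAL_HINTS.get(name, "terminated by a signal")
        return f"killed by {name} ({hint})"
    return f"application error (exit status {code})"


for code in (0, 1, 125, 137, 139, 143):
    print(code, "->", describe_exit_code(code))
```

Exit code 137 (SIGKILL) is the one most often associated with a container that exceeded its memory limit, which is why it is worth checking first.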

Debugging techniques for troubleshooting container failures

When dealing with the unexpected termination of a container, it is crucial to have effective debugging techniques in place to identify and resolve the underlying issues. By employing various strategies, such as monitoring and log analysis, diagnosing container failures can become a more manageable task.

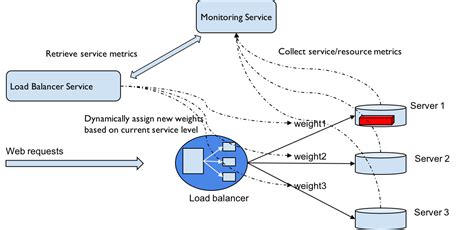

One approach to debugging container failures is through continuous monitoring. This involves setting up monitoring tools that can track the performance and activities of the container. By regularly monitoring key metrics and events, such as CPU usage, memory usage, and network traffic, abnormalities or patterns leading to failures can be detected and analyzed.
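To make "abnormalities or patterns" concrete, here is a minimal sketch that flags steady memory growth, a typical leak signature, in a series of memory samples. It assumes you already collect samples at a fixed interval (for example from `docker stats` or your monitoring system); the function names and the 1 MiB-per-minute threshold are illustrative assumptions, not values from any particular tool.

```python
from statistics import mean


def memory_growth_rate(samples_mib: list[float], interval_s: float) -> float:
    """Least-squares slope of memory usage over time, in MiB per second."""
    n = len(samples_mib)
    if n < 2:
        return 0.0
    xs = [i * interval_s for i in range(n)]
    x_bar, y_bar = mean(xs), mean(samples_mib)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, samples_mib))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


def looks_like_leak(samples_mib: list[float], interval_s: float,
                    threshold_mib_per_min: float = 1.0) -> bool:
    """Flag a series whose memory grows faster than the assumed threshold."""
    return memory_growth_rate(samples_mib, interval_s) * 60 > threshold_mib_per_min


# Hypothetical samples, one per minute.
steady = [200, 202, 199, 201, 200, 198, 201]
leaking = [200, 215, 231, 244, 262, 275, 291]
print(looks_like_leak(steady, interval_s=60))
print(looks_like_leak(leaking, interval_s=60))
```

A fitted slope is less sensitive to individual spikes than comparing the first and last sample, which is why the sketch uses least squares.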

Another useful technique is log analysis. Containers generate logs (by default, whatever the application writes to standard output and standard error) that record their behavior and any errors encountered. Parsing and analyzing these logs can reveal the root causes of failures, and identifying recurring error messages or patterns helps establish a troubleshooting framework.
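A first pass at finding recurring error messages can be as simple as normalizing log lines and counting them. The sketch below assumes plain-text lines such as those printed by `docker logs`, each starting with an ISO-8601 timestamp; the regular expressions, the `recurring_errors` helper, and the sample lines are hypothetical and will need adjusting to your own log format.

```python
import re
from collections import Counter

# Assumed log shape: ISO-8601 timestamp, then a level, then a message.
TIMESTAMP = re.compile(r"^\d{4}-\d{2}-\d{2}[T ][\d:.]+Z?\s*")
NUMBERS = re.compile(r"\d+")


def recurring_errors(lines, top=3):
    """Count ERROR/FATAL lines after masking timestamps and numeric values."""
    counts = Counter()
    for line in lines:
        if "ERROR" not in line and "FATAL" not in line:
            continue
        message = TIMESTAMP.sub("", line.strip())
        # Mask numbers so "connection 17" and "connection 42" group together.
        counts[NUMBERS.sub("<n>", message)] += 1
    return counts.most_common(top)


sample = [
    "2024-05-01T10:00:01Z INFO server started",
    "2024-05-01T10:00:05Z ERROR connection 17 refused by db:5432",
    "2024-05-01T10:00:09Z ERROR connection 42 refused by db:5432",
    "2024-05-01T10:00:12Z FATAL out of memory allocating 1048576 bytes",
]
for message, count in recurring_errors(sample):
    print(count, message)
```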

| Technique | Description |
|---|---|
| Memory profiling | Analyzing memory utilization patterns to identify potential leaks or excessive usage that may lead to container failures. |
| Resource allocation analysis | Evaluating the allocation of resources, such as CPU and network bandwidth, to ensure they are appropriately assigned and not causing performance bottlenecks. |
| Dependency analysis | Investigating the dependencies of the container and verifying that all required services or components are available and functioning correctly. |
| Failure simulation | Creating controlled environments to simulate failures and observe their impact on the container, allowing for better understanding and preemptive measures. |

In addition to these techniques, effective debugging often involves collaboration with other stakeholders, such as developers and system administrators. It is crucial to share findings, observations, and hypotheses, as well as leverage their expertise to jointly investigate and resolve container failures.

Debugging container failures can be a complex and challenging task, but with a systematic and thorough approach, it is possible to identify and address the underlying issues. By utilizing monitoring, log analysis, and other debugging techniques, container failures can be mitigated, ensuring the reliability and stability of the overall system.

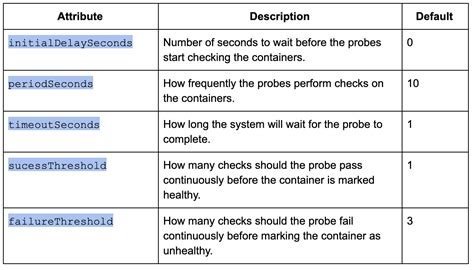

Preventing unexpected termination through container health checks

One of the challenges faced by system administrators and developers working with containerized environments is ensuring the continuous and reliable operation of their applications. In order to address this challenge, it is important to implement effective health checks within the containers, which can help detect and prevent unexpected termination.

Health checks are essential mechanisms that monitor the status of a container and determine whether it is functioning properly. By regularly examining vital aspects of the container's operation, such as its network connectivity, resource utilization, and dependencies, health checks enable administrators to detect and resolve potential issues before they lead to sudden termination.

Several approaches can be employed to implement container health checks. One common method involves creating a dedicated endpoint within the container that can be accessed by an external monitoring system. This endpoint can be designed to respond with specific information about the container's health, such as the status of its components or the availability of required resources. By regularly querying this endpoint, the monitoring system can assess the container's health and take appropriate action if any problems are detected.
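A minimal sketch of such an endpoint, using only Python's standard library. The `/healthz` path, the port, and the `dependencies_ok()` check are assumptions for illustration; in a real service the check would probe whatever the container actually depends on.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


def dependencies_ok() -> bool:
    """Hypothetical check; replace with real probes (database, disk, queues)."""
    return True


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/healthz":
            self.send_error(404)
            return
        healthy = dependencies_ok()
        body = json.dumps({"status": "ok" if healthy else "unhealthy"}).encode()
        # 200 tells the monitor "healthy"; 503 tells it to take action.
        self.send_response(200 if healthy else 503)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args):
        pass  # keep probe traffic out of the application log


def start_health_server(port: int = 8080) -> HTTPServer:
    """Serve the health endpoint on a background daemon thread."""
    server = HTTPServer(("0.0.0.0", port), HealthHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Because the answer is carried by the HTTP status code, a monitor can be as simple as `curl -f http://localhost:8080/healthz`, which exits non-zero on any 4xx or 5xx response.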

In addition to external monitoring, containers can also perform their own internal health checks. This can involve continuously evaluating their own processes, services, and dependencies to ensure that they are functioning as expected. Internal health checks can also include monitoring important metrics, such as memory usage or network latency, and triggering actions if certain thresholds are exceeded.
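Internal checks often boil down to comparing a handful of metrics against thresholds. In this sketch the metric names, the limits, and the `check_thresholds` helper are all hypothetical; the point is the shape of the logic, which returns the violations so the caller can decide whether to log, alert, or exit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float


# Hypothetical limits; tune them to your own workload.
THRESHOLDS = [
    Threshold("memory_mib", 450.0),
    Threshold("latency_ms", 250.0),
    Threshold("open_files", 900.0),
]


def check_thresholds(metrics: dict[str, float], thresholds=THRESHOLDS) -> list[str]:
    """Return one message per metric that is missing or above its limit."""
    problems = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            problems.append(f"{t.metric}: no reading")
        elif value > t.limit:
            problems.append(f"{t.metric}: {value} exceeds {t.limit}")
    return problems


print(check_thresholds({"memory_mib": 480.0, "latency_ms": 120.0}))
```

Returning a list rather than a single boolean keeps the reason for a failure available for logging, and treating a missing reading as a problem avoids silently passing when a metric stops being collected.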

By combining both external and internal health checks, administrators can establish a robust system for preventing sudden termination of containers. This proactive approach to monitoring and maintaining container health can significantly improve the reliability and stability of applications running within containerized environments.

In conclusion, implementing container health checks is crucial for the continuous operation of applications in containerized environments. By regularly monitoring container health from both external and internal perspectives, administrators can identify and address potential issues before they lead to unexpected termination. This proactive approach improves the performance and reliability of containerized applications and gives both administrators and end users a smoother experience.

Ensuring Optimal Performance and Recovery for Unexpectedly Terminated Instances

In the dynamic world of containerized environments, unexpected terminations of instances can be a rather common occurrence. In order to minimize the impact of these abrupt disruptions, it is essential to implement best practices for monitoring and recovery of terminated containers.

One of the key aspects of efficient container management is establishing comprehensive monitoring systems. By continuously tracking the health and performance of containers, you can promptly detect and respond to any signs of instability or potential terminations. Monitoring solutions equipped with robust alerting mechanisms enable timely notifications, ensuring that you can take immediate action to address any issues.

- Implementing container orchestration platforms, such as Kubernetes, allows for a proactive approach towards container management. These platforms offer features like automatic scaling, self-healing, and replication, which enhance the resilience of your containers and provide efficient recovery options in the event of terminations.

- Utilizing container health checks within your applications can significantly contribute to the detection of unhealthy instances. Regular health checks enable the identification of potential issues before they escalate into critical problems. By incorporating these checks into your monitoring system, you can quickly determine if an instance needs to be restarted or replaced.

- Ensuring proper container logging and centralized log management is crucial for effective troubleshooting and recovery. By aggregating logs from terminated instances, you gain valuable insights into the causes of the terminations, allowing you to implement preventive measures to avoid similar issues in the future.

- Implementing automated backups and disaster recovery mechanisms provides an additional layer of protection for your containers. By regularly backing up container configurations and data, you can restore terminated instances quickly and minimize the impact on your applications and services.

- Regularly reviewing and updating your monitoring and recovery strategies is important to address emerging challenges and adapt to changing requirements. By continuously optimizing your practices, you can ensure the reliability and availability of your containerized environments.
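The self-healing behaviour described in the list usually amounts to restarting a failed workload with an increasing delay, so that a crash loop does not overwhelm the host. Docker's restart policies and Kubernetes both apply this kind of backoff. The Python sketch below illustrates the idea in-process; the `supervise` helper, its delays, and its retry limit are assumptions, not the values either tool uses.

```python
import time
from typing import Callable


def supervise(task: Callable[[], None], max_restarts: int = 5,
              base_delay: float = 0.1, max_delay: float = 30.0,
              sleep: Callable[[float], None] = time.sleep) -> int:
    """Run task, restarting it after failures with doubling delays.

    Returns the number of restarts that were needed and re-raises the
    last error once max_restarts is exhausted.
    """
    delay = base_delay
    for attempt in range(max_restarts + 1):
        try:
            task()
            return attempt
        except Exception as exc:
            if attempt == max_restarts:
                raise
            print(f"task failed ({exc!r}); restarting in {delay:.1f}s")
            sleep(delay)
            delay = min(delay * 2, max_delay)  # exponential backoff with a cap
    return max_restarts  # not reached: the loop always returns or raises


# Usage: a hypothetical task that crashes twice, then succeeds.
calls = {"n": 0}


def flaky() -> None:
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("simulated crash")


print(supervise(flaky))
```

Injecting `sleep` as a parameter keeps the helper easy to test without real waiting.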

In conclusion, employing best practices for monitoring and recovery of containers plays a vital role in maintaining the stability and resilience of your containerized environments. Through comprehensive monitoring, proactive management, adequate logging, automated backups, and continuous review, you can minimize the impact of unexpectedly terminated containers and ensure optimal performance for your applications and services.

Enhancing container stability and resilience for optimal performance on Linux infrastructure

When running containerized applications on a Linux-based server environment, ensuring the stability and resilience of these containers is crucial for maintaining overall system performance and uptime. By implementing robust strategies and best practices, organizations can improve the reliability and effectiveness of their containerized deployments.

Emphasizing fault-tolerant design:

Adopting fault-tolerant design principles can help mitigate the impact of unexpected container terminations. By incorporating redundant components, distributing workloads across multiple containers, and implementing automated failover mechanisms, organizations can increase system resilience and minimize potential disruptions.
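At its simplest, failover means trying redundant replicas in order until one answers. The sketch below represents each replica as a callable (the `replica_a` and `replica_b` functions are hypothetical stand-ins for real network calls); it illustrates the principle on the client side and is not a replacement for an orchestrator's own failover.

```python
from typing import Callable, Sequence


class AllReplicasFailed(Exception):
    """Raised when no replica could serve the request."""


def call_with_failover(replicas: Sequence[Callable[[], str]]) -> str:
    """Try each replica in turn and return the first successful result."""
    errors = []
    for replica in replicas:
        try:
            return replica()
        except Exception as exc:  # a crashed or unreachable replica
            errors.append(exc)
    raise AllReplicasFailed(errors)


def replica_a() -> str:
    raise ConnectionError("replica-a container terminated")


def replica_b() -> str:
    return "response from replica-b"


print(call_with_failover([replica_a, replica_b]))
```

Collecting the individual errors in the final exception preserves the evidence needed to diagnose why every replica failed.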

Implementing proactive monitoring and remediation:

Deploying comprehensive monitoring solutions that provide real-time insight into container health and performance is essential. By tracking resource usage with these tools, organizations can identify and address potential issues before they escalate, preventing sudden container terminations and improving overall system stability.

Optimizing resource allocation:

Efficiently allocating system resources, such as CPU, memory, and disk space, is critical for maintaining container stability. By analyzing resource usage patterns and adjusting resource allocations accordingly, organizations can ensure that containers have the necessary resources to operate optimally, reducing the risk of sudden terminations and enhancing system resilience.
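Analyzing usage patterns can start with a simple rule of thumb: size the memory limit from a high percentile of observed usage plus headroom. The 95th percentile, the 30% headroom, the `suggest_memory_limit` helper, and the sample readings below are assumptions, not recommendations from Docker; the result is formatted for the `--memory` option of `docker run`, which accepts a value with a unit suffix such as `m`.

```python
import math
from statistics import quantiles


def suggest_memory_limit(samples_mib: list[float], percentile: int = 95,
                         headroom: float = 0.30) -> int:
    """Suggest a memory limit in MiB: a high percentile of usage plus headroom."""
    if len(samples_mib) < 2:
        raise ValueError("need at least two samples")
    # quantiles(n=100) returns the 1st..99th percentile cut points.
    p = quantiles(samples_mib, n=100, method="inclusive")[percentile - 1]
    return math.ceil(p * (1 + headroom))


# Hypothetical peak-memory readings (MiB) collected over a day.
observed = [310, 295, 330, 402, 315, 298, 360, 345, 390, 320]
limit = suggest_memory_limit(observed)
print(f"--memory={limit}m")
```

Using a percentile rather than the maximum keeps one-off spikes from inflating the limit, while the headroom absorbs normal variation; revisit the numbers whenever the workload changes.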

Implementing container orchestration platforms:

Utilizing container orchestration tools such as Kubernetes or Docker Swarm can significantly enhance the stability and resilience of containerized applications. These platforms provide automated container deployment, scaling, and management capabilities, ensuring that containers are distributed and balanced effectively across the infrastructure, minimizing the impact of individual container failures.

Employing continuous integration and deployment practices:

By implementing CI/CD practices, organizations can automate the container build, test, and deployment processes. This enables faster identification and resolution of any issues that may cause abrupt terminations. Regularly updating containers with bug fixes, security patches, and performance optimizations ensures a more stable and reliable containerized environment.

Conducting regular vulnerability assessments:

Regularly assessing container images and underlying infrastructure for vulnerabilities is crucial for maintaining container stability and resilience. By implementing vulnerability scanning and patch management processes, organizations can identify and mitigate potential security risks, minimizing the chances of container failures due to exploitation of vulnerabilities.

Cultivating a culture of proactive system maintenance:

Encouraging a proactive approach to system maintenance can significantly improve container stability. Regularly reviewing container configurations, updating dependencies, and resolving identified issues in a timely manner ensures that containers remain in a healthy state, reducing the likelihood of sudden terminations and enhancing overall system resilience.

In conclusion, taking a comprehensive and proactive approach to container stability and resilience on Linux servers can significantly enhance the performance and reliability of containerized applications. By implementing fault-tolerant designs, proactive monitoring, resource optimization, and utilizing container orchestration platforms, organizations can minimize the impact of sudden terminations and ensure optimal system operations.

FAQ

Why does a Docker container abruptly terminate on my Linux server?

There are several common reasons. A container stops as soon as its main process exits, so anything that ends that process ends the container. The process may exceed the container's memory limit (or the host may run out of memory), in which case the kernel's OOM killer terminates it and Docker typically reports exit code 137. The application running inside the container may crash or exit with an error. Finally, the container may be stopped by an external process, such as an operator or orchestrator running `docker stop` or `docker kill`, or fail because of a misconfiguration in its settings.

How can I troubleshoot the abrupt termination of Docker containers on my Linux server?

Start with the container's logs: `docker logs <container>` shows what the application printed before it stopped, including any errors. `docker inspect <container>` shows the container's final state, such as its exit code and whether it was killed for running out of memory. Next, monitor system resource usage, such as memory and CPU (for example with `docker stats`), to identify resource-related problems. Finally, make sure the application running inside the container is properly configured and is not itself causing the crashes.
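`docker inspect` exposes the container's last state as a JSON object with fields such as `Running`, `ExitCode`, `OOMKilled`, `Error`, and `FinishedAt`. The sketch below summarizes that object, assuming you saved the output of `docker inspect --format '{{json .State}}' <container>`; the `summarize_state` helper is hypothetical and the sample JSON is abbreviated and made up for illustration.

```python
import json


def summarize_state(state_json: str) -> str:
    """Summarize a container's .State object from `docker inspect`."""
    state = json.loads(state_json)
    if state.get("Running"):
        return f"running (pid {state.get('Pid')})"
    parts = [f"exited with code {state.get('ExitCode')} at {state.get('FinishedAt')}"]
    if state.get("OOMKilled"):
        parts.append("OOM killed: consider a higher --memory limit or look for a leak")
    if state.get("Error"):
        parts.append(f"daemon error: {state['Error']}")
    return "; ".join(parts)


sample = (
    '{"Status": "exited", "Running": false, "OOMKilled": true, "Pid": 0,'
    ' "ExitCode": 137, "Error": "", "FinishedAt": "2024-05-01T10:00:12Z"}'
)
print(summarize_state(sample))
```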

What are some best practices to prevent Docker containers from abruptly terminating on Linux servers?

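Allocate sufficient system resources (memory, CPU, and disk space) and set explicit limits, for example with the `--memory` and `--cpus` options of `docker run`, so that one container cannot starve the others or the host. Monitor resource usage so you can adjust those limits before they are reached. Add a health check (the `HEALTHCHECK` instruction in a Dockerfile, or `--health-cmd` at run time) to detect application failures. Note that on a standalone Docker host a failing health check only marks the container as unhealthy; pair it with a restart policy such as `--restart=on-failure` to recover from crashes, or run the container under an orchestrator that replaces unhealthy containers (Docker Swarm does this based on health checks, while Kubernetes uses its own liveness probes). Regularly updating Docker and its dependencies also helps eliminate bugs and vulnerabilities that could lead to container crashes.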
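These recommendations can be combined in a single command line. The sketch below builds a `docker run` argument list with a memory limit, a CPU limit, a restart policy, and a health check. The flags are standard `docker run` options; the `docker_run_args` helper, the image name, the values, and the `curl`-based probe (which assumes `curl` is installed in the image and that the app serves `/healthz` on port 8080) are placeholders.

```python
import shlex


def docker_run_args(image: str, memory: str = "512m", cpus: float = 1.0,
                    max_retries: int = 5,
                    health_cmd: str = "curl -f http://localhost:8080/healthz || exit 1",
                    ) -> list[str]:
    """Build an argv list for `docker run` with limits, restarts, and a health check."""
    return [
        "docker", "run", "--detach",
        f"--memory={memory}",                   # hard memory limit
        f"--cpus={cpus}",                       # CPU quota
        f"--restart=on-failure:{max_retries}",  # restart on non-zero exit, up to N times
        f"--health-cmd={health_cmd}",           # marks the container unhealthy; does not restart it
        "--health-interval=30s",
        "--health-retries=3",
        image,
    ]


args = docker_run_args("example/app:1.0")
print(shlex.join(args))  # pass `args` to subprocess.run(...) to actually start it
```

Building an argument list instead of a single shell string avoids quoting mistakes around the health-check command.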