As modern software development evolves, new challenges arise in the realm of containerization. Docker has changed the field by enabling developers to encapsulate their applications and run them consistently across different environments. However, applications running in Docker containers often still need the resources that Windows networks provide, such as file shares, databases, and Windows-based services, so connecting containers to those resources reliably is crucial.

Integrating your Windows network resources with your Docker containers lets your applications use data and services that already exist on the network instead of duplicating them inside each container. Breaking down the barriers between your containers and the Windows environment makes collaboration and resource sharing much easier.

Bringing Windows network resources into Docker containers combines the portability and efficiency of containerization with the capabilities of an existing Windows network. With both working together, your applications are no longer limited to what a standalone container holds and can draw on the Windows-based services around them.

Achieving Seamless Integration: Establishing a Connection between Docker and Windows Network Assets

In this section, we will delve into the intricacies of how to seamlessly integrate Docker with various assets in the Windows network environment. By establishing a connection between Docker containers and Windows resources, you can leverage the full potential of both technologies to enhance your development and deployment processes.

With the increasing popularity of Docker containers, it becomes imperative to bridge the gap between containerized applications and the vast array of resources available within the Windows network ecosystem. Whether it be accessing databases, file shares, or other Windows-specific services, understanding the methods to establish a secure and efficient connection can greatly streamline your development workflow.

Throughout this comprehensive guide, we will explore a variety of techniques and best practices for integrating Docker containers with Windows network resources. We will discuss different approaches, such as using Docker volumes to connect to shared drives, establishing connectivity through network aliases, or even leveraging container networking modes to access Windows-based services.

Moreover, we will delve into the security considerations that come into play when connecting Docker containers to Windows network resources. We will cover authentication and authorization mechanisms, as well as strategies to ensure data integrity and confidentiality. By understanding these security practices, you can mitigate potential risks and enhance the overall security posture of your containerized applications.

By the end of this guide, you will have a comprehensive understanding of the various techniques and best practices for establishing a seamless connection between Docker containers and Windows network resources. Armed with this knowledge, you can confidently leverage the power of both technologies to optimize your development workflows and maximize the potential of your Windows network assets.

Understanding the Fundamentals of Docker

In this section, we will explore the essential concepts and principles that form the foundation of Docker technology. Through a comprehensive understanding of these fundamentals, you will gain insight into the core concepts that drive Docker's functionality.

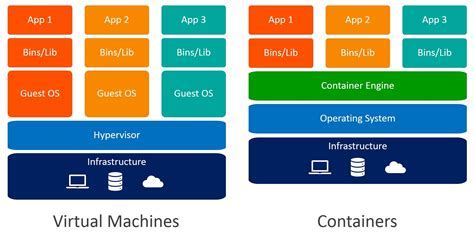

Through Docker, you can unlock the power of containerization, a technology that allows you to encapsulate your applications and their dependencies into a portable and lightweight environment. By utilizing containerization, you can ensure consistent and reliable deployment across various platforms and operating systems.

Containers serve as the building blocks of Docker. They are isolated environments that contain everything required to execute a specific piece of software, including the code, system libraries, and runtime. Containers are lightweight and can run on any compatible host machine, making them incredibly versatile.

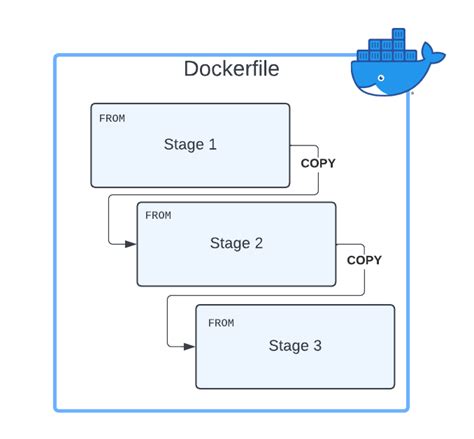

Images are the blueprints for creating containers. They define the files, configuration, and tools required to run a particular application. Images are typically built from a Dockerfile, a text file of build instructions, and can be pushed to a registry so they can be shared and reused.

Containerization allows for efficient resource utilization because containers share the host's operating system kernel instead of each running a full guest operating system. At the same time, each container runs in isolation from other containers and from the host, so applications can coexist without interfering with one another.

Furthermore, Docker utilizes a layered file system approach, allowing for efficient storage and retrieval of files. Each layer represents a specific set of changes to the file system, enabling quick updates and minimal duplication of data during image creation and deployment.
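To make the layering idea concrete, here is a toy Python model, not Docker's actual storage implementation. Each layer is identified by the SHA-256 digest of its contents (Docker also identifies layers by content digests), so a layer shared by two images is stored only once. The `LayerStore` class and the sample layer contents are hypothetical.

```python
import hashlib


class LayerStore:
    """Toy content-addressed store: each layer is keyed by its SHA-256 digest."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}

    def add_layer(self, content: bytes) -> str:
        digest = "sha256:" + hashlib.sha256(content).hexdigest()
        # Identical content yields the same digest, so it is stored only once.
        self.blobs.setdefault(digest, content)
        return digest

    def add_image(self, layers: list[bytes]) -> list[str]:
        # An image is modeled as an ordered list of layer digests.
        return [self.add_layer(layer) for layer in layers]


store = LayerStore()
base = b"base OS files"
web = store.add_image([base, b"web server binaries"])
api = store.add_image([base, b"api runtime"])
print(len(store.blobs))  # 3 unique layers for 4 layer references
print(web[0] == api[0])  # True: both images reference the same base layer
```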

Finally, container orchestration extends Docker beyond a single machine, allowing you to manage and scale containerized applications across multiple hosts. Orchestration tools such as Docker Swarm or Kubernetes provide high availability, load balancing, and fault tolerance.

By delving into these fundamental concepts, you will develop a solid understanding of Docker's core principles and be well-equipped to explore its various use cases and capabilities.

Leveraging Docker containers for optimized utilization of computing resources

In the modern era of technology, efficient management of computing resources is crucial for organizations to maximize productivity and cost-effectiveness. Docker containers have become a widely used way to isolate applications and their dependencies, making them portable and easy to scale. In this section, we will explore how Docker containers can be used to manage resources efficiently and make better use of available computing capacity.

1. Utilizing lightweight containers: One of the key advantages of Docker containers is their lightweight nature, allowing for efficient utilization of computing resources. By leveraging containerization, organizations can run multiple isolated applications on a single host machine, thereby reducing the hardware requirements and costs associated with maintaining separate virtual machines.

2. Dynamic resource allocation: Docker lets you set CPU and memory limits for each container and adjust those limits on a running container. Container orchestration platforms like Kubernetes go further, scaling resources and the number of running containers up or down as demand changes, so that each application receives the computational power it needs without wasting resources.

3. Efficient resource utilization with microservices architecture: Docker containers complement microservices architecture, where applications are built as a collection of small, loosely coupled services. Each microservice can be encapsulated within a separate container, allowing for efficient resource allocation based on the demand of individual services. This results in optimized utilization of computing resources, as only the required containers are deployed and scaled as needed.

4. Resource monitoring and optimization: Docker provides built-in tools, such as the `docker stats` command, for monitoring the CPU, memory, network, and disk I/O usage of running containers. By regularly analyzing these metrics, organizations can identify bottlenecks and adjust how computing resources are allocated. Additionally, containerization makes it easy to move applications between environments, so each deployment can be sized for its specific requirements.

5. Integration with cloud services: Docker containers seamlessly integrate with various cloud services, enabling organizations to leverage the scalability and cost-effectiveness of cloud computing. By deploying containers on cloud platforms such as AWS or Azure, organizations can dynamically allocate resources based on demand, ensuring optimal resource utilization and cost-efficiency.

Other benefits include:

- Rapid application deployment and scaling

- Improved fault tolerance and reliability

- Streamlined development and testing processes
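The resource limits and monitoring described in points 2 and 4 above can be combined in a small script. Limits are set when a container starts (for example `docker run --cpus=1 --memory=512m ...`). The minimal Python sketch below parses the output of `docker stats --no-stream --format "{{.Name}},{{.CPUPerc}},{{.MemPerc}}"` and builds `docker update` commands that change the `--cpus` limit of containers running above a threshold. It only builds command lists and does not call Docker. The threshold, the new CPU limit, and the sample output are illustrative assumptions, and raising a limit only helps if the container was started with a lower one.

```python
def parse_stats(output: str) -> dict[str, tuple[float, float]]:
    """Parse lines like "web,92.50%,30.10%" into {name: (cpu_pct, mem_pct)}.

    Expects the output of:
      docker stats --no-stream --format "{{.Name}},{{.CPUPerc}},{{.MemPerc}}"
    """
    stats: dict[str, tuple[float, float]] = {}
    for line in output.strip().splitlines():
        name, cpu, mem = line.split(",")
        stats[name] = (float(cpu.rstrip("%")), float(mem.rstrip("%")))
    return stats


def plan_cpu_updates(stats: dict[str, tuple[float, float]],
                     cpu_threshold: float, new_cpus: float) -> list[list[str]]:
    """Build `docker update` commands for containers whose CPU usage exceeds the threshold."""
    commands = []
    for name, (cpu, _mem) in sorted(stats.items()):
        if cpu > cpu_threshold:
            # --cpus sets how many CPUs the container may use (for example 1.5).
            commands.append(["docker", "update", f"--cpus={new_cpus}", name])
    return commands


# Illustrative output, not real measurements.
sample = "web,92.50%,30.10%\napi,4.20%,12.00%"
for cmd in plan_cpu_updates(parse_stats(sample), cpu_threshold=80.0, new_cpus=2.0):
    print(" ".join(cmd))  # docker update --cpus=2.0 web
```

An orchestrator such as Kubernetes automates this kind of decision; the sketch only shows the underlying idea on a single host.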

In conclusion, Docker containers provide a powerful platform for efficient resource management, allowing organizations to optimize the utilization of computing resources and streamline their operations. By leveraging the lightweight and flexible nature of containers, along with monitoring tools and cloud integration, organizations can achieve cost-effective scalability and improved application performance.

Understanding the Importance of Connecting a Windows Network Asset

When working with containerized applications, it is essential to plan how they will connect to the other components they depend on. In the context of Windows networking, this means linking containers to resources such as file shares, databases, and Windows-based services so that applications can use them directly.

Connecting a Windows network asset to your containers allows businesses to make full use of infrastructure they already have. Well-planned connections make it easier for teams to collaborate and exchange data, and they remove manual steps such as copying files into images or containers.

By understanding why these connections matter, businesses can make informed decisions about how to set them up and adopt strategies that improve productivity, communication, and overall performance.

Configuring networking settings in Docker containers

In the context of managing network settings within Docker containers, it is important to understand the various configurations and options available. This section will explore the different ways to configure networking settings to enable seamless communication between containers and external resources.

1. Network Bridge Configuration

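- A bridge network is a software bridge on the Docker host that lets containers communicate with each other. Containers on a bridge network reach external resources through network address translation (NAT) on the host, and each container gets its own virtual network interface. Creating separate user-defined bridge networks lets you isolate groups of containers from one another.

- By default, Docker assigns each network's subnet and gateway and each container's IP address automatically. When you create a user-defined network, you can set the subnet and gateway yourself and give individual containers fixed IP addresses, which helps when other containers or configuration files expect predictable addresses.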

- Additionally, configuring DNS settings helps resolve domain names to IP addresses, allowing seamless access to external resources from within the containers.
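The bridge settings above map onto real `docker network create` flags (`--driver`, `--subnet`, `--gateway`) and `docker run` flags (`--network`, `--ip`, `--dns`). The minimal Python sketch below builds those command lines and checks that the addresses are consistent before anything is sent to Docker. The network name `appnet`, the addresses, and the image name are hypothetical. `10.0.0.2` stands in for a DNS server on the Windows network, such as a domain controller, so that Windows server names resolve inside the container.

```python
import ipaddress


def bridge_network_commands(network: str, subnet: str, gateway: str,
                            container_ip: str, dns_server: str,
                            image: str) -> tuple[list[str], list[str]]:
    """Build commands for a user-defined bridge network and a container on it."""
    net = ipaddress.ip_network(subnet)
    for label, addr in (("gateway", gateway), ("container IP", container_ip)):
        if ipaddress.ip_address(addr) not in net:
            raise ValueError(f"{label} {addr} is outside {subnet}")
    if gateway == container_ip:
        raise ValueError("the container IP must differ from the gateway")

    create = ["docker", "network", "create", "--driver", "bridge",
              "--subnet", subnet, "--gateway", gateway, network]
    # --ip only works on user-defined networks created with an explicit --subnet.
    run = ["docker", "run", "-d", "--network", network,
           "--ip", container_ip, "--dns", dns_server, image]
    return create, run


create, run = bridge_network_commands(
    "appnet", "172.28.0.0/16", "172.28.0.1", "172.28.0.10", "10.0.0.2", "example/app")
print(" ".join(create))
print(" ".join(run))
```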

2. Port Mapping

- Port mapping is a crucial aspect of networking in Docker containers. It allows containers to expose specific ports to the host system, enabling external access to services running within the containers.

- By mapping container ports to host ports, containers can receive incoming network traffic on specific ports and forward it to the respective services running within the containers.

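- Port mapping is particularly useful when running multiple containers on a single host. Each container has its own network namespace, so several containers can listen on the same internal port; mapping each one to a different host port avoids conflicts on the host.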
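As an illustration of the point above, the minimal Python sketch below gives several containers that all listen on port 80 internally their own host ports, in the `host_port:container_port` format used by `docker run -p`. The container names and the `in_use` set (standing in for host ports already taken by other services) are hypothetical, and the function does not probe the operating system for free ports.

```python
def assign_host_ports(containers: list[str], container_port: int,
                      first_host_port: int, in_use=frozenset()) -> dict[str, str]:
    """Give each container a distinct host port, skipping ports already in use.

    Returns {container_name: "host_port:container_port"}.
    """
    used = set(in_use)
    mapping: dict[str, str] = {}
    port = first_host_port
    for name in containers:
        while port in used:
            port += 1
        if port > 65535:
            raise ValueError("no free host ports left")
        used.add(port)
        mapping[name] = f"{port}:{container_port}"
    return mapping


ports = assign_host_ports(["web1", "web2", "web3"], 80, 8080, in_use={8081})
for name, spec in ports.items():
    # Every container listens on port 80 internally; only the host ports differ.
    print(" ".join(["docker", "run", "-d", "--name", name, "-p", spec, "nginx"]))
```

Here `web1` gets `8080:80`, `web2` skips the occupied 8081 and gets `8082:80`, and `web3` gets `8083:80`.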

3. Network Driver Selection

- The choice of network driver in Docker containers can significantly impact networking performance and capabilities.

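- Docker offers several network drivers with different features, such as bridge (the default for Linux containers), overlay (for networks that span multiple hosts in a Swarm), and host (which shares the host's network stack and is supported on Linux hosts). On Windows hosts running Windows containers, the corresponding drivers include nat (the default), transparent, l2bridge, and overlay. Selecting the appropriate driver depends on the specific requirements of the containerized application.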

- Understanding the capabilities and limitations of different network drivers ensures optimal network configuration for containers and allows for seamless communication with external resources.
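The driver choice can be summarized as a rough rule of thumb. The Python function below is one possible encoding of that rule, written for this guide. It is not an official Docker algorithm, and the parameter names are hypothetical. The driver names themselves (`bridge`, `host`, `macvlan`, `overlay`, `nat`, `transparent`) are real Docker drivers.

```python
def suggest_driver(windows_containers: bool, multi_host: bool = False,
                   needs_lan_address: bool = False,
                   share_host_network: bool = False) -> str:
    """Rough rule of thumb for picking a Docker network driver."""
    if multi_host:
        # Overlay networks span several Docker hosts; they require Swarm mode.
        return "overlay"
    if windows_containers:
        # nat is the Windows default; transparent attaches containers to the physical network.
        return "transparent" if needs_lan_address else "nat"
    if needs_lan_address:
        # macvlan gives a Linux container its own MAC and IP address on the LAN.
        return "macvlan"
    if share_host_network:
        # host drops network isolation and uses the host's network stack.
        return "host"
    return "bridge"
```

For example, a single-host Linux deployment with no special needs gets `bridge`, while a Windows container that must appear on the LAN with its own address gets `transparent`.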

In conclusion, configuring networking settings within Docker containers involves various considerations, including network bridge configuration, port mapping, and network driver selection. By understanding and correctly implementing these settings, containers can effectively communicate with each other and with external resources, enabling seamless network connectivity.

Step-by-step guide: Accessing a Windows network resource within a Dockerized environment

In this section, we will outline a step-by-step process for accessing a Windows network resource from within a containerized setup. The steps below summarize the tasks involved, from setting up the container to testing the integration.

| Step | Task |
|---|---|
| Step 1 | Setting up the Docker Container |
| Step 2 | Ensuring Network Connectivity |
| Step 3 | Configuring the Security Settings |
| Step 4 | Mapping the Windows Network Resource |
| Step 5 | Verifying the Resource Accessibility |
| Step 6 | Testing the Integration |

Each step covers a task required to access a Windows network resource from within your Docker container. Completing them in order gives the container access to the desired asset and extends what your containerized environment can do.
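For Linux containers, steps 4 and 5 can be sketched with the `local` volume driver's CIFS mount options, which Docker documents for mounting SMB shares as named volumes. The minimal Python sketch below only builds the command lines; on a machine with Docker you could run each one with `subprocess.run(cmd, check=True)`. The server `fileserver`, share `projects`, user name, password, and volume name are hypothetical, and `vers=3.0` assumes the server supports SMB 3. Credentials passed this way are stored in the volume's options, which `docker volume inspect` can display.

```python
def share_access_commands(server: str, share: str, username: str, password: str,
                          volume: str = "winshare", mount_point: str = "/mnt/share",
                          image: str = "alpine") -> list[list[str]]:
    """Build docker commands that mount an SMB share into a Linux container.

    Step 4: create a named volume backed by the share (local driver, type=cifs).
    Step 5: list the share's contents from a throwaway container.
    """
    if "," in username or "," in password:
        # Mount options are comma-separated, so a comma would split the option string.
        raise ValueError("commas are not supported in these mount options")
    options = f"addr={server},username={username},password={password},vers=3.0"
    create_volume = [
        "docker", "volume", "create", "--driver", "local",
        "--opt", "type=cifs",
        "--opt", f"device=//{server}/{share}",
        "--opt", f"o={options}",
        "--name", volume,
    ]
    verify = ["docker", "run", "--rm", "-v", f"{volume}:{mount_point}",
              image, "ls", mount_point]
    return [create_volume, verify]


for cmd in share_access_commands("fileserver", "projects", "svc_docker", "s3cret"):
    print(" ".join(cmd))
```

If the verification command lists the share's files, the volume can be mounted into the application container the same way with `-v winshare:/mnt/share`.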

Troubleshooting common issues during establishment of connectivity

In the process of establishing connectivity between a Windows resource and a container, there can be some common issues that arise. These issues can impede the successful connection and require troubleshooting to ensure a smooth integration. In this section, we will explore some of the typical problems that can occur during resource connection and discuss possible solutions to overcome them.

- Authentication problems: One common issue that can be encountered relates to authentication. This occurs when the container lacks the necessary credentials to access the Windows resource. To resolve this, ensure that the correct authentication credentials, such as username and password, are provided to the container.

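- Firewall restrictions: Another challenge that can hinder connectivity is firewall restrictions. Windows Firewall, or a network firewall between the Docker host and the Windows machine, may block access to certain resources. Check the firewall settings and make sure the ports the resource uses are open (for example, TCP port 445 for SMB file shares) so the container can communicate with the Windows resource.

- Incorrect network configuration: Incorrect network configuration can also lead to connection problems. The container and the Windows resource do not have to be on the same subnet, but the resource must be routable from the container's network. With the default bridge network (or nat, for Windows containers), outbound traffic is translated through the host, so the host itself must be able to reach the resource. Also verify that IP addresses, subnet masks, and DNS settings are correct, so that the Windows server's name resolves from inside the container.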

- Network latency: Network latency can impact the connection between the container and the Windows resource. If the network performance is poor, it can result in delays or timeouts during communication. Consider troubleshooting the network infrastructure to address any latency issues.

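- Incompatible protocols: Compatibility issues can arise if the container and the Windows resource use different protocols or protocol versions. For example, recent versions of Windows no longer install SMB 1.0 by default, so a client inside the container must use SMB 2 or 3 (for CIFS mounts, this is set with the `vers=` mount option). Make sure both sides support a common protocol version to establish a successful connection.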
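Before investigating credentials or protocol versions, it helps to confirm that the container can open a TCP connection to the resource at all. A minimal Python check, assuming Python is available inside the container, might look like the sketch below. Port 445 is the standard SMB port, and any host name you pass in (such as a hypothetical `fileserver`) is your own.

```python
import socket


def can_reach(host: str, port: int = 445, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    A False result points at DNS, routing, or firewall problems rather than at
    authentication, because no credentials are exchanged.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers name-resolution failures, refused connections, and timeouts.
        return False
```

Run it from inside the container (for example through `docker exec`) rather than on the host, because the container's network path can differ from the host's.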

By being aware of these common issues and employing the appropriate troubleshooting techniques, you can overcome connectivity problems when connecting a Windows network resource inside a container. Troubleshooting requires careful analysis and investigation, but with the right approach, you can ensure a seamless integration between your container and the desired resource.

Ensuring Secure Connections: Deploying and Maintaining a Safe Network Environment

In the context of establishing and maintaining secure connections within a network environment, it is crucial to follow best practices that prioritize the security of data and resources. By implementing robust measures and protocols, organizations can safeguard their networks against potential threats and vulnerabilities.

One fundamental aspect of maintaining secure connections is to establish strong authentication mechanisms. Implementing multifactor authentication, such as the combination of passwords and biometric factors, enhances the overall security posture of the network. Additionally, implementing role-based access control ensures that only authorized individuals have access to critical resources.
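Role-based access control boils down to granting permissions to roles and checking a user's roles, not the user, when a resource is accessed. The minimal Python sketch below shows that check with deny-by-default behavior. The role names and permission strings are hypothetical, and real deployments would typically rely on Active Directory groups or a similar directory service instead of a hard-coded table.

```python
# Hypothetical roles and permissions for a shared network resource.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "reader": {"share:read"},
    "editor": {"share:read", "share:write"},
    "admin": {"share:read", "share:write", "share:manage"},
}


def is_allowed(user_roles: list[str], permission: str) -> bool:
    """Grant access only if at least one of the user's roles carries the permission."""
    # Unknown roles get no permissions, so access is denied by default.
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


print(is_allowed(["reader"], "share:write"))  # False
print(is_allowed(["reader", "editor"], "share:write"))  # True
```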

Encrypting data transmission is another vital practice to ensure secure connections. By using industry-standard encryption protocols such as Transport Layer Security (TLS), organizations can protect sensitive data from interception and unauthorized access in transit. TLS replaced the older Secure Sockets Layer (SSL) protocol, all versions of which are deprecated and should be disabled. Regularly updating protocol versions and certificates is necessary to address emerging security vulnerabilities.
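For a containerized client that talks to a TLS-protected service (for example an internal HTTPS API), Python's standard `ssl` module can enforce these rules. The minimal sketch below verifies certificates and host names and refuses anything older than TLS 1.2. The optional `cafile` parameter is an assumption for networks that use an internal certificate authority.

```python
import ssl
from typing import Optional


def make_client_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS context that verifies certificates and refuses SSL and early TLS."""
    # create_default_context enables certificate verification and hostname checking.
    context = ssl.create_default_context(cafile=cafile)
    # Refuse TLS 1.0/1.1 (and every SSL version) even if the server offers them.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


ctx = make_client_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)  # True True
```

To use it, wrap a connected socket with `ctx.wrap_socket(sock, server_hostname=host)` so that the server's certificate is checked against the name you connected to.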

Regular network monitoring and auditing play a significant role in maintaining secure connections. By leveraging advanced network monitoring tools and intrusion detection systems, organizations can detect and mitigate potential threats in real-time. Conducting regular audits allows organizations to identify and remediate any security gaps or vulnerabilities within the network infrastructure.

| Best Practices for Maintaining Secure Connections |
|---|
| Implement strong authentication mechanisms |
| Utilize encryption protocols for data transmission |
| Regularly update encryption protocols and certificates |
| Monitor network traffic and employ intrusion detection systems |
| Conduct regular network audits for identifying vulnerabilities |

Unlocking the full potential: Harnessing the benefits of Windows network resource integration with Docker

Enhancing your Docker experience extends beyond simple connectivity. By seamlessly integrating Windows network resources into your containers, you can unlock a whole new level of efficiency and convenience. In this section, we will explore the various ways to harness the benefits of this integration and optimize your workflow.

- Streamlined Collaboration: Embrace the power of collaboration by effortlessly accessing and sharing Windows network resources within your Docker containers. Break down the barriers between team members and accelerate project delivery by making vital resources readily available to everyone involved.

- Improved Accessibility: Windows network resource integration lets your containers reach critical files, databases, and applications from any location that has access to the network. Give your team the flexibility to work remotely without sacrificing productivity.

- Seamless Integration: By integrating Windows network resources directly into your Docker containers, you can eliminate the need for complex workarounds or manual file transfers, and access and manipulate your resources without leaving the familiar Docker environment.

- Efficient Resource Management: Optimize resource allocation and save valuable storage space with Windows network resource integration. Centralize your files and databases, enhance data security, and avoid duplication by leveraging the existing network infrastructure. Make the most out of your container environments without compromising on performance.

- Enhanced Scalability: Unlock the true scalability potential of Docker with Windows network resource integration. Seamlessly scale your applications by leveraging the resources of your network infrastructure, without the need to replicate or duplicate data. Empower your applications to handle increased workloads and adapt to evolving business needs with ease.

With Windows network resource integration, Docker becomes a gateway to the resources your organization already has. Take advantage of it to streamline collaboration, improve accessibility, optimize resource management, and get more out of your Docker containers.


FAQ

Can I connect a Windows network resource inside a Docker container?

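Yes, it is possible to connect a Windows network resource inside a Docker container. By default, containers reach the network through NAT on the host, so a container can usually access any Windows resource the host can reach, provided firewalls allow the traffic. Alternatively, on Linux hosts, a container started in host network mode uses the host's network stack directly.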

How can I connect a specific Windows network share to a Docker container?

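To connect a specific Windows network share to a Linux container, create a named volume backed by the share and mount it with the Docker volume flag (-v). For example, for a share named "sharename" on "servername": `docker volume create --driver local --opt type=cifs --opt device=//servername/sharename --opt o=addr=servername,username=USER,password=PASS --name sharevol`, then `docker run -v sharevol:/path/to/mount IMAGE`. Passing the UNC path directly, as in `-v //servername/sharename:/path/to/mount`, does not mount the share, because `-v` treats a source that starts with a slash as a path on the host. For Windows containers, Microsoft documents mapping the share on the host with the PowerShell cmdlet `New-SmbGlobalMapping` and then bind-mounting the mapped drive into the container.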

Is it possible to connect multiple Windows network resources to a single Docker container?

Yes, you can connect multiple Windows network resources to a single Docker container. Create one named volume per share, then pass the Docker volume flag (-v) once for each volume, giving each one a different mount path inside the container.
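A minimal Python sketch of that pattern turns a mapping of volume names to container paths into repeated `-v` flags and rejects two volumes that would land on the same path. It assumes each share has already been turned into a named volume with `docker volume create`. The volume names `projects-share` and `reports-share` are hypothetical, and the absolute-path check applies to Linux container paths.

```python
def volume_flags(mounts: dict[str, str]) -> list[str]:
    """Turn {volume_name: container_path} into repeated `-v volume:path` flags."""
    flags: list[str] = []
    seen_paths: set[str] = set()
    for volume, path in mounts.items():
        if not path.startswith("/"):
            raise ValueError(f"container path must be absolute: {path}")
        if path in seen_paths:
            raise ValueError(f"two volumes cannot share the mount path {path}")
        seen_paths.add(path)
        flags += ["-v", f"{volume}:{path}"]
    return flags


cmd = ["docker", "run", "--rm",
       *volume_flags({"projects-share": "/mnt/projects", "reports-share": "/mnt/reports"}),
       "alpine", "ls", "/mnt"]
print(" ".join(cmd))
```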

Can I access a Windows network resource inside a Docker container from another container?

Yes, more than one container can use the same Windows network resource. Each container can connect to the resource over the network directly, and a named volume backed by a share can be mounted into several containers at once. Containers attached to the same Docker network can also communicate with each other, so one container can expose data from the share to others as a service.

What are the advantages of connecting Windows network resources inside a Docker container?

Connecting Windows network resources to Docker containers offers several advantages. It allows for more flexible deployment and management of applications that rely on these resources. Access can be limited to the containers that need it, because each container only reaches the shares and services it has been given mounts and credentials for. Additionally, it simplifies development and testing by providing consistent access to the same network resources across different environments.
