In a digital era driven by exponential data growth, organizations are constantly searching for innovative solutions to increase the efficiency and reliability of their data processing systems. Leveraging cutting-edge technology, a new paradigm has emerged that promises to revolutionize the way data is distributed and processed. This article delves into the fascinating world of distributed data processing, exploring the powerful capabilities of a state-of-the-art framework whilst harnessing the agility of containerization technology.

Imagine a future where data is seamlessly partitioned across interconnected nodes, creating a resilient network capable of handling massive volumes of information. This novel approach enables computations to be performed in parallel, accelerating the processing time and ensuring fault tolerance. By decoupling data and computation, organizations gain the ability to easily scale their systems to meet growing demands, providing a flexible and adaptable infrastructure for the ever-evolving digital landscape.

This approach to distributed data processing is built on the actor model, implemented on the JVM by the Akka toolkit. Akka uses a lightweight, message-driven model designed for elasticity and resilience. Actors are the building blocks of the distributed system: each one encapsulates its own state and behavior and communicates with other actors only through asynchronous messages, whether they run in the same process or on another node. Because an actor processes one message at a time and shares no mutable state with other actors, work can be spread across many actors and nodes without explicit locking.

Harnessing the immense potential of Akka is only part of the story. In order to fully exploit its benefits, a modern and efficient deployment strategy is required. Enter Docker, a groundbreaking containerization platform that provides a streamlined environment for a wide range of applications. By encapsulating the Akka framework within lightweight and isolated containers, Docker empowers developers to build, ship, and run distributed data processing applications with ease. The use of Docker combined with the lightweight and secure Alpine Linux distribution further enhances the efficiency and scalability of the overall system, making it an optimal choice for organizations seeking rapid deployment and reliable execution.

This article uncovers the intricacies of deploying Akka 2.5 on Docker Alpine Linux, offering insights and best practices for building resilient and scalable distributed data processing systems. Through the power of containerization and the seamless integration of Akka, organizations can unlock a new level of agility, enabling them to navigate the complexities of data processing in the digital age with confidence and precision.

Understanding the Concept of Distributed Data Akka

In the realm of distributed systems and software development, one encounters the Distributed Data module of Akka, referred to in this article as Distributed Data Akka. This module addresses the challenges of managing and sharing data across multiple nodes in a networked environment. By building on the capabilities of Akka Cluster, it lets developers create fault-tolerant and highly scalable applications.

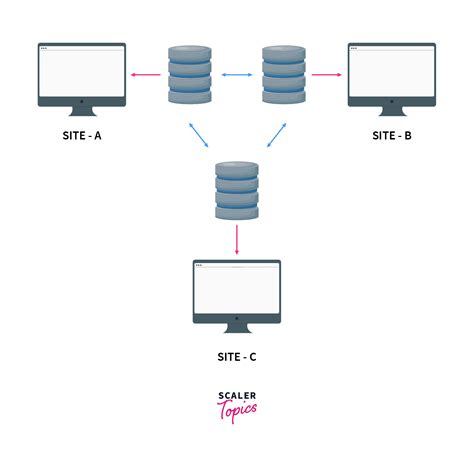

One of the core principles behind Distributed Data Akka lies in its ability to enable decentralized architectures. By allowing data to be spread across nodes in a network, it promotes a distributed approach to handling information. This approach not only enhances the system's fault tolerance but also improves its ability to handle high volumes of data.

Distributed Data Akka replicates data across the nodes of the cluster and lets the developer choose a consistency level for each read and write: an operation can complete against the local replica only, against a specific number of nodes, against a majority, or against all nodes. These levels trade latency and availability against how up to date a read is guaranteed to be, so developers can pick the most appropriate one based on their specific requirements and constraints. This flexibility supports robust and resilient applications that handle failures and adapt to changing circumstances.
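
To make the trade-off between consistency levels concrete, here is a minimal sketch in plain Python. It is not the Akka API: the function names `majority` and `quorums_overlap` are hypothetical, and the only assumption is the usual definition of a majority as more than half of the nodes. It shows why reading and writing with a majority means a read always reaches at least one node that saw the latest write.

```python
def majority(cluster_size: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    if cluster_size < 1:
        raise ValueError("cluster_size must be at least 1")
    return cluster_size // 2 + 1


def quorums_overlap(cluster_size: int, write_nodes: int, read_nodes: int) -> bool:
    """True if every read set shares at least one node with every write set."""
    return write_nodes + read_nodes > cluster_size


if __name__ == "__main__":
    for size in (3, 4, 5):
        needed = majority(size)
        # Majority writes combined with majority reads always overlap.
        print(size, needed, quorums_overlap(size, needed, needed))
    # A local-only write (1 node) plus a majority read may miss the write.
    print(quorums_overlap(5, 1, majority(5)))
```

Writing to a single node is the fastest option, but the last line shows the cost: a majority read on a five-node cluster is not guaranteed to observe that write until replication catches up.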

Moreover, Distributed Data Akka offers advanced conflict resolution mechanisms. These mechanisms come into play when multiple nodes attempt to update the same piece of data simultaneously. Through techniques such as version vectors and conflict-free replicated data types (CRDTs), Distributed Data Akka provides the means to handle conflicting updates in a deterministic and orderly manner.
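
The idea behind a CRDT can be sketched with the simplest example, a grow-only counter. The code below is an illustrative plain-Python model, not Akka's implementation: the class name `GCounter` mirrors the common name for this data type, while the node identifiers and method names are assumptions made for the example. Each node increments only its own slot, and two replicas are merged by taking the per-node maximum, so concurrent updates never conflict.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass(frozen=True)
class GCounter:
    """Grow-only counter: one monotonically increasing slot per node."""
    counts: Dict[str, int] = field(default_factory=dict)

    def increment(self, node: str, amount: int = 1) -> "GCounter":
        if amount < 0:
            raise ValueError("a grow-only counter cannot decrease")
        updated = dict(self.counts)
        updated[node] = updated.get(node, 0) + amount
        return GCounter(updated)

    def merge(self, other: "GCounter") -> "GCounter":
        # Per-node maximum: commutative, associative, and idempotent.
        nodes = self.counts.keys() | other.counts.keys()
        return GCounter(
            {n: max(self.counts.get(n, 0), other.counts.get(n, 0)) for n in nodes}
        )

    @property
    def value(self) -> int:
        return sum(self.counts.values())


if __name__ == "__main__":
    base = GCounter()
    on_a = base.increment("node-a", 2)   # update applied on one replica
    on_b = base.increment("node-b", 3)   # concurrent update on another
    merged = on_a.merge(on_b)
    print(merged.value)  # 5
```

Because the merge is commutative and idempotent, replicas can exchange state in any order and any number of times and still arrive at the same value.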

Ultimately, the Distributed Data Akka framework revolutionizes the way developers handle data in distributed systems. By embracing the concepts of decentralization, replication strategies, and conflict resolution, it opens up new possibilities for creating highly scalable and fault-tolerant applications that can thrive in today's increasingly complex and demanding computing landscapes.

Advantages of Utilizing Akka for Distributed Information

In today's digital landscape, organizations are constantly seeking ways to effectively manage and process vast amounts of information from various sources. Distributing data across multiple systems has become a critical requirement to ensure scalability, fault-tolerance, and efficient utilization of resources. Akka, a powerful framework for building distributed systems, offers numerous advantages in handling and processing data in such complex environments.

- Efficient Message-Based Communication: Akka enables asynchronous and event-driven messaging between distributed components, allowing for fast and reliable data transfer. This approach helps in decoupling different parts of the system, enabling concurrent processing and improved scalability.

- Supervision and Fault-Tolerance: Akka's actor model provides built-in supervision: a parent actor decides whether a failed child is resumed, restarted, or stopped, or whether the failure is escalated further up the hierarchy. By isolating failures and recovering automatically, Akka helps keep a fault in one part of the system from cascading into the rest of the data processing pipeline.

- Adaptive Load Balancing: Akka's built-in load balancing capabilities ensure efficient utilization of resources by distributing data processing across multiple nodes. This helps in preventing bottlenecks and allows for dynamic scaling based on the system's needs.

- Ease of Development: Akka's well-defined abstractions and APIs make it easier to develop and maintain distributed data applications. Its actor-based programming model simplifies concurrency management and reduces the complexity of handling distributed state.

- Scalability: Akka is designed to scale horizontally, allowing organizations to handle increasing data volumes by adding more processing nodes. This scalability ensures that data-intensive applications can seamlessly handle growing workloads without compromising performance.
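
The supervision idea from the list above can be sketched in a few lines. This is a deliberately simplified, synchronous model in plain Python rather than Akka code: the `Worker` and `Supervisor` classes and the `max_restarts` parameter are hypothetical names chosen for the example. It shows the core behavior of a restart strategy, where a failing child is replaced by a fresh instance and later messages are still processed.

```python
from typing import Callable


class Worker:
    """Hypothetical worker that keeps a running total and fails on bad input."""

    def __init__(self) -> None:
        self.total = 0

    def handle(self, message: int) -> None:
        if message < 0:
            raise ValueError(f"cannot process {message}")
        self.total += message


class Supervisor:
    """Restarts its child on failure, up to max_restarts times."""

    def __init__(self, factory: Callable[[], Worker], max_restarts: int = 3) -> None:
        self.factory = factory
        self.max_restarts = max_restarts
        self.restarts = 0
        self.child = factory()

    def deliver(self, message: int) -> None:
        try:
            self.child.handle(message)
        except Exception:
            if self.restarts >= self.max_restarts:
                raise  # give up and let the failure propagate upward
            self.restarts += 1
            self.child = self.factory()  # fresh instance, state is reset


if __name__ == "__main__":
    supervisor = Supervisor(Worker)
    for message in (1, 2, -1, 5):
        supervisor.deliver(message)
    print(supervisor.restarts, supervisor.child.total)  # 1 5
```

The failing message is dropped, the worker's in-memory state is lost on restart, and the supervisor keeps delivering messages. State that must survive a restart has to live outside the worker.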

In conclusion, leveraging Akka for distributed data processing brings numerous advantages, including efficient communication, fault-tolerance, adaptive load balancing, ease of development, and scalability. By harnessing these benefits, organizations can build robust and responsive systems capable of handling massive amounts of data in real-time.

What's New in the Latest Version of Akka?

Discover the latest features and enhancements introduced in the newest release of Akka. In this article, we will explore the exciting updates that have been made to this powerful framework for building highly concurrent and distributed applications.

Enhanced functionality: Version 2.5 of Akka brings a range of new capabilities to developers. Most relevant to this article, the Distributed Data module was promoted from experimental to fully supported status, and a coordinated shutdown mechanism was added for stopping an actor system in an orderly sequence. Together with improvements to error handling and message routing, these give developers more tools for building resilient and scalable systems.

Increased performance: The performance of Akka applications has been optimized in version 2.5, allowing for higher throughput and reduced latency. With enhanced actor supervision strategies and optimized message processing algorithms, developers can expect improved responsiveness and resource utilization in their Akka-based systems.

Streamlined development process: Akka 2.5 introduces new features and tools that simplify the development process and make it more intuitive. From enhanced debugging capabilities to improved testing frameworks, developers can now write and maintain Akka applications with greater ease and efficiency.

Expanded ecosystem: With version 2.5, Akka's ecosystem continues to grow, offering developers an even wider range of tools and integrations. From new plugins and extensions to enhanced support for popular libraries and frameworks, the latest release of Akka fosters a vibrant and thriving community of developers.

Conclusion: Version 2.5 of Akka brings a host of exciting new features and improvements, empowering developers to create highly concurrent and distributed applications with enhanced performance and functionality. With a streamlined development process and an expanded ecosystem, Akka continues to evolve as a powerful and versatile framework for building reactive systems. Stay tuned for more updates and innovations from the Akka community.

Getting Started with Docker on Minimalistic Alpine OS

In this section, we will explore the process of setting up Docker on an Alpine Linux distribution. The focus will be on the lightweight nature of Alpine OS and how it can benefit Docker deployment.

Exploring Alpine Linux

Alpine Linux is a minimalistic operating system that provides a secure and lightweight base for Docker containers. Built with a focus on simplicity, security, and efficiency, Alpine Linux offers a stripped-down environment perfect for running containerized applications.

With its minimalistic design and small footprint, Alpine Linux allows for faster container startup times, reduced memory usage, and improved overall performance. This makes it an attractive choice for deploying Docker containers, especially in resource-constrained environments.

Setting up Docker on Alpine Linux

The process of setting up Docker on Alpine Linux involves a few steps, but it is relatively straightforward. First, we need to ensure that the necessary dependencies are installed, including the Docker Engine and Docker Compose. Next, we will configure the Docker daemon to start automatically upon system boot.

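- Install the Docker Engine: Use Alpine's package manager to install the Docker Engine package, for example with `apk add docker`. The package lives in the community repository, which must be enabled in `/etc/apk/repositories`.

- Install Docker Compose: Install Docker Compose from the Alpine package repository where a package is available for your release, or download the Docker Compose binary and place it on your path.

- Configure Docker Daemon: Alpine uses OpenRC as its init system, so the Docker daemon is set to start on boot by adding its service to a runlevel, for example with `rc-update add docker default`, rather than by editing a config file. Start it immediately with `service docker start`.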

Once these steps are completed, Docker should be up and running on your Alpine Linux distribution. You can now start working with containers, pulling images from Docker Hub, and deploying your applications in a lightweight and efficient manner.

In conclusion, setting up Docker on Alpine Linux provides an excellent platform for running containerized applications. The lightweight nature of Alpine Linux enhances performance, reduces resource usage, and improves overall efficiency. By following the steps outlined in this section, you can easily get started with Docker on Alpine Linux and enjoy the benefits it offers.

Advantages of Alpine Linux in Docker

Lightweight and Efficient

When it comes to choosing an operating system for Docker containers, Alpine Linux stands out as a lightweight and efficient option. This Linux distribution is specifically designed to be minimalistic and optimized for resource usage. Its compact size allows for faster download and deployment of containers, making it ideal for distributed systems.

Enhanced Security

Alpine Linux takes a security-oriented approach. Its minimal package set reduces the potential attack surface of a container image. Alpine is also built on musl libc rather than glibc. musl is a small, compact C library, but software that assumes glibc, including some JVM builds and native libraries, may need a musl-compatible build to run on it.

Faster Container Startup Times

One of the advantages of using Alpine Linux with Docker is faster image distribution. Because the base image is small, it can be pulled and extracted quickly, so a new container is ready to launch sooner on a node that does not yet have the image cached. Once the image is local, startup time is dominated by the application itself, which for Akka means JVM and actor system startup. Fast image pulls are particularly beneficial in distributed systems where rapid scaling and responsiveness are crucial.

Streamlined Maintenance and Updates

Alpine Linux offers a simplified approach to maintenance and updates. The distribution publishes stable releases roughly every six months, each of which receives security patches for a fixed support period, alongside a rolling `edge` branch for users who want the newest packages. Pinning a container image to a specific stable release gives you security fixes through routine image rebuilds without unexpected major upgrades.

Rich Ecosystem and Community Support

Despite its minimalist design, Alpine Linux benefits from a vibrant and active community that provides extensive support. Its ecosystem includes a wide range of pre-built packages and community-maintained repositories, making it easy to find and install the necessary tools and libraries for your Docker applications.

Conclusion

Considering the benefits of Alpine Linux, such as its lightweight nature, enhanced security, fast startup times, streamlined maintenance, and active community support, it becomes clear why it is a preferred choice for running Docker containers. By leveraging Alpine Linux, you can optimize your containerized applications for efficiency, security, and performance in distributed systems.

Deploying the Latest Version of Akka Framework on a Lightweight Container

In this section, we will explore the process of running the most up-to-date release of the popular Akka framework on a small and efficient container. By leveraging the power of containerization, we can easily deploy and manage our Akka applications without the need for complex infrastructure setup.
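
As a starting point, a container image for an Akka application on Alpine might look like the following Dockerfile. Treat it as a sketch under stated assumptions rather than a definitive setup: the base image tag, the `akka` user name, the `target/app-assembly.jar` path, and port 2552 are all placeholders, and the JAR is assumed to be a self-contained "fat" JAR produced by your build tool beforehand.

```dockerfile
# Assumed base image: a Java 8 runtime on Alpine. Substitute the JRE image you use.
FROM openjdk:8-jre-alpine

WORKDIR /opt/app

# Run as an unprivileged user rather than root.
RUN addgroup -S akka && adduser -S akka -G akka

# Hypothetical artifact name: a fat JAR built before the image is assembled.
COPY --chown=akka:akka target/app-assembly.jar app.jar

USER akka

# Hypothetical remoting port. It must match the port in the application's configuration.
EXPOSE 2552

ENTRYPOINT ["java", "-jar", "app.jar"]
```

Keeping the image to a JRE plus a single JAR keeps the layer that changes on each build small, which suits the fast-pull advantage of an Alpine base.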

Step-by-Step Guide to Deployment

In this section, we will walk you through a detailed step-by-step guide on the deployment process for our solution. We will cover all the necessary steps and provide clear instructions to ensure a smooth and successful deployment.

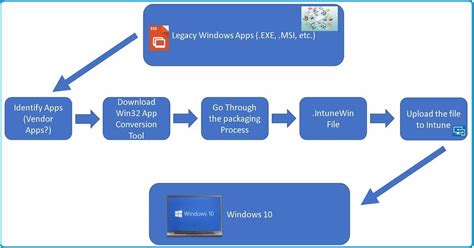

- Preparation: Before starting the deployment process, it is essential to prepare the environment by setting up the required resources and dependencies. This includes installing the necessary software, configuring network settings, and allocating appropriate hardware resources. Ensure that all prerequisites are met to avoid any issues during deployment.

- Configuration: Next, we will guide you through the configuration process. This involves defining various parameters and settings that will govern the behavior of the system. We will provide detailed explanations and examples to help you customize the configuration based on your specific requirements.

- Build and Packaging: Once the environment is prepared and the configuration is finalized, we will demonstrate how to build and package the solution. This step involves compiling the source code, resolving dependencies, and creating a deployable artifact. We will provide instructions for different build tools and highlight best practices for efficient packaging.

- Deployment Setup: In this step, we will guide you through the setup process for deploying the packaged solution. We will cover different deployment options, such as deploying to a single server or a cluster of machines. We will explain the necessary steps for setting up networking, establishing communication channels, and ensuring high availability and fault tolerance.

- Testing and Validation: Once the deployment setup is complete, it is crucial to conduct thorough testing and validation to ensure the correct functioning of the system. We will provide guidelines on designing and executing test cases, monitoring system performance, and troubleshooting common issues.

- Monitoring and Maintenance: After the successful deployment and validation of the system, it is essential to establish robust monitoring and maintenance practices. We will guide you through the process of setting up monitoring tools, configuring alerts, and performing routine maintenance tasks. These practices will help you identify and address potential issues proactively, ensuring the smooth operation of the deployed solution.

By following this step-by-step guide, you will be able to deploy our solution efficiently and effectively. Each step is carefully explained to provide clear instructions, and we have included best practices and tips to help you avoid common pitfalls. Stay tuned for the upcoming sections, where we will delve deeper into each step and provide additional insights for a successful deployment.
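
For the configuration and deployment setup steps, the part that most often needs attention in a container is remoting, because the address a node binds to inside the container usually differs from the address other nodes use to reach it. The fragment below is a hedged example of an `application.conf` for Akka 2.5 with classic remoting. The actor system name `app`, the host names `seed-1` and `seed-2`, and the `AKKA_HOSTNAME` environment variable are hypothetical and should be replaced with your own values.

```
akka {
  actor.provider = "cluster"

  remote.netty.tcp {
    # Address other nodes use to reach this node (hypothetical environment variable).
    hostname = ${?AKKA_HOSTNAME}
    port = 2552

    # Address the process binds to inside the container.
    bind-hostname = "0.0.0.0"
    bind-port = 2552
  }

  cluster {
    # Hypothetical system name and host names.
    seed-nodes = [
      "akka.tcp://app@seed-1:2552",
      "akka.tcp://app@seed-2:2552"
    ]
  }
}
```

The actor system name in the seed node addresses must match the name used when the application creates its actor system, and every node should be given the same seed node list.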

Best Practices for Running Akka in Containerized Environments

In this section, we will explore the recommended practices for effectively running Akka applications in containerized environments. Containerization has provided developers with a convenient way to deploy and manage applications, enabling scalability and portability. However, when it comes to running Akka, there are certain considerations that should be taken into account for optimal performance and stability.

One of the key aspects to consider is the resource allocation in a containerized environment. Akka applications heavily rely on memory and CPU resources, and it is crucial to properly configure resource limits for containers to prevent performance degradation and ensure stability. This involves analyzing the resource requirements of the Akka application, monitoring resource utilization, and setting appropriate resource limits for each container.
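
One reason CPU limits matter is that a fork-join dispatcher sizes its thread pool from the number of processors the JVM reports. The sketch below reproduces that calculation in plain Python so the effect is easy to see. The function name is hypothetical, and the default values (factor 3.0, minimum 8, maximum 64) are assumed to match the default dispatcher settings shipped with Akka 2.5; check the `reference.conf` of your version before relying on them.

```python
import math


def fork_join_parallelism(cores: int, factor: float = 3.0,
                          minimum: int = 8, maximum: int = 64) -> int:
    """Thread count as ceil(cores * factor), clamped to [minimum, maximum]."""
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return min(max(math.ceil(cores * factor), minimum), maximum)


if __name__ == "__main__":
    for cores in (1, 2, 4, 32):
        print(cores, fork_join_parallelism(cores))
    # Prints: 1 8, 2 8, 4 12, 32 64 (one pair per line)
```

With these assumed defaults, a container limited to a single CPU still gets eight dispatcher threads, and a JVM that sees all 32 host cores instead of the container's quota gets 64. Setting the parallelism values explicitly for the container size avoids both surprises.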

Another important practice is to manage the lifecycle of the Akka actor system carefully in containerized environments. An application typically runs a single actor system per JVM, with its own actor hierarchy, supervisor strategies, and dispatcher configurations, and the container runtime stops it by sending a termination signal. It is necessary to design the actor hierarchy carefully, ensure that supervision and fault tolerance mechanisms are in place to handle failures, and let the actor system shut down gracefully so that a node can leave the cluster cleanly before the container exits.

Furthermore, it is advisable to implement proper logging and monitoring mechanisms for Akka applications running in containers. Logging plays a crucial role in troubleshooting and performance analysis, providing valuable insights into the behavior of the Akka system. Integrating a centralized logging solution, such as the ELK stack, alongside a metrics system such as Prometheus can greatly simplify the monitoring and analysis of Akka applications, helping to identify and resolve issues more efficiently.

| Best Practices for Running Akka in Containers |
|---|
| 1. Properly allocate and manage resource limits |
| 2. Design resilient actor hierarchies and fault tolerance mechanisms |
| 3. Implement effective logging and monitoring |

In summary, running Akka applications in containerized environments requires careful consideration of resource allocation, actor system design, and logging and monitoring practices. By following these best practices, developers can ensure the optimal performance and stability of their Akka applications in a containerized setting.

FAQ

What is Distributed Data Akka 2.5?

Distributed Data Akka 2.5 refers to the Distributed Data module of the Akka toolkit, version 2.5. It is used for building distributed, elastic, and fault-tolerant systems and provides an API for sharing and replicating state across the nodes of an Akka cluster.

What is Docker Alpine Linux?

Docker Alpine Linux refers to Alpine Linux used as the base image for Docker containers. Alpine is a lightweight, minimalistic, general-purpose Linux distribution rather than one built specifically for Docker, but its small size and reduced attack surface compared to many other distributions have made it a popular container base.

Why should I use Distributed Data Akka 2.5 on Docker Alpine Linux?

Using Distributed Data Akka 2.5 on Docker Alpine Linux can bring various benefits. It allows you to build distributed systems that are highly scalable, fault-tolerant, and easy to deploy. Docker Alpine Linux's lightweight nature ensures efficient resource utilization and faster container startup times.

How does Distributed Data Akka 2.5 handle data replication?

Distributed Data Akka 2.5 handles data replication by using a combination of conflict-free replicated data types (CRDTs) and gossip protocols. CRDTs enable concurrent updates and conflict resolution, while gossip protocols facilitate the exchange of state updates between nodes in the distributed system.
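
How gossip and a CRDT-style merge work together can be shown with a small simulation. The following is an illustrative plain-Python model, not Akka's replicator: the function name, the node names, and the fixed random seed are assumptions made for the example. Each node holds a grow-only set, pushes its state to one randomly chosen peer per round, and the receiver merges by set union.

```python
import random
from typing import Dict, FrozenSet, List, Tuple

State = Dict[str, FrozenSet[str]]


def gossip_until_converged(initial: State, seed: int = 0,
                           max_rounds: int = 1000) -> Tuple[int, State]:
    """Push-gossip grow-only sets between random peers.

    Returns the number of rounds used and the final state of every node.
    """
    rng = random.Random(seed)
    state = dict(initial)
    nodes: List[str] = sorted(state)
    target = frozenset().union(*state.values())
    rounds = 0
    while not all(s == target for s in state.values()):
        if rounds == max_rounds:
            raise RuntimeError("state did not converge")
        rounds += 1
        for sender in nodes:
            receiver = rng.choice([n for n in nodes if n != sender])
            # Merge is set union, so message order and repetition do not matter.
            state[receiver] = state[receiver] | state[sender]
    return rounds, state


if __name__ == "__main__":
    start = {
        "node-a": frozenset({"x"}),
        "node-b": frozenset({"y"}),
        "node-c": frozenset({"z"}),
    }
    rounds, final = gossip_until_converged(start)
    print(rounds, sorted(final["node-a"]))
```

No node coordinates the exchange, yet every replica ends up with the same set, because the union merge gives the same result regardless of which peers happened to talk to each other first.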

Are there any performance considerations when using Distributed Data Akka 2.5 on Docker Alpine Linux?

When using Distributed Data Akka 2.5 on Docker Alpine Linux, it is important to consider the hardware resources available for your containers. Ensure that each container has sufficient CPU, memory, and disk resources to handle the workload. Additionally, optimizing network communication between nodes can also improve overall performance.

What is Akka?

Akka is a toolkit and runtime for building highly concurrent, distributed, and fault-tolerant applications on the Java Virtual Machine (JVM).