Efficiently deploying applications that rely on GPU acceleration is an essential part of modern software development. Today, we look at how to streamline the deployment of Windows-based software with Nvidia Docker.

Imagine deploying and configuring software without the driver mismatches and compatibility problems that often come with conventional installation methods. With Nvidia Docker, developers can ship GPU-accelerated applications that behave the same way on every supported machine while keeping direct access to the GPU, which simplifies the entire deployment workflow.

This article focuses on the benefits of Nvidia Docker for deploying GPU-accelerated Windows applications. By packaging the software, its CUDA libraries, and its other dependencies into a container image, Nvidia Docker removes the need to install and configure those components by hand on every target system, which can save developers a considerable amount of setup time. Only the Nvidia GPU driver still has to be installed on the host; the container runtime makes it available inside the container.

Understanding Docker Containers and Nvidia Docker

In this section, we explore the fundamental concepts behind Docker containers and Nvidia Docker and the benefits each provides. Understanding these concepts makes it clearer how the two technologies work together to deploy applications efficiently and make good use of Nvidia GPUs.

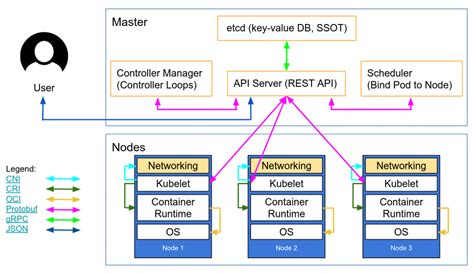

Docker containers are lightweight, portable units that encapsulate applications and their dependencies. Rather than emulating hardware like a virtual machine, a container isolates processes while sharing an operating-system kernel, which keeps it small and quick to start. Containers let developers package software with all the necessary components, such as libraries and frameworks, ensuring consistent behavior across different environments. By isolating applications from the underlying infrastructure, Docker containers provide a reliable and reproducible environment for running applications.

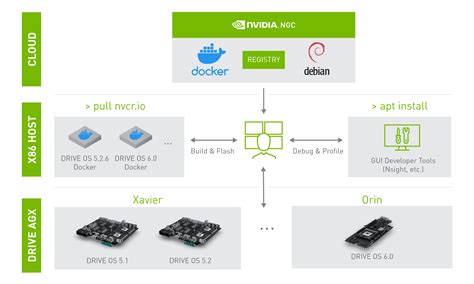

Nvidia Docker, on the other hand, is an extension of Docker that enables GPU-accelerated applications to run inside Docker containers; it is now distributed as the NVIDIA Container Toolkit. Rather than virtualizing the GPU, it exposes the host's GPU devices and driver libraries to the container when the container starts, so applications inside the container access the Nvidia GPU directly. Developers therefore do not have to install CUDA and related libraries on every host, although each host still needs an Nvidia GPU and a compatible driver.
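A quick way to confirm that a container really sees the GPU is to start a throwaway container with the `--gpus` flag and run `nvidia-smi` inside it. The Python sketch below builds and optionally runs that command. It assumes Linux containers with GPU support (for example under Docker Desktop's WSL 2 backend), and the image tag `nvidia/cuda:12.2.0-base-ubuntu22.04` is only an example; the function names are hypothetical.

```python
import shutil
import subprocess
from typing import List

# Example tag only (assumption); use the CUDA base image that matches your application.
CUDA_IMAGE = "nvidia/cuda:12.2.0-base-ubuntu22.04"


def gpu_smoke_test_command(image: str = CUDA_IMAGE) -> List[str]:
    """Command that starts a throwaway container with access to all GPUs
    and runs nvidia-smi inside it."""
    return ["docker", "run", "--rm", "--gpus", "all", image, "nvidia-smi"]


def run_gpu_smoke_test(image: str = CUDA_IMAGE) -> bool:
    """Run the smoke test; returns False if docker is missing or the run fails."""
    if shutil.which("docker") is None:
        print("docker CLI not found on PATH")
        return False
    result = subprocess.run(gpu_smoke_test_command(image), capture_output=True, text=True)
    print(result.stdout if result.returncode == 0 else result.stderr)
    return result.returncode == 0


if __name__ == "__main__":
    # Only print the command here; call run_gpu_smoke_test() to actually execute it.
    print(" ".join(gpu_smoke_test_command()))
```

If `nvidia-smi` prints the host's GPU table from inside the container, the driver libraries are being passed through correctly.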

A key benefit of Nvidia Docker is that a wide range of GPU-friendly workloads, including machine learning algorithms, scientific simulations, and graphical rendering tasks, can be containerized without losing access to GPU acceleration. Because these workloads parallelize well, running them on Nvidia GPUs can significantly reduce execution time, boosting productivity and shortening time-to-results.

| Docker Containers | Nvidia Docker |
|---|---|
| Encapsulate applications and their dependencies | Enables deployment of GPU-accelerated applications in containers |
| Isolate applications from the underlying infrastructure | Gives containers direct access to the host's Nvidia GPUs |
| Provide consistent behavior across different environments | Integrates GPU access into the standard Docker workflow |
| Are lightweight and portable | Accelerates machine learning, scientific simulation, and graphical rendering workloads |

With a grasp of these core concepts, readers will be better equipped to use Docker containers and Nvidia Docker for Windows applications. Used properly, Nvidia Docker lets compute-intensive workloads take full advantage of the available Nvidia GPUs.

Preparing the Environment for Deploying a Windows-Based Program

To deploy a Windows application with Nvidia Docker, you first need an environment that meets the following prerequisites. This section lists the components to install and configure before deployment.

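- Install a current, fully updated release of Windows 10 or Windows 11 on the designated hardware. If you use a virtual machine, the Nvidia GPU must be passed through to it.

- Ensure that the system meets the minimum hardware requirements of your application, including CPU, RAM, and storage capacity, and that it has an Nvidia GPU.

- Install a current Nvidia GPU driver on the Windows host. The driver is installed on the host only, not inside containers.

- Confirm that the host driver supports the CUDA version your application needs. The CUDA toolkit itself ships in the container image rather than being installed on the host.

- Verify that the Docker engine is installed and properly configured on the Windows system.

- Enable GPU support in Docker. With Docker Desktop on Windows, this is provided through the WSL 2 backend, which runs Linux containers; on a standalone Linux Docker engine, it requires installing the NVIDIA Container Toolkit (the successor to nvidia-docker).

- Configure the network settings, such as published ports and firewall rules, so that the Windows host and its clients can communicate with the deployed application.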
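Several of these prerequisites can be checked from the command line. The following Python sketch is a minimal, hypothetical preflight check: it assumes `docker` and `nvidia-smi` are on PATH and only reports what it finds. An `nvidia` entry in `docker info` is expected where the NVIDIA Container Toolkit is registered with the Docker engine; Docker Desktop's WSL 2 backend may not list one even though `--gpus` works, so treat a missing entry as a prompt to run a GPU smoke test rather than as a failure.

```python
import json
import shutil
import subprocess
from typing import Dict, List


def parse_driver_versions(csv_text: str) -> List[str]:
    """Parse `nvidia-smi --query-gpu=driver_version --format=csv,noheader`
    output (one line per GPU) into a list of version strings."""
    return [line.strip() for line in csv_text.splitlines() if line.strip()]


def has_nvidia_runtime(runtimes_json: str) -> bool:
    """Check `docker info --format "{{json .Runtimes}}"` output for a runtime named "nvidia"."""
    try:
        runtimes = json.loads(runtimes_json)
    except json.JSONDecodeError:
        return False
    return isinstance(runtimes, dict) and "nvidia" in runtimes


def preflight() -> Dict[str, object]:
    """Collect basic facts about the host; missing tools are reported, not raised."""
    report: Dict[str, object] = {
        "docker_on_path": shutil.which("docker") is not None,
        "nvidia_smi_on_path": shutil.which("nvidia-smi") is not None,
    }
    if report["nvidia_smi_on_path"]:
        out = subprocess.run(
            ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
            capture_output=True, text=True,
        )
        report["driver_versions"] = parse_driver_versions(out.stdout)
    if report["docker_on_path"]:
        out = subprocess.run(
            ["docker", "info", "--format", "{{json .Runtimes}}"],
            capture_output=True, text=True,
        )
        report["nvidia_runtime_listed"] = has_nvidia_runtime(out.stdout)
    return report


if __name__ == "__main__":
    for key, value in preflight().items():
        print(f"{key}: {value}")
```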

Once these steps are complete, the environment is ready for deploying your Windows application with Nvidia Docker, and you can run and manage it with both Nvidia GPU acceleration and Docker containerization available.

Building and Configuring Images for GPU Support in Containerized Environments

In this section, we outline how to create and customize Docker images that can use Nvidia GPUs in containerized environments. With Nvidia Docker, such images give high-performance computing and machine learning applications efficient access to GPU capabilities.

- Understanding the Requirements for GPU-enabled Containers

- Selecting a CUDA Base Image (Drivers Stay on the Host)

- Adding Libraries and Dependencies

- Configuring Environment Variables and Paths

- Optimizing the Dockerfile for GPU Support

- Building and Tagging the Nvidia Docker Image

- Running Image Verification and Testing

- Pushing the Image to a Registry for Sharing
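The steps above can be sketched in Python as a small generator for the Dockerfile plus the build, verify, and push commands. This is an illustration under stated assumptions, not a prescribed layout: the base image `nvidia/cuda:12.2.0-runtime-ubuntu22.04`, the registry `registry.example.com/team/gpu-app:1.0`, the `/opt/app` path, and the function names are all placeholders. The official CUDA images typically set `NVIDIA_VISIBLE_DEVICES` and `NVIDIA_DRIVER_CAPABILITIES` already; they are repeated here to make the configuration explicit.

```python
import json
from typing import List, Sequence

# Placeholder values (assumptions): use your own CUDA base image and registry.
BASE_IMAGE = "nvidia/cuda:12.2.0-runtime-ubuntu22.04"
IMAGE_TAG = "registry.example.com/team/gpu-app:1.0"


def render_dockerfile(base_image: str, apt_packages: Sequence[str], cmd: Sequence[str]) -> str:
    """Return Dockerfile text for a GPU-enabled image.

    The Nvidia driver is deliberately not installed: the container runtime
    injects the host's driver libraries when the container starts.
    """
    lines = [
        f"FROM {base_image}",
        # Tell the Nvidia container runtime which GPUs and driver features to expose.
        "ENV NVIDIA_VISIBLE_DEVICES=all",
        "ENV NVIDIA_DRIVER_CAPABILITIES=compute,utility",
    ]
    if apt_packages:
        # One RUN layer, with the apt cache removed to keep the image small.
        lines.append(
            "RUN apt-get update && apt-get install -y --no-install-recommends "
            + " ".join(apt_packages)
            + " && rm -rf /var/lib/apt/lists/*"
        )
    lines += [
        "WORKDIR /opt/app",
        "COPY . /opt/app",
        # Exec-form CMD: a JSON array, so no shell is involved at start-up.
        "CMD " + json.dumps(list(cmd)),
    ]
    return "\n".join(lines) + "\n"


def pipeline_commands(tag: str, context: str = ".") -> List[List[str]]:
    """Build and tag, verify on the GPU, then push, in that order."""
    return [
        ["docker", "build", "-t", tag, context],
        ["docker", "run", "--rm", "--gpus", "all", tag, "nvidia-smi"],
        ["docker", "push", tag],
    ]


if __name__ == "__main__":
    print(render_dockerfile(BASE_IMAGE, ["python3"], ["python3", "main.py"]))
    for command in pipeline_commands(IMAGE_TAG):
        print(" ".join(command))
```

Running the verification step before the push means a broken image never reaches the shared registry.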

Working through these steps gives you the knowledge needed to build and configure Nvidia Docker images and to deploy GPU-accelerated applications in containerized environments. Whether you are a developer, a data scientist, or an infrastructure professional, understanding how these images are built and configured is central to making full use of Nvidia GPUs in your workflow.

Enabling Windows Programs to Run Efficiently with Nvidia Containerization

In this section, we look at how to run Windows software efficiently in an Nvidia Docker environment. Combining GPU acceleration with containerization can improve performance and streamline deployment for Windows applications. The following subsections cover the key strategies and considerations for deploying Windows programs in Nvidia Docker containers.

Enhancing Performance with GPU Acceleration

One of the significant advantages of leveraging Nvidia Docker is the ability to harness GPU acceleration for Windows applications. By offloading computational tasks to the GPU, programs can achieve substantial speed improvements and optimize resource utilization. In this section, we will explore how to enable GPU acceleration for Windows applications and the considerations for choosing the right GPU resources.
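Choosing GPU resources usually comes down to the `--gpus` flag of `docker run`, which accepts `all`, a count, or specific device indices. The Python sketch below builds that flag and, as one possible selection policy (an assumption, not the only sensible one), picks the GPU with the most free memory as reported by `nvidia-smi --query-gpu=index,memory.free --format=csv,noheader,nounits`. It assumes Linux containers with GPU support, such as those under Docker Desktop's WSL 2 backend; the helper names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def parse_free_memory(csv_text: str) -> List[Tuple[int, int]]:
    """Parse nvidia-smi "index, free MiB" lines into (index, free_mib) tuples."""
    pairs = []
    for line in csv_text.splitlines():
        if not line.strip():
            continue
        index, free = (field.strip() for field in line.split(","))
        pairs.append((int(index), int(free)))
    return pairs


def pick_freest_gpu(csv_text: str) -> int:
    """Return the index of the GPU with the most free memory."""
    pairs = parse_free_memory(csv_text)
    if not pairs:
        raise ValueError("no GPUs reported")
    return max(pairs, key=lambda pair: pair[1])[0]


def gpus_flag(devices: Optional[Sequence[int]] = None, count: Optional[int] = None) -> List[str]:
    """Return the `--gpus` argument pair for `docker run`.

    no arguments   -> all GPUs        (--gpus all)
    count=N        -> any N GPUs      (--gpus N)
    devices=[0, 2] -> specific GPUs   (--gpus "device=0,2")
    """
    if devices is not None and count is not None:
        raise ValueError("specify either devices or count, not both")
    if devices is not None:
        if not devices:
            raise ValueError("devices must not be empty")
        ids = ",".join(str(d) for d in devices)
        # The embedded double quotes stop Docker from splitting the value at the comma;
        # subprocess passes them through verbatim because no shell is involved.
        return ["--gpus", f'"device={ids}"']
    if count is not None:
        if count < 1:
            raise ValueError("count must be at least 1")
        return ["--gpus", str(count)]
    return ["--gpus", "all"]
```

For example, `["docker", "run", "--rm", *gpus_flag(devices=[pick_freest_gpu(output)]), image]` pins a container to the least-loaded GPU, where `output` is the captured `nvidia-smi` text and `image` is your image name.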

Containerization for Seamless Deployment

Nvidia Docker offers a containerized approach to deploying Windows applications, ensuring consistent and reproducible environments across different systems. In this section, we will discuss the benefits of containerization, including isolation, portability, and scalability. We will also explore the steps involved in creating and managing Windows containers in Nvidia Docker, along with best practices for optimizing container performance.
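Creating and managing containers is easier when every container belonging to an application is named, labeled, and given a restart policy. The Python sketch below builds the corresponding Docker CLI commands. The label `com.example.app=gpu-app` and the function names are hypothetical, and `--gpus` assumes Linux containers with GPU support (for example under Docker Desktop's WSL 2 backend).

```python
from typing import List

# Hypothetical label used to tag every container belonging to this application.
APP_LABEL = "com.example.app=gpu-app"


def start_command(image: str, name: str) -> List[str]:
    """Start a detached, named, labeled container that Docker restarts
    automatically unless it was stopped explicitly."""
    return [
        "docker", "run", "-d",
        "--name", name,
        "--restart", "unless-stopped",
        "--label", APP_LABEL,
        "--gpus", "all",
        image,
    ]


def list_command() -> List[str]:
    """List the names of running containers that carry the application label."""
    return ["docker", "ps", "--filter", f"label={APP_LABEL}", "--format", "{{.Names}}"]


def stop_and_remove_commands(names: List[str]) -> List[List[str]]:
    """Stop the given containers, then remove them."""
    if not names:
        return []
    return [["docker", "stop", *names], ["docker", "rm", *names]]
```

Filtering on a label rather than on name patterns keeps management commands from touching unrelated containers on the same host.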

Optimizing Resource Management

Efficient resource management is crucial for maximizing the performance and stability of Windows programs running in Nvidia Docker. In this section, we will delve into strategies for effectively allocating CPU, memory, and disk resources to Windows containers. We will also explore ways to monitor and optimize resource usage, ensuring a balanced and efficient execution environment for your applications.
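CPU and memory can be capped per container with `docker run --cpus` and `--memory`, and usage can be sampled with `docker stats` on the container side and `nvidia-smi` on the GPU side. The Python sketch below builds a resource-limited run command and parses both monitoring outputs. The limits and function names are illustrative assumptions, and the GPU parser assumes `nvidia-smi` reports numeric values (some GPUs report `[N/A]` for certain fields).

```python
import json
from typing import Dict, List

# One JSON object per running container.
STATS_COMMAND = ["docker", "stats", "--no-stream", "--format", "{{json .}}"]
# One "index, utilization %, memory used MiB" line per GPU.
GPU_QUERY_COMMAND = [
    "nvidia-smi",
    "--query-gpu=index,utilization.gpu,memory.used",
    "--format=csv,noheader,nounits",
]


def limited_run_command(image: str, cpus: float, memory: str) -> List[str]:
    """docker run with a CPU quota (in cores), a hard memory limit (e.g. "8g") and all GPUs."""
    return ["docker", "run", "--rm", "--cpus", str(cpus), "--memory", memory, "--gpus", "all", image]


def parse_stats_line(line: str) -> Dict[str, object]:
    """Parse one JSON line from STATS_COMMAND; percentages arrive as strings like "12.50%"."""
    raw = json.loads(line)
    return {
        "name": raw["Name"],
        "cpu_percent": float(raw["CPUPerc"].rstrip("%")),
        "mem_percent": float(raw["MemPerc"].rstrip("%")),
    }


def parse_gpu_usage(csv_text: str) -> List[Dict[str, int]]:
    """Parse GPU_QUERY_COMMAND output into one dict per GPU."""
    rows = []
    for line in csv_text.splitlines():
        if line.strip():
            index, util, used = (int(field.strip()) for field in line.split(","))
            rows.append({"index": index, "util_percent": util, "mem_used_mib": used})
    return rows
```

Note that `CPUPerc` can exceed 100% on multi-core hosts, since it is measured relative to a single core.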

Ensuring Compatibility

Compatibility between Windows applications and Nvidia Docker is essential for successful deployment. In this section, we will discuss the considerations and steps involved in ensuring your applications are compatible with the Nvidia Docker environment. We will explore techniques for compatibility testing and validation, allowing you to identify and resolve any potential issues before deploying your applications in production.
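A simple first compatibility check is to confirm that a given image can see the expected number of GPUs. The Python sketch below runs `nvidia-smi -L` inside the image and parses its `GPU <n>: <name> (UUID: GPU-...)` lines. The function names and the minimum-GPU threshold are assumptions for illustration; a fuller validation would also run the application's own test suite inside the container.

```python
import re
import shutil
import subprocess
from typing import Dict, List, Tuple

# Matches lines such as "GPU 0: <model name> (UUID: GPU-...)" printed by `nvidia-smi -L`.
GPU_LINE = re.compile(r"^GPU (\d+): (.+?) \(UUID: (GPU-[0-9A-Fa-f-]+)\)$")


def parse_gpu_list(text: str) -> List[Dict[str, object]]:
    """Extract index, name and UUID for each GPU listed by `nvidia-smi -L`."""
    gpus = []
    for line in text.splitlines():
        match = GPU_LINE.match(line.strip())
        if match:  # other lines, such as MIG device entries, are ignored
            gpus.append({"index": int(match.group(1)), "name": match.group(2), "uuid": match.group(3)})
    return gpus


def check_image_sees_gpus(image: str, min_gpus: int = 1) -> Tuple[bool, str]:
    """Run `nvidia-smi -L` inside the image and confirm that enough GPUs are visible."""
    if shutil.which("docker") is None:
        return False, "docker CLI not found on PATH"
    cmd = ["docker", "run", "--rm", "--gpus", "all", image, "nvidia-smi", "-L"]
    result = subprocess.run(cmd, capture_output=True, text=True)
    if result.returncode != 0:
        return False, result.stderr.strip()
    gpus = parse_gpu_list(result.stdout)
    if len(gpus) < min_gpus:
        return False, f"expected at least {min_gpus} GPU(s), found {len(gpus)}"
    return True, ", ".join(str(gpu["name"]) for gpu in gpus)
```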

By following the strategies and best practices outlined in this section, you will be equipped to deploy your Windows applications efficiently in Nvidia Docker, combining GPU acceleration, consistent container-based deployment, careful resource management, and compatibility checks to get the most out of your Windows programs.

FAQ

Can you explain what Nvidia Docker is?

Nvidia Docker is a tool that allows you to containerize applications that require NVIDIA GPU acceleration. It provides a way to run GPU-accelerated applications within Docker containers, making it easier to deploy and manage such applications.

Why is deploying Windows applications in Nvidia Docker beneficial?

Deploying Windows applications in Nvidia Docker comes with several benefits. First, containers provide a consistent, isolated environment, which makes deployment easy to repeat. Second, GPU resources can be used efficiently: multiple containers can share the same GPU, although they then compete for its compute time and memory. Finally, Nvidia Docker simplifies setup: CUDA libraries and other dependencies ship inside the image, and the container runtime makes the host's Nvidia driver available to the container, so only the driver has to be installed on the host.

Are there any limitations or prerequisites for using Nvidia Docker with Windows applications?

Yes. You need a compatible version of Windows (with Docker Desktop, GPU access for containers is provided through the WSL 2 backend) and a CUDA-capable Nvidia GPU with a current Nvidia driver installed on the host. The CUDA toolkit does not need to be installed on the host, because it is included in the container image; the host driver must, however, support the CUDA version the image uses. Docker must be installed on the system before Nvidia Docker can be used. Finally, make sure that your application is able to run inside a Docker container.