In the digital era, fast and reliable communication between services is no longer a luxury but an essential pillar of businesses and organizations worldwide. As technologies evolve, developers keep looking for better ways to connect services, streamline data exchange, and improve the user experience. One solution that has attracted significant attention is gRPC, a high-performance, open-source framework for remote procedure calls (RPC) between distributed systems. This article shows how to deploy gRPC services built with ASP.NET Core on Linux, with nginx, an industry-standard web server and reverse proxy, routing, balancing, and securing the network traffic.

So, what exactly is gRPC?

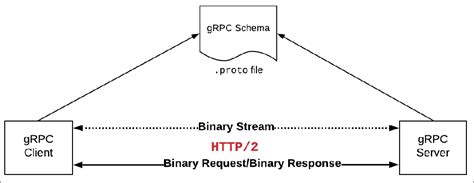

At its core, gRPC is an open-source framework for building high-performance, language-agnostic, and platform-independent services for communication between client and server applications. It uses Google's Protocol Buffers (protobuf) as its interface definition language (IDL): you describe services and messages once in a .proto file, and tooling generates matching client and server code for many programming languages.

Why choose gRPC over traditional communication protocols?

Compared with REST APIs that exchange text-based JSON over HTTP/1.1, gRPC offers several features that make it attractive for modern service development. First, gRPC serializes messages with protobuf, a compact binary format, so payloads are typically smaller than the equivalent JSON. Smaller payloads take less time to transmit and are usually cheaper to parse, which improves overall network efficiency. Second, gRPC supports unary calls as well as server, client, and bidirectional streaming, which suits real-time and highly interactive applications. Finally, with support for load balancing, authentication, and automatic code generation, gRPC lets developers focus on their core logic instead of the details of network communication.
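To make the size difference concrete, here is a minimal Python sketch that hand-encodes a small message in the protobuf wire format (base-128 varints plus length-delimited strings) and compares it with the same data as compact JSON. The message, its field numbers, and the helper names are hypothetical, and the encoder covers only the two wire types it needs; real projects use code generated from a .proto file.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a protobuf base-128 varint."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        low7 = value & 0x7F          # take the low 7 bits
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # continuation bit: more bytes follow
        else:
            out.append(low7)
            return bytes(out)


def encode_int_field(field_number: int, value: int) -> bytes:
    """Wire type 0 (varint): tag = (field_number << 3) | 0, then the value."""
    return encode_varint(field_number << 3) + encode_varint(value)


def encode_string_field(field_number: int, text: str) -> bytes:
    """Wire type 2 (length-delimited): tag, byte length, then UTF-8 bytes."""
    data = text.encode("utf-8")
    return encode_varint((field_number << 3) | 2) + encode_varint(len(data)) + data


# Hypothetical message, as it might be declared in a .proto file:
#   message User { int32 id = 1; string name = 2; }
binary = encode_int_field(1, 150) + encode_string_field(2, "grpc")
text = json.dumps({"id": 150, "name": "grpc"}, separators=(",", ":")).encode("utf-8")

print(binary.hex(), len(binary))  # 089601120467727063 9
print(text, len(text))            # b'{"id":150,"name":"grpc"}' 24
```

For this tiny message the binary form is 9 bytes against 24 bytes of JSON, mostly because protobuf sends field numbers instead of field names; the ratio depends on the data.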

Understanding the Fundamentals and Advantages of gRPC

In this section, we look at the fundamental principles and benefits of using gRPC as a communication protocol. Once you understand its core concepts and advantages, you can apply it effectively in your own projects.

Introduction:

Before diving into the technical details, it helps to understand what gRPC is and the role it plays in modern service development. gRPC was originally developed at Google, and the project's own FAQ expands the name recursively as "gRPC Remote Procedure Calls". It is an open-source framework for efficient and reliable communication between client and server applications. As the name suggests, it is based on remote procedure calls (RPC): the server exposes methods that clients can invoke remotely as if they were local functions.

The Benefits of gRPC:

There are several notable advantages to using gRPC:

Efficiency and Performance:

gRPC uses HTTP/2 as its transport protocol. HTTP/2 multiplexes many concurrent requests as independent streams over a single TCP connection and compresses headers with HPACK, so clients do not need a separate connection for each in-flight request. Compared with REST APIs over HTTP/1.1, this can noticeably reduce latency and overhead when transferring data between client and server.
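The multiplexing idea can be illustrated with HTTP/2's framing layer (RFC 9113). Every frame starts with a 9-byte header (24-bit length, 8-bit type, 8-bit flags, and a 31-bit stream identifier), and the stream identifier is what lets frames from different requests interleave on one connection. The Python sketch below builds and demultiplexes DATA frames only; it deliberately ignores the connection preface, SETTINGS, HPACK-compressed HEADERS frames, and flow control, and the helper names are hypothetical.

```python
import struct

FRAME_HEADER_LEN = 9
DATA = 0x0        # frame type for DATA frames
END_STREAM = 0x1  # flag on the last frame a peer sends on a stream


def pack_frame(frame_type: int, flags: int, stream_id: int, payload: bytes) -> bytes:
    """Build one HTTP/2 frame: 24-bit length, type, flags, 31-bit stream id."""
    header = struct.pack(">I", len(payload))[1:]  # keep the low 3 bytes (24 bits)
    header += struct.pack(">BBI", frame_type, flags, stream_id & 0x7FFFFFFF)
    return header + payload


def demultiplex(buffer: bytes) -> dict:
    """Walk a byte stream of frames and group DATA payloads by stream id."""
    streams = {}
    offset = 0
    while offset + FRAME_HEADER_LEN <= len(buffer):
        length = int.from_bytes(buffer[offset:offset + 3], "big")
        frame_type, _flags, raw_id = struct.unpack(">BBI", buffer[offset + 3:offset + 9])
        stream_id = raw_id & 0x7FFFFFFF           # drop the reserved bit
        payload = buffer[offset + 9:offset + 9 + length]
        if frame_type == DATA:
            streams.setdefault(stream_id, bytearray()).extend(payload)
        offset += FRAME_HEADER_LEN + length
    return {sid: bytes(data) for sid, data in streams.items()}


# Two concurrent requests (client-initiated streams use odd ids) interleave
# their frames on one connection, and the receiver separates them again.
connection_bytes = (
    pack_frame(DATA, 0, 1, b"hello ")
    + pack_frame(DATA, 0, 3, b"other ")
    + pack_frame(DATA, END_STREAM, 1, b"world")
    + pack_frame(DATA, END_STREAM, 3, b"call")
)
print(demultiplex(connection_bytes))  # {1: b'hello world', 3: b'other call'}
```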

Strong Typing and Code Generation:

One of the key benefits of gRPC is strong typing with automatic code generation. The contract between client and server is defined in Protocol Buffers, a language-agnostic interface definition language. From this contract, the protobuf compiler (protoc) and its gRPC plugins generate typed client stubs and server base classes, so you only write the service logic, and in statically typed languages such as C# the compiler catches mismatched message types.

Bidirectional Communication:

Unlike typical REST APIs, where each request gets exactly one response, gRPC supports bidirectional streaming: the client and the server can each send a stream of messages over the same call, independently of each other. This is particularly useful for real-time applications or scenarios that require a continuous flow of data.
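On the wire, each message in a gRPC stream is sent as a length-prefixed frame: a 1-byte compressed flag, a 4-byte big-endian message length, and then the serialized message, as described in gRPC's HTTP/2 protocol specification. The minimal Python sketch below (hypothetical helper names, compression not handled) shows how several messages travel back to back in one direction of a single call; in a bidirectional call, the other direction carries its own independent sequence.

```python
import struct


def frame_message(message: bytes) -> bytes:
    """Prefix a serialized message with gRPC's 5-byte header (uncompressed)."""
    return struct.pack(">BI", 0, len(message)) + message


def read_messages(stream_bytes: bytes) -> list:
    """Split the body of one direction of a gRPC call into individual messages."""
    messages = []
    offset = 0
    while offset < len(stream_bytes):
        compressed, length = struct.unpack_from(">BI", stream_bytes, offset)
        if compressed:
            raise NotImplementedError("this sketch only handles uncompressed messages")
        start = offset + 5
        if start + length > len(stream_bytes):
            raise ValueError("truncated message")
        messages.append(stream_bytes[start:start + length])
        offset = start + length
    return messages


# The client keeps writing messages on the same call; the server reads them
# one by one (and could write its own replies at any time).
client_to_server = b"".join(frame_message(m) for m in [b"ping-1", b"ping-2", b"ping-3"])
print(read_messages(client_to_server))  # [b'ping-1', b'ping-2', b'ping-3']
```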

Language Independence:

gRPC supports a wide range of programming languages, including C#, C++, Go, Java, Python, and Ruby. Developers can therefore choose a different language for the client and for the server, and both sides still interoperate through the shared protobuf contract.

Interoperable and Evolvable:

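Because messages are defined with Protocol Buffers, gRPC services can interoperate across different systems and versions. Protobuf identifies each field by a number rather than by name, and parsers skip field numbers they do not recognize. As a result, new fields can be added in later versions without breaking existing clients or servers, as long as existing field numbers are never reused or given a different type.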
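The compatibility rule follows from how protobuf parsers treat unknown field numbers. The hypothetical decoder below handles only varint and length-delimited fields; it reads a payload from a newer sender that added a field 3, and an older reader that knows only fields 1 and 2 simply skips it. This is a sketch of the mechanism, not a replacement for a protobuf runtime; many real runtimes keep unknown fields so they survive re-serialization instead of dropping them.

```python
def decode_varint(data: bytes, offset: int):
    """Decode a base-128 varint starting at offset; return (value, new_offset)."""
    result, shift = 0, 0
    while True:
        byte = data[offset]
        offset += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:        # no continuation bit: last byte
            return result, offset
        shift += 7


def decode_known_fields(data: bytes, known: set) -> dict:
    """Decode wire types 0 and 2, keeping only field numbers in `known`."""
    fields = {}
    offset = 0
    while offset < len(data):
        tag, offset = decode_varint(data, offset)
        field_number, wire_type = tag >> 3, tag & 0x7
        if wire_type == 0:
            value, offset = decode_varint(data, offset)
        elif wire_type == 2:
            length, offset = decode_varint(data, offset)
            value = data[offset:offset + length]
            offset += length
        else:
            raise NotImplementedError(f"wire type {wire_type} not handled in this sketch")
        # The wire type tells the parser how many bytes to skip, so unknown
        # field numbers can be ignored safely.
        if field_number in known:
            fields[field_number] = value
    return fields


# Newer sender: id=150 (field 1), name="grpc" (field 2), plus a hypothetical
# new field 3 (email) that older readers have never heard of.
newer_payload = b"\x08\x96\x01" + b"\x12\x04grpc" + b"\x1a\x0d" + b"a@example.com"

print(decode_known_fields(newer_payload, {1, 2}))  # {1: 150, 2: b'grpc'}
```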

Conclusion:

Understanding the fundamentals and benefits of gRPC is essential for developers looking to adopt it. By leveraging gRPC's efficiency, strong typing, bidirectional streaming, language independence, and evolvability, you can build robust and scalable applications.

Getting Your ASP.NET Core Project Ready for Efficient gRPC Deployment

One of the essential steps in deploying a gRPC service built with ASP.NET Core to Linux with nginx is setting up the project properly. This section covers how to prepare your ASP.NET Core project so that it performs well and deploys smoothly.

To begin, you must focus on organizing your project structure effectively. This involves structuring your project directory in a logical manner, ensuring clarity and ease of navigation for both development and deployment purposes. Additionally, adopting a consistent naming convention for files and folders will contribute to the overall maintainability of your project.

Next, manage your project dependencies carefully. Use the NuGet package manager to add and update third-party libraries; for hosting gRPC services, the Grpc.AspNetCore package brings in the server components and the tooling that generates C# code from .proto files. Reviewing and updating dependencies regularly gives you the latest features and bug fixes and reduces compatibility issues during deployment.

Furthermore, pay attention to code organization within your ASP.NET Core project. Breaking your code into logical modules or components, each responsible for a specific piece of functionality, improves maintainability and reusability. Following practices such as the SOLID principles and appropriate design patterns leads to a more scalable and flexible codebase.

As you prepare your project, also look at performance. Profiling and performance testing help identify bottlenecks and the critical sections of code worth optimizing. Efficient caching, asynchronous programming (async/await for I/O-bound work), and avoiding unnecessary resource consumption all improve the scalability and responsiveness of the deployed gRPC service.

In conclusion, setting up your ASP.NET Core project correctly before deploying your gRPC service to Linux with nginx is crucial. By organizing your project structure, managing dependencies, structuring your code, and optimizing performance, you can make the deployment smooth and efficient.

Improving Performance by Deploying the gRPC Service on Linux with nginx

In this section, we walk through deploying a gRPC service on Linux behind nginx and look at the parts of that setup that affect performance.

To begin, we set up a Linux server to host the gRPC service. This involves installing the ASP.NET Core Runtime matching the version the app targets (or publishing the app as self-contained, which bundles the runtime) along with any native libraries the service depends on.

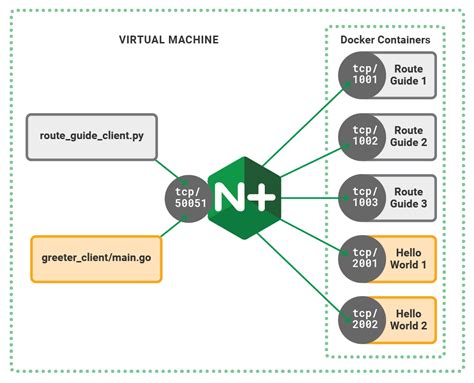

Next, we look at how nginx, a web server and reverse proxy, fits in front of the gRPC service. Acting as a reverse proxy, nginx accepts client connections, can terminate TLS, and forwards each gRPC call to the service over HTTP/2. Offloading this connection handling from the service can reduce its load and improve response times under heavy traffic, although the extra hop itself adds a small amount of latency.
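A minimal nginx sketch of this setup, assuming the ASP.NET Core service listens for cleartext HTTP/2 on the hypothetical address 127.0.0.1:5000 on the same host. The grpc_pass directive comes from nginx's ngx_http_grpc_module; the http2 directive requires nginx 1.25.1 or later (older versions put http2 on the listen line instead). This plaintext variant is only suitable for trusted networks.

```nginx
# Minimal sketch: forward every gRPC call to one local ASP.NET Core process.
# Port 5000 is a hypothetical choice; plaintext HTTP/2 is for trusted networks only.
server {
    listen 80;
    http2 on;        # nginx 1.25.1+; older versions use "listen 80 http2;"

    location / {
        # grpc:// means cleartext HTTP/2 to the backend (grpcs:// would use TLS).
        grpc_pass grpc://127.0.0.1:5000;
    }
}
```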

We also cover nginx's load balancing, which distributes incoming gRPC calls across multiple instances of the service (round-robin by default). Because nginx balances individual calls rather than whole connections, calls arriving on one long-lived HTTP/2 connection are still spread across instances, so increased load does not overwhelm a single instance.
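A sketch of load balancing with an nginx upstream group, assuming two instances of the service on the hypothetical local ports 5000 and 5001:

```nginx
# Sketch: two instances of the service on hypothetical local ports.
upstream grpc_backend {
    # least_conn;   # optional: prefer the instance with the fewest active connections
    server 127.0.0.1:5000;
    server 127.0.0.1:5001;
}

server {
    listen 80;
    http2 on;

    location / {
        grpc_pass grpc://grpc_backend;   # round-robin across the servers above by default
    }
}
```

Round-robin is a reasonable default for short unary calls; for many long-lived streams, least_conn may spread load more evenly, which is worth measuring for your traffic.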

We also look at gRPC-specific settings. HTTP/2 is not an optional optimization here: gRPC requires it, so nginx must accept HTTP/2 from clients and speak HTTP/2 to the backend. On top of that, gRPC can compress individual messages (for example with gzip), which reduces bandwidth for large or repetitive payloads at the cost of some CPU time.
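Message compression happens inside gRPC's own framing: each message carries a 1-byte compressed flag and a 4-byte big-endian length, and the sender announces the algorithm in the grpc-encoding header. The Python sketch below (hypothetical helper names, gzip only, HTTP headers not modeled) compresses one repetitive payload and checks that it round-trips. In ASP.NET Core, response compression is configured on the gRPC service options rather than in nginx, which forwards the compressed messages unchanged.

```python
import gzip
import struct


def frame(payload: bytes, compress: bool) -> bytes:
    """Build one gRPC length-prefixed message, gzip-compressing the payload if asked."""
    if compress:
        payload = gzip.compress(payload)
    flag = 1 if compress else 0
    return struct.pack(">BI", flag, len(payload)) + payload


def unframe(data: bytes) -> bytes:
    """Read one length-prefixed message back, decompressing it when the flag is set."""
    flag, length = struct.unpack_from(">BI", data, 0)
    body = data[5:5 + length]
    return gzip.decompress(body) if flag == 1 else body


# A repetitive payload (for example, a batch of similar readings) compresses well;
# small or random payloads may not shrink at all.
message = b"sensor=t-1;value=21.5;" * 100
plain = frame(message, compress=False)
packed = frame(message, compress=True)
print(len(plain), len(packed))
```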

To secure the deployment, we also discuss configuring nginx to terminate TLS. This protects the privacy and integrity of data in transit, and it is also how most gRPC clients negotiate HTTP/2: through ALPN during the TLS handshake.
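A sketch of TLS termination in nginx, with a placeholder host name and certificate paths. nginx decrypts the traffic and forwards it to the service as cleartext HTTP/2 on a hypothetical local port:

```nginx
# Sketch: TLS termination; host name and certificate paths are placeholders.
server {
    listen 443 ssl;
    http2 on;                                  # negotiated with clients via ALPN
    server_name grpc.example.com;

    ssl_certificate     /etc/nginx/certs/grpc.example.com.crt;
    ssl_certificate_key /etc/nginx/certs/grpc.example.com.key;
    ssl_protocols       TLSv1.2 TLSv1.3;

    location / {
        grpc_pass grpc://127.0.0.1:5000;       # cleartext HTTP/2 to the local service
    }
}
```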

In summary, by following the steps outlined in this section, you will be able to deploy a high-performing gRPC service on Linux, with nginx acting as reverse proxy and load balancer. These techniques and optimizations improve both the user experience and the overall performance of the service.

Configuring nginx as a reverse proxy for the gRPC service

In this section, we set up nginx as a reverse proxy between gRPC clients and the server. Putting nginx in front of the service can improve the performance, security, and scalability of the application.

We will begin by configuring nginx as a reverse proxy that accepts incoming client requests and forwards them to the appropriate gRPC service. This lets nginx take over tasks such as load balancing and TLS termination from the gRPC service itself.
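On the backend side, nginx forwards calls as cleartext HTTP/2 (grpc:// in grpc_pass), so Kestrel cannot use TLS ALPN to negotiate the protocol, and the endpoint receiving that traffic must be restricted to HTTP/2. A minimal appsettings.json sketch, assuming the hypothetical endpoint name Grpc and port 5000:

```json
{
  // Hypothetical endpoint name and port: cleartext HTTP/2 (h2c) for the local nginx proxy.
  "Kestrel": {
    "Endpoints": {
      "Grpc": {
        "Url": "http://127.0.0.1:5000",
        "Protocols": "Http2"
      }
    }
  }
}
```

Binding to 127.0.0.1 keeps the service reachable only through the local proxy. The .NET JSON configuration provider accepts comments like the one above; remove it if another tool reads the file.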

Next, we will delve into the various configurations and directives required to properly route gRPC requests to the backend servers hosting the gRPC service. We will explore techniques for load balancing across multiple instances of the gRPC service, ensuring high availability and improved performance.
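gRPC calls are HTTP/2 POST requests to paths of the form /package.Service/Method, so nginx can route by service with ordinary location prefixes. A sketch assuming two hypothetical services, greet.Greeter and orders.OrderService, running on separate local ports:

```nginx
# Sketch: route by gRPC service name. Service names and ports are hypothetical.
upstream greeter_backend { server 127.0.0.1:5000; }
upstream orders_backend  { server 127.0.0.1:5002; }

server {
    listen 80;
    http2 on;

    # Calls look like POST /greet.Greeter/SayHello
    location /greet.Greeter/ {
        grpc_pass grpc://greeter_backend;
    }

    location /orders.OrderService/ {
        grpc_pass grpc://orders_backend;
    }
}
```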

We will also touch upon important considerations such as securing the communication between the client and the gRPC service through the use of SSL/TLS certificates. By leveraging nginx's capabilities, we can enforce secure connections while minimizing the overhead on the gRPC service.

Lastly, we will discuss advanced nginx options that affect the performance and reliability of the deployed gRPC service, such as keepalive connections to the backends, timeouts suited to long-lived streams, and buffer sizes. HTTP/2 server push, sometimes mentioned in this context, is not used by gRPC, and nginx removed support for it in version 1.25.1.
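A sketch of some of these options, with illustrative values rather than tuned recommendations: upstream keepalive reuses connections to the backend, and longer timeouts keep nginx from closing streams that stay quiet for more than the 60-second default.

```nginx
# Sketch with illustrative values, not tuned recommendations.
upstream grpc_backend {
    server 127.0.0.1:5000;
    keepalive 32;                # idle connections to the backend cached per worker
}

server {
    listen 80;
    http2 on;

    location / {
        grpc_pass grpc://grpc_backend;
        grpc_read_timeout   1h;  # default 60s can close streams that stay quiet longer
        grpc_send_timeout   1h;
        client_body_timeout 1h;  # client-streaming calls may pause between messages
    }
}
```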

- Setting up nginx as a reverse proxy

- Routing gRPC requests

- Load balancing for improved performance

- Securing communication with SSL/TLS

- Advanced configuration options for optimization

By following the steps and best practices outlined in this section, you will be able to effectively configure nginx as a reverse proxy for your gRPC service, ensuring enhanced performance, scalability, and security.

FAQ

What is gRPC?

gRPC is an open-source, high-performance RPC framework for building distributed systems. It uses the protobuf serialization format and supports bidirectional streaming for efficient communication between services.

Why would I want to deploy a gRPC service in ASP.NET Core?

ASP.NET Core is a versatile and powerful framework for building web applications and services. By deploying a gRPC service in ASP.NET Core, you can take advantage of its robust features, such as dependency injection, middleware pipeline, and logging, to build efficient and scalable gRPC services.

Why would I want to deploy a gRPC service on Linux?

Deploying a gRPC service on Linux can offer several benefits. Linux is a popular choice for server deployments due to its stability, scalability, and cost-effectiveness. By deploying on Linux, you can take advantage of the vast ecosystem of Linux tooling and services, enabling seamless integration with other Linux-based systems and services.

Why should I use nginx with gRPC?

nginx can act as a reverse proxy for gRPC services, providing load balancing, routing, and TLS termination. It can improve the performance and reliability of your gRPC service by taking over network-related tasks such as managing client connections and speaking HTTP/2, which gRPC requires, to both clients and backends.