As technology continues to evolve, businesses are finding themselves in need of efficient and scalable solutions to meet the demands of their customers. With the rise of containerization, organizations are turning to Docker and Kubernetes as powerful tools to streamline their application deployment processes.

In this article, we will explore the process of deploying a containerized application in Kubernetes on Docker for Windows. By leveraging the advantages of Docker and the scalability of Kubernetes, businesses can optimize their development workflow and ensure seamless deployment of their applications. We will delve into the intricacies of deploying applications in a Kubernetes cluster, while also highlighting the benefits of using Docker for Windows as the underlying platform.

Throughout this guide, we will provide step-by-step instructions and best practices for configuring and deploying a Docker Compose application in Kubernetes on Docker for Windows.

Exploring the Fundamentals of Docker Compose and Kubernetes

When it comes to containerization and orchestration, two popular frameworks emerge: Docker Compose and Kubernetes. In this section, we will delve into the core concepts and principles underlying these technologies, providing you with a foundational understanding of their functionalities and benefits.

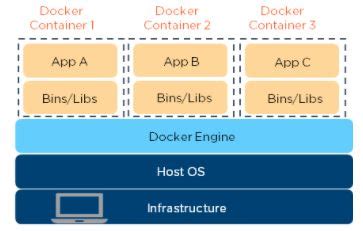

Firstly, let's explore Docker Compose, a tool that allows you to define and run multi-container applications. With Docker Compose, you can create an environment consisting of various interconnected containers, each serving a specific purpose or component of your application. By encapsulating these containers within a single configuration file, Docker Compose simplifies the process of managing complex deployments.

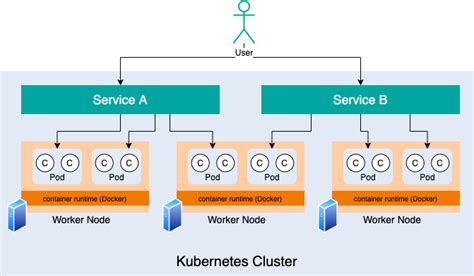

On the other hand, Kubernetes, an open-source container orchestration platform, enhances the scalability and reliability of your application. It automates the deployment, scaling, and management of containerized applications, providing a seamless experience for both developers and operations teams. Kubernetes offers a plethora of features, such as load balancing, automatic scaling, and self-healing, making it an ideal choice for large-scale production environments.

Both Docker Compose and Kubernetes share a common goal of simplifying application deployment and management. However, they differ in their approach and scope. Docker Compose mainly focuses on creating and managing multi-container applications within a single host, while Kubernetes is designed to handle complex scenarios involving multiple hosts, offering advanced features for managing clusters and ensuring high availability.

| Docker Compose | Kubernetes |
|---|---|
| Defines and runs multi-container applications | Automates containerized application deployment and management |
| Primarily suited for single-host environments | Optimized for managing clusters of hosts |
| Encapsulates containers within a single configuration file | Offers advanced features like load balancing, scalability, and self-healing |

By gaining a solid understanding of Docker Compose and Kubernetes, you will be equipped with the knowledge necessary to leverage their strengths and make informed decisions when it comes to deploying your applications.

Installing and Configuring Docker for Windows

In this section, we will explore the process of setting up and configuring the Docker environment tailored for Windows operating systems. This step is crucial before diving into the deployment of Docker Compose applications within a Kubernetes cluster.

Firstly, we will cover the installation procedure for the Docker environment, which involves obtaining the necessary software packages and dependencies. Following the installation, we will proceed to the configuration phase, where we will examine the key settings and options required for optimal Docker performance on Windows.

Next, we will delve into the intricacies of enabling Docker functionality within the Windows environment. This process entails ensuring that the necessary system requirements are met and enabling the appropriate virtualization technologies for the seamless execution of containers.

Furthermore, we will explore additional configuration options and settings that enhance Docker's functionality and compatibility with the Windows operating system. These enhancements include network configuration, security considerations, and resource management techniques.

Lastly, we will discuss best practices and troubleshooting techniques for resolving common issues that may arise during the installation and configuration process. This comprehensive guide will equip you with the necessary knowledge and skills to set up a robust Docker environment on Windows, laying the foundation for deploying Docker Compose applications in a Kubernetes environment.

Step-by-step guide to setting up Docker on your Windows machine

In this section, we will walk you through the process of installing Docker on your Windows machine. By following these step-by-step instructions, you will be able to set up Docker and get started with containerization.

| Step | Action |
|---|---|
| Step 1 | Visit the official Docker website and download the Docker installation package for Windows. |
| Step 2 | Once the download is complete, double-click the installation package to start the installation process. |
| Step 3 | Follow the on-screen instructions to accept the license agreement and choose the desired installation settings. |
| Step 4 | During the installation, Docker may prompt you to enable Virtualization Technology (VT) in your machine's BIOS settings. Follow the provided instructions to enable VT if necessary. |
| Step 5 | Once the installation is complete, Docker will be up and running on your Windows machine. You can verify the installation by opening a command prompt and running the command "docker version". |
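
The verification in Step 5 can also be scripted. The following is a minimal Python sketch, assuming Python is installed on the same machine; the helper name `run_version_check` is made up for illustration, and the extra `kubectl version --client` check is an assumption that goes beyond the steps above.

```python
import shutil
import subprocess


def run_version_check(command, timeout=30):
    """Run a version command; return (exit_code, output) or None if the tool is unavailable."""
    if shutil.which(command[0]) is None:
        return None  # the executable is not on PATH
    try:
        result = subprocess.run(command, capture_output=True, text=True, timeout=timeout)
    except (OSError, subprocess.TimeoutExpired):
        return None
    return result.returncode, (result.stdout or result.stderr).strip()


# "docker version" is the command from Step 5; the kubectl check is an added assumption.
CHECKS = [["docker", "version"], ["kubectl", "version", "--client"]]

if __name__ == "__main__":
    for command in CHECKS:
        outcome = run_version_check(command)
        label = " ".join(command)
        if outcome is None:
            print(f"{label}: not found or did not respond")
        else:
            exit_code, output = outcome
            first_line = output.splitlines()[0] if output else ""
            print(f"{label}: exit code {exit_code} {first_line}")
```

A non-zero exit code from "docker version" usually means the client is installed but cannot reach the Docker engine, which is worth checking before moving on.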

By following these simple steps, you can quickly install Docker on your Windows machine and get ready to utilize the power of containerization. Docker allows you to easily package, distribute, and run applications in isolated containers, providing a lightweight and consistent environment across different systems.

Exploring the Advantages and Features of Harnessing Kubernetes in Conjunction with Docker on Windows

In this section, we will delve into the extensive range of benefits and capabilities that arise from leveraging Kubernetes alongside Docker on the Windows platform. Through utilizing this powerful combination, businesses can optimize their application development and deployment processes, heighten efficiency, and streamline container orchestration.

Embarking on an exploration of these advanced features, we will uncover the manifold advantages that Kubernetes brings to the table, while grasping the unique synergies that result from its integration with Docker on Windows. By harnessing these cutting-edge technologies, organizations can enhance scalability, mitigate downtime, and achieve seamless application management.

Additionally, we will delve into the rich array of functionalities provided by Kubernetes when operating in tandem with Docker on the Windows platform. From automated scaling and load balancing to robust failure recovery mechanisms, this dynamic duo empowers developers and operations teams to optimize resource allocation, enhance performance, and ensure high availability.

Furthermore, we will discuss the unparalleled flexibility and extensibility that arises from the potent combination of Kubernetes and Docker on Windows. With the ability to seamlessly incorporate a wide range of integrated tools, plugins, and modules, organizations can tailor their containerized environments to suit their specific requirements, ultimately fostering a highly adaptable and agile ecosystem.

Ultimately, this section will provide invaluable insights into the numerous benefits and advanced capabilities that derive from the harmonious utilization of Kubernetes and Docker on the Windows platform. By fully understanding and harnessing these technologies, businesses can revolutionize their application development and deployment strategies, driving innovation and efficiency in an ever-evolving digital landscape.

Transforming Docker Compose into Kubernetes Manifests

In this section, we will explore the process of converting a Docker Compose configuration into Kubernetes manifests, enabling the deployment of your application on the Kubernetes platform. We will delve into the key differences between Docker Compose and Kubernetes, discussing the necessary modifications required to successfully migrate your application.

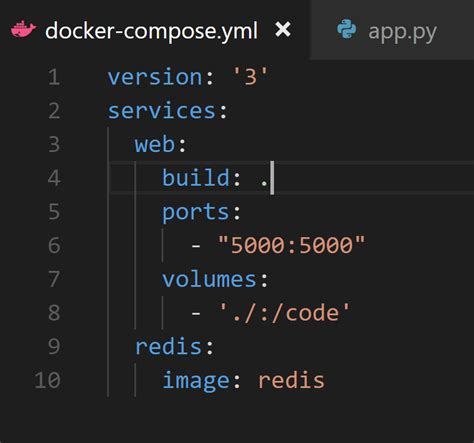

Firstly, let's examine the basic structure of a Docker Compose file and a Kubernetes manifest. While Docker Compose defines a straightforward configuration file utilizing YAML syntax, Kubernetes manifests follow a different structure consisting of various resources such as pods, services, and deployments. To effectively translate your Docker Compose file into Kubernetes manifests, you need to understand the nuances between these two formats.

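| Docker Compose | Kubernetes Manifests |
|---|---|
| version (Compose file format) | No direct equivalent; every resource declares its own apiVersion |
| services | One Deployment (kind: Deployment) per service, usually paired with a Service |
| image | image (in the container spec) |
| ports | containerPort in the pod spec, plus port and targetPort on a Service |
| environment | env |
| networks | No direct equivalent; Services handle discovery and NetworkPolicy resources restrict traffic |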

Once you have grasped the fundamental differences between the two formats, you can begin converting your Docker Compose file to Kubernetes manifests. This involves defining Kubernetes resources such as deployments, services, and pods, while also considering the specific requirements of your application.
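
To make the mapping concrete, here is a minimal Python sketch that turns one Compose service into a Deployment manifest. It is an illustration under stated assumptions, not a full converter: the function name `compose_service_to_deployment` is hypothetical, the service is passed in as an already-parsed dictionary, `environment` is assumed to use the mapping form, and the `app` label is an arbitrary choice. The output is printed as JSON, which kubectl accepts alongside YAML.

```python
import json


def compose_service_to_deployment(name, service, replicas=1):
    """Translate one parsed Compose service (a dict) into a Deployment manifest (a dict)."""
    container = {"name": name, "image": service["image"]}

    # Compose ports look like "HOST:CONTAINER" (optionally with "/udp");
    # only the container side belongs in the pod spec.
    container_ports = [
        int(str(p).split(":")[-1].split("/")[0]) for p in service.get("ports", [])
    ]
    if container_ports:
        container["ports"] = [{"containerPort": p} for p in container_ports]

    # Assumes the mapping form of "environment" (KEY: value), not the list form.
    env = service.get("environment", {})
    if env:
        container["env"] = [{"name": k, "value": str(v)} for k, v in env.items()]

    labels = {"app": name}  # label key chosen for this example
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


if __name__ == "__main__":
    # Example service, as it would appear under "services:" in a Compose file.
    web = {"image": "nginx:1.25", "ports": ["8080:80"], "environment": {"APP_ENV": "dev"}}
    print(json.dumps(compose_service_to_deployment("web", web, replicas=2), indent=2))
```

Note that the host side of the port mapping is dropped here; exposing the application is the job of a separate Service resource.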

During the conversion process, it is crucial to consider various factors such as scalability, resource utilization, and networking requirements. Kubernetes offers extra features and capabilities that can enhance the functionality and performance of your application when properly utilized.

In conclusion, transforming your Docker Compose application into Kubernetes manifests allows you to take full advantage of the Kubernetes platform's scalability and advanced features. By understanding the variations between Docker Compose and Kubernetes, you can successfully migrate your application, maximizing its potential within the Kubernetes environment.

Methods and tools for translating Docker Compose files into Kubernetes manifests

In this section, we will explore various approaches and resources available for transforming Docker Compose files into Kubernetes manifests. By employing these techniques, developers can seamlessly migrate their applications from a Docker Compose environment to a Kubernetes cluster without compromising functionality.

A popular method for converting Docker Compose files to Kubernetes manifests is by utilizing specific command-line tools and libraries developed for this purpose. These tools enable developers to generate Kubernetes YAML files that mirror the desired configuration specified in the original Docker Compose file.

One such tool is Kompose, which provides a straightforward and efficient way to translate Docker Compose files into Kubernetes manifests. Kompose analyzes the Docker Compose file and generates Kubernetes resources, such as Deployments, Services, and PersistentVolumeClaims, based on the configuration provided. With Kompose, developers can swiftly translate their Docker Compose applications into a Kubernetes-ready format, although the generated manifests are best treated as a starting point to review rather than a finished result.
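
As a rough sketch of how a Kompose run could be scripted from Python, assuming the `kompose` executable is installed and on the PATH. The file name `docker-compose.yml` and the output directory `k8s` are placeholders, and the helper name is made up for this example; the `-f` and `-o` options select the input file and the output location.

```python
import os
import shutil
import subprocess


def kompose_convert_command(compose_file="docker-compose.yml", out_dir="k8s"):
    """Build the argument list for converting a Compose file into manifests in out_dir."""
    return ["kompose", "convert", "-f", compose_file, "-o", out_dir]


if __name__ == "__main__":
    compose_file, out_dir = "docker-compose.yml", "k8s"
    command = kompose_convert_command(compose_file, out_dir)
    if shutil.which("kompose") and os.path.exists(compose_file):
        os.makedirs(out_dir, exist_ok=True)  # create the output directory up front
        subprocess.run(command, check=True)
    else:
        # Nothing to convert here, so just show what would be executed.
        print("Would run:", " ".join(command))
```

The generated files can then be reviewed and applied to the cluster with kubectl.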

A complementary tool is Kustomize. Kustomize does not read Docker Compose files itself; it customizes Kubernetes manifests that already exist, for example those generated by Kompose. It allows developers to define a base set of manifests and layer overlays on top of it, which makes it a good fit for complex containerized applications that need different settings per environment. By utilizing Kustomize, developers can fine-tune their Kubernetes manifests according to their specific requirements without editing the generated files by hand.
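
Kustomize itself works on Kubernetes manifests rather than on Compose files, so an overlay sits on top of manifests that already exist. The sketch below builds the content of an overlay's kustomization file as a Python dictionary. The directory layout (`../../base`), the Deployment name `web`, and the image tag are all hypothetical. The result is printed as JSON; since JSON is also valid YAML, saving it as `kustomization.yaml` should work, but treat that as an assumption to verify with your Kustomize version.

```python
import json


def build_overlay(base_path, replicas, image_tags):
    """Return a Kustomization that adjusts replica counts and image tags of a base."""
    return {
        "apiVersion": "kustomize.config.k8s.io/v1beta1",
        "kind": "Kustomization",
        "resources": [base_path],  # where the unmodified manifests live
        "replicas": [{"name": n, "count": c} for n, c in replicas.items()],
        "images": [{"name": i, "newTag": t} for i, t in image_tags.items()],
    }


if __name__ == "__main__":
    overlay = build_overlay(
        base_path="../../base",        # hypothetical layout: overlays/production -> base
        replicas={"web": 3},           # scale the "web" Deployment to 3 replicas
        image_tags={"nginx": "1.25"},  # pin the image tag for this environment
    )
    print(json.dumps(overlay, indent=2))
```

An overlay directory like this is typically applied with "kubectl apply -k" followed by the directory path.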

Furthermore, developers can manually convert Docker Compose files to Kubernetes manifests by writing the necessary Kubernetes YAML files themselves. Although this approach requires a solid understanding of Kubernetes concepts and syntax, it provides the maximum flexibility to tailor the manifests precisely to the application's needs.

In summary, there are several methods and tools for moving from Docker Compose files to Kubernetes manifests. Whether generating manifests with Kompose, refining them with Kustomize, or writing them by hand, developers have the means to transition their Docker Compose applications into a Kubernetes environment.

| Method/Tool | Description |
|---|---|
| Kompose | A command-line tool that converts Docker Compose files into Kubernetes manifests. |
| Kustomize | A tool that customizes existing Kubernetes manifests through bases and overlays; it does not read Docker Compose files itself. |
| Manual translation | The process of manually writing Kubernetes YAML files based on the configuration in the Docker Compose file. |

Getting Your Docker Compose Application up and Running in Kubernetes

It's time to take your Docker Compose application to the next level by deploying it in a scalable and efficient Kubernetes environment. In this section, we'll explore how to effectively deploy your application in Kubernetes, unlocking the full potential of container orchestration.

To start with, let's delve into the process of transforming your Docker Compose application into Kubernetes resources. This involves breaking down your application into its individual components and defining the required specifications for each. By leveraging Kubernetes configurations such as pods, services, and deployments, you'll be able to seamlessly manage and scale your application.

Next, we'll walk through the steps of creating a Kubernetes cluster, where your Docker Compose application will reside. A multi-node cluster provides high availability and fault tolerance by distributing the workload across several nodes. We'll explore different ways to set up your cluster: Docker Desktop for Windows includes an optional single-node Kubernetes cluster that you can enable in its settings, Minikube is another option for local development, and cloud-based solutions like Google Kubernetes Engine suit production workloads.

Once your cluster is ready, it's time to deploy your Docker Compose application. We'll guide you through the process of translating your Docker Compose YAML file into corresponding Kubernetes manifests. This involves defining the necessary resources, such as pods, services, and volumes, to ensure proper functionality and communication between your application components.
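
For communication between components, each Compose service that publishes ports usually needs a Kubernetes Service. The following Python sketch derives one from Compose-style port mappings. It assumes the pods carry an `app: <name>` label (an illustrative convention, not something Kubernetes requires), ignores UDP ports, and uses a hypothetical function name.

```python
import json


def compose_ports_to_service(name, port_mappings):
    """Build a Service manifest from Compose port strings such as "8080:80"."""
    ports = []
    for mapping in port_mappings:
        head, _, target = str(mapping).rpartition(":")
        # "80" alone has no published port, so reuse the container port.
        # "127.0.0.1:8080:80" has a host IP in front, so keep only the last part of head.
        published = head.split(":")[-1] if head else target
        ports.append(
            {
                "name": f"port-{published}",  # names are required when there are several ports
                "port": int(published),       # port other pods use to reach the Service
                "targetPort": int(target),    # port the container listens on
            }
        )
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"selector": {"app": name}, "ports": ports},
    }


if __name__ == "__main__":
    print(json.dumps(compose_ports_to_service("web", ["8080:80"]), indent=2))
```

Inside the cluster, other pods can then reach the application under the Service name, much like Compose services reach each other by service name.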

As you progress, we'll touch on advanced topics like scaling your application, automating deployments with CI/CD pipelines, and monitoring and logging your Kubernetes environment. These practices will empower you to efficiently manage your Docker Compose application in a Kubernetes environment, ensuring its stability, scalability, and reliability.

In summary, this section will equip you with the knowledge and skills required to seamlessly transfer your Docker Compose application to a Kubernetes environment. By harnessing the power of Kubernetes, you'll be empowered to efficiently deploy, manage, and scale your application, unlocking its true potential.

A step-by-step guide to deploying a basic application using Docker Compose in a Kubernetes environment

In this section, we will explore the process of setting up and deploying a basic application using Docker Compose within a Kubernetes cluster. We will provide a detailed walkthrough that outlines the necessary steps to ensure a smooth and successful deployment.

We will examine the seamless integration of containerization technology, such as Docker Compose, in conjunction with Kubernetes, a powerful orchestration platform. By leveraging these tools, we can create a scalable and efficient deployment environment for our application.

We will delve into the intricacies of containerization, highlighting its ability to encapsulate and isolate various components of our application. This approach promotes portability, allowing our application to run consistently across different environments.

Throughout this walkthrough, we will utilize various features offered by Kubernetes, such as deployment management, scaling, and service discovery. By taking advantage of these capabilities, we can optimize the deployment process while ensuring a highly available and fault-tolerant application.

Furthermore, we will explore the use of Docker Compose for defining and managing the different services and dependencies within our application stack. This abstraction layer enables simplified management and provisioning of the necessary resources, contributing to an efficient deployment process.

By following this step-by-step guide, you will gain a comprehensive understanding of the process involved in deploying a basic Docker Compose application in a Kubernetes cluster. This knowledge will empower you to leverage these technologies effectively in your own projects, unlocking the benefits of containerization and orchestration.

Scaling and Load Balancing in Kubernetes

Managing the growth and distribution of workloads in a Kubernetes cluster is a fundamental aspect of ensuring high availability and optimal performance for your applications. In this section, we will explore the concepts of scaling and load balancing in the context of Kubernetes deployments.

Scaling in Kubernetes refers to the ability to dynamically adjust the number of replicas of a specific application or workload based on demand. This allows you to efficiently allocate resources and handle increased traffic or workload without causing bottlenecks or delays. By scaling horizontally, you can distribute the load across multiple instances, promoting fault tolerance and resilience.
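
Manual horizontal scaling comes down to changing the replica count of a workload. A small sketch of how that could be wrapped in Python, assuming a Deployment named `web` exists (a placeholder) and that kubectl is configured for the cluster; the helper only builds the command so it can be inspected before running.

```python
def scale_command(deployment, replicas, namespace="default"):
    """Build a 'kubectl scale' invocation that sets the replica count of a Deployment."""
    if replicas < 0:
        raise ValueError("replicas must not be negative")
    return [
        "kubectl", "scale", f"deployment/{deployment}",
        f"--replicas={replicas}", "--namespace", namespace,
    ]


if __name__ == "__main__":
    # "web" is a placeholder Deployment name.
    print(" ".join(scale_command("web", 3)))
```

Passing the resulting list to `subprocess.run` would execute it; printing it first is a cheap way to double-check the target and namespace.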

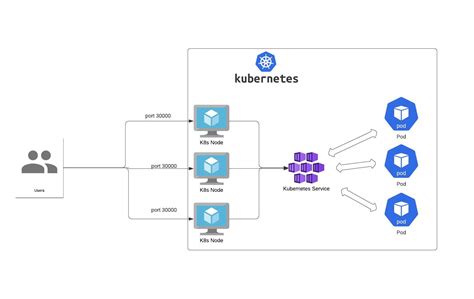

Load balancing is a crucial component of scaling in Kubernetes. It plays a vital role in evenly distributing incoming requests among the available instances of an application. With load balancing, you can avoid overloading a single instance and ensure that each replica shares the workload proportionally.

There are various approaches to load balancing in Kubernetes. One commonly used method is the use of Kubernetes Services. Services act as an abstraction layer, providing a stable endpoint for accessing the application or workload. A Service spreads incoming connections across the ready pods that match its selector. The exact algorithm depends on how kube-proxy is configured (its IPVS mode, for example, supports round-robin), and session affinity based on the client IP can be enabled when requests from one client should keep reaching the same pod.

Kubernetes also supports more advanced HTTP routing through Ingress resources, which are implemented by an Ingress controller running in the cluster. An Ingress lets you define custom traffic routing rules in front of your Services. With an Ingress controller, you can implement features like TLS (SSL) termination and host- and path-based routing, along with controller-specific load balancing options.
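
As an illustration of path-based routing with TLS termination, the sketch below assembles an Ingress manifest in Python. The host name, Secret name, and backend Service names are invented for the example, and it assumes an Ingress controller is already installed in the cluster with a default ingress class.

```python
import json


def build_ingress(name, host, tls_secret, routes):
    """Build an Ingress that routes URL path prefixes on one host to backend Services.

    routes maps a path prefix to a (service_name, service_port) tuple.
    """
    paths = [
        {
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": service, "port": {"number": port}}},
        }
        for path, (service, port) in routes.items()
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # TLS is terminated at the controller using the certificate in this Secret.
            "tls": [{"hosts": [host], "secretName": tls_secret}],
            "rules": [{"host": host, "http": {"paths": paths}}],
        },
    }


if __name__ == "__main__":
    ingress = build_ingress(
        name="shop",
        host="shop.example.com",  # placeholder host
        tls_secret="shop-tls",    # placeholder Secret holding the certificate
        routes={"/api": ("api", 8080), "/": ("web", 80)},
    )
    print(json.dumps(ingress, indent=2))
```

Requests to /api go to the `api` Service while everything else reaches `web`, which is the kind of rule a plain Service cannot express.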

Overall, effectively scaling and load balancing your applications in Kubernetes is essential for maintaining optimal performance and reliability. Understanding the available tools and techniques for scaling and load balancing will enable you to design a robust and efficient deployment strategy for your Docker Compose applications in a Kubernetes environment.

Scaling and Load Balancing in a Kubernetes Environment

In this section, we will explore the process of horizontally scaling and efficiently distributing traffic within a Kubernetes environment. Effective scaling and load balancing are crucial components of managing a distributed system, ensuring optimal performance and high availability.

Horizontal scaling involves adding more instances of an application or service to the system in order to handle increased workload or traffic. By distributing the load across multiple instances, horizontal scaling allows for improved performance and fault tolerance.

One of the key ways to achieve horizontal scaling in a Kubernetes environment is by using the built-in scaling mechanisms provided by Kubernetes. These mechanisms allow you to dynamically adjust the number of replicas of a particular service or application based on the current workload.
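
The automatic variant of this mechanism is the Horizontal Pod Autoscaler, whose documented core rule is: desired replicas = ceil(current replicas × current metric / target metric). The sketch below reproduces that arithmetic in Python. The bounds and the 10% tolerance are passed in as parameters; real clusters apply further rules (stabilization windows, pod readiness) that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Apply the basic autoscaling rule and clamp the result to the allowed range."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: leave the count alone
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 replicas at 90% average CPU with a 60% target: ceil(3 * 1.5) = 5
    print(desired_replicas(3, 90, 60))  # 5
    # 4 replicas at 30% with a 60% target: ceil(4 * 0.5) = 2
    print(desired_replicas(4, 30, 60))  # 2
    # 63% against a 60% target is within the tolerance, so nothing changes
    print(desired_replicas(3, 63, 60))  # 3
```

Rounding up means the autoscaler errs on the side of extra capacity when load increases.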

Additionally, load balancing plays a critical role in distributing incoming traffic across the available instances. Kubernetes provides a native load balancing mechanism that distributes traffic to the different replicas of a service, ensuring that the workload is evenly distributed and no single instance is overwhelmed.

The load balancing strategies available depend on how the cluster is configured. kube-proxy in IPVS mode supports schedulers such as round-robin and least connections, and any Service can enable session affinity based on the client's source IP. These options can be chosen based on the specific needs of your application or workload.
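
Session affinity is configured on the Service itself. A minimal Python sketch of such a manifest follows; the Service name, the ports, and the `app` label selector are example values, and 10800 seconds is the documented default affinity timeout.

```python
import json


def build_service(name, port, target_port, sticky=False, timeout_seconds=10800):
    """Build a Service manifest, optionally pinning each client IP to one pod."""
    spec = {
        "selector": {"app": name},  # assumes pods are labelled app=<name>
        "ports": [{"port": port, "targetPort": target_port}],
        "sessionAffinity": "ClientIP" if sticky else "None",
    }
    if sticky:
        # How long a client keeps hitting the same pod after its last request.
        spec["sessionAffinityConfig"] = {"clientIP": {"timeoutSeconds": timeout_seconds}}
    return {"apiVersion": "v1", "kind": "Service", "metadata": {"name": name}, "spec": spec}


if __name__ == "__main__":
    print(json.dumps(build_service("web", 80, 8080, sticky=True), indent=2))
```

Affinity helps applications that keep per-user state in memory, at the cost of a less even spread of traffic.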

By effectively scaling and load balancing your application in a Kubernetes environment, you can ensure optimal performance, fault tolerance, and the ability to handle increased traffic or workload. This enables your system to seamlessly adapt to changing demands and provide a reliable and efficient experience to your users.

Managing Stateful Applications with Kubernetes

In this section, we will explore the crucial task of managing stateful applications within Kubernetes clusters. Stateful applications, unlike stateless applications, require persistent storage and maintain data between instances. Kubernetes provides various features and mechanisms to ensure the reliable deployment and efficient management of stateful workloads.

Ensuring Data Persistence: Kubernetes offers different options for ensuring data persistence in stateful applications. StatefulSets, for example, allow you to deploy and manage sets of stateful pods while maintaining stable network identities and data storage. This ensures that each pod has a unique identifier and consistent access to its data, even during rescheduling or scaling events.

Managing Storage Volumes: Kubernetes provides multiple types of storage volumes to cater to the diverse requirements of stateful applications. For example, Persistent Volumes (PV) enable storage resources to be provisioned independently from the application pods, so data outlives any single pod and, depending on the volume's access mode, can be attached to one pod or shared across several. Persistent Volume Claims (PVC) act as a request for storage resources, allowing applications to consume storage without being aware of the underlying infrastructure details.
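
To show how a claim and a pod fit together, here is a short Python sketch that builds a PersistentVolumeClaim and the pod-spec fragment that mounts it. The claim name, size, image, and mount path are illustrative, and the cluster is assumed to have a default storage class that can satisfy the request.

```python
import json


def build_pvc(name, size, access_mode="ReadWriteOnce", storage_class=None):
    """Build a PersistentVolumeClaim requesting `size` of storage (for example "1Gi")."""
    spec = {"accessModes": [access_mode], "resources": {"requests": {"storage": size}}}
    if storage_class:
        spec["storageClassName"] = storage_class  # omit to use the cluster default
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": spec,
    }


def pod_spec_with_claim(container_name, image, claim_name, mount_path):
    """Return a pod spec whose single container mounts the given claim."""
    return {
        "containers": [
            {
                "name": container_name,
                "image": image,
                "volumeMounts": [{"name": "data", "mountPath": mount_path}],
            }
        ],
        "volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": claim_name}}],
    }


if __name__ == "__main__":
    print(json.dumps(build_pvc("db-data", "1Gi"), indent=2))
    print(json.dumps(
        pod_spec_with_claim("db", "postgres:16", "db-data", "/var/lib/postgresql/data"),
        indent=2,
    ))
```

The pod only refers to the claim by name, which is what keeps the application independent of the underlying storage.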

Scaling and Upgrading Stateful Applications: Kubernetes allows for the effortless scaling and upgrading of stateful applications. With features like Horizontal Pod Autoscaling (HPA), you can automatically adjust the number of replicas based on resource utilization or custom metrics. This ensures that your stateful application can handle increasing workloads efficiently. Additionally, rolling upgrades and version-controlled deployments enable seamless updates to new versions of stateful applications without disrupting the availability and data integrity.

Monitoring and Application Health: Monitoring the health and performance of stateful applications is crucial for ensuring their reliability. Kubernetes itself exposes resource metrics and supports liveness and readiness probes, and it is commonly paired with separately installed tools like Prometheus and Grafana, which allow you to collect and visualize metrics related to your application's performance, storage, and network usage. This enables proactive identification of any issues, efficient troubleshooting, and optimization of your stateful application.

Managing Stateful Application Dependencies: Stateful applications often have dependencies on other services or resources. Kubernetes facilitates the management of these dependencies through features such as Service Discovery, which automatically maps the network addresses of dependent services to the correct pods. This ensures that stateful applications can easily communicate with other components, databases, or external resources without the need for manual configuration.

Ensuring Data Consistency and Replication: Data replication is critical for maintaining data integrity and high availability in stateful applications. Kubernetes offers ReplicaSets and StatefulSets to handle the replication and scaling of pods, but these controllers replicate pods, not data: keeping data consistent across instances remains the job of the application itself (for example, a database's own replication) or of the storage layer. Combined with such a mechanism, they provide fault tolerance and resilience, enabling stateful applications to continue functioning even in the event of pod failures or infrastructure issues.

Conclusion: Managing stateful applications within Kubernetes requires a comprehensive understanding of the various features and mechanisms provided by the platform. By leveraging Kubernetes' capabilities for data persistence, storage management, scaling, monitoring, dependency management, and data replication, you can efficiently deploy and manage your stateful workloads, ensuring their durability, availability, and optimal performance.

Best practices for deploying and managing persistent applications in container orchestration systems

In this section, we will discuss the recommended approaches and strategies for effectively deploying and managing applications that require data persistence in modern container orchestration systems.

When dealing with stateful applications in Kubernetes or similar environments, it is essential to consider various factors to ensure high availability, scalability, and data integrity. Stateful applications, unlike stateless ones, require a consistent storage mechanism to retain and access data across multiple instances.

Ensuring data durability

One of the critical challenges when deploying stateful applications is ensuring data durability. By leveraging appropriate storage solutions such as persistent volumes (PVs) and persistent volume claims (PVCs), you can ensure that your application's data is persistently stored and available even during restarts or rescheduling. Additionally, employing mechanisms like data replication and backups adds another layer of data protection.

Implementing stateful sets

To manage stateful applications effectively, Kubernetes provides StatefulSets, a higher-level abstraction that offers guarantees for the order of deployment and stable network identities for each replica. StatefulSets also simplify the management of rolling updates and scaling operations for stateful applications.
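
The stable identities can be seen in a small example. This Python sketch builds a StatefulSet manifest with a volume claim template and lists the pod and claim names Kubernetes derives from it. The name `db`, the image, and the storage size are placeholders, and a headless Service with the same name is assumed to exist for the `serviceName` field.

```python
import json


def build_statefulset(name, image, replicas, mount_path, size):
    """Build a StatefulSet in which each replica gets its own PersistentVolumeClaim."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            "serviceName": name,  # headless Service assumed to exist under this name
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "volumeMounts": [{"name": "data", "mountPath": mount_path}],
                        }
                    ]
                },
            },
            "volumeClaimTemplates": [
                {
                    "metadata": {"name": "data"},
                    "spec": {
                        "accessModes": ["ReadWriteOnce"],
                        "resources": {"requests": {"storage": size}},
                    },
                }
            ],
        },
    }


def pod_names(name, replicas):
    """Pods are named <statefulset>-<ordinal> and created in that order."""
    return [f"{name}-{i}" for i in range(replicas)]


def claim_names(name, replicas, template="data"):
    """Claims are named <template>-<statefulset>-<ordinal>, one per pod."""
    return [f"{template}-{name}-{i}" for i in range(replicas)]


if __name__ == "__main__":
    manifest = build_statefulset("db", "postgres:16", 3, "/var/lib/postgresql/data", "1Gi")
    print(json.dumps(manifest, indent=2))
    print(pod_names("db", 3))    # ['db-0', 'db-1', 'db-2']
    print(claim_names("db", 3))  # ['data-db-0', 'data-db-1', 'data-db-2']
```

Because a rescheduled `db-1` is reattached to `data-db-1`, each replica keeps its own data across restarts.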

Utilizing operators for better automation

Operators are Kubernetes-native tools that extend the platform's functionalities to manage complex applications and services. By implementing custom operators for managing your stateful applications, you can automate various operational tasks, such as provisioning storage, scaling, and database migrations. This approach reduces manual efforts and improves overall operational efficiency.

Monitoring and observability

To ensure the health and optimal performance of your stateful applications, it's crucial to implement robust monitoring and observability practices. By utilizing monitoring tools and frameworks, you can track resource utilization, detect anomalies, and perform proactive capacity planning. Additionally, implementing distributed tracing and logging mechanisms helps troubleshoot issues and optimize your application's performance.

Implementing disaster recovery strategies

Stateful applications are more susceptible to data loss or corruption due to various factors like hardware failures or human errors. Implementing effective disaster recovery strategies, such as regular backups, geo-replication, and failover mechanisms, can help minimize downtime, ensure data integrity, and quickly recover from catastrophic events.

By following these best practices, you can enhance the reliability, scalability, and availability of your stateful applications deployed in container orchestration systems like Kubernetes.

FAQ

Is it possible to deploy a Docker Compose application in Kubernetes on Docker for Windows without converting the Docker Compose file?

No, Kubernetes does not natively support Docker Compose files. You need to convert the Docker Compose file to Kubernetes YAML files before deploying the application in Kubernetes on Docker for Windows. This can be done using tools like Kompose or manually writing the Kubernetes YAML files.

Can I monitor the deployed application after deploying in Kubernetes on Docker for Windows?

Yes, you can monitor the deployed application in Kubernetes on Docker for Windows using the 'kubectl' command-line tool. You can use commands like 'kubectl get pods', 'kubectl get services', or 'kubectl logs' to monitor the status, services, and logs of your deployed application.
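
For scripted checks, 'kubectl get pods -o json' returns machine-readable output that is easy to summarize. The sketch below parses such output in Python. The sample document is made up and heavily trimmed for illustration; real output contains many more fields.

```python
import json
from collections import Counter


def summarize_pods(kubectl_json):
    """Count pods per phase from the JSON printed by 'kubectl get pods -o json'."""
    data = json.loads(kubectl_json)
    return Counter(item["status"]["phase"] for item in data.get("items", []))


def restart_counts(kubectl_json):
    """Map each pod name to the total restarts of its containers."""
    data = json.loads(kubectl_json)
    return {
        item["metadata"]["name"]: sum(
            cs.get("restartCount", 0)
            for cs in item["status"].get("containerStatuses", [])
        )
        for item in data.get("items", [])
    }


# Invented sample, trimmed to the fields used above.
SAMPLE = json.dumps({
    "kind": "List",
    "items": [
        {"metadata": {"name": "web-1"},
         "status": {"phase": "Running", "containerStatuses": [{"restartCount": 0}]}},
        {"metadata": {"name": "web-2"},
         "status": {"phase": "Running", "containerStatuses": [{"restartCount": 4}]}},
        {"metadata": {"name": "db-0"}, "status": {"phase": "Pending"}},
    ],
})

if __name__ == "__main__":
    print(dict(summarize_pods(SAMPLE)))  # {'Running': 2, 'Pending': 1}
    print(restart_counts(SAMPLE))        # {'web-1': 0, 'web-2': 4, 'db-0': 0}
```

In practice the JSON string would come from running kubectl through `subprocess.run` with captured output; a pod with a climbing restart count is a good candidate for a closer look with 'kubectl logs'.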

Are there any alternatives to Docker for Windows to deploy a Docker Compose application in Kubernetes?

Yes, there are alternatives to Docker for Windows to deploy a Docker Compose application in Kubernetes. One alternative is using a managed Kubernetes service like Google Kubernetes Engine (GKE) or Amazon Elastic Kubernetes Service (EKS). These services provide an environment to deploy and manage containerized applications without the need to install Docker for Windows locally.