In data analysis, how quickly you can move from raw data to an informed decision matters, and so do the tools you use to get there. As businesses compete on how fast they can extract insights, efficient and reproducible tooling becomes a practical necessity. This article looks at one setup that addresses both needs.

Imagine processing large datasets without fighting your environment: the dependencies are already in place and the numerical code runs at compiled speed. That is the appeal of combining Docker, Windows, and NumPy. Docker supplies a reproducible environment, Windows is the platform many analysts already work on, and NumPy supplies fast array computation.

Empowering your analytical prowess: With Docker on Windows, much of the hassle of system configuration goes away. Docker packages an application together with its dependencies so that it runs the same way on every host that supports the container's platform. Paired with NumPy, an efficient numerical computing library, the environment for data analysis becomes predictable and easy to rebuild.

Revolutionizing performance: Slow computations hold up experimentation and decision-making. NumPy implements its array operations in compiled code, so mathematical operations over large arrays typically run far faster than equivalent pure-Python loops. That speed leaves more room for experimentation and rapid iteration.

Unleashing untapped potential: Together, Docker, Windows, and NumPy make it practical to dig deeper into your datasets. With faster computation and an environment that is quick to set up, analysts can spend more time uncovering patterns and less time on configuration. Decisions become better informed, which helps businesses stay ahead in a competitive landscape.

Used together, Docker, Windows, and NumPy give you a reproducible environment and fast numerical computation. The sections below explain what each piece contributes and how they fit together.

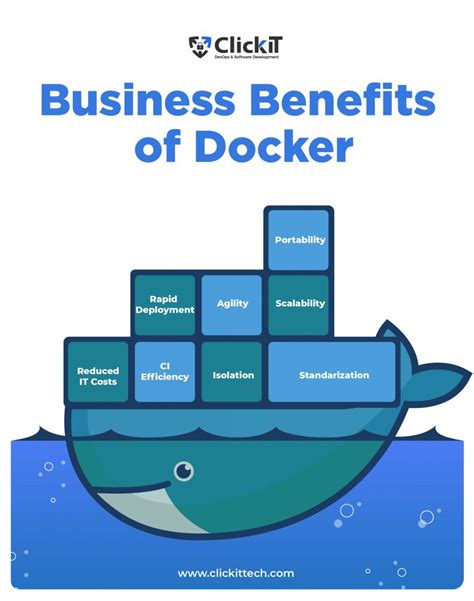

Understanding the Significance of Docker and Its Benefits

Efficient and reliable software development and deployment are crucial for businesses that want to stay competitive. Docker has become a widely used tool that changes how applications are built, shipped, and run.

Docker is a containerization platform: it packages software into standardized, lightweight, portable units called containers. A container includes everything required to run the software, such as libraries, dependencies, and configuration. Because the application travels with its environment, developers have far less reason to worry about differences between the machines it runs on, as long as each host supports the container's operating system and architecture.

One of the primary reasons why Docker has gained immense popularity is its ability to enhance the scalability and flexibility of applications. With Docker, developers can easily replicate and deploy containers across various environments, be it a local machine, a virtual machine, or a cloud infrastructure. This level of portability enables seamless deployment, regardless of the underlying infrastructure, saving time and effort.

Moreover, Docker promotes collaboration among developers by providing a consistent and isolated environment for software development. With containerization, developers can share code and dependencies effortlessly, ensuring consistent results across different development environments. This accelerates the development process and makes collaboration more efficient and streamlined.

Additionally, Docker helps optimize resource utilization. Traditional virtualization runs multiple virtual machines on a hypervisor, each with its own full operating system, which costs memory and startup time. Containers normally share the host's operating system kernel, which avoids most of that overhead. On Windows this applies to containers run with process isolation; with Hyper-V isolation, each container runs inside a lightweight virtual machine with its own kernel, trading some efficiency for stronger isolation.

In conclusion, Docker offers numerous advantages for software development and deployment. Its ability to package applications into containers, ensure portable deployments, foster collaboration, and enhance resource utilization makes it a valuable tool for developers and businesses alike.

Improving Data Analysis Performance with NumPy

In data analysis, how quickly large datasets can be processed directly affects how soon meaningful insights are available. NumPy, a powerful numerical computing library for Python, offers functions and tools that can significantly speed up data analysis tasks.

With NumPy, analysts work with an optimized data structure, the n-dimensional array (ndarray), whose elements share a single data type and whose operations run in compiled code. This leads to faster processing times than equivalent pure-Python code, enabling quicker decision-making and enhanced productivity.

One of the key advantages of using NumPy is its support for vectorized operations, which apply a mathematical operation to an entire array in a single call rather than element by element in Python. The loop still happens, but it runs inside NumPy's compiled code instead of the Python interpreter, which usually yields significant performance gains.
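To make the idea of vectorization concrete, here is a minimal sketch that computes the same sum of squares twice: once with an explicit Python loop and once with a single NumPy call. The function names are illustrative only and are not part of NumPy.

```python
import numpy as np


def sum_of_squares_loop(values):
    """Reference version: every element is visited by the Python interpreter."""
    total = 0.0
    for v in values:
        total += v * v
    return total


def sum_of_squares_vectorized(values):
    """Vectorized version: one call, the loop runs inside NumPy's compiled code."""
    arr = np.asarray(values, dtype=np.float64)
    return float(np.dot(arr, arr))


# Sample input: 1.0, 2.0, ..., 1000.0
data = np.arange(1, 1001, dtype=np.float64)

loop_result = sum_of_squares_loop(data)
vector_result = sum_of_squares_vectorized(data)

# Both approaches should agree; only the execution path differs.
print(loop_result, vector_result)
```

On arrays this small the difference is negligible; the gap tends to grow with the array size, so it is worth timing both versions on data of realistic size before drawing conclusions.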

Beyond its computational efficiency, NumPy offers a wide range of mathematical functions and statistical tools that are essential for data analysis. These include statistical operations, linear algebra, Fourier transforms, random number generation, and more. By leveraging these capabilities, analysts can streamline their workflows and obtain accurate results more effectively.
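The short tour below touches each of the areas just mentioned. It is only a sketch: the sample data, the small linear system, and the test signal are made up for illustration.

```python
import numpy as np

# Random number generation: a seeded generator gives repeatable samples.
rng = np.random.default_rng(seed=0)
samples = rng.normal(loc=10.0, scale=2.0, size=1_000)

# Statistics: mean and sample standard deviation (ddof=1).
mean = samples.mean()
std = samples.std(ddof=1)

# Linear algebra: solve the system A @ x = b.
A = np.array([[3.0, 1.0],
              [1.0, 2.0]])
b = np.array([9.0, 8.0])
x = np.linalg.solve(A, b)

# Fourier transform: find the dominant frequency bin of a sine wave
# that completes 8 cycles over 64 samples.
t = np.arange(64)
signal = np.sin(2 * np.pi * 8 * t / 64)
spectrum = np.abs(np.fft.rfft(signal))
dominant_bin = int(np.argmax(spectrum))

print("mean:", mean, "std:", std)
print("solution:", x)
print("dominant frequency bin:", dominant_bin)
```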

Moreover, NumPy integrates with other popular data analysis libraries, such as pandas and Matplotlib, which build on its arrays. The combination of these libraries allows analysts to manipulate and visualize data with ease, providing a comprehensive toolkit for data analysis tasks.

In summary, NumPy serves as a valuable tool for boosting the performance of data analysis tasks by offering efficient computational capabilities, a wide range of mathematical functions, and integration with other libraries. By building on NumPy, analysts can speed up their data analysis workflows, reach deeper insights, and ultimately achieve better outcomes.

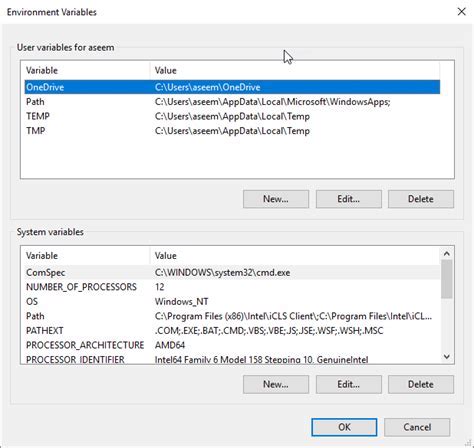

Creating an Optimized Windows Environment for Efficient Data Analysis

When it comes to performing data analysis tasks, having a fast and efficient environment is crucial. One way to achieve this is to use Docker to create a customized Windows image that includes the popular numerical computing library NumPy.

In this section, we explore the benefits of, and the main considerations in, using Docker to create a tailored Windows environment for data analysis. By using the flexibility that Docker provides, we can control our working environment and give NumPy the conditions it needs to perform well.

By containerizing our data analysis environment with Docker, we can isolate and encapsulate all the necessary dependencies and configurations. This not only ensures reproducibility but also simplifies the setup process, allowing us to quickly set up a standardized environment across different machines.
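One simple way to check that two machines really are running the same environment is to print a short report from inside the container and compare the output. The sketch below is one possible approach; the function name and the choice of fields are illustrative, not a standard.

```python
import platform

import numpy as np


def environment_report():
    """Collect the details most likely to differ between environments."""
    return {
        "python": platform.python_version(),
        "numpy": np.__version__,
        "os": platform.system(),
        "machine": platform.machine(),
    }


report = environment_report()
for key, value in report.items():
    print(f"{key}: {value}")
```

If the reports from two containers built from the same image differ, that usually points to an unpinned dependency or a different base image.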

Furthermore, Docker lets us control the resources available to our Windows image at run time. Per-container limits on CPU and memory help ensure that data analysis tasks receive the computational resources they need without starving other workloads on the same host.
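As a rough sketch of what container-level resource allocation can look like, the helper below assembles a `docker run` command that uses the `--cpus` and `--memory` options. The image name `numpy-analysis:latest` and the script `analyze.py` are hypothetical placeholders, and the limits shown are arbitrary; the helper only builds the argument list and does not start a container.

```python
def build_docker_run(image, command, cpus=None, memory=None):
    """Build the argument list for `docker run` with optional resource limits."""
    args = ["docker", "run", "--rm"]
    if cpus is not None:
        args += ["--cpus", str(cpus)]   # cap on CPU time available to the container
    if memory is not None:
        args += ["--memory", memory]    # memory limit, for example "4g"
    args.append(image)
    args += list(command)
    return args


# Hypothetical image and script names, used for illustration only.
cmd = build_docker_run(
    "numpy-analysis:latest",
    ["python", "analyze.py"],
    cpus=2,
    memory="4g",
)
print(" ".join(cmd))
```

The resulting list can be handed to `subprocess.run(cmd, check=True)` on a machine where Docker is installed. Which limits make sense depends on the host and the workload, so treat the values above as starting points.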

In addition to performance enhancements, using Docker to create a Windows image with NumPy makes it easy to scale our data analysis capabilities. We can replicate the same environment across multiple machines or cloud instances, which lets us split larger datasets between containers or run independent analyses in parallel.

In summary, Docker offers a powerful way to create a customized Windows environment for data analysis with NumPy. By using Docker's isolation, reproducibility, and resource controls, we can improve the efficiency and scalability of our data analysis tasks.
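When the same image runs on several machines, the dataset has to be divided between them and the partial results combined afterwards. The sketch below shows that pattern within a single process, assuming a statistic (the mean) that can be rebuilt from per-shard counts and sums. The function names are illustrative, and the step that would actually send each shard to a container is left out.

```python
import numpy as np


def split_into_shards(data, n_workers):
    """Divide the data into n_workers roughly equal pieces."""
    return np.array_split(data, n_workers)


def partial_stats(shard):
    """Work done by one worker: return (element count, sum) for its shard."""
    return int(shard.size), float(shard.sum())


def combine_mean(partials):
    """Combine per-shard (count, sum) pairs into the overall mean."""
    total_count = sum(count for count, _ in partials)
    total_sum = sum(subtotal for _, subtotal in partials)
    return total_sum / total_count


# Sample input: 1.0, 2.0, ..., 100.0 split between three hypothetical workers.
data = np.arange(1, 101, dtype=np.float64)
shards = split_into_shards(data, n_workers=3)
partials = [partial_stats(shard) for shard in shards]
overall_mean = combine_mean(partials)

print([shard.size for shard in shards], overall_mean)
```

Not every statistic combines this simply. A median, for example, cannot be recovered from per-shard medians, so the choice of what each worker returns matters.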

FAQ

How can a Docker Windows image with NumPy boost performance for data analysis?

A Docker Windows image with NumPy helps mainly by providing a pre-configured environment with all the necessary dependencies, including NumPy. This eliminates manual installation and configuration, saving time and effort. Docker's containerization also provides isolation and reproducibility, and per-container resource limits allow efficient use of system resources. The speed of the computations themselves comes from NumPy's compiled, vectorized operations.

Can a Docker Windows image with NumPy be used for data analysis on any Windows machine?

Yes, with some conditions. A Docker Windows image with NumPy can be used on any Windows machine that can run Windows containers. When containers run with process isolation, the Windows version of the container's base image must also be compatible with the host's Windows version. Within those limits, the image provides a consistent and portable environment and avoids the compatibility issues that can arise when dependencies are installed and configured manually. Data analysis tasks can then be replicated and executed across different Windows machines with far fewer compatibility concerns.

Is a Docker Windows image with NumPy suitable for large-scale data analysis projects?

Yes, a Docker Windows image with NumPy can be suitable for large-scale data analysis projects. Containers use system resources efficiently, and several containers can run at the same time, so work can be spread across them. NumPy's optimized mathematical functions provide a reliable and efficient basis for processing and analyzing large datasets. Keep in mind that NumPy arrays normally live in memory, so datasets larger than the memory available to a container still need to be processed in chunks or divided between containers.