Are you looking for a simple way to package your software environment into a portable unit? Look no further. In this article, we show how to turn a directory you already have into a custom Docker image, from which you can start containers tailored to your needs. No prior experience is required. We will guide you step by step through the process using standard Linux command line tools.

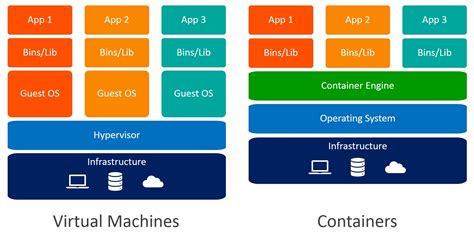

Docker greatly simplifies application deployment and management. Whether you're an aspiring developer or a seasoned professional, Docker offers a convenient way to package your software together with its dependencies. With a few command line instructions, you can bundle your application, its configuration, and its libraries into an image, and then run that image as a self-contained, isolated process known as a container. Because a container brings its own libraries and configuration instead of relying on what is installed on the host, it behaves consistently across machines that run Docker. Containers do share the host's kernel, however, so they are isolated processes rather than full virtual machines.

Somewhere among the directories on your computer lies the foundation for your container. With a few Linux commands, you can take an existing folder, tidy it up, and package it into an image tailored to your specific requirements, ready to run as a container. If you can navigate the command line, you already have the skills you need. Let's dive into the essential steps for transforming that directory into a working container!

The Fundamentals of Docker and Creating an Image

In this section, we cover the essential concepts of Docker and how a customized container image is constructed. We look at how Docker packages and isolates applications, and why building your own image is the key to getting the most out of it.

A containerized environment offers numerous advantages, enabling efficient deployment, scalability, and portability of applications. Docker, as a leading containerization tool, empowers developers to create lightweight and self-contained environments that can run consistently across various platforms.

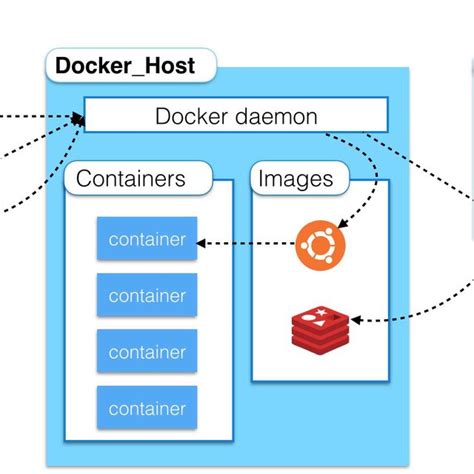

Within the Docker ecosystem, images play a crucial role. An image serves as a template or blueprint for constructing a container, encapsulating everything required for an application to run. By understanding the process of image creation, developers can tailor containers according to specific requirements, incorporating desired configurations, dependencies, and application code.

The image creation process involves assembling the necessary components, such as the base operating system, application code, and additional dependencies, into a cohesive package. Docker provides a straightforward mechanism to create and manage images, allowing developers to streamline application deployments and ensure reproducibility across different environments.

| Key Concept | What It Provides |
|---|---|
| Containerization | Efficient deployment and scalability |
| Docker | Consistent application execution across platforms |
| Images | Blueprints for constructing containers |
| Image Creation | Customizing containers for specific requirements |

Understanding the fundamentals of Docker and image creation is crucial for developers looking to harness the full potential of containerization. By mastering these concepts, you will be equipped with the knowledge and skills to efficiently utilize Docker and create customized container images that meet your application's unique needs.

Preparing the Directory for Docker Image Creation

In this section, we will discuss the steps to be taken before creating a Docker image from an existing directory using the Linux command line. Prior to the image creation process, it is important to ensure that the directory is properly organized and contains all the necessary files and dependencies.

- Organizing the Directory: Before creating a Docker image, structure the existing directory so that the required files are easy to identify and access. This can involve creating separate folders for different components or modules of the application, naming files descriptively, and keeping the application's entry point and configuration files easy to locate.

- Managing Dependencies: Identify every library, framework, or package the application needs. Rather than relying on whatever happens to be installed on your own machine, list them (ideally with versions) in a dependency file inside the directory, such as requirements.txt for Python or package.json for Node.js, so they can be installed inside the image during the build.

- Ensuring Compatibility: Docker images are most commonly Linux-based, so check that the directory's contents will work on a Linux system. For example, Linux file names are case-sensitive, shell scripts should use Unix (LF) line endings, and any script that will be executed needs its executable permission set.

- Cleaning Up the Directory: To avoid any unnecessary files or clutter, it is advisable to clean up the existing directory before creating the Docker image. This can involve removing any temporary files, unnecessary logs, or other files that are not required for the application to run.

By following these preparatory steps, you can ensure that the existing directory is properly organized and ready for the creation of a Docker image using the Linux command line.

Cleaning Up Redundant Files and Directories

In this section, we look at removing files and folders that the Docker image does not need. Leaving them out keeps the image small, which makes builds, uploads, and deployments faster.

To minimize the footprint of the Docker image, identify and eliminate redundant files and directories. A smaller image builds faster, transfers faster between registries and hosts, and takes up less disk space wherever it is stored.

Throughout this section, we will discuss various techniques and best practices to identify and remove redundant files and directories. These techniques may involve identifying duplicate files, filtering out temporary files, removing unused dependencies, and eliminating any unnecessary or redundant configurations. By following these practices, we can ensure that our Docker image remains lean, efficient, and devoid of any unnecessary clutter.

Identifying Duplicate Files: Before creating the Docker image, check the directory for duplicate files. Every extra copy adds to the image size without adding anything the application needs. Tools such as fdupes or rmlint can find these duplicates so you can remove the redundant copies, resulting in a leaner image.
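On Linux, fdupes or rmlint is the practical choice. To illustrate the basic idea (this is not how either tool is implemented internally), here is a minimal Python sketch that groups files by size and then by SHA-256 digest; files that share a digest almost certainly have identical contents. It only reports duplicates and deletes nothing, so you can review the results first.

```python
import hashlib
import os
from collections import defaultdict


def file_digest(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(root):
    """Return sorted groups of paths under root whose contents are identical.

    Files are bucketed by size first (cheap), and only files that share a
    size are hashed. Symlinks are skipped. Nothing is deleted.
    """
    by_size = defaultdict(list)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.isfile(path) and not os.path.islink(path):
                by_size[os.path.getsize(path)].append(path)

    groups = []
    for paths in by_size.values():
        if len(paths) < 2:
            continue  # a unique size cannot have a duplicate
        by_hash = defaultdict(list)
        for path in paths:
            by_hash[file_digest(path)].append(path)
        groups.extend(sorted(group) for group in by_hash.values() if len(group) > 1)
    return sorted(groups)
```

Calling `find_duplicates("path_to_directory")` returns one list per set of identical files; keep one copy from each set and remove or ignore the rest.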

Filtering Out Temporary Files: Temporary files, generated during development or build processes, are typically not required within the Docker image. Removing these transient files, such as logs, caches, or temporary backups, can help reduce the image size and avoid unnecessary clutter. Tools like find and grep can be utilized to identify and remove such temporary files effectively.
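A find command with several -name tests is the usual way to do this. The Python sketch below performs the same kind of dry run: it lists files that match common temporary-file patterns and reports whole cache directories without descending into them. The pattern list is an assumption for illustration only, so adjust it to your own toolchain.

```python
import fnmatch
import os

# Example patterns only (an assumption): adjust them to your own toolchain.
TEMP_FILE_PATTERNS = ("*.log", "*.tmp", "*.swp", "*.bak", "*~", "*.pyc")
TEMP_DIR_NAMES = frozenset({"__pycache__", ".cache"})


def find_temp_files(root, patterns=TEMP_FILE_PATTERNS, dir_names=TEMP_DIR_NAMES):
    """Yield paths under root that look like temporary artifacts.

    This is a dry run: nothing is deleted. Whole cache directories are
    reported once and not descended into.
    """
    for dirpath, dirnames, filenames in os.walk(root):
        for name in list(dirnames):
            if name in dir_names:
                yield os.path.join(dirpath, name)
                dirnames.remove(name)  # prune: os.walk will not descend into it
        for name in filenames:
            if any(fnmatch.fnmatch(name, pattern) for pattern in patterns):
                yield os.path.join(dirpath, name)
```

Review the list before deleting anything. If you want to keep these files on disk, the same patterns can instead go into a .dockerignore file so they never enter the image.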

Removing Unused Dependencies: Every package installed in the image adds size and potential vulnerabilities, so install only what the application actually needs. On Debian-based images, apt (for example, apt-get autoremove) can remove packages that are no longer required, and yum (yum autoremove) serves the same purpose on RPM-based images. Because each Dockerfile instruction creates a separate layer, cleanup only shrinks the image if it runs in the same RUN instruction that installed the packages.

Eliminating Unnecessary Configurations: Configurations specific to the development environment, such as development database configurations or debugging settings, are often not required within the Docker image. Removing these unnecessary configurations can improve the security and performance of the image. By carefully reviewing and filtering out such configurations, we can maintain a more streamlined and production-ready Docker image.

By implementing the practices mentioned above, you will be able to perform effective cleanup of unnecessary files and folders, thereby optimizing the Docker image for deployment. This process will result in improved performance, reduced resource utilization, and a more efficient overall Docker image creation process.

Building the Containerized Representation

In this section, we will explore the process of constructing the self-contained representation of our application using Docker. By encapsulating the necessary dependencies, configurations, and files within a Docker image, we can distribute and deploy our application across various environments with far fewer compatibility issues and dependency conflicts.

We will discuss the step-by-step procedure involved in building the containerized representation of our application. This involves selecting a suitable Docker base image, defining the required configurations, setting up the necessary dependencies and environment variables, and finally, packaging all these components into a Docker image that encapsulates our application.
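To make these steps concrete, the following Python sketch writes a minimal Dockerfile into the project directory. FROM selects the base image, ENV sets environment variables, COPY brings in the application files, RUN installs dependencies, and CMD sets the default command. The base image `python:3.12-slim`, the `requirements.txt` file, and the `app.py` entry point in the example are assumptions for illustration, so substitute your own.

```python
import json
from pathlib import Path


def render_dockerfile(base_image, workdir="/app", env=None,
                      install_cmd=None, cmd=None):
    """Return the text of a minimal Dockerfile."""
    lines = [f"FROM {base_image}", f"WORKDIR {workdir}"]
    for key, value in (env or {}).items():
        lines.append(f'ENV {key}="{value}"')
    # Copy the (already cleaned-up) directory into the working directory.
    lines.append("COPY . .")
    if install_cmd:
        lines.append(f"RUN {install_cmd}")
    if cmd:
        # Exec form (a JSON array): the process runs without a wrapping shell.
        lines.append("CMD " + json.dumps(cmd))
    return "\n".join(lines) + "\n"


def write_dockerfile(directory, **options):
    """Write <directory>/Dockerfile and return its path."""
    path = Path(directory) / "Dockerfile"
    path.write_text(render_dockerfile(**options))
    return path


# Example usage with hypothetical values.
print(render_dockerfile(
    "python:3.12-slim",
    env={"APP_ENV": "production"},
    install_cmd="pip install --no-cache-dir -r requirements.txt",
    cmd=["python", "app.py"],
))
```

Copying everything before installing keeps the sketch short. A common refinement is to COPY only the dependency file and run the install step first, so Docker's layer cache can skip reinstalling dependencies when only application code changes.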

Throughout this process, we will demonstrate how to leverage the capabilities provided by Docker to efficiently package and distribute our application. We will also explore best practices and considerations that can be followed to ensure the resulting Docker image is optimized, secure, and easily maintainable.

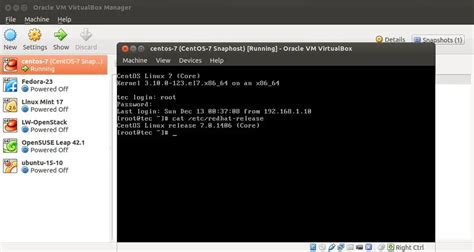

Using the Linux Terminal to Generate a Snapshot

In this section, we will explore how to leverage the power of the Linux command line to capture a snapshot of a specific directory, preserving its contents and structure.

- Generate a snapshot of a target directory

- Save the snapshot for future use

- Explore available command line options

- Efficiently preserve the directory's content and structure

- Understand the underlying Linux commands

Using the Linux terminal and standard tools such as tar, we can produce a snapshot that captures a chosen directory in a single archive, preserving its files, structure, and permissions. The snapshot can then be shared or replicated without missing vital information.

We will delve into the different ways in which you can generate a snapshot, exploring various command options and their specific functionalities. Additionally, we will highlight the importance of understanding the underlying commands to efficiently capture and save snapshots of your desired directories.
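On the command line, `tar -czf snapshot.tar.gz -C path_to_directory .` archives the directory's contents with their layout and permissions. The sketch below does the same with Python's tarfile module so the result is easy to check. Such a tarball can be turned into an image with `docker import snapshot.tar.gz my_image_name`, which creates an image containing just those files. Keep in mind that an imported image carries no metadata such as a default command, and unless the directory is a complete root filesystem it will contain no shell or language runtime, so for applications a Dockerfile-based build is usually the better route.

```python
import os
import tarfile


def snapshot_directory(src_dir, archive_path):
    """Write a gzip-compressed tar snapshot of src_dir and return its path.

    Members are stored relative to src_dir (arcname="."), mirroring
    `tar -czf archive -C src_dir .`. The archive must live outside src_dir,
    otherwise it would try to include itself.
    """
    src = os.path.abspath(src_dir)
    dest = os.path.abspath(archive_path)
    if os.path.commonpath([src, dest]) == src:
        raise ValueError("archive_path must be outside src_dir")
    with tarfile.open(dest, "w:gz") as tar:
        tar.add(src, arcname=".")  # recursive by default
    return dest
```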

Whether you are an experienced Linux user or a beginner, this section will provide you with the necessary knowledge to utilize the Linux command line effectively for creating targeted snapshots of directories.

Testing the Containerized Application

In this section, we will focus on the process of testing the application that has been containerized using Docker. Testing is an integral part of the development lifecycle as it ensures the reliability and functionality of the application.

Verifying Container Integrity: Before running any tests, it is important to ensure the integrity of the Docker container. This can be achieved by running a series of validation checks and examining the container's metadata and configuration settings.
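One way to examine an image's metadata is `docker image inspect my_image_name`, which prints a JSON array with one object per image; the defaults a container starts with live under its `Config` key. The Python sketch below parses that output and flags a few common configuration mistakes. Which checks matter (the expected command, required environment variables) is an assumption you should adapt, and the image name is hypothetical.

```python
import json
import subprocess


def inspect_image(image):
    """Return the raw JSON printed by `docker image inspect` (needs a Docker daemon)."""
    result = subprocess.run(["docker", "image", "inspect", image],
                            capture_output=True, text=True, check=True)
    return result.stdout


def summarize_image_config(inspect_json):
    """Pull the runtime defaults out of the first image in the inspect output."""
    image = json.loads(inspect_json)[0]
    config = image.get("Config") or {}
    return {
        "cmd": config.get("Cmd"),
        "entrypoint": config.get("Entrypoint"),
        "workdir": config.get("WorkingDir"),
        "env": config.get("Env") or [],
        "exposed_ports": sorted((config.get("ExposedPorts") or {}).keys()),
    }


def check_image_config(summary, expected_cmd=None, required_env=()):
    """Return a list of problems found; an empty list means all checks passed."""
    problems = []
    if expected_cmd is not None and summary["cmd"] != expected_cmd:
        problems.append(f"unexpected CMD: {summary['cmd']!r}")
    # Env entries look like "NAME=value"; compare on the name only.
    env_names = {entry.split("=", 1)[0] for entry in summary["env"]}
    for name in required_env:
        if name not in env_names:
            problems.append(f"missing environment variable: {name}")
    return problems
```

For example, `check_image_config(summarize_image_config(inspect_image("my_image_name")), expected_cmd=["python", "app.py"])` returns an empty list when the image's default command matches.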

Unit Testing: Unit testing is a crucial step in the development process that tests individual units of code to ensure their functionality. In the context of the containerized application, unit tests can be executed within the container to verify the behavior of individual components or functions.

Integration Testing: Integration testing focuses on examining the interaction between different components of the application. By simulating real-world scenarios and checking the compatibility of various elements, integration tests help identify any issues that may arise during the deployment of the containerized application.

Functional Testing: Functional testing evaluates the application based on its intended functionality. It aims to ensure that the containerized application functions as expected, fulfilling the requirements outlined in the project's specifications.

Performance Testing: Performance testing assesses the efficiency and speed of the containerized application. By subjecting the application to various load levels and stress testing scenarios, it helps identify potential bottlenecks and performance issues.

Security Testing: Security testing is crucial to uncover and address any vulnerabilities in the containerized application. By conducting various tests and assessments, such as penetration testing and vulnerability scanning, security testing helps ensure the application's robustness against potential threats.

Automated Testing: Automation plays a pivotal role in testing containerized applications. By automating repetitive test cases and scenarios, it streamlines the testing process and ensures consistent and reliable results.

Continuous Integration and Deployment: As part of the testing process, it is important to incorporate continuous integration and deployment practices. This allows for frequent testing and validation, ensuring any issues are detected and resolved early in the development cycle.

Overall, testing the Docker containerized application involves a comprehensive evaluation of its integrity, functionality, performance, and security. By implementing a robust testing strategy, developers can ensure the reliability and stability of their application in different deployment environments.

Running Containers and Verifying Functionality

In this section, we will explore the process of executing containers and confirming that they are working correctly. By following the steps outlined below, you will be able to ensure the successful implementation and functionality of your containerized environment.

| Action | Description |
|---|---|
| 1. Start the container | Launch the container using the appropriate command or orchestration tool. This will initiate the execution of the containerized application with all its dependencies and configurations. |
| 2. Access the container | Gain access to the running container, either through its command line interface or by connecting to its exposed ports. This will allow you to interact with the application and perform any necessary tests or operations. |
| 3. Execute functionality tests | Verify that the containerized application functions as intended by executing various tests. This may involve running specific commands, generating sample data, or simulating user interactions to assess the expected output and behavior. |
| 4. Monitor resource utilization | Monitor the usage of system resources, such as CPU, memory, and network, during the operation of the container. This will help identify any potential performance issues or bottlenecks that need to be addressed. |
| 5. Analyze logs and error messages | Inspect the logs and error messages generated by the containerized application. By examining these records, you can identify any issues or warnings that might affect the functionality of the application and take appropriate actions to resolve them. |
| 6. Repeat and refine | Iterate through the steps above, adjusting configurations, testing scenarios, and analyzing results until the containerized application is performing optimally and meeting the desired requirements. |
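Several of these steps map directly onto Docker CLI commands: `docker run -d --name NAME -p HOST_PORT:CONTAINER_PORT IMAGE` starts a detached container with a published port, `docker stats --no-stream` prints a one-off snapshot of CPU, memory, and network usage, and `docker logs NAME` shows what the container has written to standard output and standard error. The Python sketch below assembles the `docker run` arguments and collects the logs; the container name, port mapping, and image name in the example are hypothetical.

```python
import subprocess
import time


def docker_run_argv(image, name=None, ports=None, env=None, detach=True):
    """Build the argument list for `docker run` (nothing is executed here)."""
    argv = ["docker", "run"]
    if detach:
        argv.append("-d")
    if name:
        argv += ["--name", name]
    for host_port, container_port in (ports or {}).items():
        argv += ["-p", f"{host_port}:{container_port}"]
    for key, value in (env or {}).items():
        argv += ["-e", f"{key}={value}"]
    argv.append(image)
    return argv


def start_and_read_logs(image, name, wait_seconds=2.0, **options):
    """Start a container, wait briefly, and return its combined logs.

    Requires a running Docker daemon. Remove the container afterwards
    with `docker rm -f NAME`.
    """
    subprocess.run(docker_run_argv(image, name=name, **options),
                   capture_output=True, check=True)
    time.sleep(wait_seconds)  # give the application a moment to start logging
    logs = subprocess.run(["docker", "logs", name],
                          capture_output=True, text=True, check=True)
    return logs.stdout + logs.stderr
```

For example, `docker_run_argv("my_image_name", name="web", ports={8080: 8000})` produces the arguments for `docker run -d --name web -p 8080:8000 my_image_name`.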

By following these steps and combining them with your knowledge and experience, you will be able to effectively run containers and validate their functionality, ensuring that your Docker-based solution operates smoothly and reliably.


FAQ

How can I create a Docker image from an existing directory in the Linux command line?

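You can create a Docker image from an existing directory with the command "docker build -t image_name path_to_directory". The directory becomes the build context, and Docker follows the instructions in the file named "Dockerfile" at its root, so the directory needs to contain one (or you can point to a Dockerfile elsewhere with the "-f" option). The resulting image is tagged with the name you specified.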

What is the purpose of creating a Docker image from an existing directory?

The purpose of creating a Docker image from an existing directory is to bundle up all the necessary files and dependencies required to run an application into a single package. This makes it easy to distribute and share the application, ensuring that it runs consistently across different environments.

Can I specify a different name for the Docker image that I want to create?

Yes. The "-t" option sets the image name, optionally followed by a tag in the form name:tag. For example, "docker build -t my_image_name path_to_directory" creates an image named "my_image_name"; because no tag is given, Docker tags it "latest". To set the tag explicitly, use a command such as "docker build -t my_image_name:1.0 path_to_directory".

What happens if there are multiple Dockerfiles in the directory?

If there are multiple Dockerfiles in the directory, the default behavior is to use the one named "Dockerfile" for building the image. If you want to use a different Dockerfile, you can specify its path using the "-f" option followed by the path to the Dockerfile. For example, "docker build -t image_name -f path_to_Dockerfile path_to_directory".
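If you script your builds, assembling the command as an argument list rather than a single string avoids quoting problems with paths that contain spaces. Here is a minimal Python sketch covering the "-t" and "-f" options described above; the directory, tag, and Dockerfile names in the example are hypothetical.

```python
import shlex
import subprocess


def docker_build_argv(context_dir, tag, dockerfile=None):
    """Return argv for `docker build -t TAG [-f DOCKERFILE] CONTEXT_DIR`."""
    argv = ["docker", "build", "-t", tag]
    if dockerfile is not None:
        argv += ["-f", dockerfile]  # only needed when not using ./Dockerfile
    argv.append(context_dir)
    return argv


def build_image(context_dir, tag, dockerfile=None):
    """Run the build (requires Docker); raises CalledProcessError on failure."""
    subprocess.run(docker_build_argv(context_dir, tag, dockerfile), check=True)


# Show the command that would run, shell-quoted for copy and paste.
print(shlex.join(docker_build_argv("./my app", "my_image_name:1.0",
                                   dockerfile="./my app/Dockerfile.dev")))
```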

Is it possible to include only specific files or directories from the source directory in the Docker image?

Yes. Add a ".dockerignore" file to the root of the directory. It works much like a ".gitignore" file: each line is a pattern for files or directories to leave out of the build context, which is the set of files Docker sends to the builder. Excluded files are not available to COPY or ADD instructions, so they cannot end up in the image. To include only specific files, you can exclude everything with "*" and then re-include what you need with lines that start with "!".
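To experiment with how the rules interact, here is a simplified Python model of the matching: blank lines and "#" comments are skipped, a leading "!" marks an exception that re-includes paths, the last matching line wins, and a pattern that matches a directory also covers everything inside it. This is an approximation for illustration, not Docker's actual matcher; in particular it does not support "**" patterns.

```python
import fnmatch


def parse_dockerignore(text):
    """Return (pattern_segments, is_exception) rules from .dockerignore text."""
    rules = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments are ignored
        is_exception = line.startswith("!")
        pattern = line[1:].strip() if is_exception else line
        rules.append((pattern.strip("/").split("/"), is_exception))
    return rules


def _matches(path_parts, pattern_parts):
    """Match segment by segment, so "*" never crosses a "/" boundary."""
    return len(path_parts) == len(pattern_parts) and all(
        fnmatch.fnmatchcase(part, pat) for part, pat in zip(path_parts, pattern_parts)
    )


def is_excluded(rel_path, rules):
    """Approximate the rule that the last matching pattern wins.

    A pattern that matches a directory also covers everything inside it.
    Simplification: "**" patterns are not supported here.
    """
    parts = rel_path.strip("/").split("/")
    prefixes = [parts[:i] for i in range(1, len(parts) + 1)]
    excluded = False
    for pattern_parts, is_exception in rules:
        if any(_matches(prefix, pattern_parts) for prefix in prefixes):
            excluded = not is_exception
    return excluded
```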