In today's fast-paced technological landscape, integrating diverse systems has become essential for efficient data management. As businesses work to optimize their operations, adopt flexible solutions, and stay ahead of the competition, the ability to connect disparate platforms seamlessly has become a critical requirement.

Imagine a world where data flows effortlessly, unrestricted by the boundaries of specific operating environments: a world where Windows file shares and Docker containers work together, making data management more efficient, flexible, and reliable.

Today, we explore one such connection: linking Distributed File Systems (DFS) to Docker containers on Windows 10. Combining the two lets organizations benefit from containerization while continuing to use their existing DFS infrastructure.

As the demand for scalable and agile solutions keeps growing, this article sheds light on the intricacies of connecting DFS to Docker containers on Windows 10, covering the benefits, challenges, and best practices of this integration. Join us as we navigate the landscape where data systems and container environments converge.

Getting Started with Docker on Windows 10

Introduction: This section guides you through setting up Docker on your Windows 10 machine. Docker, a popular containerization platform, lets you run applications in isolated environments called containers. Containers are lightweight and portable, so you can develop software once and deploy it consistently across machines and environments. Because containers share the host's kernel, Docker Desktop runs Linux containers on Windows inside a lightweight virtual machine.

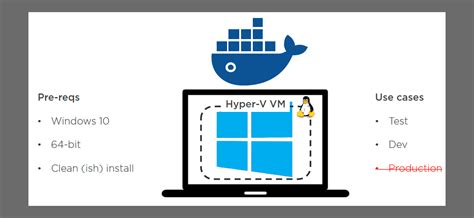

System Requirements: Before installing Docker, confirm that your Windows 10 system meets the prerequisites: a 64-bit edition and build of Windows 10 that Docker Desktop currently supports (check Docker's published requirements, since the minimum build changes between releases), enough memory and disk space, and hardware virtualization enabled in your computer's BIOS/UEFI settings. Docker Desktop depends on virtualization, through either its WSL 2 or its Hyper-V backend, to run containers.
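Before installing, you can check the firmware virtualization flag using Windows' built-in `systeminfo` command. The minimal Python sketch below parses the "Hyper-V Requirements" part of its output. It assumes English-language output (the label text is localized), and the function name is hypothetical.

```python
import re
from typing import Optional

# Label text as printed by English-language `systeminfo` (assumption: other locales differ).
_FIRMWARE_RE = re.compile(r"Virtualization Enabled In Firmware:\s*(Yes|No)")
_HYPERVISOR_RUNNING = "A hypervisor has been detected"


def virtualization_enabled(systeminfo_output: str) -> Optional[bool]:
    """Interpret the "Hyper-V Requirements" part of `systeminfo` output.

    Returns True or False from the firmware line, True when a running hypervisor
    hides that line (virtualization is then necessarily on), and None when
    neither text is found.
    """
    if _HYPERVISOR_RUNNING in systeminfo_output:
        return True
    match = _FIRMWARE_RE.search(systeminfo_output)
    if match is None:
        return None
    return match.group(1) == "Yes"
```

On Windows, pass it `subprocess.run(["systeminfo"], capture_output=True, text=True).stdout`. A `None` result only means the text did not match; the CPU page of Task Manager's Performance tab also shows whether virtualization is enabled.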

Downloading and Installing Docker: To start using Docker on Windows 10, you need to download the Docker Desktop application from the official Docker website. Once downloaded, run the installation file and follow the on-screen instructions to complete the installation process. Docker Desktop includes Docker Engine, the container runtime, along with other tools and services necessary for managing and working with containers.

Configuring Docker Settings: After installing Docker, you may need to adjust certain settings to suit your requirements. Docker Desktop's Settings window lets you change options such as resource allocation, networking, and file sharing. Adjusting these settings can improve the performance and resource usage of Docker containers on your Windows 10 machine. With the WSL 2 backend, CPU and memory limits are set through WSL's own configuration rather than in Docker Desktop.
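With the WSL 2 backend, the CPU and memory available to Docker Desktop come from the WSL 2 virtual machine, whose limits are read from a `.wslconfig` file in your user profile. The minimal Python sketch below renders such a file. `memory` and `processors` are documented keys of the `[wsl2]` section; the helper name and the example values are illustrative only.

```python
import configparser
import io


def render_wslconfig(memory: str, processors: int) -> str:
    """Render a minimal .wslconfig that caps the WSL 2 VM Docker Desktop runs in."""
    config = configparser.ConfigParser()
    config["wsl2"] = {"memory": memory, "processors": str(processors)}
    buffer = io.StringIO()
    # Write "key=value" without spaces, matching the usual .wslconfig style.
    config.write(buffer, space_around_delimiters=False)
    return buffer.getvalue()
```

For example, `(Path.home() / ".wslconfig").write_text(render_wslconfig("4GB", 2))` would overwrite any existing file, so merge by hand if you already have one. Run `wsl --shutdown` and restart Docker Desktop for the limits to take effect.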

Verifying the Installation: Once Docker is installed and configured, verify that it works correctly on your Windows 10 system. Open a Command Prompt or PowerShell window and run `docker version`, which should print both a Client and a Server section (the Server section appears only when the engine is running), and `docker run hello-world`, which pulls a small test image and prints a confirmation message if everything is working.
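The same check can be scripted, for example in a setup script that waits for Docker Desktop to finish starting. This minimal Python sketch runs `docker version` with a Go-template `--format` so that only the engine (server) version is printed. The helper name is hypothetical, and the `run` parameter exists only so the check can be exercised without Docker installed.

```python
import subprocess
from typing import Callable, Optional

# Print only the daemon's version; the command fails if the engine is unreachable.
VERSION_CMD = ["docker", "version", "--format", "{{.Server.Version}}"]


def docker_server_version(
    run: Callable[..., subprocess.CompletedProcess] = subprocess.run,
) -> Optional[str]:
    """Return the Docker Engine version, or None if Docker is missing or not running."""
    try:
        result = run(VERSION_CMD, capture_output=True, text=True, timeout=30)
    except (FileNotFoundError, subprocess.TimeoutExpired):
        # docker.exe is not on PATH, or the CLI hung waiting for the engine.
        return None
    if result.returncode != 0:
        # The CLI exists but could not reach the engine (e.g. Docker Desktop not started).
        return None
    return result.stdout.strip() or None
```

Calling `docker_server_version()` returns a version string once the engine is up; `docker run hello-world` remains the quickest end-to-end test.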

Conclusion: In this section, we explored the process of setting up Docker on a Windows 10 machine. By following these steps, you can quickly get started with containerization and leverage the benefits of Docker to develop, deploy, and manage applications in an efficient and isolated manner.

Installation Guide for Docker Desktop

In this section, we will explore the step-by-step process of setting up Docker Desktop on your Windows 10 machine. Docker Desktop allows you to create and manage containers, improving software development and deployment processes.

- Begin by downloading the Docker Desktop installer from the official website.

- Once the download is complete, double-click on the installer to initiate the installation process.

- Follow the on-screen instructions, carefully reading each step before proceeding.

- During the installation, you may be prompted to authorize certain actions and grant necessary permissions. Make sure to review these requests and approve them accordingly.

- After the installation is complete, start Docker Desktop if it does not launch on its own; the installer may first ask you to sign out or restart Windows.

- Optionally, sign in to your Docker account. Signing in is not required to run containers locally; if you want an account, you can create one by selecting the "Create Docker ID" option.

- Docker Desktop will then show a welcome screen with useful information and tips to get started.

- Take some time to explore the Docker Desktop interface and familiarize yourself with its features.

- Congratulations! You have successfully installed Docker Desktop on your Windows 10 machine. Now you can begin creating and managing containers to enhance your software development process.
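For scripted or repeated setups, Docker also documents a command-line install mode for the same installer. The sketch below only builds the argument list. The `install`, `--quiet`, `--accept-license`, and `--backend` options are taken from Docker's command-line installation instructions at the time of writing, so check them against the current documentation; the helper name is hypothetical.

```python
from typing import List, Optional

# Backend names accepted by --backend (per Docker's docs; verify for your installer version).
_BACKENDS = ("wsl-2", "hyper-v")


def silent_install_cmd(installer_path: str, backend: Optional[str] = "wsl-2") -> List[str]:
    """Build the argument list for an unattended Docker Desktop installation."""
    if backend is not None and backend not in _BACKENDS:
        raise ValueError(f"unsupported backend: {backend!r}")
    cmd = [installer_path, "install", "--quiet", "--accept-license"]
    if backend is not None:
        cmd.append(f"--backend={backend}")
    return cmd
```

Run the result with `subprocess.run(cmd, check=True)` from an elevated (administrator) prompt, then start Docker Desktop.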

Remember to regularly update Docker Desktop to benefit from the latest features and security enhancements. Stay tuned for additional articles on how to utilize Docker containers effectively.

Configuring Distributed File System (DFS) for Dockerized Environment

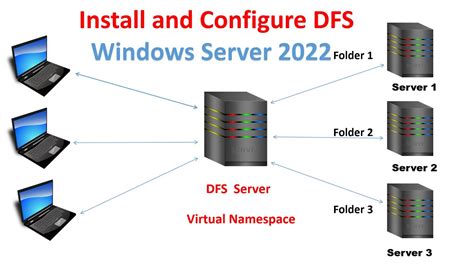

In this section, we will explore the process of setting up Distributed File System (DFS) for a containerized environment, enabling efficient data storage and retrieval across multiple nodes. By implementing DFS, users can experience enhanced scalability, reliability, and fault tolerance in their Dockerized applications.

| Step | Description |
|---|---|
| 1 | Create a DFS Namespace |
| 2 | Configure DFS Replication |
| 3 | Set Up DFS Folder Targets |
| 4 | Integrate Docker Containers with DFS |
| 5 | Ensure Accessibility and Availability |

In the first step, we create a DFS namespace, which provides a single logical folder structure for our shared data. The namespace lets us organize and manage shared folders that live on different servers under one path. Next, we configure DFS Replication, a separate role service that keeps the contents of folders on different servers in sync. Replication improves data availability and reduces the risk of data loss when a server fails.

Once the namespace and replication are in place, we set up DFS folder targets. Targets are the physical locations where the data resides: shared folders on file servers. Docker containers do not act as targets; they are clients that access the namespace. By connecting our Docker containers to DFS, we can centralize data storage and give multiple containers consistent access to the same files.
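On the server side, steps 1 and 3 map onto cmdlets from the DFSN PowerShell module: `New-DfsnRoot`, `New-DfsnFolder`, and `New-DfsnFolderTarget`. The Python sketch below only generates those PowerShell lines for a domain-based namespace. It assumes the root and folder shares already exist, that the lines run on a machine with the DFS Namespaces tools installed, and that all helper names are hypothetical. Replication (step 2) is configured separately and is not covered.

```python
from typing import List


def ps_quote(value: str) -> str:
    """Quote a string literally for PowerShell (single quotes, embedded ' doubled)."""
    return "'" + value.replace("'", "''") + "'"


def dfsn_setup_commands(namespace: str, root_target: str, folder: str,
                        folder_targets: List[str]) -> List[str]:
    """Build PowerShell lines for a namespace root, one folder, and its targets."""
    if not folder_targets:
        raise ValueError("a DFS folder needs at least one target")
    folder_path = namespace + "\\" + folder
    commands = [
        f"New-DfsnRoot -Path {ps_quote(namespace)} -TargetPath {ps_quote(root_target)} -Type DomainV2",
        # The first target is given when the folder is created...
        f"New-DfsnFolder -Path {ps_quote(folder_path)} -TargetPath {ps_quote(folder_targets[0])}",
    ]
    # ...and any further targets are added to the existing folder.
    for target in folder_targets[1:]:
        commands.append(
            f"New-DfsnFolderTarget -Path {ps_quote(folder_path)} -TargetPath {ps_quote(target)}"
        )
    return commands
```

Each line could be executed with `subprocess.run(["powershell", "-NoProfile", "-Command", line], check=True)` from an elevated session. Single quotes keep PowerShell from expanding `$` in share names such as administrative shares.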

Finally, we need to ensure the accessibility and availability of our DFS-enabled Dockerized environment. By monitoring DFS replication health, network connectivity, and container health, we can address potential issues proactively and keep the system reliable and responsive.
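One way to monitor container health is to check, from inside the container, that the mounted share can still be listed, written, and read. The minimal sketch below assumes the share is mounted at a path you pass in (for example `/data`, a hypothetical mount point) and that the container's account may create a small temporary file there; the function name is hypothetical.

```python
import os
import uuid
from pathlib import Path


def share_is_healthy(mount_point: str) -> bool:
    """Return True if the mounted share can be listed, written, read back, and cleaned up."""
    root = Path(mount_point)
    if not root.is_dir():
        return False
    # Unique name so probes from several containers sharing the mount do not collide.
    probe = root / f".healthcheck-{uuid.uuid4().hex}"
    payload = b"dfs-health-probe"
    try:
        with os.scandir(root) as entries:
            next(entries, None)  # listing raises OSError if the share is unreachable
        probe.write_bytes(payload)
        return probe.read_bytes() == payload
    except OSError:
        return False
    finally:
        try:
            probe.unlink(missing_ok=True)  # remove the probe even if the read failed
        except OSError:
            pass
```

To use it as a Docker health check, a small script could call `sys.exit(0 if share_is_healthy("/data") else 1)` and be referenced from a `HEALTHCHECK CMD` instruction in the Dockerfile; a nonzero exit marks the container as unhealthy.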

Understanding the Concept of Distributed File System

In the realm of modern computing, the Distributed File System (DFS) plays a pivotal role in facilitating efficient and reliable storage and retrieval of files across a network. This technology enables seamless access and management of data by distributing it across multiple servers, thus enhancing scalability, fault-tolerance, and performance.

- Streamlining File Organization

- Safeguarding Data Integrity

- Enhancing Data Accessibility

- Optimizing Storage Utilization

- Improving Data Recovery Capabilities

Streamlining File Organization: DFS provides a logical view of files and directories, eliminating the need for users to remember specific server names or paths. It offers a unified namespace, allowing users to access and locate their files without having to be aware of the underlying physical storage locations.
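The unified namespace can be pictured as a lookup table from logical folder paths to the shares that actually hold the data. The toy Python model below is not how the Windows DFS client works internally (real clients obtain this mapping from the namespace server as referrals); it only illustrates the translation, and the function name is hypothetical.

```python
from typing import Dict, List


def resolve(logical_path: str, folders: Dict[str, List[str]]) -> List[str]:
    """Translate a namespace path into candidate physical UNC paths.

    `folders` maps namespace folder paths to their folder targets. Matching is
    case-insensitive, as UNC paths are on Windows.
    """
    lowered = logical_path.lower()
    for folder, targets in folders.items():
        prefix = folder.lower()
        # Match the folder itself or a path below it; the separator check stops
        # a "Data" folder from also matching "Data2".
        if lowered == prefix or lowered.startswith(prefix + "\\"):
            remainder = logical_path[len(folder):]  # the part after the folder
            return [target + remainder for target in targets]
    raise KeyError(f"no namespace folder covers {logical_path!r}")
```

With a hypothetical mapping from `\\contoso\Public\Data` to `\\fs1\Data` and `\\fs2\Data`, the logical path `\\contoso\Public\Data\report.xlsx` yields both physical copies, and the user never needs to know either server name.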

Safeguarding Data Integrity: Through the utilization of replication and synchronization mechanisms, DFS helps maintain data integrity and reliability. By distributing file replicas across multiple servers, it minimizes the risk of data loss due to hardware failures or network issues.

Enhancing Data Accessibility: DFS gives users access to files regardless of where they or the file servers are located. When a client opens a namespace path, the namespace server returns a referral, a list of folder targets ordered so that targets in the client's own Active Directory site come first, and the client caches that list. This directs clients to nearby servers, which improves performance and reduces network latency.

Optimizing Storage Utilization: DFS lets administrators spread shared folders across multiple servers while users keep the same namespace paths, so capacity can grow by adding servers or moving folders to new targets without reconfiguring clients. Note that Windows DFS Namespaces does not move or rebalance individual files automatically based on file size, server capacity, or bandwidth; administrators decide where each folder's targets live.

Improving Data Recovery Capabilities: When a server fails, clients can fail over to another folder target that holds a replicated copy, so file requests are routed to alternate servers and downtime is kept to a minimum. Replication is not a substitute for backups, though: deletions or corruption on one server are replicated to the others, so recovering an earlier version of a file still requires a backup.
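Client-side failover can be modeled as "try the targets in referral order until one answers". The simplified Python model below sorts targets by a numeric cost standing in for the namespace server's site-based ordering; a real Windows client does this inside its SMB redirector, and the class and function names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class FolderTarget:
    unc_path: str
    site_cost: int  # lower means closer; stands in for the referral ordering


def read_with_failover(targets: List[FolderTarget], read: Callable[[str], bytes]) -> bytes:
    """Try each target from lowest to highest cost and return the first successful read.

    `read` is any function that raises OSError when a target is unreachable.
    """
    failures = []
    for target in sorted(targets, key=lambda t: t.site_cost):
        try:
            return read(target.unc_path)
        except OSError as exc:  # server down, share offline, or a network error
            failures.append(f"{target.unc_path}: {exc}")
    raise OSError("all folder targets failed: " + "; ".join(failures))
```

On a Windows client, `read` could simply be `lambda p: Path(p).read_bytes()`. Collecting every failure message makes it easier to tell a single offline server from a wider network problem.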

Bridging the Gap: Linking Distributed File Systems with Dockerized Environments

In the ever-evolving landscape of modern computing, the need to seamlessly integrate distributed file systems (DFS) with containers has become increasingly crucial. This article explores innovative approaches for establishing a connection between these two key components of contemporary software development.

As organizations embrace containerization to improve software deployment and scalability, their DFS shares must remain usable from inside these dynamic environments. The questions below cover how to give Docker containers access to DFS without compromising performance, security, or compatibility.

FAQ

What is DFS and why would I want to connect it to a Docker container?

DFS (Distributed File System) is a set of Windows Server role services, DFS Namespaces and DFS Replication, that links shared folders on multiple servers into a single logical folder structure and can keep copies of those folders in sync. By connecting DFS to a Docker container, you can access shared files and folders hosted on the DFS from within the container. This is useful when applications running in the container need shared resources, or when you want centralized management of files and folders across multiple servers and containers.

Are there any specific requirements or configurations needed to connect DFS to a Docker container on Windows 10?

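Yes, there are a few. The DFS namespace itself is hosted on Windows Server (the DFS Namespaces role service); Windows 10 acts only as a DFS client, so first confirm that you can open the namespace path from the Windows 10 machine. The container must then be able to resolve and reach the namespace and file servers over the network, and it needs credentials that are allowed on the share. Creating a Docker network with the `docker network create` command and starting the container with the `--network=<name>` parameter is optional: a user-defined network helps when containers need to communicate with each other, but containers on the default network can already make outbound connections to file servers.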

Is it possible to connect DFS to multiple Docker containers on Windows 10?

Yes, it is possible to connect DFS to multiple Docker containers on Windows 10. One way is to create a Docker network and run multiple containers within it, although a shared network is not required for file access. Each container connects to the DFS independently, using the namespace or server hostname (or IP address), so each one needs network access to the DFS servers and, typically, its own volume mapping for the share. This allows multiple containers to access and share files from the DFS simultaneously.
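The multi-container setup can be scripted by generating one `docker network create` call followed by one `docker run` per container. The sketch below only builds the argument lists; the function name is hypothetical, and the optional `mount` value (such as `dfs-data:/data`) assumes a named volume that already points at the DFS share.

```python
from typing import List, Optional


def shared_network_commands(network: str, image: str, names: List[str],
                            mount: Optional[str] = None) -> List[List[str]]:
    """Build docker CLI calls: one user-defined network, then one detached container per name."""
    commands = [["docker", "network", "create", network]]
    for name in names:
        cmd = ["docker", "run", "-d", "--name", name, "--network", network]
        if mount is not None:
            # The same "volume:path" spec in every container gives all of them the same files.
            cmd += ["-v", mount]
        cmd.append(image)
        commands.append(cmd)
    return commands
```

For example, `shared_network_commands("dfs-net", "my-app:latest", ["worker1", "worker2"], mount="dfs-data:/data")` (all names hypothetical) yields three commands that can each be run with `subprocess.run(cmd, check=True)`.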

Can I connect DFS to a Docker container on Windows 10 using a specific port?

You do not need to configure a port in Docker. DFS has no dedicated port of its own; clients reach namespaces and folder targets over standard SMB file sharing, which uses TCP port 445 (TCP port 139 is only used by the legacy NetBIOS session service). Publishing ports with `-p` is for inbound connections to a container, whereas the container's connection to the file server is outbound, so no port mapping is involved. Simply ensure that the DFS servers are reachable from the container and that any firewalls between them allow SMB traffic.
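To confirm that SMB traffic gets through, you can test a TCP connection to port 445 on each namespace or file server from inside the container (for example via `docker exec`, if the image includes Python). This minimal sketch only checks that the port accepts connections; it does not test authentication or DFS referrals, and the function name is hypothetical.

```python
import socket

SMB_PORT = 445  # SMB over TCP; port 139 is only used by legacy NetBIOS sessions


def smb_reachable(host: str, port: int = SMB_PORT, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False
```

A `False` result for a server that Windows itself can reach usually points at name resolution or firewall rules on the container's network path.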

Can I connect a DFS to a Docker container on Windows 10 without mapping volumes?

Usually not: mapping a volume is the standard approach, although not strictly the only one. In Docker, volumes are used to persist data and provide a way to share files between the host machine and the container. By mapping a volume, you connect a folder on the host (or a network share) to a directory inside the container, so the container can access and manipulate files stored outside its own file system. To use a DFS share this way, you map the share to a directory within the container using the `-v` (or `--mount`) option of `docker run`; with Docker Desktop's default Linux containers, a named volume backed by the local driver's CIFS support is one way to do this. The alternative is for the application inside the container to access the share directly through an SMB client library, which avoids volume mapping but requires SMB support and credentials inside the container.
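With Linux containers, Docker documents CIFS-backed volumes through the `local` driver, created with `docker volume create --driver local --opt type=cifs ...`. The Python sketch below only builds that command and a matching `docker run`. It assumes credentials passed as mount options and uses hypothetical helper names. Whether the CIFS client inside Docker Desktop's VM follows DFS referrals is not guaranteed, so pointing the device at a folder target's share directly is the safer starting point.

```python
from typing import Dict, List


def cifs_volume_create_cmd(name: str, unc_path: str, mount_options: Dict[str, str]) -> List[str]:
    """Build `docker volume create` for a CIFS-backed volume using the local driver.

    `unc_path` may use Windows backslashes; the CIFS mount expects forward
    slashes (//server/share), so they are converted.
    """
    device = unc_path.replace("\\", "/")
    options = ",".join(f"{key}={value}" for key, value in mount_options.items())
    return [
        "docker", "volume", "create",
        "--driver", "local",
        "--opt", "type=cifs",
        "--opt", f"device={device}",
        "--opt", f"o={options}",
        name,
    ]


def run_with_volume_cmd(image: str, volume: str, container_path: str) -> List[str]:
    """Build `docker run` that mounts the named volume at `container_path`."""
    return ["docker", "run", "--rm", "-v", f"{volume}:{container_path}", image]
```

Run each list with `subprocess.run(cmd, check=True)`. Docker's CIFS example also passes `addr`, which its documentation describes as required when the device uses a hostname instead of an IP address. Options given this way, including any password, are stored with the volume and can appear in `docker volume inspect`, so a dedicated low-privilege account is advisable.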