In the fast-paced world of software development, the importance of managing package installations efficiently cannot be overstated. As developers, we are constantly seeking ways to create reproducible and isolated environments to ensure the stability and security of our applications. One popular solution is the utilization of containerization technologies, such as Docker, which allow us to encapsulate applications and their dependencies in lightweight, portable packages.

However, when dealing with packages installed via pip, the default package manager for Python, it becomes crucial to exercise careful control and monitoring. In certain scenarios, there may arise a need to restrict or regulate the installation of certain packages - whether it's to prevent security vulnerabilities, enforce a predefined software stack, or maintain consistency across development and production environments.

To address this need, several strategies can be used to block the installation of specific packages within a Docker container running on Windows. Applied together, they work around pip's default willingness to install anything it is asked for and give us a controlled environment for package management.

In this article, we look at blocking package installations within a Docker container on Windows. We will explore several practical methods that help developers take control of their package management process and improve the reliability and integrity of their applications.

Why Should You Restrict pip within a Docker Container Running on Windows?

Within a confined software deployment environment orchestrated by containerization technology, addressing the potential risks and ensuring the optimal performance of the system is crucial. One of the best practices involves restricting the utilization of the popular Python Package Installer, commonly known as pip, within a Docker container deployed on a Windows Server.

The Need for Controlled Package Installation: In a containerized environment, where various software components run isolated from the underlying host system, it is essential to carefully manage the installation and update processes of packages and dependencies. By controlling the usage of pip, administrators gain greater control over the package versions, dependencies, and potential security vulnerabilities introduced into the containerized environment.

Enhancing Security and Stability: Limiting the access to pip helps reduce the attack surface and potential security breaches within the containerized system. Furthermore, by keeping a restricted set of permitted packages, the stability and predictability of the application in the containerized environment can be vastly improved. This restriction prevents unknown or potentially disruptive package installations that may cause conflicts or unintended consequences.

Optimizing Resource Utilization: With controlled pip access, system administrators can better manage the utilization of system resources within the containerized environment. By limiting the installation of unnecessary or redundant packages, the overall resource consumption can be optimized, resulting in improved performance and efficiency of the containerized application.

Standardization and Reproducibility: By restricting pip usage, system administrators can enforce a standardized and reproducible deployment process within the containerized environment. By having control over the exact set of packages and dependencies used, it becomes easier to reproduce the environment across different deployments, ensuring consistent behavior and reducing the chances of unexpected errors or inconsistencies.

Conclusion: Restricting the usage of pip within a Docker container on a Windows Server provides improved security, stability, resource utilization, and deployment standardization. By carefully managing the installation and update processes of packages, system administrators can maintain greater control and mitigate potential risks within the containerized environment.
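One way to make the controlled installation described above concrete is to check a requirements list against an approved set before anything is installed. The following is a minimal sketch under stated assumptions, not a definitive implementation: `ALLOWED_PACKAGES` and the function names are hypothetical, and the parser handles only simple requirement lines (no URLs or editable installs).

```python
import re
from typing import Iterable, List, Optional

# Hypothetical allowlist; replace with the packages your team has approved.
ALLOWED_PACKAGES = {"requests", "flask", "numpy"}


def normalize(name: str) -> str:
    """Lowercase a project name and collapse runs of '-', '_' and '.' into '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def requirement_name(line: str) -> Optional[str]:
    """Extract the project name from a simple requirements line, or None."""
    line = line.split("#", 1)[0].strip()  # drop comments
    if not line or line.startswith("-"):  # skip blanks and options such as -r
        return None
    match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
    return normalize(match.group(0)) if match else None


def find_disallowed(lines: Iterable[str], allowed=ALLOWED_PACKAGES) -> List[str]:
    """Return the sorted names in `lines` that are not on the allowlist."""
    allowed_norm = {normalize(item) for item in allowed}
    names = (requirement_name(line) for line in lines)
    return sorted({name for name in names if name and name not in allowed_norm})
```

A build step could call `find_disallowed` on the lines of a requirements file and fail the image build when the result is non-empty.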

Security Concerns

When it comes to the implementation of software solutions, it is paramount to address any potential security concerns that may arise throughout the process. In the context of blocking certain packages in a containerized environment, there are several security considerations that need to be taken into account.

One of the primary concerns is the risk of unauthorized access and potential breaches by malicious actors. By meticulously managing and controlling the packages that are allowed in the Docker container, organizations can minimize the potential attack surface and reduce the likelihood of unauthorized access to critical systems and data.

Furthermore, maintaining strict control over the software packages installed within a container helps prevent the introduction of vulnerable or outdated components. In doing so, organizations can mitigate the risk of known security vulnerabilities that could be exploited by attackers.

Another security concern is the potential for package tampering, wherein an attacker may attempt to modify or inject malicious code into the packages used by the container. By implementing measures to verify the integrity and authenticity of packages, organizations can ensure that the software used within the container has not been tampered with, reducing the risk of compromise.
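As an illustration of the integrity check described above, the sketch below compares the SHA-256 digest of a downloaded package file with a digest you already trust. The function names are hypothetical, and where the trusted digest comes from is an assumption left to the reader. pip itself offers a hash-checking mode through its `--require-hashes` option, which may be preferable when it fits your workflow.

```python
import hashlib
import hmac


def sha256_of(path, chunk_size=65536):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path, expected_sha256):
    """Return True if the file's digest matches the expected hex digest."""
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(sha256_of(path), expected_sha256.lower())
```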

Additionally, it is crucial to consider the security implications of utilizing third-party repositories or sources for package installation. While community-driven repositories can provide a vast array of software options, it is essential to carefully evaluate their trustworthiness and security practices to avoid potential risks associated with downloading packages from untrusted sources.

In conclusion, by proactively addressing security concerns and implementing robust measures to control and verify the packages used within a Docker container, organizations can enhance the overall security posture of their software solutions and reduce the potential risks associated with unauthorized access, vulnerabilities, package tampering, and untrusted sources.

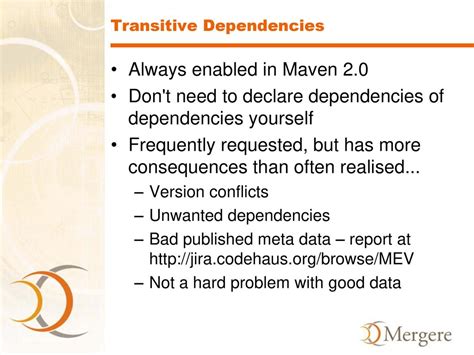

Avoiding Unwanted Dependencies

In the context of managing software packages within a containerized environment, it is crucial to minimize the presence of unnecessary dependencies. This section focuses on strategies to avoid the inclusion of unwanted packages or libraries, ultimately improving overall system efficiency and reducing potential conflicts.

- Practicing Selective Package Installation: When installing or updating packages, it is important to carefully evaluate their necessity. Instead of blindly installing all available packages, selective installation helps to limit the introduction of unnecessary dependencies. This approach ensures that only essential libraries are included, reducing the overall size and complexity of the container.

- Utilizing Lightweight Alternatives: In some cases, it is possible to replace heavy and resource-intensive libraries with lighter alternatives. By exploring different options and considering trade-offs between functionalities, developers can choose lightweight solutions that meet their application's requirements. This can significantly reduce the number of dependencies and improve overall performance.

- Regular Dependency Audits: Performing regular audits of the software's dependencies helps to identify and remove any unnecessary or outdated packages. By periodically reviewing the installed libraries and their associated versions, developers can ensure that the container remains lean and free from redundant dependencies.

- Using Static Linking: In situations where it is not possible to avoid certain dependencies, an alternative approach is to statically link the required libraries. This method enables the inclusion of necessary components directly into the application, eliminating the need for separate dynamic libraries at runtime. While this approach may increase the size of the container, it can provide more control over the dependencies and reduce the risk of versioning conflicts.

- Regular Updates and Patching: Keeping all installed packages and libraries up to date is essential for avoiding unwanted dependencies. Regularly checking for updates and applying patches ensures that the software remains secure and benefits from the latest bug fixes and optimizations. This proactive maintenance approach contributes to the overall stability and efficiency of the containerized environment.
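A dependency audit of the kind described above can be sketched with the standard library's `importlib.metadata`, which lists the distributions installed in the current interpreter. This is only an illustration: the function names and the idea of an "expected" list are assumptions, and a real audit would also look at versions and known vulnerabilities.

```python
import re
from importlib import metadata


def normalize(name):
    """Lowercase a project name and collapse runs of '-', '_' and '.' into '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def installed_packages():
    """Return {normalized name: version} for every installed distribution."""
    result = {}
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:  # skip distributions with broken metadata
            result[normalize(name)] = dist.version
    return result


def unexpected_packages(installed, expected):
    """Return the sorted installed names that are not in the expected list."""
    return sorted(set(installed) - {normalize(name) for name in expected})
```

Running `unexpected_packages(installed_packages(), expected)` inside the container gives a list of packages to review or remove.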

By following these suggested strategies, developers can effectively avoid the inclusion of unnecessary dependencies within their containerized applications. This optimization contributes to a more streamlined and manageable software environment, ultimately improving performance, reducing conflicts, and enhancing overall system efficiency.

Managing package versions

Controlling the versions of software packages installed in a development environment is crucial for ensuring consistency and stability of the system. In this section, we will explore different strategies and best practices for managing package versions, ensuring that the dependencies of your project are properly maintained.

- Dependency management: Understanding the relationship between different packages and their versions is essential for successful project development. By carefully managing package versions, developers can control how their code interacts with specific functionalities and avoid potential compatibility issues.

- Version pinning: Pinning specific package versions helps mitigate the risk of unexpected updates. By explicitly specifying the exact version of each package, developers can ensure consistent behavior across different development and deployment environments.

- Package managers: Utilizing package managers, such as pip, npm, or yarn, can greatly simplify the process of managing package versions. These tools provide mechanisms for installing, updating, and removing software packages, making it easier to keep track of dependencies and ensure compatibility.

- Virtual environments: Creating isolated environments for development and testing purposes can help avoid conflicts between different package versions. Virtual environments provide a clean slate for installing packages, enabling developers to precisely control the versions used within each project.

- Release management: Implementing a robust release management process ensures that package versions are properly tested and validated before deployment. By following a well-defined release pipeline, development teams can minimize the risk of using incompatible or unreliable software versions in production.
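To illustrate version pinning from the list above, the following sketch reports requirement lines that do not pin an exact version with `==`. It is a simplified check under stated assumptions: the `PINNED` pattern and function name are hypothetical, only plain `name==version` lines (optionally with extras or an environment marker) count as pinned, and lines carrying extra options such as `--hash` would need additional handling.

```python
import re

# name, optional [extras], '==', a version without wildcards, optional marker
PINNED = re.compile(
    r"^[A-Za-z0-9][A-Za-z0-9._-]*(\[[^\]]+\])?\s*==\s*[^\s;*]+(\s*;.*)?$"
)


def unpinned_requirements(lines):
    """Return the requirement lines that are not pinned to an exact version."""
    unpinned = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments
        if not line or line.startswith("-"):  # skip blanks and options
            continue
        if not PINNED.match(line):
            unpinned.append(line)
    return unpinned
```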

In conclusion, managing package versions is a critical aspect of software development. By employing effective techniques, such as dependency management, version pinning, package managers, virtual environments, and release management, developers can ensure the stability and maintainability of their projects.

Optimizing Performance

In the pursuit of maximizing efficiency and speed, it is crucial to optimize the performance of your software ecosystem. This section delves into various techniques and strategies that can be employed to enhance the overall performance, ensuring smooth and seamless execution of your application.

One key aspect to consider when aiming for optimal performance is the utilization of efficient resource management techniques. By implementing intelligent resource allocation and monitoring mechanisms, you can effectively allocate system resources and prevent bottlenecks that may hinder the performance of your application. Additionally, employing advanced caching mechanisms can further enhance the speed and responsiveness of your software, allowing for faster data retrieval and processing.

In order to streamline and expedite the execution of your code, it is crucial to adopt techniques such as code optimization and algorithmic improvements. Analyzing and refactoring your codebase can help eliminate redundant operations and reduce complexity, ultimately leading to faster execution times. Moreover, identifying and implementing efficient algorithms can greatly contribute to enhancing the overall performance of your application.

| Technique | Description |
|---|---|
| Parallelization | Utilize multi-threading and distributed computing to execute tasks concurrently, reducing overall processing time. |
| Compression | Implement data compression algorithms to reduce file sizes and optimize storage and transfer efficiency. |
| Database Optimization | Tune database configurations, improve indexing strategies, and optimize query execution to enhance data retrieval performance. |
| Memory Management | Utilize efficient memory allocation techniques, such as object pooling and garbage collection optimization, to minimize memory usage and improve overall system performance. |

In summary, enhancing the performance of your software ecosystem involves various strategies, from efficient resource management to code optimization and algorithmic improvements. By carefully considering and implementing these techniques, you can achieve optimal performance, delivering a seamless and responsive user experience.

Creating Secure and Independent Environments

In the context of managing software dependencies and ensuring security, there is a growing need to isolate environments effectively. By utilizing isolation techniques, developers can create independent environments that are shielded from external influences and potential vulnerabilities. This section discusses the significance of isolating environments and explores various strategies to achieve this goal, emphasizing the importance of maintaining security and stability in software development processes.

Benefits of Environment Isolation

Environment isolation is crucial for several reasons. Firstly, it allows developers to minimize conflicts between different software components and versions, ensuring that each environment can function independently without impeding others. Additionally, isolation helps to mitigate the risk of security breaches by preventing unauthorized access to sensitive data or unauthorized modifications to critical components.

Strategies for Environment Isolation

Virtualization: One common approach to environment isolation is virtualization, which enables the creation of virtual machines (VMs) or containers. Each VM provides a complete software environment with its own operating system, while containers offer a lightweight and more portable alternative.

Sandboxing: Another technique for isolating environments is sandboxing, which limits the access and interaction of software components with the underlying system and prevents potential conflicts or security breaches.

Containerization: Containerization technologies, such as Docker, offer a means to encapsulate an application and its dependencies into a single executable unit, providing a self-contained and portable environment that can be easily replicated across different systems.
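At the Python level, a lighter form of the isolation described above is a virtual environment created without pip. The sketch below uses the standard library's `venv.create` with `with_pip=False`; the function name `create_pipless_env` is hypothetical. This complements the container-level strategies rather than replacing them, since a user with sufficient rights could still bootstrap pip into the environment later.

```python
import venv
from pathlib import Path


def create_pipless_env(target):
    """Create a virtual environment at `target` without installing pip into it."""
    env_dir = Path(target)
    # with_pip=False skips bootstrapping pip inside the new environment.
    venv.create(env_dir, with_pip=False)
    return env_dir
```

Because the environment does not see the system site-packages by default, its interpreter has no pip module to run unless one is added afterwards.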

Conclusion

Isolating environments plays a critical role in ensuring the stability, security, and efficient management of software dependencies. By implementing strategies such as virtualization, sandboxing, and containerization, developers can create secure and independent environments that promote reliable software development processes and minimize the risks associated with conflicts and unauthorized access.

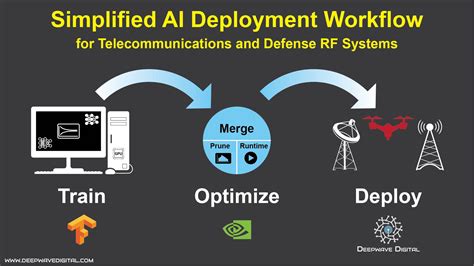

Simplifying the Deployment Process

Streamlining the process of deploying applications is crucial for ensuring efficiency and effectiveness. By implementing simplified deployment practices, developers can reduce complexity, eliminate potential obstacles, and enhance overall workflow.

Enhanced Efficiency: Simplifying the deployment process allows developers to save time and effort by minimizing unnecessary steps and streamlining the overall workflow. By eliminating redundant tasks and automating certain processes, valuable resources can be allocated towards more critical aspects of development.

Increased Productivity: With a simplified deployment process, developers can focus more on refining and enhancing the application itself rather than getting caught up in the intricacies of deployment. This allows for a more productive work environment, promoting innovation and faster development cycles.

Reduced Complexity: A simplified deployment process reduces the complexity associated with managing various dependencies and configurations. By utilizing standardized approaches and tools, developers can minimize compatibility issues and ensure consistent deployment across different environments.

Improved Stability: Simplifying the deployment process mitigates the risk of errors and instability that can arise from manual interventions. By automating repetitive tasks and implementing robust testing and verification mechanisms, developers can ensure the stability of the deployed application.

Eliminating Bottlenecks: The simplified deployment process eliminates potential bottlenecks that can hinder the progress of development. By removing unnecessary steps and optimizing the overall workflow, developers can deliver applications more efficiently, meet project deadlines, and improve customer satisfaction.

Facilitating Collaboration: A simplified deployment process fosters better collaboration among team members by providing a standardized and easily replicable framework. This allows developers to work seamlessly together, share knowledge, and resolve issues more effectively, resulting in a more cohesive and productive development environment.

In summary, simplifying the deployment process offers numerous benefits such as enhanced efficiency, increased productivity, reduced complexity, improved stability, bottleneck elimination, and facilitated collaboration. By adopting streamlined approaches, developers can optimize their deployment practices and achieve better outcomes in their applications' lifecycle.

FAQ

Is it possible to block pip from running inside a Docker container on Windows Server?

Yes, it is possible to block pip from running inside a Docker container on Windows Server. The general approach is to create a Dockerfile for the image and use a PowerShell script to block the execution of pip commands.
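The exact Dockerfile and PowerShell script are not shown here. As one related, partial measure, the sketch below generates a pip configuration file that sets `no-index`, so pip does not consult a package index. The function names are hypothetical and where the file is placed is an assumption; on Windows the file is conventionally named `pip.ini`, and pip also honors the `PIP_CONFIG_FILE` environment variable. This does not stop pip from running or from installing local files, so it is a restriction rather than a complete block.

```python
import configparser
import io


def render_pip_config():
    """Return the text of a pip config file that disables index lookups."""
    parser = configparser.ConfigParser()
    # Equivalent to passing --no-index on every pip invocation.
    parser["global"] = {"no-index": "true"}
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


def write_pip_config(path):
    """Write the generated configuration to `path` (for example, a pip.ini)."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(render_pip_config())
```

A build step could call `write_pip_config` with a path of your choosing and set `PIP_CONFIG_FILE` in the image to point at it.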

Why would someone want to block pip inside a Docker container on Windows Server?

There could be various reasons why someone would want to block pip inside a Docker container on Windows Server. One of the main reasons is to ensure that only approved packages are installed in the container, minimizing security risks and maintaining control over the software environment.

What are the potential security risks of allowing pip inside a Docker container on Windows Server?

Allowing pip inside a Docker container on Windows Server can pose several security risks. First, it can lead to the installation of unauthorized or malicious packages that compromise the security of the container. Second, if the container has access to the host system, pip can be used to install malicious software or modify critical files on the host. Blocking pip helps mitigate these risks and keeps the environment more controlled and secure.