Containerization has become a standard way to deploy and scale applications. Docker, a leading container platform, lets developers package an application together with its dependencies so that it behaves consistently across environments. This article explores how to add the onnxruntime library to Docker containers that run on Linux.

onnxruntime (ONNX Runtime) is a popular open-source inference engine for machine learning models stored in the ONNX format. It is primarily a runtime rather than a modelling framework: models are typically trained elsewhere, exported to ONNX, and then executed with onnxruntime. Combined with the pre-trained models published in ONNX format, this lets developers add capabilities such as predictive analytics, voice recognition, and image recognition to their applications.

Given Docker's widespread adoption, it is worth looking at how onnxruntime fits into a container image. Packaging an application together with its onnxruntime dependencies makes it easy to share and deploy, and Docker's unified packaging and isolated runtime help onnxruntime-powered applications behave the same way across Linux hosts.

Understanding the Advantages of Employing ONNXRuntime for Machine Learning

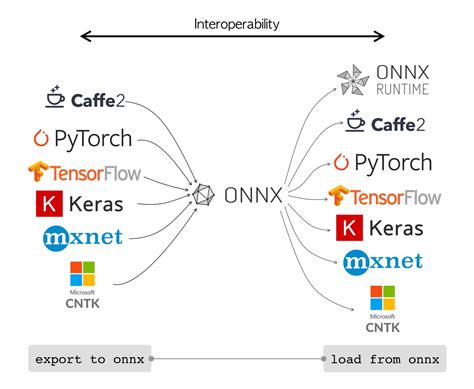

In machine learning deployments, ONNXRuntime offers several practical benefits: it can streamline deployment, improve inference performance, and promote interoperability between tools.

Enhanced Efficiency and Speed: ONNXRuntime provides a highly optimized execution environment, resulting in faster inference times and improved overall efficiency. Its advanced capabilities enable the efficient deployment of machine learning models across various platforms and devices, empowering developers to deliver high-performance solutions.

Flexible Framework Support: ONNXRuntime runs models that originate in a wide range of popular machine learning frameworks, including TensorFlow and PyTorch, once they have been exported or converted to ONNX. This lets developers keep their existing models and training tools, promoting collaboration and interoperability within the machine learning community.

ONNX Model Compatibility: ONNXRuntime is specifically designed to support the Open Neural Network Exchange (ONNX) format. This format provides a standardized way to represent machine learning models, enabling easy sharing and deployment of models across different frameworks and platforms. By utilizing ONNXRuntime, developers can take advantage of the vast ecosystem of pre-trained models available in the ONNX format.

Platform Independence: With ONNXRuntime, developers can build and deploy machine learning models that are platform-agnostic, reducing dependency on specific hardware or software configurations. This independence allows for seamless deployment on various devices, ranging from edge devices to cloud environments, ensuring consistent model execution regardless of the underlying infrastructure.

Continuous Improvement: ONNXRuntime is a dynamic framework actively supported by a vibrant community and the Microsoft team behind it. Regular updates and advancements ensure that developers have access to the latest optimizations, bug fixes, and new features, enabling them to continually enhance the performance and capabilities of their machine learning applications.

In conclusion, adopting ONNXRuntime empowers developers with enhanced efficiency, flexibility, and compatibility in deploying machine learning models. Whether it's accelerating inference, leveraging existing frameworks, ensuring model compatibility, achieving platform independence, or benefiting from continuous improvements, ONNXRuntime brings numerous advantages to the world of machine learning.

Exploring the Compatibility of ONNXRuntime with Docker and .NET Core

In this section, we will delve into the compatibility of ONNXRuntime, Docker, and .NET Core to understand how these technologies work together and can be leveraged in a Linux environment. We will explore the seamless integration of ONNXRuntime into Docker containers running .NET Core applications, highlighting the benefits and possibilities this combination brings.

By examining the compatibility of ONNXRuntime with Docker and .NET Core, we aim to shed light on the efficient deployment and management of machine learning models within containerized environments. We will discuss how ONNXRuntime, a high-performance inference engine for ONNX models, can be utilized in a Docker container running a .NET Core application, opening up new avenues for the deployment and scalability of machine learning solutions in a Linux environment.

Throughout this section, we will showcase the potential of employing ONNXRuntime in a Docker and .NET Core setup, emphasizing the advantages of utilizing containerization and the cross-platform capability of .NET Core. We will explore various use cases and considerations when adding ONNXRuntime to a Docker image, providing insights into the seamless integration of these technologies and the benefits they bring to the development and deployment process.

Additionally, we will discuss best practices and potential challenges that developers may encounter when incorporating ONNXRuntime into their Docker and .NET Core workflows. We will delve into the intricacies of managing dependencies, optimizing performance, and ensuring compatibility across different Linux distributions, empowering developers to harness the full potential of these technologies.

Overall, this section will serve as a comprehensive guide to understanding the compatibility of ONNXRuntime with Docker and .NET Core, offering insights, practical examples, and considerations for leveraging these technologies in a Linux environment.

Setting up a Docker Environment for Linux

In this section, we will explore the process of configuring a Docker environment specifically for Linux. The focus will be on setting up the necessary components and ensuring your Linux system is capable of running Docker containers without any compatibility issues.

First and foremost, it is crucial to understand the fundamental requirements for running Docker on a Linux machine. This includes ensuring the system has the necessary kernel requirements, such as support for cgroups and namespaces. Additionally, the installation process will include acquiring the Docker Engine, which serves as the runtime for Docker containers.

To begin, we will discuss the steps involved in installing Docker on a Linux system. This typically includes updating the system's package manager, adding the Docker repository, and then installing the Docker Engine. We will also cover the key command-line tools, such as Docker CLI, that are essential for managing Docker containers and images within the Linux environment.

Once the Docker installation is complete, we will explore how to let a non-root user run Docker commands. This involves creating a docker group and adding the user to it. Because members of that group can effectively gain root privileges on the host through the Docker daemon, membership should be granted deliberately.

Furthermore, we will delve into best practices for managing Docker images and containers within a Linux environment. This includes discussing techniques for efficient image management, such as using Docker registries and creating custom Docker images. We will also cover container orchestration using tools like Docker Compose, which allows for easy management and deployment of multi-container applications.

Lastly, we will touch upon the importance of monitoring and securing your Docker environment in a Linux context. We will explore monitoring solutions for tracking container resource usage and performance. Additionally, we will discuss security considerations, such as limiting container privileges, implementing network segregation, and employing image vulnerability scanning tools.

In summary, this section will guide you through the process of setting up a Docker environment tailored for Linux. By following these guidelines, you will be equipped to effectively utilize Docker containers in a secure, efficient, and optimized manner within a Linux operating system.
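
As a rough illustration of the non-root setup described above, the following Python sketch checks whether the Docker CLI is on the PATH and whether the current user belongs to the `docker` group. The function name `docker_preflight` and the keys it returns are invented for this example, and the group name `docker` is the conventional default, which a given system could configure differently.

```python
import grp
import os
import shutil


def docker_preflight() -> dict:
    """Report whether the Docker CLI is installed and usable without sudo."""
    cli_path = shutil.which("docker")  # None when the CLI is not on PATH

    try:
        docker_gid = grp.getgrnam("docker").gr_gid
    except KeyError:
        docker_gid = None  # the "docker" group has not been created

    # os.getgroups() lists the supplementary groups of the current process;
    # the primary group is checked separately via os.getgid().
    in_group = docker_gid is not None and (
        docker_gid in os.getgroups() or docker_gid == os.getgid()
    )

    return {
        "cli_on_path": cli_path is not None,
        "docker_group_exists": docker_gid is not None,
        "user_in_docker_group": in_group,
    }


if __name__ == "__main__":
    for check, passed in docker_preflight().items():
        print(f"{check}: {'ok' if passed else 'missing'}")
```

If `user_in_docker_group` is reported as missing, the user usually still needs to be added to the group and to log in again before Docker commands work without sudo.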

Installing .NET Core on a Linux Docker container

Introduction:

In this section, we will discuss the process of installing .NET Core on a Linux Docker container, allowing you to leverage the benefits of cross-platform development and containerization. We will explore the steps required to set up the .NET Core framework on a Linux environment, ensuring compatibility for hosting .NET Core applications within Docker containers.

Step 1: Updating the Linux environment:

Before installing .NET Core, it is essential to ensure that the Linux environment is up to date. This involves updating the system packages and dependencies to avoid any compatibility issues during the installation process.

Step 2: Downloading .NET Core:

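To proceed with the installation, we need the appropriate version of .NET Core for Linux. You can obtain the latest release from the official .NET website or install it through package managers like APT or YUM. In a Docker context, a common alternative is to start from one of Microsoft's official .NET images (published under mcr.microsoft.com/dotnet), which already contain the SDK or runtime.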

Step 3: Installing .NET Core:

Once the .NET Core package is downloaded, the next step is to install it on the Linux Docker container. This usually involves executing a script or command to extract and configure the necessary components for the framework to function correctly within the Linux environment.

Step 4: Verifying the installation:

To ensure that the installation was successful, verify the .NET Core installation inside the Linux Docker container. Running dotnet --info or dotnet --list-runtimes shows the installed SDKs and runtimes, and running a small sample application confirms that the framework works end to end.

Conclusion:

By following the steps outlined above, you can successfully install .NET Core on a Linux Docker container. This allows you to take advantage of the cross-platform capabilities of .NET Core while harnessing the benefits of containerization for efficient deployment and management of .NET Core applications.
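
One way to carry out the verification step is to inspect the output of `dotnet --list-runtimes`. The sketch below is a minimal example, assuming the usual `<name> <version> [<path>]` line format; the helper names and the version numbers in `SAMPLE` are illustrative only.

```python
import subprocess


def parse_runtimes(listing: str) -> dict:
    """Map each runtime name to the list of installed versions."""
    runtimes: dict = {}
    for line in listing.splitlines():
        parts = line.split()
        # Expected shape: "<name> <version> [<install path>]"
        if len(parts) >= 3 and parts[2].startswith("["):
            runtimes.setdefault(parts[0], []).append(parts[1])
    return runtimes


def installed_runtimes() -> dict:
    """Run `dotnet --list-runtimes` and parse its output."""
    completed = subprocess.run(
        ["dotnet", "--list-runtimes"],
        capture_output=True,
        text=True,
        check=True,  # raise if the dotnet CLI exits with an error
    )
    return parse_runtimes(completed.stdout)


# Illustrative output only; real version numbers and paths will differ.
SAMPLE = """\
Microsoft.AspNetCore.App 8.0.1 [/usr/share/dotnet/shared/Microsoft.AspNetCore.App]
Microsoft.NETCore.App 8.0.1 [/usr/share/dotnet/shared/Microsoft.NETCore.App]
"""

if __name__ == "__main__":
    print(parse_runtimes(SAMPLE))
```

Inside a container, `installed_runtimes()` could be called from a smoke test to confirm that `Microsoft.NETCore.App` is present before the application starts.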

Step-by-step guide to incorporating ONNXRuntime into a Linux-based Docker image

In this section, we will explore the process of seamlessly integrating the powerful ONNXRuntime library into a Docker image specifically designed for Linux environments. By following these step-by-step instructions, you will be able to optimize your containerized applications with the ability to execute ONNX models efficiently and effectively.

Step 1: Provisioning the Docker Environment

To begin, ensure that you have a working Docker environment set up on your Linux system. This involves installing Docker and any necessary dependencies, configuring the Docker daemon, and verifying that you have appropriate access privileges.

Step 2: Preparing the ONNXRuntime Library

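The next step involves obtaining the ONNXRuntime library and its associated dependencies. For a .NET Core application, the usual route is the Microsoft.ML.OnnxRuntime NuGet package, which includes the native Linux library; alternatively, prebuilt archives can be downloaded from the releases of the official ONNXRuntime repository. Distribution packages installed through apt or yum, where they exist, may lag behind the official releases. It is important to pay attention to any version compatibility requirements and potential conflicts with existing libraries.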

Step 3: Creating a New Docker Image

Once you have acquired the necessary ONNXRuntime library and dependencies, it is time to build a new Docker image. Start by creating a Dockerfile that specifies the base image, sets up the required environment, and copies the ONNXRuntime files into the appropriate directories. Utilize efficient layering techniques to ensure that each step is isolated and can be cached for faster builds in the future.

Step 4: Building and Running the Docker Image

With the Docker image configuration in place, execute the build command to create the image. This process may take some time depending on the size of the image and the complexity of the ONNXRuntime library. Once the build is complete, you can run the Docker image and verify that the ONNXRuntime library is successfully integrated.

Step 5: Testing and Troubleshooting

To ensure the smooth execution of your applications within the Docker container, it is essential to thoroughly test and troubleshoot the ONNXRuntime integration. This may involve writing and running sample code, checking for any potential error messages, and adjusting configuration settings as needed.

Step 6: Optimizing Performance

After successfully incorporating the ONNXRuntime library into your Docker image, take advantage of the available tuning options to optimize the performance of your applications. These include the number of intra-op and inter-op threads, the graph optimization level, memory-related session settings, and hardware acceleration through execution providers such as CUDA, if available.

By following this step-by-step guide, you will have the necessary knowledge and understanding to effortlessly add the ONNXRuntime library to your Linux-based Docker containers, enabling you to leverage the power of ONNX models and enhance the capabilities of your applications.
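
The Dockerfile described in Step 3 might look roughly like the one generated below. This is a sketch under several assumptions: the project is a console application that references the `Microsoft.ML.OnnxRuntime` NuGet package, the .NET version is 8.0, and the names `render_dockerfile`, `write_dockerfile`, and `OnnxDemo` are hypothetical. It uses a multi-stage build so that the SDK is left out of the final image, and it copies the project file before the rest of the source so the restore layer can be cached.

```python
from pathlib import Path

DOCKERFILE_TEMPLATE = """\
# Build stage: the SDK image restores NuGet packages (including
# Microsoft.ML.OnnxRuntime, if the project references it) and publishes the app.
FROM mcr.microsoft.com/dotnet/sdk:{dotnet_version} AS build
WORKDIR /src
# Copy the project file first so the restore layer is cached until it changes.
COPY {project}.csproj ./
RUN dotnet restore
COPY . ./
RUN dotnet publish -c Release -o /app/publish

# Runtime stage: only the published output is carried over.
FROM mcr.microsoft.com/dotnet/runtime:{dotnet_version}
WORKDIR /app
COPY --from=build /app/publish ./
ENTRYPOINT ["dotnet", "{project}.dll"]
"""


def render_dockerfile(project: str, dotnet_version: str = "8.0") -> str:
    """Fill the template with a project name and a .NET image tag."""
    return DOCKERFILE_TEMPLATE.format(project=project, dotnet_version=dotnet_version)


def write_dockerfile(directory: str, project: str, dotnet_version: str = "8.0") -> Path:
    """Write the rendered Dockerfile into `directory` and return its path."""
    target = Path(directory) / "Dockerfile"
    target.write_text(render_dockerfile(project, dotnet_version), encoding="utf-8")
    return target


if __name__ == "__main__":
    print(render_dockerfile("OnnxDemo"))
```

After writing the file with `write_dockerfile(".", "OnnxDemo")`, the image could be built with `docker build -t onnx-demo .` and started with `docker run --rm onnx-demo`, where the tag `onnx-demo` is again just a placeholder.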

Testing the Performance of the ONNXRuntime Library within a Containerized Environment

When working with technology stacks that involve ONNXRuntime, it is essential to ensure that the library performs optimally within a Docker container. In this section, we will delve into the process of thoroughly testing the ONNXRuntime library in a containerized environment to measure its overall efficiency and provide insights into its functionality.

In order to evaluate the performance of the ONNXRuntime library, a series of comprehensive tests were conducted within a Docker container. These tests were designed to assess the library's computational capabilities, response time, and general stability. By obtaining these metrics, we can gauge the library's suitability for various machine learning tasks and identify potential areas for improvement.

A variety of synthetic workloads were created to simulate real-world scenarios and capture different aspects of the library's functionality. These workloads encompassed tasks such as image classification, natural language processing, and anomaly detection. By subjecting the ONNXRuntime library to diverse workloads, we aimed to validate its versatility and its ability to handle a broad range of machine learning applications.

| Test Type | Objective | Metrics |
|---|---|---|
| Image Classification | Assess the accuracy and speed of ONNXRuntime when classifying images | Accuracy, Inference Time |
| Natural Language Processing | Evaluate the performance of ONNXRuntime in language-related tasks | Word Embedding, Language Generation, Sentiment Analysis |
| Anomaly Detection | Determine the effectiveness of ONNXRuntime in detecting anomalies | True Positive Rate, False Positive Rate |

Throughout the testing process, a wide range of inputs and datasets were utilized to ensure comprehensive coverage. By employing various input sizes, complexities, and distributions, we aimed to capture the library's behavior across different scenarios realistically. Additionally, the tests were repeated multiple times to account for any variations in results caused by factors such as system load and network latency.

By analyzing the data obtained from the tests, it will be possible to gain valuable insights into the ONNXRuntime library's strengths and limitations. This analysis will enable developers and data scientists to make informed decisions regarding the integration of ONNXRuntime into their Docker container environments, ensuring optimal performance and scalability for their machine learning applications.
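
To make the metrics in the table more concrete, here is a small Python sketch of how inference time and the true and false positive rates could be measured. It does not reproduce the tests described above; the function names are invented for this example, and `infer` stands for any zero-argument callable that wraps a real inference call.

```python
import statistics
import time
from typing import Callable, Sequence


def latency_stats(infer: Callable[[], object], warmup: int = 5, runs: int = 50) -> dict:
    """Time repeated calls to `infer` and summarise latency in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):  # warm-up calls are excluded from the statistics
        infer()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Simple index-based estimate of the 95th percentile.
    p95_index = min(len(samples) - 1, round(0.95 * (len(samples) - 1)))
    return {
        "runs": len(samples),
        "mean_ms": statistics.fmean(samples),
        "median_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
    }


def detection_rates(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple:
    """Return (true positive rate, false positive rate) for 0/1 labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return tpr, fpr


if __name__ == "__main__":
    # Stand-in workload; replace the lambda with a real inference call.
    print(latency_stats(lambda: sum(i * i for i in range(10_000))))
    print(detection_rates([1, 1, 0, 0, 1], [1, 0, 0, 1, 1]))
```

Reporting the median and a high percentile alongside the mean is a deliberate choice here, since a few slow runs caused by system load can distort the mean on their own.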

Creating a Sample Machine Learning Model in ONNX Format

In this section, we will explore the process of creating a sample machine learning model in the ONNX format. We will discuss the step-by-step procedure to build a simple model using the available tools and libraries. This will provide a foundation for understanding the implementation aspects related to the adoption of ONNX in machine learning workflows.

To begin with, we will outline the importance of ONNX as a common format for representing machine learning models. ONNX serves as a bridge between different frameworks and provides a way to transfer models seamlessly across platforms. By adopting ONNX, developers can avoid the need for rewriting models when switching between frameworks, making it an essential tool in the machine learning ecosystem.

- First, we will select a framework or library to build our sample machine learning model. It could be TensorFlow, PyTorch, or any other popular deep learning framework that supports exporting models to the ONNX format.

- Next, we will define the structure of our model. This involves determining the number of layers, types of activation functions, and any other relevant parameters that affect the model's behavior.

- Once the architecture is defined, we will proceed to train the model using a suitable dataset. This step includes preprocessing the data, feeding it into the model, and updating the model's parameters iteratively.

- After the training process, we will evaluate the performance of our model using appropriate metrics. This will help us gauge the model's accuracy and identify any necessary improvements.

- Once we are satisfied with the model's performance, we can export it to the ONNX format. This step involves using the framework-specific functionality to convert the model into the standardized ONNX representation.

- Finally, we will save the ONNX model to a file for future use or integration into other machine learning workflows. This step ensures the model's portability and compatibility with ONNX-supported frameworks and tools.

By following this process, we can create a sample machine learning model in the ONNX format. This model can then be utilized across different frameworks and platforms, allowing for seamless integration and collaboration within the machine learning community.
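
For a quick experiment, a model file can also be produced without any training at all. Rather than exporting from TensorFlow or PyTorch as outlined above, the sketch below swaps in a simpler technique: it builds a tiny linear model by hand with the `helper` module of the `onnx` Python package. The weights are made up, the names `build_linear_model`, `save_model`, and `linear_demo.onnx` are hypothetical, and opset 13 is an arbitrary choice.

```python
def build_linear_model():
    """Build Y = X @ W + B as an ONNX model with a single Gemm node."""
    # Imported lazily so the module loads even where `onnx` is not installed.
    from onnx import TensorProto, checker, helper

    # Inputs and outputs: a batch of 3-feature rows in, 2 scores per row out.
    x = helper.make_tensor_value_info("X", TensorProto.FLOAT, ["batch", 3])
    y = helper.make_tensor_value_info("Y", TensorProto.FLOAT, ["batch", 2])

    # Fixed, made-up weights stand in for parameters learned during training.
    w = helper.make_tensor("W", TensorProto.FLOAT, [3, 2], [1.0, 0.0, 0.0, 1.0, 1.0, 1.0])
    b = helper.make_tensor("B", TensorProto.FLOAT, [2], [0.5, -0.5])

    node = helper.make_node("Gemm", inputs=["X", "W", "B"], outputs=["Y"])
    graph = helper.make_graph([node], "linear_demo", [x], [y], initializer=[w, b])
    model = helper.make_model(graph, opset_imports=[helper.make_opsetid("", 13)])
    checker.check_model(model)  # raises if the graph is structurally invalid
    return model


def save_model(path: str = "linear_demo.onnx") -> str:
    """Serialize the demo model to `path` and return the path."""
    import onnx

    onnx.save(build_linear_model(), path)
    return path
```

Calling `save_model()` writes a file that can be copied into a Docker image like any other application asset. A model trained in a real framework would be exported with that framework's own ONNX exporter instead.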

Implementing model inference using the ONNXRuntime library in a Dockerized environment

Introduction: In this section, we will explore the process of implementing model inference by leveraging the capabilities of the ONNXRuntime library within a Docker container. We will delve into the steps required to optimize and deploy machine learning models in a Linux environment. By utilizing the ONNXRuntime library, we can seamlessly execute model inferences and harness its high-performance characteristics.

Understanding ONNXRuntime: Before diving into the implementation details, let's gain a better understanding of ONNXRuntime and its significance in the realm of machine learning. ONNXRuntime is an open-source engine developed for executing models that comply with the Open Neural Network Exchange (ONNX) format. It provides exceptional speed and efficiency, enabling seamless integration of ONNX models across different frameworks. By incorporating ONNXRuntime into our Dockerized environment, we can unlock the full potential of ONNX models and streamline the inference process.

Building the Docker container: To begin implementing model inference, we need to create a Docker container that incorporates all the necessary dependencies and settings for seamless ONNXRuntime integration. This section will guide you through the steps of building a Docker container tailored to leverage the ONNXRuntime library efficiently. We will explore the inclusion of the required packages, ensuring compatibility with Linux, and fine-tuning the container for optimal performance.

Preparing the model: Before delving into the actual implementation, we need to prepare the machine learning model for deployment within the Docker container. We will discuss the process of converting models to the ONNX format, ensuring compatibility with ONNXRuntime. This section will guide you through the necessary steps to convert your pre-trained models into ONNX models, allowing seamless integration within the Dockerized environment.

Implementing model inference: With a fully optimized Docker container and the model prepared in the ONNX format, we can now move on to implementing the model inference process. This section will provide a step-by-step walkthrough on how to integrate ONNXRuntime within the Docker container, load the ONNX model, and perform model inferences efficiently. We will explore the necessary coding techniques and configurations required to execute accurate predictions using the ONNX model.

Conclusion: In this section, we have explored the process of implementing model inference using the ONNXRuntime library within a Docker container. By leveraging ONNXRuntime's high-performance capabilities and creating a tailored Docker environment, we can efficiently execute machine learning model inferences in a Linux-based deployment. This implementation approach enables us to harness the benefits of both Docker and ONNXRuntime, facilitating seamless integration and optimized inference execution.
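
The load-then-run flow described in this section can be sketched with ONNX Runtime's Python API; the .NET package exposes a comparable `InferenceSession` class, so the overall shape carries over. This is a minimal sketch, assuming a model with a single float32 input and the `onnxruntime` and `numpy` packages installed in the image. The function name `run_inference` and the `threads` parameter are invented for this example.

```python
from pathlib import Path


def run_inference(model_path: str, rows: list, threads: int = 1) -> list:
    """Run one batch through an ONNX model and return the first output as lists."""
    path = Path(model_path)
    if not path.is_file():
        raise FileNotFoundError(f"ONNX model not found: {path}")

    # Imported lazily so the path check above works without these packages.
    import numpy as np
    import onnxruntime as ort

    options = ort.SessionOptions()
    # Keep the thread count in line with the CPU limit given to the container.
    options.intra_op_num_threads = threads
    options.graph_optimization_level = ort.GraphOptimizationLevel.ORT_ENABLE_ALL

    session = ort.InferenceSession(
        str(path), sess_options=options, providers=["CPUExecutionProvider"]
    )
    input_name = session.get_inputs()[0].name
    batch = np.asarray(rows, dtype=np.float32)
    outputs = session.run(None, {input_name: batch})  # None requests all outputs
    return outputs[0].tolist()
```

With a model file such as a hypothetical `model.onnx` that accepts rows of three floats, `run_inference("model.onnx", [[1.0, 2.0, 3.0]])` would return the model's first output for that single row. Creating the session once and reusing it across requests is generally preferable to rebuilding it per call, since loading and optimizing the graph is the expensive part.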

FAQ

Can I add the onnxruntime library to a Docker .NET Core container for Linux?

Yes, you can add the onnxruntime library to a Docker .NET Core container for Linux by declaring it as a NuGet package dependency in your project.

What are the benefits of using the onnxruntime library in a Docker container?

The onnxruntime library enables you to run ONNX models efficiently in a Docker container. It provides high-performance inference and can execute models exported from various machine learning frameworks.

How can I add the onnxruntime library as a dependency in my .NET Core project?

To add the onnxruntime library as a dependency in your .NET Core project, you can use the NuGet package manager. Search for the Microsoft.ML.OnnxRuntime package and install it into your project, or run dotnet add package Microsoft.ML.OnnxRuntime from the project directory.

Are there any specific considerations or steps to follow when adding the onnxruntime library to a Docker container for Linux?

Yes, there are a few considerations to keep in mind. Firstly, make sure that the Docker image contains any system-level dependencies required by the onnxruntime native library; the prebuilt Linux binaries are built against glibc, so musl-based images such as Alpine may need extra work. Additionally, pinning the exact version of the library in your project file or Dockerfile keeps image builds reproducible.

Is it possible to use the onnxruntime library in a Docker container for Windows as well?

Yes, you can use the onnxruntime library in a Docker container for both Linux and Windows. However, there may be slight differences in the specific steps required to add the library to your Docker image, depending on the operating system.

What is the purpose of adding the onnxruntime library to a .NET Core container for Linux?

The purpose of adding the onnxruntime library to a .NET Core container for Linux is to enable inference and execution of ONNX models in a .NET Core application running on a Linux environment.

Can the onnxruntime library be added to a .NET Core container for Linux?

Yes, the onnxruntime library can be added to a .NET Core container for Linux. It allows for seamless integration of ONNX models into .NET Core applications running on Linux environments.